Abstract

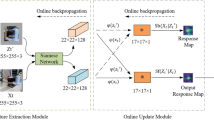

Despite considerable progress in visual object tracking, tracking in low-light conditions remains a challenge. Prior nighttime tracking methods suffer from either weak collaboration between cascaded structures or a lack of pseudo supervision, and thus fail to deliver satisfactory results. In this paper, we develop a novel unsupervised domain adaptation framework for nighttime tracking. Specifically, we build on the pseudo supervision established by the mean teacher network and extend it with three components at the input level and the optimization level. For the unlabeled target domain dataset, we first present an assignment-based object discovery strategy to generate suitable training patches. Additionally, a low-light enhancer is embedded to improve the pseudo labels, which facilitates the subsequent consistency learning. Finally, with the aid of better training data and pseudo labels, we replace the common mean squared error with two stricter losses, an entropy-decreasing classification consistency loss and a confidence-weighted regression consistency loss, for better convergence. Experiments demonstrate that the proposed method achieves significant performance gains on multiple nighttime tracking benchmarks and even brings a slight improvement on the source domain.
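The abstract names a mean teacher update and two consistency losses but does not give their formulas. The following is a minimal sketch of how such components are commonly built, not the formulation used in the paper. All function names, the `decay` and `temperature` parameters, the use of temperature sharpening as the entropy-reducing step, and the L1 box distance are assumptions made for illustration.

```python
import math


def ema_update(teacher, student, decay=0.99):
    """Mean teacher step: teacher weights track an exponential moving
    average of the student weights (weights held in a dict of floats)."""
    return {name: decay * teacher[name] + (1.0 - decay) * student[name]
            for name in teacher}


def softmax(logits, temperature=1.0):
    """Numerically stable softmax; temperature < 1 sharpens the output."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def entropy(probs):
    """Shannon entropy in nats."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def cls_consistency(student_logits, teacher_logits, temperature=0.5):
    """Cross-entropy between the student prediction and a sharpened
    (lower-entropy) teacher prediction. Sharpening is an assumed stand-in
    for the 'entropy-decreasing' idea in the abstract."""
    target = softmax(teacher_logits, temperature)
    pred = softmax(student_logits)
    return -sum(t * math.log(p + 1e-12) for t, p in zip(target, pred))


def reg_consistency(student_boxes, teacher_boxes, teacher_conf):
    """L1 distance between student and teacher boxes, averaged with
    weights proportional to the teacher's confidence at each location."""
    total_conf = sum(teacher_conf) + 1e-12
    loss = 0.0
    for s_box, t_box, conf in zip(student_boxes, teacher_boxes, teacher_conf):
        l1 = sum(abs(a - b) for a, b in zip(s_box, t_box))
        loss += (conf / total_conf) * l1
    return loss
```

In this sketch, locations where the teacher is unsure contribute little to the regression term, and the sharpened target pushes the student toward more decisive classification than a plain mean squared error between the two outputs would.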

Supported by the National Natural Science Foundation of China (62233005, 62293502), the Program of Shanghai Academic Research Leader under Grant 20XD1401300, the Sino-German Center for Research Promotion (Grant M-0066), and the Fundamental Research Funds for the Central Universities (222202317006).

Copyright information

© 2024 The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Chen, J., Sun, Q., Zhao, C., Ren, W., Tang, Y. (2024). Rethinking Unsupervised Domain Adaptation for Nighttime Tracking. In: Luo, B., Cheng, L., Wu, ZG., Li, H., Li, C. (eds) Neural Information Processing. ICONIP 2023. Communications in Computer and Information Science, vol 1968. Springer, Singapore. https://doi.org/10.1007/978-981-99-8181-6_30

DOI: https://doi.org/10.1007/978-981-99-8181-6_30

Publisher Name: Springer, Singapore

Print ISBN: 978-981-99-8180-9

Online ISBN: 978-981-99-8181-6

eBook Packages: Computer Science, Computer Science (R0)