Abstract

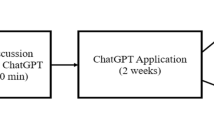

The recent rise in both the popularity and the performance of large language models has generated considerable interest in their applicability to education. Technologies such as ChatGPT, which can engage in human-like dialog, have already disrupted educational practices because they can answer a wide array of questions. Nevertheless, integrating these technologies into learning contexts poses both technological and pedagogical challenges, such as providing appropriate user interfaces and configuring interactions so that conversations stay on topic. To better understand the potential of large language models to power educational chatbots, we propose an architecture for building such chatbots on top of these models. Using this architecture, we created a chatbot interface and integrated it into a web application designed to teach software engineering best practices. We then used the application to conduct a case study comprising a controlled experiment with 26 university software engineering students. Half of the students interacted with a version of the application equipped with the chatbot, while the other half completed the same lesson without it. Although our quantitative analysis did not identify significant differences between conditions, qualitative insights suggest that learners appreciated the chatbot. These results could serve as a starting point for optimizing strategies to integrate large language models into pedagogical scenarios.

Notes

1. Note that placeholders are presented between angle brackets (<>).

Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Farah, J.C., Ingram, S., Spaenlehauer, B., Lasne, F.KL., Gillet, D. (2023). Prompting Large Language Models to Power Educational Chatbots. In: Xie, H., Lai, CL., Chen, W., Xu, G., Popescu, E. (eds) Advances in Web-Based Learning – ICWL 2023. ICWL 2023. Lecture Notes in Computer Science, vol 14409. Springer, Singapore. https://doi.org/10.1007/978-981-99-8385-8_14

DOI: https://doi.org/10.1007/978-981-99-8385-8_14

Publisher Name: Springer, Singapore

Print ISBN: 978-981-99-8384-1

Online ISBN: 978-981-99-8385-8

eBook Packages: Computer Science, Computer Science (R0)