Abstract

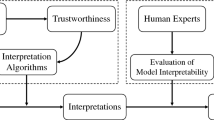

Convolutional neural network (CNN) models have achieved substantial performance improvements in various domains, but their lack of interpretability remains a major barrier to the assurance and regulation needed for the acceptance and deployment of AI-assisted applications. Many works on interpretability focus on analyzing input-output relations, but current mainstream interpretability methods do not clarify the internal logic of models. In this study, we propose a novel hybrid CNN-interpreter consisting of: (1) an original forward propagation mechanism that examines layer-specific prediction results for local interpretability; and (2) a new global interpretability method that indicates feature correlation and filter importance effects. By combining local and global interpretability, the hybrid CNN-interpreter provides a solid understanding of the model context, and supports monitoring it, throughout the whole learning process with detailed and consistent representations. Finally, the proposed interpretability methods are demonstrated to adapt to various CNN-based model structures.

Copyright information

© 2024 The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Yang, W., Huang, G., Li, R., Yu, J., Chen, Y., Bai, Q. (2024). Hybrid CNN-Interpreter: Interprete Local and Global Contexts for CNN-Based Models. In: Liu, T., Webb, G., Yue, L., Wang, D. (eds) AI 2023: Advances in Artificial Intelligence. AI 2023. Lecture Notes in Computer Science, vol 14472. Springer, Singapore. https://doi.org/10.1007/978-981-99-8391-9_16

DOI: https://doi.org/10.1007/978-981-99-8391-9_16

Publisher Name: Springer, Singapore

Print ISBN: 978-981-99-8390-2

Online ISBN: 978-981-99-8391-9

eBook Packages: Computer Science (R0)