Abstract

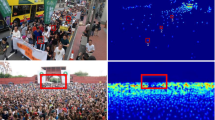

Although crowd counting has advanced considerably, accurately estimating counts in high-density areas and fully suppressing background noise remain challenging. To address these issues, we propose the Double Attention Refinement Guided Counting Network (DARN). DARN introduces an attention-guided feature aggregation module that dynamically fuses features extracted from the Transformer backbone. By adaptively fusing features at different scales, this module recovers lost fine-grained information and thereby improves count estimation in high-density areas. Additionally, we propose a multi-stage, segmentation attention-guided refinement method. In this refinement process, segmentation attention maps are applied as masks to filter out background noise, which substantially refines the foreground features. The multiple stages further refine the features by exploiting both fine-grained and global information. Extensive experiments were conducted on four challenging crowd counting datasets: ShanghaiTech A, UCF-QNRF, JHU-CROWD++, and NWPU-Crowd. The experimental results validate the effectiveness of the proposed method.
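The two ideas named in the abstract, attention-weighted fusion of multi-scale features and masking with a segmentation attention map, can be illustrated with a toy sketch. This is not the DARN implementation: the function names (`fuse_scales`, `refine`, `multi_stage_refine`), the per-pixel softmax over scales, the sigmoid mask, and the single-channel grids are all assumptions made for illustration, and the real model operates on learned Transformer features whose details are not given here.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def fuse_scales(feature_maps, scale_scores):
    """Fuse same-sized 2D maps with per-pixel attention over scales (assumed scheme).

    feature_maps: list of S grids (H x W), one per scale, already resized.
    scale_scores: list of S grids (H x W) of unnormalised attention logits.
    """
    h, w = len(feature_maps[0]), len(feature_maps[0][0])
    fused = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            weights = softmax([scores[i][j] for scores in scale_scores])
            fused[i][j] = sum(wt * fm[i][j] for wt, fm in zip(weights, feature_maps))
    return fused


def refine(features, seg_logits):
    """Suppress background by multiplying features with a soft foreground mask."""
    return [
        [f * sigmoid(s) for f, s in zip(f_row, s_row)]
        for f_row, s_row in zip(features, seg_logits)
    ]


def multi_stage_refine(features, seg_logits_per_stage):
    """Apply the masking step once per stage (a simplification of multi-stage refinement)."""
    for seg_logits in seg_logits_per_stage:
        features = refine(features, seg_logits)
    return features


# Toy inputs: a fine-scale map, a coarse-scale map, and equal attention logits.
fine = [[1.0, 2.0], [3.0, 4.0]]
coarse = [[0.0, 0.0], [0.0, 0.0]]
equal_scores = [[[0.0, 0.0], [0.0, 0.0]], [[0.0, 0.0], [0.0, 0.0]]]
fused = fuse_scales([fine, coarse], equal_scores)

# Foreground on the diagonal (large positive logit), background elsewhere.
seg_logits = [[20.0, -20.0], [-20.0, 20.0]]
refined = multi_stage_refine(fused, [seg_logits])
estimated_count = sum(sum(row) for row in refined)
```

In this sketch the predicted count is simply the sum over the refined map, mirroring how density-map methods integrate a density map to obtain a count; whether DARN regresses its count in exactly this way is not stated in the abstract.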

Supported by the National Natural Science Foundation of China (61972059, 62376041, 42071438, 62102347), the China Postdoctoral Science Foundation (2021M69236), and the Key Laboratory of Symbolic Computation and Knowledge Engineering of Ministry of Education, Jilin University (93K172021K01).

© 2024 The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Chang, S., Zhong, S., Zhou, L., Zhou, X., Gong, S. (2024). DARN: Crowd Counting Network Guided by Double Attention Refinement. In: Liu, Q., et al. Pattern Recognition and Computer Vision. PRCV 2023. Lecture Notes in Computer Science, vol 14434. Springer, Singapore. https://doi.org/10.1007/978-981-99-8549-4_37

DOI: https://doi.org/10.1007/978-981-99-8549-4_37

Publisher Name: Springer, Singapore

Print ISBN: 978-981-99-8548-7

Online ISBN: 978-981-99-8549-4

eBook Packages: Computer Science, Computer Science (R0)