Abstract

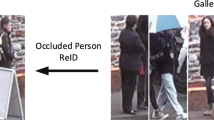

Occluded person re-identification (ReID) is a challenging computer vision task in which the goal is to identify specific pedestrians in occluded scenes across different devices. Existing methods mainly focus on developing effective data augmentation and representation learning techniques to improve the performance of person ReID systems. However, existing data augmentation strategies cannot make full use of the information in the training data to accurately simulate occlusion scenarios, which results in poor generalization. In addition, recent Vision Transformer (ViT)-based methods have proven beneficial for occluded person ReID because of their powerful representation learning ability, but they typically overlook information fusion between features at different levels. To alleviate these two issues, an improved ViT-based framework called Parallel Dense Vision Transformer and Augmentation Network (PDANet) is proposed to extract robust, high-quality features. We first design a parallel data augmentation strategy based on random stripe erasure, which enriches the diversity of input samples through various processing methods so that they better cover real scenes, and which improves the generalization ability of the model by learning the relationships between these different samples. We then develop a Densely Connected Vision Transformer (DCViT) module for feature encoding, which strengthens feature propagation and improves the effectiveness of learning by establishing connections between different layers. Experimental results demonstrate that the proposed method outperforms existing methods on both occluded and holistic person ReID benchmarks.
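To make the idea of stripe-based erasure concrete, the following is a minimal NumPy sketch of what such an augmentation could look like. It is not the authors' implementation: the function names `random_stripe_erase` and `parallel_augment`, the `max_fraction` and `fill` parameters, and the choice of one horizontal and one vertical stripe per sample are all assumptions made for illustration, since the abstract does not specify these details.

```python
import numpy as np


def random_stripe_erase(image, rng, max_fraction=0.3, horizontal=True, fill=0):
    """Erase one randomly placed stripe that spans the full width (or height).

    Hypothetical helper: the stripe size and fill value are assumptions,
    not values taken from the paper.
    """
    out = image.copy()
    length = out.shape[0] if horizontal else out.shape[1]
    # Stripe thickness: at least 1 pixel, at most max_fraction of the side.
    max_thickness = max(1, int(length * max_fraction))
    thickness = int(rng.integers(1, max_thickness + 1))
    start = int(rng.integers(0, length - thickness + 1))
    if horizontal:
        out[start:start + thickness, :] = fill
    else:
        out[:, start:start + thickness] = fill
    return out


def parallel_augment(image, rng):
    """Return the original image alongside two stripe-erased views.

    Hypothetical helper: producing several views of one sample in parallel
    is one reading of "parallel data augmentation" in the abstract.
    """
    return [
        image,
        random_stripe_erase(image, rng, horizontal=True),
        random_stripe_erase(image, rng, horizontal=False),
    ]


rng = np.random.default_rng(0)
image = np.ones((256, 128, 3), dtype=np.uint8)  # dummy pedestrian crop
views = parallel_augment(image, rng)
```

Each call yields the untouched sample plus two occluded variants of the same identity, so a model trained on all three could be encouraged to produce consistent features across them. How the relationship between the views is actually learned is not described in the abstract and is left out of this sketch.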

Acknowledgement

This work was supported in part by the National Key Research and Development Program of China (Grant No. 2021ZD0112400), the National Natural Science Foundation of China (Grant No. U1908214), the Program for Innovative Research Team in University of Liaoning Province (Grant No. LT2020015), the Support Plan for Key Field Innovation Team of Dalian (2021RT06), XXXXX, the Program for the Liaoning Province Doctoral Research Starting Fund (Grant No. 2022-BS-336), the Key Laboratory of Advanced Design and Intelligent Computing (Dalian University), Ministry of Education (Grant No. ADIC2022003), and the Interdisciplinary Project of Dalian University (Grant No. DLUXK-2023-QN-015).

Copyright information

© 2024 The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Yang, C., Fan, W., Wei, Z., Yang, X., Zhang, Q., Zhou, D. (2024). Parallel Dense Vision Transformer and Augmentation Network for Occluded Person Re-identification. In: Hu, SM., Cai, Y., Rosin, P. (eds) Computer-Aided Design and Computer Graphics. CADGraphics 2023. Lecture Notes in Computer Science, vol 14250. Springer, Singapore. https://doi.org/10.1007/978-981-99-9666-7_10

DOI: https://doi.org/10.1007/978-981-99-9666-7_10

Publisher Name: Springer, Singapore

Print ISBN: 978-981-99-9665-0

Online ISBN: 978-981-99-9666-7

eBook Packages: Computer Science, Computer Science (R0)