Abstract

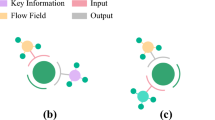

Deep learning-based approaches to three-dimensional (3D) grid understanding and processing have been studied extensively in recent years. Despite their success in various scenarios, existing approaches fail to make effective use of the velocity information in the flow field, so the extracted features struggle to meet the practical requirements of post-processing tasks. To fully integrate the structural information of the 3D grid with the velocity information, this paper constructs a flow-field-aware network (FFANet) for 3D grid classification and segmentation. The main contributions are: (i) a multi-scale feature learning network built on the self-attention mechanism, which learns the distribution features of the velocity field and the structural features at different scales of the 3D flow field grid, respectively, to generate a more discriminative global feature; (ii) a fine-grained semantic learning network based on a co-attention mechanism, which adaptively learns the weight matrix between these two features to make more effective semantic use of the global feature; (iii) two downstream tasks designed according to the practical requirements of post-processing in numerical simulation: 1) surface grid identification and 2) feature edge extraction. Experimental results show that FFANet compares favourably with existing 3D mesh data analysis approaches in accuracy (Acc) and intersection-over-union (IoU).
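For readers unfamiliar with the two reported metrics, the following is a minimal sketch of how Acc and IoU are commonly computed from per-element labels. It uses the standard textbook definitions and is not taken from the paper; the function names, the label encoding (1 for a surface element, 0 otherwise), and the choice to average IoU over classes are assumptions made for illustration.

```python
from typing import Sequence


def accuracy(pred: Sequence[int], target: Sequence[int]) -> float:
    """Fraction of elements whose predicted label equals the ground truth."""
    if len(pred) != len(target) or len(target) == 0:
        raise ValueError("pred and target must be non-empty and equally long")
    correct = sum(p == t for p, t in zip(pred, target))
    return correct / len(target)


def mean_iou(pred: Sequence[int], target: Sequence[int], num_classes: int) -> float:
    """Intersection-over-union per class, averaged over the classes present."""
    if len(pred) != len(target) or len(target) == 0:
        raise ValueError("pred and target must be non-empty and equally long")
    ious = []
    for c in range(num_classes):
        inter = sum(p == c and t == c for p, t in zip(pred, target))
        union = sum(p == c or t == c for p, t in zip(pred, target))
        if union:  # skip classes that appear in neither pred nor target
            ious.append(inter / union)
    if not ious:
        raise ValueError("no label falls inside range(num_classes)")
    return sum(ious) / len(ious)


# Hypothetical labels for six grid elements (1 = surface, 0 = other).
pred = [0, 0, 1, 1, 1, 0]
target = [0, 1, 1, 1, 0, 0]
print(accuracy(pred, target))     # 4 of 6 labels match
print(mean_iou(pred, target, 2))  # each class: intersection 2, union 4
```

The paper may aggregate IoU differently (for example per shape or per class), so this sketch only illustrates the definitions and does not reproduce the authors' evaluation protocol.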

This work is supported by the National Numerical Wind Tunnel project.

Copyright information

© 2024 The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Deng, J., Xing, D., Chen, C., Han, Y., Chen, J. (2024). FFANet: Dual Attention-Based Flow Field Aware Network for 3D Grid Classification and Segmentation. In: Hu, SM., Cai, Y., Rosin, P. (eds) Computer-Aided Design and Computer Graphics. CADGraphics 2023. Lecture Notes in Computer Science, vol 14250. Springer, Singapore. https://doi.org/10.1007/978-981-99-9666-7_3

DOI: https://doi.org/10.1007/978-981-99-9666-7_3

Publisher Name: Springer, Singapore

Print ISBN: 978-981-99-9665-0

Online ISBN: 978-981-99-9666-7

eBook Packages: Computer Science, Computer Science (R0)