Abstract

There are a variety of methods in the literature which seek to make iterative estimation algorithms more manageable by breaking the iterations into a greater number of simpler or faster steps. Those algorithms which deal at each step with a proper subset of the parameters are called in this paper partitioned algorithms. Partitioned algorithms in effect replace the original estimation problem with a series of problems of lower dimension. The purpose of the paper is to characterize some of the circumstances under which this process of dimension reduction leads to significant benefits.

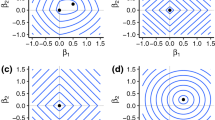

Four types of partitioned algorithms are distinguished: reduced objective function methods, nested (partial Gauss-Seidel) iterations, zigzag (full Gauss-Seidel) iterations, and leapfrog (non-simultaneous) iterations. Emphasis is given to Newton-type methods using analytic derivatives, but a nested EM algorithm is also given. Nested Newton methods are shown to be equivalent to applying the same Newton method to the reduced objective function, and are applied to separable regression and generalized linear models. Nesting is shown generally to improve the convergence of Newton-type methods, both by improving the quadratic approximation to the log-likelihood and by improving the accuracy with which the observed information matrix can be approximated. Nesting is recommended whenever a subset of parameters is relatively easily estimated. The zigzag method is shown to produce a stable but generally slow iteration; it is fast and recommended when the parameter subsets have approximately uncorrelated estimates. The leapfrog iteration has less guaranteed properties in general, but is similar to nesting and zigzagging when the parameter subsets are orthogonal.
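To make the distinction between a reduced objective function method and a zigzag iteration concrete, the following is a minimal sketch on a toy separable regression, y = b·exp(−a·x), where b is linear and therefore easy to estimate for any fixed a. It is an illustration under stated assumptions, not the paper's implementation: the data, the starting value, the search interval, and all function names (`sse`, `b_hat`, `golden_min`, `fit_reduced`, `fit_zigzag`) are hypothetical, and a derivative-free golden-section search is swapped in for the Newton-type steps that the paper emphasizes.

```python
import math

# Hypothetical noiseless toy data from y = b * exp(-a * x) with a = 0.5, b = 2.
x = [0.0, 1.0, 2.0, 3.0, 4.0]
A_TRUE, B_TRUE = 0.5, 2.0
y = [B_TRUE * math.exp(-A_TRUE * xi) for xi in x]

def sse(a, b):
    """Full objective: residual sum of squares in both parameters."""
    return sum((yi - b * math.exp(-a * xi)) ** 2 for xi, yi in zip(x, y))

def b_hat(a):
    """Closed-form least squares estimate of the linear parameter b for fixed a."""
    e = [math.exp(-a * xi) for xi in x]
    return sum(yi * ei for yi, ei in zip(y, e)) / sum(ei * ei for ei in e)

def golden_min(f, lo, hi, tol=1e-10):
    """Golden-section search for the minimizer of a unimodal f on [lo, hi].

    Assumption: used here in place of a Newton-type step, for simplicity.
    """
    g = (math.sqrt(5) - 1) / 2
    while hi - lo > tol:
        c, d = hi - g * (hi - lo), lo + g * (hi - lo)
        if f(c) < f(d):
            hi = d
        else:
            lo = c
    return (lo + hi) / 2

def fit_reduced():
    """Reduced objective: substitute b_hat(a) and minimize over a alone."""
    a = golden_min(lambda t: sse(t, b_hat(t)), 0.01, 3.0)
    return a, b_hat(a)

def fit_zigzag(a=1.0, max_sweeps=200, tol=1e-8):
    """Zigzag: alternate full minimization over b (a fixed) and a (b fixed)."""
    for sweep in range(1, max_sweeps + 1):
        b = b_hat(a)                                   # b-step, a held fixed
        a_new = golden_min(lambda t: sse(t, b), 0.01, 3.0)  # a-step, b held fixed
        if abs(a_new - a) < tol:
            return a_new, b_hat(a_new), sweep
        a = a_new
    return a, b_hat(a), max_sweeps

a_red, b_red = fit_reduced()
a_zig, b_zig, sweeps = fit_zigzag()
print("reduced objective:", a_red, b_red)
print("zigzag:", a_zig, b_zig, "sweeps:", sweeps)
```

In this sketch the reduced objective needs a single one-dimensional search because b is eliminated at every trial value of a, whereas the zigzag iteration repeats its pair of one-dimensional problems over several sweeps until the two estimates stop moving.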

Cite this article

Smyth, G.K. Partitioned algorithms for maximum likelihood and other non-linear estimation. Stat Comput 6, 201–216 (1996). https://doi.org/10.1007/BF00140865