Abstract

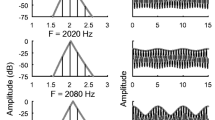

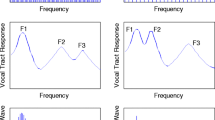

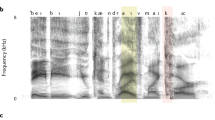

We propose a new model for speaker-independent vowel recognition that exploits the flexibility of the dynamic linking produced by the synchronization of oscillating neural units. The system consists of an input layer and three neural layers, referred to as the A-, B- and C-centers. The input signals are a time series of linear prediction (LPC) spectrum envelopes of auditory signals. At each time window in the series, the A-center receives the input signals, extracts local peaks of the spectrum envelope, i.e., formants, and encodes them into local groups of independent oscillations. Speaker-independent vowel characteristics are embedded as a connection matrix in the B-center according to statistical data on Japanese vowels. The associative interaction within the B-center and the reciprocal interaction between the A- and B-centers selectively activate a vowel as a globally synchronized pattern over the two centers. The C-center evaluates the synchronized activity among the three formant regions and gives a selective output of one category among the five Japanese vowels. Thus, flexible dynamic linking among features is achieved over the three centers. The capability of the present system was investigated for speaker-independent recognition of Japanese vowels. The system demonstrated a remarkable vowel-recognition ability, very similar to that of human listeners, including for misleading vowels. In addition, it showed stable recognition for unsteady input signals and robustness against background noise. The optimum oscillation frequency is discussed in comparison with the stimulus-dependent synchronization observed in neurophysiological experiments on the cortex.
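Two ingredients named in the abstract can be illustrated in a deliberately simplified form: picking formant candidates as local peaks of a spectrum envelope, and linking features by synchronizing oscillators. The sketch below is not the authors' A/B/C-center model. It assumes a synthetic envelope built from Gaussian bumps instead of a real LPC envelope, and it substitutes generic Kuramoto phase oscillators for the paper's neural oscillator units, using the time-averaged Kuramoto order parameter r as a stand-in for the C-center's evaluation of synchrony. The function names (`pick_formants`, `mean_order_parameter`), the bump positions, the 38/40/42 Hz natural frequencies and the coupling strength are hypothetical choices for illustration, not values from the paper.

```python
import math

# Hypothetical helpers; a simplified illustration, NOT the paper's A/B/C-center model.

def pick_formants(envelope, freqs_hz, max_peaks=3):
    """Return the frequencies of the strongest local maxima, lowest first (F1, F2, F3)."""
    peaks = []
    for i in range(1, len(envelope) - 1):
        if envelope[i] > envelope[i - 1] and envelope[i] >= envelope[i + 1]:
            peaks.append((envelope[i], freqs_hz[i]))
    strongest = sorted(peaks, reverse=True)[:max_peaks]  # largest amplitudes first
    return sorted(freq for _, freq in strongest)


def mean_order_parameter(omegas, k, dt=0.001, steps=5000, avg_steps=1000):
    """Euler-integrate all-to-all Kuramoto phase oscillators (a swapped-in model),
    d(theta_i)/dt = omega_i + (k/n) * sum_j sin(theta_j - theta_i),
    and return the order parameter r averaged over the last avg_steps steps."""
    n = len(omegas)
    theta = [0.5 * i for i in range(n)]  # fixed, spread-out initial phases
    r_sum = 0.0
    for step in range(steps):
        dtheta = [
            omegas[i] + (k / n) * sum(math.sin(theta[j] - theta[i]) for j in range(n))
            for i in range(n)
        ]
        theta = [t + dt * d for t, d in zip(theta, dtheta)]
        if step >= steps - avg_steps:
            c = sum(math.cos(t) for t in theta) / n
            s = sum(math.sin(t) for t in theta) / n
            r_sum += math.hypot(c, s)  # r = 1 means perfect phase coherence
    return r_sum / avg_steps


# Toy spectrum envelope: Gaussian bumps standing in for formant peaks (made-up values).
freqs = list(range(0, 4001, 10))
bumps = [(700, 1.0), (1200, 0.8), (2600, 0.5), (3500, 0.3)]
envelope = [sum(a * math.exp(-0.5 * ((f - c) / 80.0) ** 2) for c, a in bumps) for f in freqs]
formants = pick_formants(envelope, freqs)

# One oscillator per formant region, natural frequencies near 40 Hz (illustrative only).
omegas = [2 * math.pi * f for f in (38.0, 40.0, 42.0)]
r_uncoupled = mean_order_parameter(omegas, k=0.0)
r_coupled = mean_order_parameter(omegas, k=100.0)
print(formants, round(r_uncoupled, 2), round(r_coupled, 2))
```

The weakest of the four bumps is discarded by `max_peaks=3`, mirroring the abstract's restriction to three formant regions. With strong coupling the three oscillators lock and the mean r approaches 1, while the uncoupled run yields a clearly smaller value; in this toy setting, "linking" a set of features simply means that their oscillators phase-lock.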




Cite this article

Liu, F., Yamaguchi, Y. & Shimizu, H. Flexible vowel recognition by the generation of dynamic coherence in oscillator neural networks: speaker-independent vowel recognition. Biol. Cybern. 71, 105–114 (1994). https://doi.org/10.1007/BF00197313


DOI: https://doi.org/10.1007/BF00197313