Abstract

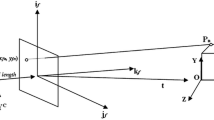

This paper addresses stereo-camera-based estimation of sensor translation in the presence of modest sensor rotation and moving objects, as well as the estimation of object translation from a moving sensor. The approach is based on Gabor filters, direct passive navigation, and Kalman filters.

Three difficult problems associated with the estimation of motion from an image sequence are solved. (1) The temporal correspondence problem is solved using multi-scale prediction and phase gradients. (2) Segmentation of the image measurements into groups belonging to stationary and moving objects is achieved using the “Mahalanobis distance.” (3) Compensation for sensor rotation is achieved by internally representing the inter-frame (short-term) rotation in a rigid-body model. These three solutions possess a circular dependency, forming a “cycle of perception.” A “seeding” process is developed to correctly initialize the cycle. An additional complication is the translation-rotation ambiguity that sometimes exists when sensor motion is estimated from an image velocity field. Temporal averaging using Kalman filters reduces the effect of motion ambiguities. Experimental results from real image sequences confirm the utility of the approach.
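As a rough illustration of how a Mahalanobis distance test and Kalman-filter temporal averaging can work together, the sketch below gates each new translation measurement against the current estimate before averaging it in. It is not the paper's implementation: the constant-state model, the identity measurement matrix, the noise covariances, and the function names `mahalanobis_sq` and `kalman_gate_update` are all assumptions made for the example, and the gate value 7.815 is simply the 95% chi-square quantile for three degrees of freedom.

```python
import numpy as np


def mahalanobis_sq(residual, cov):
    """Squared Mahalanobis distance of a residual under covariance `cov`."""
    return float(residual @ np.linalg.solve(cov, residual))


def kalman_gate_update(x, P, z, R, Q, gate):
    """One step of a constant-state Kalman filter with a Mahalanobis gate.

    x, P : prior estimate of the translation vector and its covariance
    z, R : new measurement of the translation and its covariance
    Q    : process noise added between frames (assumed value)
    gate : threshold on the squared Mahalanobis distance (assumed value)
    Returns (x, P, accepted).
    """
    P = P + Q                    # predict: state assumed constant, uncertainty grows
    S = P + R                    # innovation covariance (measurement matrix = identity)
    nu = z - x                   # innovation
    if mahalanobis_sq(nu, S) > gate:
        # Inconsistent with the current motion estimate: do not average it in.
        return x, P, False
    K = P @ np.linalg.inv(S)     # Kalman gain
    x = x + K @ nu
    P = (np.eye(len(x)) - K) @ P
    return x, P, True


if __name__ == "__main__":
    x = np.zeros(3)
    P = np.eye(3)
    Q = 0.01 * np.eye(3)
    R = 0.25 * np.eye(3)
    gate = 7.815  # 95% chi-square quantile, 3 degrees of freedom

    # Hypothetical measurements: two consistent ones, then an outlier.
    for z in ([0.5, 0.0, 0.0], [0.4, 0.1, 0.0], [10.0, 0.0, 0.0]):
        x, P, accepted = kalman_gate_update(x, P, np.array(z), R, Q, gate)
        print(accepted, np.round(x, 3))
```

Measurements that fail the gate leave the estimate unchanged, so a measurement from an independently moving object would not corrupt an estimate of sensor translation; in a fuller system such rejected measurements could seed a separate filter for the object.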

Additional information

Financial support from the Natural Sciences and Engineering Research Council (NSERC) of Canada is acknowledged.

About this article

Cite this article

Braithwaite, R.N., Beddoes, M.P. Estimating camera and object translation in the presence of camera rotation. J Math Imaging Vis 5, 43–57 (1995). https://doi.org/10.1007/BF01250252

DOI: https://doi.org/10.1007/BF01250252