Abstract

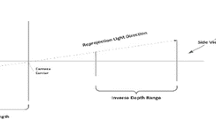

Due to the aperture problem, the only motion measurement in images, whose computation does not require any assumptions about the scene in view, is normal flow—the projection of image motion on the gradient direction. In this paper we show how a monocular observer can estimate its 3D motion relative to the scene by using normal flow measurements in a global and qualitative way. The problem is addressed through a search technique. By checking constraints imposed by 3D motion parameters on the normal flow field, the possible space of solutions is gradually reduced. In the four modules that comprise the solution, constraints of increasing restriction are considered, culminating in testing every single normal flow value for its consistency with a set of motion parameters. The fact that motion is rigid defines geometric relations between certain values of the normal flow field. The selected values form patterns in the image plane that are dependent on only some of the motion parameters. These patterns, which are determined by the signs of the normal flow values, are searched for in order to find the axes of translation and rotation. The third rotational component is computed from normal flow vectors that are only due to rotational motion. Finally, by looking at the complete data set, all solutions that cannot give rise to the given normal flow field are discarded from the solution space.
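To make the definition of normal flow concrete, here is a minimal sketch of how it can be measured from two consecutive frames. It is not the authors' implementation. It assumes the standard brightness constancy constraint and simple finite-difference derivatives, and the function name `normal_flow` and the threshold `min_grad` are hypothetical names chosen for illustration.

```python
import numpy as np

def normal_flow(frame0, frame1, min_grad=1e-3):
    """Estimate normal flow between two grayscale frames.

    Returns (magnitude, nx, ny, valid): the signed flow component along the
    unit gradient direction (nx, ny), and a mask of pixels whose gradient is
    strong enough for the measurement to be meaningful.
    """
    f0 = np.asarray(frame0, dtype=float)
    f1 = np.asarray(frame1, dtype=float)

    # Spatial derivatives of the averaged frame; np.gradient returns the
    # derivative along rows (y) first, then along columns (x).
    iy, ix = np.gradient(0.5 * (f0 + f1))
    # Temporal derivative as a simple frame difference (assumed unit time step).
    it = f1 - f0

    grad = np.hypot(ix, iy)
    valid = grad > min_grad
    safe = np.where(valid, grad, 1.0)  # avoid division by zero

    # Brightness constancy: ix*u + iy*v + it = 0, so the projection of the
    # image motion (u, v) on the gradient direction is -it / |grad|.
    magnitude = np.where(valid, -it / safe, 0.0)
    nx = np.where(valid, ix / safe, 0.0)
    ny = np.where(valid, iy / safe, 0.0)
    return magnitude, nx, ny, valid
```

Only the sign and value of `magnitude` along `(nx, ny)` are available at each pixel; the component of image motion perpendicular to the gradient is left undetermined, which is the aperture problem the abstract refers to.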

Additional information

Research supported in part by NSF (Grant IRI-90-57934), ONR (Contract N00014-93-1-0257) and ARPA (Order No. 8459).

About this article

Cite this article

Fermüller, C., Aloimonos, Y. Qualitative egomotion. Int J Comput Vision 15, 7–29 (1995). https://doi.org/10.1007/BF01450848

DOI: https://doi.org/10.1007/BF01450848