Abstract

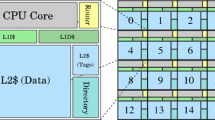

Cache coherency in a scalable parallel computer architecture requires mechanisms beyond the conventional common-bus-based snooping approaches, which are limited to about 16 processors. The new Convex SPP-1000 achieves cache coherency across 128 processors through a two-level shared memory NUMA structure employing directory-based and SCI protocol mechanisms. While hardware support for managing a common global name space minimizes overhead costs and simplifies programming, latency considerations for remote accesses may still dominate and can, under unfavorable conditions, constrain scalability. This paper provides the first published evaluation of the SPP-1000 hierarchical cache coherency mechanisms from the perspective of measured latency and its impact on basic global flow control mechanisms, scaling of a parallel science code, and sensitivity of cache miss rates to system scale. It is shown that global remote access latency is only a factor of seven greater than the local cache miss penalty, and that scaling of a challenging scientific application is not severely degraded by the hierarchical structure used to achieve consistency across the system's processor caches.

Cite this article

Sterling, T., Savarese, D., Merkey, P. et al. An Empirical Evaluation of the Convex SPP-1000 Hierarchical Shared Memory System. Int J Parallel Prog 24, 377–396 (1996). https://doi.org/10.1007/BF03356755

DOI: https://doi.org/10.1007/BF03356755