Abstract

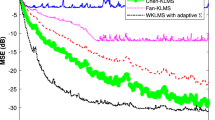

The kernel least mean square (KLMS) algorithm is the simplest kernel adaptive filtering algorithm. However, the growth of its network size remains an obstacle to online applications of KLMS, especially when the training data set is large. The Nyström method is an efficient way to curb this growth. In this paper, we apply the Nyström method to the KLMS algorithm, yielding a novel algorithm named kernel least mean square based on the Nyström method (NysKLMS). Compared with KLMS, the proposed NysKLMS algorithm significantly reduces the computational complexity. NysKLMS is proved to converge in the mean square sense when its step size satisfies certain conditions. In addition, the theoretical steady-state excess mean square error of NysKLMS is derived to evaluate its filtering accuracy, and it is supported by simulations. Simulations on system identification and nonlinear channel equalization show that NysKLMS approaches the filtering performance of KLMS at a much lower computational complexity, and that it outperforms KLMS with the novelty criterion, KLMS with the surprise criterion, quantized KLMS (QKLMS), fixed-budget QKLMS, and random Fourier features KLMS.
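The core idea in the abstract, replacing the ever-growing KLMS expansion with a fixed-size Nyström feature map on which an ordinary LMS filter runs, can be illustrated with a minimal pure-Python sketch. It is not the authors' implementation. The Gaussian kernel width, step size, diagonal jitter, the use of the first m inputs as landmarks, and all names (`NystromLMS`, `KLMS`, `demo`) are assumptions for illustration. The sketch whitens the landmark kernel vector with a Cholesky factor of the landmark Gram matrix, which gives the same feature inner products as the eigendecomposition form of the Nyström map.

```python
import math
import random


def gaussian_kernel(x, y, sigma=1.0):
    """Gaussian kernel exp(-||x - y||^2 / (2 sigma^2))."""
    d2 = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-d2 / (2.0 * sigma ** 2))


def cholesky(a):
    """Return lower-triangular L with a = L L^T (a symmetric positive definite)."""
    n = len(a)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = a[i][j] - sum(L[i][k] * L[j][k] for k in range(j))
            L[i][j] = math.sqrt(s) if i == j else s / L[j][j]
    return L


def forward_solve(L, b):
    """Solve L z = b for lower-triangular L by forward substitution."""
    z = []
    for i in range(len(b)):
        s = b[i] - sum(L[i][k] * z[k] for k in range(i))
        z.append(s / L[i][i])
    return z


class NystromLMS:
    """Hypothetical sketch: linear LMS on an m-dimensional Nystrom feature map."""

    def __init__(self, landmarks, step_size=0.5, sigma=1.0, jitter=1e-6):
        self.landmarks = landmarks
        self.mu = step_size
        self.sigma = sigma
        m = len(landmarks)
        # Landmark Gram matrix K_mm; the small diagonal jitter (an assumption) keeps it well conditioned.
        K = [[gaussian_kernel(landmarks[i], landmarks[j], sigma) + (jitter if i == j else 0.0)
              for j in range(m)] for i in range(m)]
        self.L = cholesky(K)
        self.w = [0.0] * m  # fixed-size weight vector: the network never grows

    def features(self, x):
        # z(x) = L^{-1} k_m(x), so z(x).z(y) = k_m(x)^T K_mm^{-1} k_m(y), the Nystrom approximation.
        k = [gaussian_kernel(c, x, self.sigma) for c in self.landmarks]
        return forward_solve(self.L, k)

    def predict(self, x):
        z = self.features(x)
        return sum(wi * zi for wi, zi in zip(self.w, z))

    def update(self, x, d):
        """One LMS step w <- w + mu * e * z(x); returns the a priori error e."""
        z = self.features(x)
        e = d - sum(wi * zi for wi, zi in zip(self.w, z))
        self.w = [wi + self.mu * e * zi for wi, zi in zip(self.w, z)]
        return e


class KLMS:
    """Plain KLMS for comparison: every training input becomes a new center."""

    def __init__(self, step_size=0.5, sigma=1.0):
        self.mu, self.sigma = step_size, sigma
        self.centers, self.alphas = [], []

    def predict(self, x):
        return sum(a * gaussian_kernel(c, x, self.sigma) for c, a in zip(self.centers, self.alphas))

    def update(self, x, d):
        e = d - self.predict(x)
        self.centers.append(x)        # network size grows by one per sample
        self.alphas.append(self.mu * e)
        return e


def demo(n=500, m=20, seed=0):
    """Toy run on the noiseless target d = sin(x); target, sizes, and landmarks are assumptions."""
    rng = random.Random(seed)
    xs = [[rng.uniform(-3.0, 3.0)] for _ in range(n)]
    ds = [math.sin(x[0]) for x in xs]
    nys = NystromLMS(landmarks=xs[:m])  # hypothetical choice: first m inputs as landmarks
    klms = KLMS()
    for x, d in zip(xs, ds):
        nys.update(x, d)
        klms.update(x, d)
    return nys, klms, xs, ds
```

After `demo()` both filters have seen the same 500 samples, yet `klms` stores 500 centers while `nys` still holds only 20 weights. In this sketch, each NystromLMS step costs O(m^2) regardless of how many samples have been seen, whereas each KLMS step costs O(n) and keeps growing.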

Acknowledgements

This work was supported by the National Natural Science Foundation of China (Grant No. 61671389), the China Postdoctoral Science Foundation Funded Project (Grant No. 2017M620779), the Chongqing Postdoctoral Science Foundation Special Funded Project (Grant No. Xm2017107), and the Fundamental Research Funds for the Central Universities (Grant No. XDJK2016C096).

About this article

Cite this article

Wang, SY., Wang, WY., Dang, LJ. et al. Kernel Least Mean Square Based on the Nyström Method. Circuits Syst Signal Process 38, 3133–3151 (2019). https://doi.org/10.1007/s00034-018-1006-2

DOI: https://doi.org/10.1007/s00034-018-1006-2