Abstract

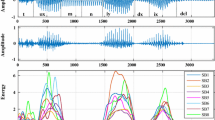

Automatic detection of vowels plays a significant role in the analysis and synthesis of speech signals. Detecting vowels within a speech utterance in noisy environments and varied contexts is a very challenging task. In this work, a robust technique based on non-local means (NLM) estimation is proposed for the detection of vowels in noisy speech signals. In the NLM algorithm, the signal value at each sample point is estimated as a weighted sum of the signal values at other sample points within a search neighborhood. Each weight is computed from the squared difference between the signal values in two segments: one segment is kept fixed, while the other is slid over the search neighborhood. For any particular sample point, the sum of these weight values is significantly lower when the segments under consideration have higher magnitude. In a given speech signal, vowels are regions of high energy, and this remains true even under noisy conditions. The sum of weight values (SWV) computed at each time instant is therefore used as a discriminating feature for detecting the vowels in a given speech signal. In the proposed approach, regions where the SWV exhibits significant transitions and remains lower than in the preceding and succeeding regions for a considerable duration are hypothesized to be vowels. This hypothesis is statistically validated for vowel detection under clean as well as noisy test conditions. For comparison, a three-class statistical classifier (vowel, non-vowel and silence) is also developed for detecting the vowels in a given speech signal. This classifier uses mel-frequency cepstral coefficients as the acoustic feature vectors and a deep neural network-hidden Markov model (DNN-HMM) for acoustic modeling.
The proposed vowel detection method is observed to outperform the DNN-HMM-based statistical classifiers as well as existing signal processing approaches under both clean and noisy test conditions.
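
The SWV feature can be illustrated with a short Python sketch. It assumes the Gaussian-kernel weight commonly used in 1-D NLM denoising, w(s, t) = exp(-sum of squared patch differences / (2 P lam^2)), which is an assumption and not taken from this paper. The patch and search half-widths, the bandwidth `lam`, the smoothing width, the fixed `threshold` and `min_len` are hypothetical values chosen for a toy signal, and the fixed-threshold rule in `detect_vowels` is a simplified stand-in for the paper's adaptive decision logic, not the authors' implementation. The intuition it encodes: in a high-energy, rapidly varying region a fixed patch matches few shifted patches, so the weights decay and the SWV drops, whereas in low-level noise almost every patch matches and the normalized SWV stays near 1.

```python
import math
import random
from typing import List, Sequence, Tuple


def sum_of_weights(x: Sequence[float], patch_half: int = 3,
                   search_half: int = 20, lam: float = 0.05) -> List[float]:
    """Normalized NLM sum of weight values (SWV) at every sample index.

    Assumed weight form (standard 1-D NLM, not taken from the paper):
        w(s, t) = exp(-sum_d (x[s+d] - x[t+d])**2 / (2 * P * lam**2))
    with P = 2 * patch_half + 1 and d in [-patch_half, patch_half].
    """
    n = len(x)
    p = 2 * patch_half + 1
    k = 2 * search_half + 1
    denom = 2.0 * p * lam ** 2

    def get(i: int) -> float:
        # Replicate the edge samples so every patch has full length.
        return x[min(max(i, 0), n - 1)]

    swv = []
    for s in range(n):
        # Fixed patch centred on the current sample s.
        fixed = [get(s + d) for d in range(-patch_half, patch_half + 1)]
        total = 0.0
        for t in range(s - search_half, s + search_half + 1):
            # Second patch, centred on t, slides over the search neighborhood.
            dist = sum((fixed[j] - get(t + j - patch_half)) ** 2
                       for j in range(p))
            total += math.exp(-dist / denom)
        # Divide by the neighborhood size: 1.0 means every patch matched.
        swv.append(total / k)
    return swv


def moving_average(v: Sequence[float], half: int) -> List[float]:
    """Centred moving average, with the window shrunk at the edges."""
    out = []
    for i in range(len(v)):
        lo, hi = max(0, i - half), min(len(v), i + half + 1)
        out.append(sum(v[lo:hi]) / (hi - lo))
    return out


def detect_vowels(x: Sequence[float], threshold: float = 0.5,
                  min_len: int = 50, smooth_half: int = 10,
                  **nlm_args) -> List[Tuple[int, int]]:
    """Return half-open (start, end) sample ranges where smoothed SWV stays low.

    Hypothetical fixed-threshold rule; not the paper's adaptive decision logic.
    """
    swv = moving_average(sum_of_weights(x, **nlm_args), smooth_half)
    regions: List[Tuple[int, int]] = []
    start = None
    # A trailing +inf sentinel closes a low-SWV run that reaches the end.
    for i, value in enumerate(swv + [math.inf]):
        if value < threshold:
            if start is None:
                start = i
        elif start is not None:
            if i - start >= min_len:
                regions.append((start, i))
            start = None
    return regions


if __name__ == "__main__":
    rng = random.Random(0)
    # Toy signal: low-level noise plus a periodic, high-energy burst in
    # samples 200-399 that stands in for a vowel (not real speech).
    sig = [rng.gauss(0.0, 0.01) for _ in range(600)]
    for i in range(200, 400):
        sig[i] += 0.5 * math.sin(2 * math.pi * i / 20)
    print(detect_vowels(sig))
```

On this toy signal the detector should report a single range close to the burst at samples 200-399. Dividing the SWV by the neighborhood size keeps it in (0, 1], which makes the threshold easier to interpret when `search_half` changes; a real system would work on speech at a known sampling rate, with every parameter tuned on data.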

Acknowledgements

We thank the anonymous reviewers for their careful reading of our manuscript and their insightful comments and suggestions that have greatly improved the quality of the manuscript.

Cite this article

Kumar, A., Pradhan, G. & Shahnawazuddin, S. An Adaptive Method for Robust Detection of Vowels in Noisy Environment. Circuits Syst Signal Process 38, 4180–4201 (2019). https://doi.org/10.1007/s00034-019-01052-x
