Abstract

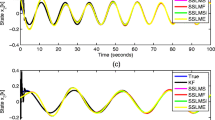

To improve the tracking accuracy for time-varying sparse signals, this paper proposes a sparse state Kalman filter algorithm based on the Kalman gain matrix. The mean square error is minimized under a sparsity constraint on the state, and the resulting optimization problem is solved with the symmetric alternating direction method of multipliers (symmetric ADMM). While the update expression of the state estimate is kept unchanged, this yields a Kalman gain matrix that makes the state estimate sparse. Two different sparsity constraints are discussed: an L1-norm constraint and a cardinality-function constraint. The proposed algorithm can be implemented within the framework of the conventional Kalman filter, without introducing any additional framework. Two dynamic signal models are simulated: a slowly changing signal and a signal whose nonzero elements follow a random walk. Simulation results show that the proposed algorithm improves the tracking accuracy of the conventional Kalman filter for time-varying sparse signals.
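The abstract describes the method only at a high level. The NumPy sketch below shows one way the idea could be realized for the L1 constraint, and it rests on several assumptions that are not taken from the paper: the mean square error is written in Joseph form \(f(K) = \mathrm{tr}((I - KH)P(I - KH)^{T} + KRK^{T})\), the splitting is \(K = G\) with the sparsity term placed on \(G\), and the penalty \(\rho\), the symmetric dual step sizes \(r = s = 0.9\) and the iteration count are illustrative choices. The helper names `l1_gain_prox` and `sparse_gain_admm` are hypothetical. It is a sketch of the general technique, not the authors' implementation.

```python
import numpy as np


def l1_gain_prox(V, x_pred, e, tau):
    """Row-wise minimiser of tau*||x_pred + G @ e||_1 + 0.5*||G - V||_F^2 (tau = beta/rho).

    Each row G_i is V_i shifted along e so that x_pred_i + G_i @ e equals the
    soft-thresholded value of x_pred_i + V_i @ e (threshold tau * e^T e).
    """
    ee = float(e @ e)
    if ee == 0.0:                                # zero innovation: G cannot change the state
        return V.copy()
    s = x_pred + V @ e                           # s_i = x_pred_i + V_i e
    target = np.sign(s) * np.maximum(np.abs(s) - tau * ee, 0.0)
    return V + np.outer((target - s) / ee, e)


def sparse_gain_admm(P_pred, H, R, x_pred, e, beta, rho=1.0, step=0.9, iters=300):
    """Symmetric ADMM for  min f(K) + beta*||x_pred + G e||_1  s.t.  K = G,
    with the (assumed) Joseph-form MSE f(K) = tr((I - K H) P (I - K H)^T + K R K^T)."""
    n, m = P_pred.shape[0], H.shape[0]
    S = H @ P_pred @ H.T + R                     # innovation covariance
    PHt = P_pred @ H.T
    A_inv = np.linalg.inv(2.0 * S + rho * np.eye(m))
    G = PHt @ np.linalg.inv(S)                   # warm start: conventional Kalman gain
    Lam = np.zeros((n, m))                       # Lagrange multiplier for K - G = 0
    for _ in range(iters):
        # K-step: closed-form minimiser of f(K) + <Lam, K> + rho/2 ||K - G||_F^2
        K = (2.0 * PHt + rho * G - Lam) @ A_inv
        Lam = Lam + step * rho * (K - G)         # first dual update (symmetric ADMM)
        # G-step: proximal step of the sparsity term at V = K + Lam / rho
        G = l1_gain_prox(K + Lam / rho, x_pred, e, beta / rho)
        Lam = Lam + step * rho * (K - G)         # second dual update
    return G


# Hypothetical example: 8 states, 4 measurements, sparse true state.
rng = np.random.default_rng(0)
n, m = 8, 4
H = rng.standard_normal((m, n))
P_pred, R = np.eye(n), 0.01 * np.eye(m)
x_pred = np.zeros(n)                             # predicted state x_{t|t-1}
x_true = np.zeros(n)
x_true[[1, 5]] = [1.0, -2.0]
y = H @ x_true + 0.1 * rng.standard_normal(m)
e = y - H @ x_pred                               # innovation e_t

K_conv = P_pred @ H.T @ np.linalg.inv(H @ P_pred @ H.T + R)
x_conv = x_pred + K_conv @ e                     # conventional Kalman update
G = sparse_gain_admm(P_pred, H, R, x_pred, e, beta=0.05)
x_sparse = x_pred + G @ e                        # same update expression, sparsity-promoting gain
```

The design keeps the filter's update line \(\hat{x}_{t} = \hat{x}_{t|t-1} + G_{t} e_{t}\) untouched and only changes how the gain is computed. With `beta=0.0` the iteration stays at the conventional Kalman gain, and increasing `beta` pushes more entries of the updated state to zero.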


Data Availability

The datasets generated and analyzed during the current study are available from the corresponding author on reasonable request.


Acknowledgements

This work was supported by the China Academy of Railway Sciences Locomotive Running Department Condition Monitoring System (Grant No. 9151524108).


Ethics declarations

Conflict of interest

The authors state that they have no conflict of interest.


Appendix


Solution of the \(G_{t}\) subproblem:

1. When \(g\left( {G_{t} } \right) = \left\| {{\hat{x}}_{t|t - 1} + G_{t} \cdot e_{t} } \right\|_{1}\):

Because the objective is piecewise, its minimizer can be found by treating each region separately. Writing region a (where \(\hat{x}_{t|t - 1,i} + G_{t,i} \cdot e_{t} \ge 0\)) as an optimization problem:

First, solve the unconstrained problem. By the optimality conditions:

When \(\hat{x}_{t|t - 1,i} + G_{t,i} \cdot e_{t} = V_{i}^{k} \cdot e_{t} + \hat{x}_{t|t - 1,i} - \frac{\beta }{\rho }e_{t}^{T} \cdot e_{t} \ge 0\), that is, when \(V_{i}^{k} \cdot e_{t} + \hat{x}_{t|t - 1,i} \ge \frac{\beta }{\rho }e_{t}^{T} \cdot e_{t}\), the unconstrained minimizer lies in region a:

When \(V_{i}^{k} \cdot e_{t} + \hat{x}_{t|t - 1,i} < \frac{\beta }{\rho }e_{t}^{T} \cdot e_{t}\), the optimal solution lies on the boundary \(\hat{x}_{t|t - 1,i} + G_{t,i} \cdot e_{t} = 0\). This is equivalent to solving an equality-constrained optimization problem, whose Lagrange function is:

According to the optimality conditions:

Hence:

In conclusion:
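As a hedged reconstruction of the region-a steps, assume the \(G_{t}\) subproblem separates by rows into \(\beta\,|\hat{x}_{t|t-1,i} + G_{t,i} e_{t}| + \frac{\rho}{2}\|G_{t,i} - V_{i}^{k}\|_{2}^{2}\), with \(G_{t,i}\) and \(V_{i}^{k}\) row vectors. This is the form consistent with the threshold \(\frac{\beta}{\rho}e_{t}^{T}e_{t}\) stated above, but it is an assumption, and \(\lambda\) is a multiplier introduced here. The lines below give the region-a problem, its unconstrained stationarity condition, the boundary Lagrange function with its optimality conditions, and the resulting rule.

```latex
\begin{align*}
&\min_{G_{t,i}}\ \beta\left(\hat{x}_{t|t-1,i} + G_{t,i} e_t\right)
  + \frac{\rho}{2}\left\|G_{t,i} - V_i^{k}\right\|_2^2
  \quad \text{s.t.}\ \hat{x}_{t|t-1,i} + G_{t,i} e_t \ge 0\\
&\beta e_t^{T} + \rho\left(G_{t,i} - V_i^{k}\right) = 0
  \;\Rightarrow\; G_{t,i} = V_i^{k} - \frac{\beta}{\rho} e_t^{T}\\
&L\left(G_{t,i},\lambda\right) = \frac{\rho}{2}\left\|G_{t,i} - V_i^{k}\right\|_2^2
  + \lambda\left(\hat{x}_{t|t-1,i} + G_{t,i} e_t\right)\\
&\rho\left(G_{t,i} - V_i^{k}\right) + \lambda e_t^{T} = 0,\quad
  \hat{x}_{t|t-1,i} + G_{t,i} e_t = 0
  \;\Rightarrow\; \lambda = \frac{\rho\left(\hat{x}_{t|t-1,i} + V_i^{k} e_t\right)}{e_t^{T} e_t}\\
&G_{t,i} =
\begin{cases}
V_i^{k} - \dfrac{\beta}{\rho} e_t^{T}, &
  V_i^{k} e_t + \hat{x}_{t|t-1,i} \ge \dfrac{\beta}{\rho} e_t^{T} e_t,\\[2mm]
V_i^{k} - \dfrac{\hat{x}_{t|t-1,i} + V_i^{k} e_t}{e_t^{T} e_t}\, e_t^{T}, & \text{otherwise.}
\end{cases}
\end{align*}
```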

Next, write region b as an optimization problem:

Similarly:

In summary:
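Combining regions a and b gives a per-row rule with three cases. The NumPy sketch below writes it case by case and assumes the row-wise objective \(\beta\|\hat{x}_{t|t-1} + G_{t} e_{t}\|_{1} + \frac{\rho}{2}\|G_{t} - V^{k}\|_{F}^{2}\). `g_update_l1` and `objective` are hypothetical names, and the random `V` is only a stand-in for \(V^{k}\). Equivalently, the updated state \(\hat{x}_{t|t-1,i} + G_{t,i} e_{t}\) is the soft-thresholded value of \(\hat{x}_{t|t-1,i} + V_{i}^{k} e_{t}\) with threshold \(\frac{\beta}{\rho}e_{t}^{T}e_{t}\).

```python
import numpy as np


def g_update_l1(V, x_pred, e, beta, rho):
    """G_t-subproblem for g = ||x_pred + G e||_1, assuming the objective
    beta*g(G) + rho/2*||G - V||_F^2; written case by case like the appendix."""
    ee = float(e @ e)
    thr = beta / rho * ee                        # (beta/rho) e^T e
    G = np.empty_like(V)
    for i in range(V.shape[0]):
        s = V[i] @ e + x_pred[i]                 # V_i^k e_t + x_hat_{t|t-1,i}
        if s >= thr:                             # region a, interior minimiser
            G[i] = V[i] - beta / rho * e
        elif s <= -thr:                          # region b, interior minimiser
            G[i] = V[i] + beta / rho * e
        else:                                    # boundary: x_hat_i + G_i e = 0
            G[i] = V[i] - s / ee * e
    return G


def objective(G, V, x_pred, e, beta, rho):
    """Value of beta*||x_pred + G e||_1 + rho/2*||G - V||_F^2."""
    return beta * np.abs(x_pred + G @ e).sum() + rho / 2 * np.sum((G - V) ** 2)


rng = np.random.default_rng(1)
V = rng.standard_normal((6, 3))                  # stand-in for V^k (hypothetical values)
x_pred = rng.standard_normal(6)
e = rng.standard_normal(3)
beta, rho = 0.5, 1.0
G = g_update_l1(V, x_pred, e, beta, rho)
x_new = x_pred + G @ e                           # rows inside the threshold become (numerically) zero
```

When \(e_{t} = 0\) the threshold is zero and the first two branches cover every row, so the division in the boundary branch is never reached.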

2. When \(g\left( {G_{t} } \right) = {\text{card}}\left( {\hat{x}_{t|t - 1} + G_{t} \cdot e_{t} } \right)\):

Note that when \(\hat{x}_{t|t - 1,i} + G_{t,i} \cdot e_{t} \ne 0\), \(G_{t,i} = V_{i}^{k}\) minimizes the objective function, and the minimum value is \(\beta\). When \({\hat{x}}_{t|t - 1,i} + G_{t,i} \cdot e_{t} = 0\), the problem becomes an equality-constrained optimization problem:

Its Lagrange function is:

By the optimality conditions:

Hence:

In summary:
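Under the same assumed row-wise form, \(\beta\,\mathrm{card}(\hat{x}_{t|t-1,i} + G_{t,i} e_{t}) + \frac{\rho}{2}\|G_{t,i} - V_{i}^{k}\|_{2}^{2}\), which matches the minimum value \(\beta\) quoted above for the nonzero branch, the zero branch and the final comparison could be written as follows. This is a reconstruction rather than the authors' displayed equations; \(\lambda\) is a multiplier introduced here, and assigning the tie at equality to the zero branch is an assumption.

```latex
\begin{align*}
&\min_{G_{t,i}}\ \frac{\rho}{2}\left\|G_{t,i} - V_i^{k}\right\|_2^2
  \quad \text{s.t.}\ \hat{x}_{t|t-1,i} + G_{t,i} e_t = 0\\
&L\left(G_{t,i},\lambda\right) = \frac{\rho}{2}\left\|G_{t,i} - V_i^{k}\right\|_2^2
  + \lambda\left(\hat{x}_{t|t-1,i} + G_{t,i} e_t\right)\\
&\rho\left(G_{t,i} - V_i^{k}\right) + \lambda e_t^{T} = 0
  \;\Rightarrow\; G_{t,i} = V_i^{k} - \frac{\hat{x}_{t|t-1,i} + V_i^{k} e_t}{e_t^{T} e_t}\, e_t^{T},
  \quad \text{with objective value } \frac{\rho}{2}\,\frac{\left(\hat{x}_{t|t-1,i} + V_i^{k} e_t\right)^2}{e_t^{T} e_t}\\
&G_{t,i}^{k+1} =
\begin{cases}
V_i^{k} - \dfrac{\hat{x}_{t|t-1,i} + V_i^{k} e_t}{e_t^{T} e_t}\, e_t^{T}, &
  \left|\hat{x}_{t|t-1,i} + V_i^{k} e_t\right| \le \sqrt{\dfrac{2\beta}{\rho}\, e_t^{T} e_t},\\[2mm]
V_i^{k}, & \text{otherwise.}
\end{cases}
\end{align*}
```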

Notice that when \({\hat{x}}_{t|t - 1,i} + V_{i}^{k} \cdot e_{t} = 0\), \(G_{t,i}^{k + 1} = V_{i}^{k}\).
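The cardinality case therefore acts as a hard threshold: an entry of the updated state is forced to zero only when doing so costs no more than \(\beta\). The NumPy sketch below assumes the objective \(\beta\,\mathrm{card}(\hat{x}_{t|t-1} + G_{t} e_{t}) + \frac{\rho}{2}\|G_{t} - V^{k}\|_{F}^{2}\). `g_update_card` is a hypothetical name, and the random `V` is only a stand-in for \(V^{k}\).

```python
import numpy as np


def g_update_card(V, x_pred, e, beta, rho):
    """G_t-subproblem for g = card(x_pred + G e), assuming the objective
    beta*card(x_pred + G e) + rho/2*||G - V||_F^2. Gives a hard-threshold rule."""
    ee = float(e @ e)
    G = V.copy()                                 # nonzero branch: G_i = V_i, cost beta
    if ee == 0.0:                                # zero innovation: G cannot change the state
        return G
    for i in range(V.shape[0]):
        s = V[i] @ e + x_pred[i]                 # x_hat_{t|t-1,i} + V_i^k e_t
        cost_zero = rho / 2 * s ** 2 / ee        # cost of the zero branch (projection)
        if cost_zero <= beta:                    # zeroing the entry is no more expensive
            G[i] = V[i] - s / ee * e             # projection onto x_hat_i + G_i e = 0
    return G


rng = np.random.default_rng(2)
V = rng.standard_normal((6, 3))                  # stand-in for V^k (hypothetical values)
x_pred = rng.standard_normal(6)
e = rng.standard_normal(3)
beta, rho = 0.3, 1.0
G = g_update_card(V, x_pred, e, beta, rho)
x_new = x_pred + G @ e
```

If \(\hat{x}_{t|t-1,i} + V_{i}^{k} e_{t} = 0\), the projection step adds nothing and the row stays equal to \(V_{i}^{k}\), in line with the remark above.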

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Shao, T., Luo, Q. A Sparse State Kalman Filter Algorithm Based on Kalman Gain. Circuits Syst Signal Process 42, 2305–2320 (2023). https://doi.org/10.1007/s00034-022-02215-z
