Abstract

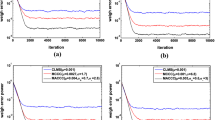

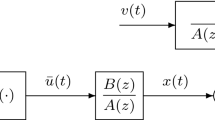

Recently, the widely linear complex-valued estimated-input adaptive filter (WLC-EIAF) for processing noisy inputs and outputs has received increasing attention in machine learning and signal processing. In this paper, a fixed-point widely linear complex-valued estimated-input maximum complex correntropy criterion (FPWLC-EIMCCC) algorithm is proposed to handle noncircular complex signals with noise in both the input and the output. Compared with existing algorithms, the proposed FPWLC-EIMCCC, benefiting from the fixed-point method, achieves both better steady-state performance and faster convergence. Furthermore, a theoretical analysis of FPWLC-EIMCCC is carried out by transforming it into a gradient-like form using the matrix inversion lemma and some approximations. Simulation results on system identification, channel estimation, and wind prediction show that FPWLC-EIMCCC significantly improves the filtering performance.
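
The abstract does not spell out the fixed-point update, so the following is only a hedged illustration of the general idea, not the FPWLC-EIMCCC recursion itself (which additionally performs estimated-input bias compensation and runs sample by sample). It is a minimal batch sketch, assuming a Gaussian kernel of width \(\sigma\) and widely linear (augmented) regressors \(\mathbf{x}_{c,i} = [\mathbf{x}_i^T \; \mathbf{x}_i^H]^T\). Setting the gradient of \(\sum_i \exp(-|e_i|^2/2\sigma^2)\) with respect to \(\mathbf{w}^*\) to zero, with the kernel weights frozen, gives the fixed-point equation \(\mathbf{w} = \left(\sum_i G_\sigma(e_i)\,\mathbf{x}_{c,i}\mathbf{x}_{c,i}^H\right)^{-1} \sum_i G_\sigma(e_i)\,\mathbf{x}_{c,i} d_i^*\), which is iterated below. The function names, the kernel width, and the synthetic noncircular data with impulsive outliers are all hypothetical choices made for this sketch.

```python
import numpy as np

def augment(x):
    """Stack each regressor with its conjugate: row i becomes [x_i^T, x_i^H]."""
    return np.concatenate([x, np.conj(x)], axis=1)

def fixed_point_mccc(X, d, sigma=1.0, n_iter=20):
    """Hypothetical batch fixed-point iteration for a Gaussian-kernel
    complex correntropy cost (no bias compensation).

    X: (N, M) complex regressors (row i is x_i), d: (N,) desired samples.
    Returns w for the model y_i = w^H x_i.
    """
    g = np.ones(len(d))  # first pass: ordinary least squares (all weights 1)
    for _ in range(n_iter):
        R = (X.T * g) @ np.conj(X)  # sum_i g_i x_i x_i^H
        p = (X.T * g) @ np.conj(d)  # sum_i g_i x_i d_i^*
        w = np.linalg.solve(R, p)
        e = d - X @ np.conj(w)  # e_i = d_i - w^H x_i
        g = np.exp(-np.abs(e) ** 2 / (2 * sigma ** 2))  # kernel weights
    return w

# Demo: noncircular input (unequal real/imaginary variances) plus impulsive noise
rng = np.random.default_rng(0)
N, L = 2000, 4
x = rng.standard_normal((N, L)) + 0.3j * rng.standard_normal((N, L))
X = augment(x)
w_true = rng.standard_normal(2 * L) + 1j * rng.standard_normal(2 * L)
d = X @ np.conj(w_true) + 0.01 * (rng.standard_normal(N) + 1j * rng.standard_normal(N))
idx = rng.choice(N, size=40, replace=False)  # 2% of samples hit by outliers
d[idx] += 50 * (rng.standard_normal(40) + 1j * rng.standard_normal(40))

w_hat = fixed_point_mccc(X, d, sigma=1.0)
print(np.max(np.abs(w_hat - w_true)))  # maximum weight error
```

Because the kernel weight \(\exp(-|e_i|^2/2\sigma^2)\) is essentially zero for the large residuals produced by impulsive samples, those samples drop out of the weighted normal equations after the first pass. The price is re-solving a \(2L \times 2L\) linear system at every iteration, which is the kind of cost that matrix-inversion-lemma recursions are typically used to reduce.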

Data Availability

The datasets generated during and/or analyzed during the current study are available from the corresponding author on reasonable request.

References

T. Adali, P.J. Schreier, L.L. Scharf, Complex-valued signal processing: The proper way to deal with impropriety. IEEE Trans. Signal Process. 59(11), 5101–5125 (2011)

M. Alquran, A.R. Mayyas, Design of a nonlinear stability controller for ground vehicles subjected to a tire blowout using double-integral sliding-mode controller. SAE Int. J. Veh. Dyn. Stab. NVH 5(3), 1–5 (2021)

H. Cheng, S. Chen, Fading performance evaluation of a semi-blind adaptive space–time equaliser for frequency selective MIMO systems. J. Frankl. Inst. 348(10), 2823–2838 (2011)

B. Chen, X. Wang, N. Lu, S. Wang, J. Cao, J. Qin, Mixture correntropy for robust learning. Pattern Recognit. 79, 318–327 (2018)

L. Chen, H. Qu, J. Zhao, Generalized Correntropy based deep learning in presence of non-Gaussian noises. Neurocomputing 278, 41–50 (2018)

F. Dong, G. Qian, S. Wang, Complex Correntropy with variable center: Definition, properties, and application to adaptive filtering. Entropy 22(1), 70 (2020)

F. Dong, G. Qian, S. Wang, Bias-Compensated MCCC Algorithm for Widely Linear Adaptive Filtering with Noisy Data. IEEE Trans. Circuits Syst. II Express Briefs 67(12), 3587–3591 (2020)

G. Gowtham, S. Burra, A. Kar, J. Østergaard, P. Sooraksa, V. Mladenovic, D.B. Haddad, A Family of adaptive Volterra filters based on maximum correntropy criterion for improved active control of impulsive noise. Circuits Syst. Signal Process. 41(2), 1019–1037 (2022)

J.P.F. Guimaraes, A.I.R. Fontes, J.B.A. Rego, A.D.M. Martins, J.C. Principe, Complex correntropy: Probabilistic interpretation and application to complex-valued data. IEEE Signal Process. Lett. 24(1), 42–45 (2016)

J.P.F. Guimaraes, A.I.R. Fontes, J.B.A. Rego, A.D.M. Martins, J.C. Principe, Complex correntropy function: Properties, and application to a channel equalization problem. Expert Syst. Appl. 107, 173–181 (2018)

J.P.F. Guimaraes, F.B. Da Silva, A.I.R. Fontes, R. Von Borries, A.D.M. Martins, Complex correntropy applied to a compressive sensing problem in an impulsive noise environment. IEEE Access 7, 151652–151660 (2019)

V.C. Gogineni, S. Mula, Logarithmic cost based constrained adaptive filtering algorithms for sensor array beamforming. IEEE Sensors J. 18(14), 5897–5905 (2018)

S. Haykin, Adaptive filter theory, 3rd edn. (Prentice Hall, New York, 1996)

M. Ikenoue, S. Kanae, Z.J. Yang, K. Wada, Bias-compensation based method for errors-in-variables model identification. IFAC Proc. Vol. 41(2), 1360–1365 (2008)

L. Lu, L. Chen, Z. Zheng, Y. Yu, X. Yang, Behavior of the LMS algorithm with hyperbolic secant cost. J. Frankl. Inst. 357(3), 1943–1960 (2020)

L. Li, Y.F. Pu, Widely linear complex-valued least mean M-estimate algorithms: Design and performance analysis. Circuits Syst. Signal Process. 41(10), 5785–5806 (2022)

F. Lai, C. Huang, C. Jiang, Comparative study on bifurcation and stability control of vehicle lateral dynamics. SAE Int. J. Veh. Dyn. Stab. NVH 6(1), 35–52 (2022)

I. Markovsky, S. Van Huffel, Overview of total least-squares methods. Signal Process. 87(10), 2283–2302 (2007)

W. Ma, D. Zheng, Y. Li, Z. Zhang, B. Chen, Bias-compensated normalized maximum correntropy criterion algorithm for system identification with noisy input. Signal Process. 152, 160–164 (2018)

F. Ma, H. Bai, X. Zhang, C. Xu, Y. Li, Generalised maximum complex correntropy-based DOA estimation in presence of impulsive noise. IET Radar Sonar Navig. 14(6), 793–802 (2020)

D.P. Mandic, V.S.L. Goh, Complex valued nonlinear adaptive filters: Noncircularity, widely linear and neural models (Wiley, New York, 2009)

D.P. Mandic, S. Javidi, S.L. Goh, A. Kuh, K. Aihara, Complex-valued prediction of wind profile using augmented complex statistics. Renew. Energy 34(1), 196–201 (2009)

A. Pouradabi, A. Rastegarnia, S. Zandi, W.M. Bazzi, S. Sanei, A class of diffusion proportionate subband adaptive filters for sparse system identification over distributed networks. Circuits Syst. Signal Process. 40, 6242–6264 (2021)

G. Qian, S. Wang, L. Wang, S. Duan, Convergence analysis of a fixed point algorithm under maximum complex correntropy criterion. IEEE Signal Process. Lett. 25(12), 1830–1834 (2018)

G. Qian, S. Wang, H.H.C. Iu, Maximum total complex correntropy for adaptive filter. IEEE Trans. Signal Process. 68, 978–989 (2020)

G. Qian, X. Ning, S. Wang, Mixture complex correntropy for adaptive filter. IEEE Trans. Circuits Syst. II Express Briefs 66(8), 1476–1480 (2018)

M. Salah, M. Dessouky, B. Abdelhamid, Variable step size LMS algorithm based on function control. Circuits Syst. Signal Process. 32(6), 3121–3130 (2013)

L. Shi, H. Zhao, X. Zeng, Y. Yu, Variable step-size widely linear complex-valued NLMS algorithm and its performance analysis. Signal Process. 165, 1–6 (2019)

P.J. Schreier, L.L. Scharf, Statistical signal processing of complex-valued data: the theory of improper and noncircular signals (Cambridge University Press, Cambridge, 2010)

T. Tian, F.Y. Wu, K. Yang, Block-sparsity regularized maximum correntropy criterion for structured-sparse system identification. J. Frankl. Inst. 357(17), 12960–12985 (2020)

Z. Tianjun, H. Wan, Z. Wang, M. Wei, X. Xu, Z. Zhiliang, D. Sanmiao, Model reference adaptive control of semi-active suspension model based on AdaBoost algorithm for rollover prediction. SAE Int. J. Veh. Dyn. Stab. NVH 6(1), 71–86 (2021)

K. Triantafyllopoulos, On the central moments of the multidimensional Gaussian distribution. Math. Sci. 28(2), 125–128 (2003)

S. Van Huffel, J. Vandewalle, The total least squares problem: Computational aspects and analysis (SIAM Publishers, Philadelphia, 1991)

W. Wang, H. Zhao, L. Lu, Y. Yu, Bias-compensated constrained least mean square adaptive filter algorithm for noisy input and its performance analysis. Digit. Signal Process. 84, 26–37 (2019)

S. Wang, L. Dang, G. Qian, Y. Jiang, Kernel recursive maximum correntropy with Nyström approximation. Neurocomputing 329, 424–432 (2019)

K. Xiong, S. Wang, Robust least mean logarithmic square adaptive filtering algorithms. J. Frankl. Inst. 356(1), 654–674 (2019)

Y. Xia, D.P. Mandic, Augmented performance bounds on strictly linear and widely linear estimators with complex data. IEEE Trans. Signal Process. 66(2), 507–514 (2018)

X. Yang, T. Xie, Y. Guo, D. Zhou, Remote sensing image super-resolution based on convolutional blind denoising adaptive dense connection. IET Image Process. 15(11), 2508–2520 (2021)

X. Yang, Y. Zhang, Y. Guo, D. Zhou, An image super-resolution deep learning network based on multi-level feature extraction module. Multimed. Tools Appl. 80(5), 7063–7075 (2021)

X. Yang, X. Li, Z. Li, D. Zhou, Image super-resolution based on deep neural network of multiple attention mechanism. J. Vis. Commun. Image Represent. 75, 103019 (2021)

N.R. Yousef, A.H. Sayed, A unified approach to the steady-state and tracking analyses of adaptive filters. IEEE Trans. Signal Process. 49(2), 314–324 (2001)

Y.B. Zhao, T. Yan, W.Y. Chen, H.Z. Lu, A collaborative spline adaptive filter for nonlinear echo cancellation. Circuits Syst. Signal Process. 40(4), 1699–1719 (2021)

S. Zhang, J. Zhang, W.X. Zheng, H.C. So, Widely linear complex-valued estimated-input LMS algorithm for bias-compensated adaptive filtering with noisy measurements. IEEE Trans. Signal Process. 67(13), 3592–3605 (2019)

Y. Zhang, G. Cui, Bias compensation methods for stochastic systems with colored noise. Appl. Math. Modell. 35(4), 1709–1716 (2011)

H. Zhao, Z. Zheng, Bias-compensated affine-projection-like algorithms with noisy input. Electron. Lett. 52(9), 712–714 (2016)

Z. Zheng, Z. Liu, H. Zhao, Bias-compensated normalized least-mean fourth algorithm for noisy input. Circuits Syst. Signal Process. 36(9), 3864–3873 (2017)

X. Zhang, Matrix analysis and application, 2nd edn. (Tsinghua University Press, Beijing, 2013)

Acknowledgements

This work was supported by the Fundamental Research Funds for the Central Universities (19CX05003A-14).

Ethics declarations

Conflict of interest

The authors have no conflicts of interest to declare.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A: Evaluation of \(T_{1}\)

Taking \({\overline{\mathbf{R}}} = E\left[ {{\hat{\mathbf{x}}}_{c} {\hat{\mathbf{x}}}_{c}^{H} } \right]\) into consideration, we further obtain

Based on (46) and the block matrix inversion lemma [47], \({\overline{\mathbf{R}}}^{ - 1}\) is derived as follows:

where \(\overline{\mathbf{R}}_{1}^{-1} = \frac{1}{\sigma_{x}^{2}}\frac{1}{1 - (1 - 2\xi^{2})^{2}}\left[ \begin{array}{cc} \mathbf{I}_{L} & \mathbf{0} \\ \mathbf{0} & \mathbf{I}_{L} \end{array} \right]\), \(\overline{\mathbf{R}}_{2}^{-1} = -\frac{1}{\sigma_{x}^{2}}\frac{1 - 2\xi^{2}}{1 - (1 - 2\xi^{2})^{2}}\left[ \begin{array}{cc} \mathbf{0} & \mathbf{I}_{L} \\ \mathbf{I}_{L} & \mathbf{0} \end{array} \right]\), \(\mathbf{I}_{L}\) is the \(L \times L\) identity matrix, and \(L\) is the filter length.
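
Equation (46) is not reproduced in this section. If, as the surrounding text suggests, \(\overline{\mathbf{R}}^{-1} = \overline{\mathbf{R}}_{1}^{-1} + \overline{\mathbf{R}}_{2}^{-1}\), then the closed form above is exactly the inverse of \(\sigma_x^2 \left[ \begin{array}{cc} \mathbf{I}_{L} & a\mathbf{I}_{L} \\ a\mathbf{I}_{L} & \mathbf{I}_{L} \end{array} \right]\) with \(a = 1 - 2\xi^2\), valid whenever \(a^2 \neq 1\). The sketch below assumes that structure for \(\overline{\mathbf{R}}\) (the function names and the values of \(L\), \(\sigma_x^2\), \(\xi\) are hypothetical) and checks the identity numerically.

```python
import numpy as np

def rbar_assumed(L, sigma2, xi):
    """Assumed augmented covariance sigma_x^2 [[I, aI], [aI, I]], a = 1 - 2 xi^2."""
    a = 1 - 2 * xi ** 2
    I = np.eye(L)
    return sigma2 * np.block([[I, a * I], [a * I, I]])

def rbar_inv_closed_form(L, sigma2, xi):
    """R1^{-1} + R2^{-1} as written in Appendix A (requires a^2 != 1)."""
    a = 1 - 2 * xi ** 2
    I, Z = np.eye(L), np.zeros((L, L))
    R1_inv = (1 / sigma2) * (1 / (1 - a ** 2)) * np.block([[I, Z], [Z, I]])
    R2_inv = -(1 / sigma2) * (a / (1 - a ** 2)) * np.block([[Z, I], [I, Z]])
    return R1_inv + R2_inv

L, sigma2, xi = 4, 2.0, 0.3
R = rbar_assumed(L, sigma2, xi)
R_inv = rbar_inv_closed_form(L, sigma2, xi)
print(np.allclose(R @ R_inv, np.eye(2 * L)))  # True
print(np.allclose(R_inv, np.linalg.inv(R)))   # True
```

Multiplying the blocks by hand gives the same result: \(\left[ \begin{array}{cc} \mathbf{I} & a\mathbf{I} \\ a\mathbf{I} & \mathbf{I} \end{array} \right] \left[ \begin{array}{cc} \mathbf{I} & -a\mathbf{I} \\ -a\mathbf{I} & \mathbf{I} \end{array} \right] = (1 - a^2)\left[ \begin{array}{cc} \mathbf{I} & \mathbf{0} \\ \mathbf{0} & \mathbf{I} \end{array} \right]\).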

Thus, we have

Furthermore, we get

and

where \({\mathbf{x}}_{c,i} \left( n \right) = \left[ {{\mathbf{x}}_{i}^{T} \left( n \right) {\mathbf{x}}_{i}^{H} \left( n \right)} \right]^{T}\), and \(\frac{{1 - 2\xi^{2} }}{{(1 - 2\xi^{2} )^{2} - 1}} \le {0}\).

Based on (49), we have

Therefore, the range of \(T_{1}\) is

Appendix B: Evaluation of \(T_{2}\) and \(T_{{4}}\)

In the steady state, assuming that \(\left| {e_{a,i} \left( n \right)} \right|^{2}\) is statistically independent of \({\mathbf{x}}_{c,i}^{H} \left( n \right){\overline{\mathbf{R}}}^{ - 2} {\mathbf{x}}_{c,i} \left( n \right)\) [41] for a long adaptive filter, we get

Similarly, \(T_{{4}}\) can be rewritten as

Appendix C: Evaluation of \(T_{{3}}\)

Based on the Gaussian fourth-order moment theorem [32], we get
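
The specific moments in (55) and (56) are not reproduced here. As a generic illustration of the Gaussian fourth-order moment theorem (Isserlis' theorem): for zero-mean jointly Gaussian variables, \(E[z_1 z_2 z_3 z_4] = E[z_1 z_2]E[z_3 z_4] + E[z_1 z_3]E[z_2 z_4] + E[z_1 z_4]E[z_2 z_3]\). Applying it to \(z, z^*, z, z^*\) for a zero-mean noncircular complex Gaussian scalar gives \(E|z|^4 = 2\left(E|z|^2\right)^2 + \left|E[z^2]\right|^2\), where the pseudo-variance term vanishes only for circular signals. The Monte Carlo check below assumes an illustrative distribution (real part with unit variance, imaginary part with standard deviation 0.3), for which \(E|z|^2 = 1.09\), \(E[z^2] = 0.91\), and \(E|z|^4 = 3.2043\).

```python
import numpy as np

rng = np.random.default_rng(1)
n = 1_000_000
# Zero-mean noncircular Gaussian: unequal real/imaginary variances (illustrative choice)
z = rng.standard_normal(n) + 0.3j * rng.standard_normal(n)

var = np.mean(np.abs(z) ** 2)   # sample E|z|^2  (population value 1.09)
pvar = np.mean(z ** 2)          # sample E[z^2]  (population value 0.91)
fourth_mc = np.mean(np.abs(z) ** 4)
fourth_theory = 2 * var ** 2 + np.abs(pvar) ** 2  # 2(E|z|^2)^2 + |E z^2|^2

print(fourth_mc, fourth_theory)
```

For a circular signal (equal real and imaginary variances) the same formula reduces to \(E|z|^4 = 2\left(E|z|^2\right)^2\), which is why noncircular analyses must carry the extra pseudo-covariance term.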

Substituting (56) into (55), we have

Appendix D: Evaluation of \(T_{{5}}\)

Appendix E: Evaluation of \(T_{{6}}\), \(T_{{7}}\), \(T_{{8}}\) and \(T_{{9}}\)

Based on the independence assumption and \({\hat{\varvec{\upomega}}}^{H} \left( {n - 1} \right) \approx {\varvec{\upomega}}_{{\text{opt}}}^{H}\), \(T_{{6}}\), \(T_{{7}}\), \(T_{{8}}\) and \(T_{{9}}\) are evaluated as follows:

Appendix F: Evaluation of \(T_{{{10}}}\)

Appendix G: Evaluation of \(T_{{{11}}}\)

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Qiu, C., Ruan, Z. & Qian, G. Fixed-Point Widely Linear MCCC for Bias-Compensated Adaptive Filtering. Circuits Syst Signal Process 42, 2959–2985 (2023). https://doi.org/10.1007/s00034-022-02247-5

DOI: https://doi.org/10.1007/s00034-022-02247-5