Abstract

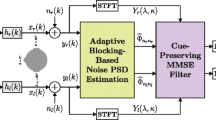

Multi-channel speech enhancement techniques are mainly based on optimal multi-channel speech estimators that comprise a minimum variance distortionless response (MVDR) beamformer followed by a single-channel Wiener post-filter. There are two problems in the application of this theoretically optimal solution. The first is the high sensitivity of the MVDR beamformer to errors in the estimated acoustic transfer function (ATF). The second is the accuracy of the time-varying post-filter coefficients estimated from non-stationary speech and noise. Mask-based beamforming developed in the last decade considerably improves the performance of the MVDR beamformer. In addition, the estimated time–frequency mask can be successfully used in post-filter design. In this paper, we propose several improvements to this approach. First, we propose an end-fire microphone array with a better directivity index than the corresponding broadside array. The proposed microphone array is composed of unidirectional microphone capsules that increase the directivity of the microphone array. Second, we propose preprocessing using a delay-and-sum beamformer before estimating the ideal ratio mask (IRM). Next, we propose a simplified generalized sidelobe canceller (S-GSC), which does not need to estimate ATF. We also improved the design of its blocking matrix by scaling the null space eigenvectors of the speech covariance matrix. The proposed computationally efficient multiple iteration method improves the adaptation of the S-GSC parameters. Finally, we improved the previous IRM-based post-filter, considering the SNR improvement at the output of the S-GSC beamformer. The integral speech enhancement procedure was tested on real room recordings using PESK, STOI, and SDR measures.

Similar content being viewed by others

Data Availability

The datasets generated and analyzed during the current study are available from the corresponding author upon reasonable request.

Notes

To be more precise, the principal eigenvector is equal to AT up to a multiplicative complex constant.

References

J. Barker, R. Marxer, E. Vincent, S. Watanabe, The third ‘CHiME’ speech separation and recognition challenge: analysis and outcomes. Comput. Speech Lang. 46, 605–626 (2017). https://doi.org/10.1016/J.CSL.2016.10.005

J. Benesty, J. Chen, Y. Huang, Microphone array signal processing, in Springer Topics in Signal Processing (Springer, 2008), pp. 1–240. https://doi.org/10.1007/978-3-540-78612-2

B.R. Breed, J. Strauss, A short proof of the equivalence of LCMV and GSC beamforming. IEEE Signal Process. Lett. 9, 168–169 (2002). https://doi.org/10.1109/LSP.2002.800506

K.M. Buckley, L.J. Griffiths, Adaptive generalized sidelobe canceller with derivative constraints. IEEE Trans. Antennas Propag. AP-34, 311–319 (1986). https://doi.org/10.1109/tap.1986.1143832

J. Capon, High-resolution frequency-wavenumber spectrum analysis. Proc. IEEE 57, 1408–1418 (1969). https://doi.org/10.1109/PROC.1969.7278

J. Chen, Y. Wang, D. Wang, A feature study for classification-based speech separation at low signal-to-noise ratios. IEEE/ACM Trans. Audio Speech Lang. Process. 22, 1993–2002 (2014). https://doi.org/10.1109/TASLP.2014.2359159

F. Chollet, Deep Learning with Python (Manning Publications, 2017)

J. Du, Y.H. Tu, L. Sun, F. Ma, H.K. Wang, J. Pan, C. Liu, J.D. Chen, C.H. Lee, The USTC-iFlytek system for CHiME-4 challenge. Proc. CHiME 4, 36–38 (2016)

G.W.X. Elko, Differential microphone arrays, in Audio Signal Processing for Next-Generation Multimedia Communication Systems. ed. by Y. Huang, J. Benesty (Springer, Boston, 2004). https://doi.org/10.1007/1-4020-7769-6_2

K. Eneman, M. Moonen, Iterated partitioned block frequency-domain adaptive filtering for acoustic echo cancellation. IEEE Trans. Speech Audio Process. 11(2), 143–158 (2003)

H. Erdogan, T. Hayashi, J. R. Hershey, T. Hori, C. Hori, W. N. Hsu, S. Kim, J. L. Roux, Z. Meng, S. Watanabe, Multi-channel speech recognition: LSTMs all the way through, in CHiME-4 Workshop (2016), pp. 1–4

H. Erdogan, J. R. Hershey, S. Watanabe, J. Le Roux, Phase-sensitive and recognition-boosted speech separation using deep recurrent neural networks, in 2015 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) (IEEE, 2015), pp. 708–712. https://doi.org/10.1109/ICASSP.2015.7178061

H. Erdogan, J. R. Hershey, S. Watanabe, M. I. Mandel, J. Le Roux, Improved mvdr beamforming using single-channel mask prediction networks, in Interspeech (2016), pp. 1981–1985

J.M. Festen, R. Plomp, Effects of fluctuating noise and interfering speech on the speech-reception threshold for impaired and normal hearing. J. Acoust. Soc. Am. 88(4), 1725–1736 (1990)

O.L. Frost, An algorithm for linearly constrained adaptive array processing. Proc. IEEE 60, 926–935 (1972). https://doi.org/10.1109/PROC.1972.8817

S. Gannot, D. Burshtein, E. Weinstein, Signal enhancement using beamforming and nonstationarity with applications to speech. IEEE Trans. Signal Process. 49, 1614–1626 (2001). https://doi.org/10.1109/78.934132

S. Gannot, E. Vincent, S. Markovich-Golan, A. Ozerov, A Consolidated perspective on multimicrophone speech enhancement and source separation. IEEE/ACM Trans. Audio Speech Lang. Process. 25, 692–730 (2017). https://doi.org/10.1109/TASLP.2016.2647702

J.S. Garofolo, L.F. Lamel, W.M. Fisher, J.G. Fiscus, D.S. Pallett, N.L. Dahlgren, V. Zue, TIMIT Acoustic-Phonetic Continuous Speech Corpus—Linguistic Data Consortium (No. LDC93S1), (University of Pennsylvania, 1993). https://doi.org/10.35111/17gk-bn40

L.J. Griffiths, C.W. Jim, An alternative approach to linearly constrained adaptive beamforming. IEEE Trans. Antennas Propag. 30, 27–34 (1982). https://doi.org/10.1109/TAP.1982.1142739

R. Haeb-Umbach, J. Heymann, L. Drude, S. Watanabe, M. Delcroix, T. Nakatani, Far-field automatic speech recognition. Proc. IEEE 109, 124–148 (2020). https://doi.org/10.1109/JPROC.2020.3018668

J.W. Hall, E. Buss, J.H. Grose, P.A. Roush, Effects of age and hearing impairment on the ability to benefit from temporal and spectral modulation. Ear Hear. 33(3), 340 (2012)

J. Heymann, M. Bacchiani, T.N. Sainath, Performance of mask based statistical beamforming in a smart home scenario, in 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) (IEEE, 2018), pp. 6722–6726. https://doi.org/10.1109/ICASSP.2018.8462372

J. Heymann, L. Drude, R. Haeb-Umbach, Neural network based spectral mask estimation for acoustic beamforming, in ICASSP, IEEE International Conference on Acoustics, Speech and Signal Processing—Proceedings (Institute of Electrical and Electronics Engineers Inc. 2016), pp. 196–200. https://doi.org/10.1109/ICASSP.2016.7471664

J. Heymann, L. Drude, R. Haeb-Umbach, Wide residual BLSTM network with discriminative speaker adaptation for robust speech recognition, in CHiME 2016 workshop, vol. 78, p. 79 (2016)

C. Marro, Y. Mahieux, K.U. Simmer, Analysis of noise reduction and dereverberation techniques based on microphone arrays with postfiltering. IEEE Trans. Speech Audio Process. 6, 240–259 (1998). https://doi.org/10.1109/89.668818

I.A. McCovan, H. Bourlard, Microphone array post-filter based on noise field coherence. IEEE Trans. Speech Audio Process. 11, 709–716 (2003). https://doi.org/10.1109/TSA.2003.818212

T. Menne, J. Heymann, A. Alexandridis, K. Irie, A. Zeyer, P. Golik, I. Kulikov, L. Drude, R. Schlüter, H. Ney, R. Häb-Umbach, A. Mouchtaris, The RWTH/UPB/FORTH system combination for the 4th CHiME challenge evaluation, in 4th CHiME Speech Separation and Recognition Challenge Workshop (2016)

C. Pan, J. Chen, J. Benesty, On the noise reduction performance of the MVDR beamformer in noisy and reverberant environments, in ICASSP, IEEE International Conference on Acoustics, Speech and Signal Processing—Proceedings (IEEE, 2014), pp. 815–819. https://doi.org/10.1109/ICASSP.2014.6853710

L. Pfeifenberger, M. Zohrer, F. Pernkopf, DNN-based speech mask estimation for eigenvector beamforming, in ICASSP, IEEE International Conference on Acoustics, Speech and Signal Processing—Proceedings (Institute of Electrical and Electronics Engineers Inc., 2017), pp. 66–70. https://doi.org/10.1109/ICASSP.2017.7952119

A.W. Rix, J.G. Beerends, M.P. Hollier, A.P. Hekstra, Perceptual evaluation of speech quality (PESQ)—a new method for speech quality assessment of telephone networks and codecs, in ICASSP, IEEE International Conference on Acoustics, Speech and Signal Processing—Proceedings, pp. 749–752 (2001). https://doi.org/10.1109/icassp.2001.941023

Z.M. Šarić, I.I. Papp, D.D. Kukolj, I. Velikić, G. Velikić, Partitioned block frequency domain acoustic echo canceller with fast multiple iterations. Digital Signal Process. 27, 119–128 (2014)

Z.M. Saric, D.P. Simic, S.T. Jovicic, A new post-filter algorithm combined with two-step adaptive beamformer. Circuits Syst. Signal Process. 30, 483–500 (2011). https://doi.org/10.1007/s00034-010-9233-1

Z.M. Šarić, M. Subotić, R. Bilibajkić, M. Barjaktarović, J. Stojanović, Supervised speech separation combined with adaptive beamforming. Comput. Speech Lang. 76, 101409 (2022). https://doi.org/10.1016/j.csl.2022.101409

Z.M. Saric, M. Subotic, R. Bilibajkic, M. Barjaktarovic, N. Zdravkovic, Performance analysis of MVDR beamformer applied on an end-fire microphone array composed of unidirectional microphones. Arch. Acoust. 46(4), 611–621 (2021). https://doi.org/10.24425/aoa.2021.138154

S. Siami-Namini, N. Tavakoli, A.S. Namin, The performance of LSTM and BiLSTM in forecasting time series, in IEEE International Conference on Big Data (Big Data) (2019), pp. 3285–3292. https://doi.org/10.1109/BigData47090.2019.9005997

K.U. Simmer, J. Bitzer, C. Marro, Post-filtering techniques, in Microphone Arrays. ed. by M. Brandstein, D. Ward (Springer, Berlin, 2001), pp. 39–60. https://doi.org/10.1007/978-3-662-04619-7_3

M. Souden, J. Benesty, S. Affes, On optimal frequency-domain multichannel linear filtering for noise reduction. IEEE Trans. Audio Speech Lang. Process. 18(2), 260–276 (2009)

M. Strake, B. Defraene, K. Fluyt, W. Tirry, T. Fingscheidt, Speech enhancement by LSTM-based noise suppression followed by CNN-based speech restoration. EURASIP J. Adv. Signal Process. 2020, 49 (2020). https://doi.org/10.1186/s13634-020-00707-1

C.H. Taal, R.C. Hendriks, R. Heusdens, J. Jensen, An algorithm for intelligibility prediction of time-frequency weighted noisy speech. IEEE Trans. Audio Speech Lang. Process. 19, 2125–2136 (2011). https://doi.org/10.1109/TASL.2011.2114881

Y. Tachioka, S. Watanabe, T. Hori, The MELCO/MERL system combination approach for the fourth CHiME challenge, in Proceedings of the Fourth CHiME Challenge Workshop (2016), pp. 1–3

H.L. Van Trees, Optimum Array Processing (Wiley, New York, 2002). https://doi.org/10.1002/0471221104

A. Varga, H.J.M. Steeneken, Assessment for automatic speech recognition: II. NOISEX-92: a database and an experiment to study the effect of additive noise on speech recognition systems. Speech Commun. 12, 247–251 (1993). https://doi.org/10.1016/0167-6393(93)90095-3

E. Vincent, R. Gribonval, C. Févotte, Performance measurement in blind audio source separation. IEEE Trans. Audio Speech Lang. Process. 14, 1462–1469 (2006). https://doi.org/10.1109/TSA.2005.858005

D. Wang, J. Chen, Supervised speech separation based on deep learning: an overview. IEEE/ACM Trans. Audio Speech Lang. Process. (2018). https://doi.org/10.1109/TASLP.2018.2842159

Y. Wang, K. Han, D. Wang, Exploring monaural features for classification-based speech segregation. IEEE Trans. Audio Speech Lang. Process. Trans. Audio Speech Lang. Process. 21(2), 270–279 (2013)

Y. Wang, A. Narayanan, D.L. Wang, On training targets for supervised speech separation. IEEE/ACM Trans. Audio Speech Lang. Process. 22, 1849–1858 (2014). https://doi.org/10.1109/TASLP.2014.2352935

E. Warsitz, R. Haeb-Umbach, Blind acoustic beamforming based on generalized eigenvalue decomposition. IEEE Trans. Audio Speech Lang. Process. 15(5), 1529–1539 (2007)

F. Weninger, H. Erdogan, S. Watanabe, E. Vincent, J. Le Roux, J.R. Hershey, B. Schuller, Speech enhancement with LSTM recurrent neural networks and its application to noise-robust ASR, in Latent variable analysis and signal separation. LVA/ICA 2015. Lecture Notes in Computer Science, vol. 9237, ed. by E. Vincent, A. Yeredor, Z. Koldovský, P. Tichavský (Springer, Cham, 2015). https://doi.org/10.1007/978-3-319-22482-4_11

H. Xiang, B. Wang, Z. Ou, The THU-SPMI CHiME-4 system: lightweight design with advanced multi-channel processing, feature enhancement, and language modeling, in CHiME-4 Workshop (2016)

X. Xiao, S. Zhao, D.H.H. Nguyen, X. Zhong, D.L. Jones, E.S. Chng, H. Li, The NTU-ADSC systems for reverberation challenge 2014, in Proceedings of REVERB Challenge Workshop (Spoken Language Systems MIT Computer Science and Artificial Intelligence Laboratory, Cambridge, MA, USA, 2014), p. 2

T. Yoshioka, N. Ito, M. Delcroix, A. Ogawa, K. Kinoshita, M. Fujimoto, C. Yu, W.J. Fabian, M. Espi, T. Higuchi, S. Araki, T. Nakatani, The NTT CHiME-3 system: advances in speech enhancement and recognition for mobile multi-microphone devices, in 2015 IEEE Workshop on Automatic Speech Recognition and Understanding, ASRU 2015—Proceeding (2016), pp. 436–443. https://doi.org/10.1109/ASRU.2015.7404828

R. Zelinski, A microphone array with adaptive post-filtering for noise reduction in reverberant rooms, in ICASSP, IEEE International Conference on Acoustics, Speech and Signal Processing—Proceedings (IEEE, 1988), pp. 2578–2581. https://doi.org/10.1109/icassp.1988.197172

X. Zhang, Z.Q. Wang, D. Wang, A speech enhancement algorithm by iterating single- and multi-microphone processing and its application to robust ASR, in ICASSP, IEEE International Conference on Acoustics, Speech and Signal Processing—Proceedings (Institute of Electrical and Electronics Engineers Inc., 2017), pp. 276–280. https://doi.org/10.1109/ICASSP.2017.7952161

X.L. Zhang, Deep ad-hoc beamforming. Comput. Speech Lang. (2021). https://doi.org/10.1016/j.csl.2021.101201

Acknowledgements

This paper results from research funded by the Ministry of Education, Science and Technological Development of the Republic of Serbia.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Competing interest

The authors declare that there are no conflicts of interest regarding the publication of this paper.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

Appendix

The computational complexity of individual processing steps for the proposed solution is displayed in Tables 5, 6 and 7. Constants used in the calculations are defined in Table 4. Table 8 displays a summary.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Šarić, Z., Subotić, M., Bilibajkić, R. et al. Mask-Based Beamforming Applied to the End-Fire Microphone Array. Circuits Syst Signal Process 43, 1661–1696 (2024). https://doi.org/10.1007/s00034-023-02530-z

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00034-023-02530-z