Abstract

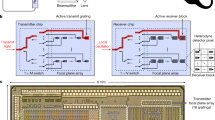

This paper presents the full VLSI implementation of a new high-resolution depth-sensing system on a chip (SoC) based on active infrared structured light. Like the Microsoft Kinect, it estimates 3D scene depth by matching randomized speckle patterns. We present a module that enhances the consistency of the speckle patterns for robust matching and thereby extends the range of depth estimation. We also present a simple and efficient hardware structure for a block-matching-based disparity estimation algorithm, which generates disparity maps in real time. To estimate depth from the disparity maps, we propose a hardware-friendly solution: a lookup table generated from a fitted curve computes and calibrates the depth value in a single step, without explicitly calibrating imaging-sensor parameters such as the baseline length. We have implemented these ideas in an end-to-end SoC on an FPGA. Compared with the Kinect, our depth-sensing SoC has a wider effective range (0.6–4.5 m) and processes images of up to \(1280\times 1024\) pixels at a frame rate of 60 Hz. Its depth resolution is 1 mm at a distance of 0.82 m. Our system is thus superior to the Kinect in operating range, processing frame rate, and resolution.
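The two processing steps named in the abstract, block-matching disparity estimation and table-based depth lookup, can be illustrated with a minimal software sketch. It is not the hardware design described in the paper. It assumes a one-dimensional sum-of-absolute-differences (SAD) search along a single scanline and an inverse-linear curve \(1/Z = a + b\,d\) relating disparity \(d\) to depth \(Z\); the abstract specifies neither the matching cost nor the form of the fitted curve. All function names, the sign convention for the disparity, and the calibration samples are hypothetical.

```python
def sad_disparity(cur_row, ref_row, x, half, search):
    """Return the shift s in [-search, search] that minimizes the SAD between
    the block centred at x in cur_row and the block centred at x + s in ref_row."""
    block = cur_row[x - half : x + half + 1]
    best_shift, best_cost = 0, None
    for s in range(-search, search + 1):
        lo = x + s - half
        hi = x + s + half + 1
        if lo < 0 or hi > len(ref_row):
            continue  # candidate block falls outside the reference row
        cost = sum(abs(p - q) for p, q in zip(block, ref_row[lo:hi]))
        if best_cost is None or cost < best_cost:
            best_shift, best_cost = s, cost
    return best_shift


def fit_inverse_depth(samples):
    """Least-squares fit of 1/Z = a + b*d to (disparity, depth_in_metres) pairs.
    The inverse-linear form is an assumption, not taken from the paper."""
    n = len(samples)
    xs = [d for d, _ in samples]
    ys = [1.0 / z for _, z in samples]
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx
    a = mean_y - b * mean_x
    return a, b


def build_depth_lut(a, b, d_min, d_max):
    """Tabulate depth in millimetres for each integer disparity in [d_min, d_max].
    At run time, depth is then a single table read per pixel."""
    lut = {}
    for d in range(d_min, d_max + 1):
        inv = a + b * d
        lut[d] = round(1000.0 / inv) if inv > 0 else 0  # 0 marks an invalid entry
    return lut


# Hypothetical calibration targets: (measured disparity in pixels, distance in metres).
samples = [(75, 0.8), (50, 1.0), (0, 2.0), (-25, 4.0)]
a, b = fit_inverse_depth(samples)
lut = build_depth_lut(a, b, -25, 75)

# Synthetic scanline: cur_row is ref_row shifted right by two pixels.
ref_row = [3, 9, 1, 7, 4, 8, 2, 6, 5, 0, 9, 3]
cur_row = [0, 0] + ref_row[:-2]
shift = sad_disparity(cur_row, ref_row, x=5, half=1, search=3)
```

Because the curve is fitted directly to measured pairs of disparity and distance, the focal length and baseline never appear as separate parameters in this sketch, which mirrors the single-step calibration idea described above.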

Acknowledgments

This work was supported by the Fundamental Research Funds for the Central Universities, NSFC under Grant No. 61228303, and Shaanxi Industrial Projects.

Cite this article

Yao, H., Ge, C., Hua, G. et al. The VLSI implementation of a high-resolution depth-sensing SoC based on active structured light. Machine Vision and Applications 26, 533–548 (2015). https://doi.org/10.1007/s00138-015-0680-3

DOI: https://doi.org/10.1007/s00138-015-0680-3