Abstract

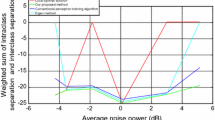

Maximum margin criterion (MMC) is a promising feature extraction method, proposed recently to enhance the well-known linear discriminant analysis (LDA). However, because MMC maximizes L2-norm-based distances between different classes, the features it extracts are not robust enough: in the multi-class case, a faraway class pair may skew the solution away from the desired one, causing nearby class pairs to overlap. To address this problem and enhance the performance of MMC, in this paper we present a novel algorithm called complete robust maximum margin criterion (CRMMC), which has three key components. First, to deemphasize the impact of faraway class pairs, we maximize the L1-norm-based distance between different classes. Second, to eliminate possible correlations between features, we incorporate an orthonormality constraint into CRMMC. Third, to fully exploit the discriminant information contained in the whole feature space, we carry out CRMMC in two orthogonal complementary subspaces and extract discriminant features from both. In this way, CRMMC extracts features iteratively by solving two related constrained optimization problems. To solve the resulting mathematical models, we further develop an effective algorithm that combines the polarity function with the optimal projected gradient method. Extensive experiments on both synthetic and benchmark datasets verify the effectiveness of the proposed method.
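To make the robustness argument concrete, the Python sketch below compares the two criteria on a hypothetical 2-D toy configuration: four nearby classes stacked along one axis and one faraway class. It assumes equal class priors and ignores within-class scatter, so the L2 objective is the sum of squared projected distances between class means (proportional to the between-class term of an MMC-style criterion), while the L1 objective sums the absolute projected distances. The class means, the grid search over directions, and all function names are illustrative assumptions; this is not the CRMMC algorithm itself.

```python
import itertools
import math

# Hypothetical toy class means in 2-D: four nearby classes along the y-axis
# and one faraway class on the x-axis (illustrative values only).
MEANS = [(0.0, 0.0), (0.0, 1.0), (0.0, 2.0), (0.0, 3.0), (3.5, 0.0)]


def projected_diffs(theta):
    """Signed distances w^T (m_i - m_j) over all class pairs, w = (cos theta, sin theta)."""
    c, s = math.cos(theta), math.sin(theta)
    return [c * (a[0] - b[0]) + s * (a[1] - b[1])
            for a, b in itertools.combinations(MEANS, 2)]


def l2_objective(theta):
    # Sum of squared projected distances: the between-class part of an MMC-style criterion.
    return sum(d * d for d in projected_diffs(theta))


def l1_objective(theta):
    # Sum of absolute projected distances: an L1-norm-based between-class term.
    return sum(abs(d) for d in projected_diffs(theta))


def best_direction(objective, steps=1800):
    # Grid search over angles in [0, pi); w and -w give identical objective values.
    thetas = [math.pi * k / steps for k in range(steps)]
    return max(thetas, key=objective)


def min_pair_gap(theta):
    # Smallest separation between any two projected class means.
    return min(abs(d) for d in projected_diffs(theta))


theta_l2 = best_direction(l2_objective)
theta_l1 = best_direction(l1_objective)
for name, theta in (("L2", theta_l2), ("L1", theta_l1)):
    print(f"{name}: angle {math.degrees(theta):.1f} deg, "
          f"smallest gap {min_pair_gap(theta):.3f}")
```

On this configuration the L2-optimal direction lies closer to the x-axis, where the faraway class sits, and leaves adjacent nearby classes only about 0.58 apart; the L1-optimal direction leaves them about 0.75 apart.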


References

Turk, M.A., Pentland, A.P.: Face recognition using eigenfaces. In: Proceedings of the CVPR, pp. 586–591 (1991)

Belhumeur, P., Hespanha, J., Kriegman, D.: Eigenfaces vs. fisherfaces: recognition using class specific linear projection. In: Proceedings of the ECCV, pp. 43–58 (1996)

Li, H., Jiang, T., Zhang, K.: Efficient and robust feature extraction by maximum margin criterion. IEEE Trans. Neural Netw. 17, 157–165 (2006)

Fisher, R.A.: The use of multiple measurements in taxonomic problems. Ann. Human Genet. 7, 179–188 (1936)

Loog, M., Duin, R.P.W., Haeb-Umbach, R.: Multiclass linear dimension reduction by weighted pairwise Fisher criteria. IEEE Trans. Pattern Anal. Mach. Intell. 23, 762–766 (2001)

Tao, D., Li, X., Wu, X., Maybank, S.J.: Geometric mean for subspace selection. IEEE Trans. Pattern Anal. Mach. Intell. 31, 260–274 (2009)

Lotlikar, R., Kothari, R.: Fractional-step dimensionality reduction. IEEE Trans. Pattern Anal. Mach. Intell. 22, 623–627 (2000)

Yu, H., Yang, J.: A direct LDA algorithm for high-dimensional data-with application to face recognition. Pattern Recognit. 34, 2067–2069 (2001)

Jin, Z., Yang, J., Hu, Z., Lou, Z.: Face recognition based on the uncorrelated discriminant transformation. Pattern Recognit. 34, 1405–1416 (2001)

Liang, Y., Li, C., Gong, W., Pan, Y.: Uncorrelated linear discriminant analysis based on weighted pairwise Fisher criterion. Pattern Recognit. 40, 3606–3615 (2007)

Price, J.R., Gee, T.F.: Face recognition using direct, weighted linear discriminant analysis and modular subspaces. Pattern Recognit. 38, 209–219 (2005)

Xu, B., Huang, K., Liu, C.L.: Dimensionality reduction by minimal distance maximization. In: Proceedings of the ICPR, pp. 569–572 (2010)

Xu, B., Huang, K., Liu, C.L.: Maxi-Min discriminant analysis via online learning. Neural Netw. 34, 56–64 (2012)

Ji, S., Ye, J.: Generalized linear discriminant analysis: a unified framework and efficient model selection. IEEE Trans. Neural Netw. 19, 1768–1782 (2008)

Howland, P., Park, H.: Generalizing discriminant analysis using the generalized singular value decomposition. IEEE Trans. Pattern Anal. Mach. Intell. 26, 995–1006 (2004)

Ye, J., Xiong, T.: Computational and theoretical analysis of null space and orthogonal linear discriminant analysis. J. Mach. Learn. Res. 7, 1183–1204 (2006)

Hu, R.-X., Jia, W., Huang, D.-S., Lei, Y.-K.: Maximum margin criterion with tensor representation. Neurocomputing 73, 1541–1549 (2010)

Yang, W., Wang, J., Ren, M., Yang, J., Zhang, L., Liu, G.: Feature extraction based on Laplacian bidirectional maximum margin criterion. Pattern Recognit. 42, 2327–2334 (2009)

Zheng, W., Zou, C., Zhao, L.: Weighted maximum margin discriminant analysis with kernels. Neurocomputing 67, 357–362 (2005)

Lu, G.-F., Lin, Z., Jin, Z.: Face recognition using discriminant locality preserving projections based on maximum margin criterion. Pattern Recognit. 43, 3572–3579 (2010)

Kwak, N.: Principal component analysis based on L1-norm maximization. IEEE Trans. Pattern Anal. Mach. Intell. 30, 1672–1680 (2008)

Li, X., Pang, Y., Yuan, Y.: L1-norm-based 2DPCA. IEEE Trans. Syst. Man Cybern. Part B Cybern. 40, 1170–1175 (2010)

Pang, Y., Yuan, Y.: Outlier-resisting graph embedding. Neurocomputing 73, 968–974 (2009)

Okada, T., Tomita, S.: An optimal orthonormal system for discriminant analysis. Pattern Recognit. 18, 139–144 (1985)

Yang, J.: Why can LDA be performed in PCA transformed space? Pattern Recognit. 36, 563–566 (2003)

Yang, J., Frangi, A.F., Zhang, D., Jin, Z.: KPCA plus LDA: a complete kernel Fisher discriminant framework for feature extraction and recognition. IEEE Trans. Pattern Anal. Mach. Intell. 27, 230–244 (2005)

Bertsekas, D.P.: Nonlinear Programming, 2nd edn. Athena Scientific, Belmont (1999)

Nesterov, Y.: Introductory Lectures on Convex Optimization: A Basic Course. Springer, Berlin (2003)

Liu, K., Cheng, Y.-Q., Yang, J.-Y., Xiao, L.: An efficient algorithm for Foley–Sammon optimal set of discriminant vectors by algebraic method. Int. J. Pattern Recognit. Artif. Intell. 6, 817–829 (1992)

Boyd, S., Vandenberghe, L.: Convex Optimization. Cambridge University Press, Cambridge (2004)

Zhou, T., Tao, D., Wu, X.: NESVM: a fast gradient method for support vector machines. In: Proceedings of the ICDM, pp. 679–688 (2010)

Yale face database (available online). http://cvc.yale.edu/projects/yalefaces/yalefaces.html

Georghiades, A., Belhumeur, P., Kriegman, D.: From few to many: illumination cone models for face recognition under variable lighting and pose. IEEE Trans. Pattern Anal. Mach. Intell. 23, 643–660 (2001)

Samaria, F.S., Harter, A.C.: Parameterisation of a stochastic model for human face identification. In: Proceedings of the 2nd IEEE International Workshop on Applications of Computer Vision, Sarasota, Florida, pp. 138–142 (1994)

UMIST face database (available online). http://images.ee.umist.ac.uk/danny/database.html

Acknowledgments

The authors would like to thank the editor and the anonymous reviewers for their critical and constructive comments and suggestions. This work was partially supported by the National Natural Science Foundation of China (Grant Nos. 61203244, 61403172, 61573171 and 61202318), the China Postdoctoral Science Foundation (Grant Nos. 2015T80511 and 2014M561592), the Talent Foundation of Jiangsu University, China (No. 14JDG066), and the Ministry of Transportation of China (Grant No. 2013-364-836-900).


Appendices

Appendix 1: Proof of Proposition 1

Proof

First, we prove the convergence of Algorithm 1. By step 3 of Algorithm 1, the update of \(x\) is non-increasing; that is, for each \(t\), we have

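Additionally, recall that \(p_i^{(t)}=\operatorname{sign}(a_i^\mathrm{T} x^{(t)})\). Since \(|z|\ge sz\) for any real \(z\) and any \(s\in\{-1,0,1\}\), we have \(|a_i^\mathrm{T} x^{(t+1)}|=\operatorname{sign}(a_i^\mathrm{T} x^{(t+1)})\,a_i^\mathrm{T} x^{(t+1)}\ge \operatorname{sign}(a_i^\mathrm{T} x^{(t)})\,a_i^\mathrm{T} x^{(t+1)}=p_i^{(t)} a_i^\mathrm{T} x^{(t+1)}\) and \(|a_i^\mathrm{T} x^{(t)}|=\operatorname{sign}(a_i^\mathrm{T} x^{(t)})\,a_i^\mathrm{T} x^{(t)}=p_i^{(t)} a_i^\mathrm{T} x^{(t)}\). Subtracting the second relation from the first, it follows that

\[
|a_i^\mathrm{T} x^{(t+1)}|-|a_i^\mathrm{T} x^{(t)}|\ge p_i^{(t)} a_i^\mathrm{T} x^{(t+1)}-p_i^{(t)} a_i^\mathrm{T} x^{(t)}. \tag{19}
\]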

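This inequality holds for each \(i\); summing over \(i\), we obtain

\[
\sum_i |a_i^\mathrm{T} x^{(t+1)}|-\sum_i |a_i^\mathrm{T} x^{(t)}|\ge \sum_i p_i^{(t)} a_i^\mathrm{T} x^{(t+1)}-\sum_i p_i^{(t)} a_i^\mathrm{T} x^{(t)}. \tag{20}
\]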

Adding (20) to (18), we arrive at

In other words, for two consecutive iterates \(x^{(t)}\) and \(x^{(t+1)}\) of Algorithm 1, the value of the original objective \(f(x)\) in (12) or (14) does not increase. Since the objective function in (12) is bounded from below on the feasible region, the monotone convergence theorem guarantees that the non-increasing sequence \(\{f(x^{(t)})\}\) converges; that is, Algorithm 1 converges in objective value.

Second, suppose that Algorithm 1 converges to a limit point \(x^*\). According to subproblem (15), \(x^*\) must then solve the following problem:

Then, the Lagrangian function of (22) can be written as

where \(\lambda_1\) and \(\lambda_2\) are vectors of Lagrange multipliers. Since \(x^*\) is an optimal solution of (22), the KKT conditions [30] give the following equation:

The above equation is exactly the KKT condition of the original problem (12). Hence \(x^*\) satisfies the first-order necessary conditions for a local optimal solution of (12); that is, \(x^*\) is a KKT point of (12).
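The sign-fixing (polarity) argument of this proof can be checked numerically. The Python sketch below uses a hypothetical, unconstrained stand-in for (12), namely \(f(x)=\tfrac{\lambda}{2}\|x\|^2-\sum_i |a_i^\mathrm{T}x|\), which is bounded from below for \(\lambda>0\). With the polarities \(p_i^{(t)}=\operatorname{sign}(a_i^\mathrm{T}x^{(t)})\) fixed, the surrogate \(\tfrac{\lambda}{2}\|x\|^2-\sum_i p_i^{(t)} a_i^\mathrm{T}x\) has the closed-form minimizer \(x=\sum_i p_i^{(t)} a_i/\lambda\). The quadratic term, \(\lambda\), the random data, and all function names are assumptions for illustration; the actual objective and constraints of (12) and (15) are not reproduced here.

```python
import random


def sign(z):
    # Polarity function: returns +1, 0 or -1.
    return (z > 0) - (z < 0)


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def objective(A, x, lam):
    # Hypothetical stand-in for f(x): (lam / 2) * ||x||^2 - sum_i |a_i^T x|.
    return 0.5 * lam * dot(x, x) - sum(abs(dot(a, x)) for a in A)


def polarity_iterations(A, x0, lam, iters=100):
    """Alternate between fixing p_i = sign(a_i^T x) and minimizing the surrogate."""
    x = list(x0)
    history = [objective(A, x, lam)]
    gaps = []  # left-hand side minus right-hand side of inequality (20), per iteration
    for _ in range(iters):
        p = [sign(dot(a, x)) for a in A]
        # The surrogate (lam / 2) * ||x||^2 - sum_i p_i a_i^T x is minimized at A^T p / lam.
        x_new = [sum(p_i * a[j] for p_i, a in zip(p, A)) / lam for j in range(len(x))]
        lhs = sum(abs(dot(a, x_new)) - abs(dot(a, x)) for a in A)
        rhs = sum(p_i * (dot(a, x_new) - dot(a, x)) for p_i, a in zip(p, A))
        gaps.append(lhs - rhs)
        x = x_new
        history.append(objective(A, x, lam))
    return x, history, gaps


rng = random.Random(0)
A = [[rng.gauss(0.0, 1.0) for _ in range(3)] for _ in range(8)]
x0 = [rng.gauss(0.0, 1.0) for _ in range(3)]
x_star, history, gaps = polarity_iterations(A, x0, lam=2.0)
print(history[:4])
```

Because the surrogate is minimized exactly at every step, `history` is non-increasing and every entry of `gaps` is non-negative, mirroring (20) and the monotone-decrease argument. Once the polarities stop changing, the iterate is a fixed point satisfying \(\lambda x^*=\sum_i p_i a_i\), the stationarity condition of this toy problem.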

Appendix 2: Proof of Proposition 2

Proof

To solve the constrained minimization problem (16), we introduce the following Lagrangian function:

where \(\alpha\) and \(\beta\) are vectors of Lagrange multipliers. According to the KKT conditions [30], we obtain the following equation:

Substituting (26) into (25) yields the following dual problem in the variables \(\alpha\) and \(\beta\):

Since the above maximization problem is unconstrained in \(\alpha\) and \(\beta\), its maximum is attained where the gradients with respect to \(\alpha\) and \(\beta\) vanish; it follows that

Solving the above equations and taking into account \(M^\mathrm{T}M=I\), we have

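Inserting (30) into (26) and noting that \(I-MM^\mathrm{T}\) is positive semi-definite (because \(M^\mathrm{T}M=I\), the matrix \(MM^\mathrm{T}\) is an orthogonal projector, and hence so is \(I-MM^\mathrm{T}\)), we finally get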
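The step from \(M^\mathrm{T}M=I\) to the positive semi-definiteness of \(I-MM^\mathrm{T}\) can be checked numerically. The sketch below (Python with NumPy) builds a hypothetical matrix \(M\) with orthonormal columns from a QR factorization of random data and verifies that \(P=I-MM^\mathrm{T}\) is symmetric, idempotent, annihilates the columns of \(M\), and has eigenvalues 0 (multiplicity \(k\)) and 1 (multiplicity \(d-k\)), so it is positive semi-definite. The dimensions \(d\), \(k\) and the random data are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
d, k = 6, 2

# Hypothetical M with orthonormal columns, so that M^T M = I_k.
M, _ = np.linalg.qr(rng.standard_normal((d, k)))
assert np.allclose(M.T @ M, np.eye(k))

# P projects onto the orthogonal complement of span(M).
P = np.eye(d) - M @ M.T

is_symmetric = np.allclose(P, P.T)
is_idempotent = np.allclose(P @ P, P)           # P^2 = P
kills_M = np.allclose(P @ M, np.zeros((d, k)))  # P M = 0
eigvals = np.linalg.eigvalsh(P)                 # real eigenvalues, ascending order
print(is_symmetric, is_idempotent, kills_M, np.round(eigvals, 6))
```

A symmetric idempotent matrix can only have eigenvalues 0 and 1, which is why positive semi-definiteness follows directly from \(M^\mathrm{T}M=I\).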


Cite this article

Chen, X., Cai, Y., Chen, L. et al. Discriminant feature extraction for image recognition using complete robust maximum margin criterion. Machine Vision and Applications 26, 857–870 (2015). https://doi.org/10.1007/s00138-015-0709-7

