Abstract

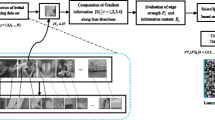

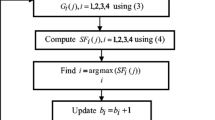

In image fusion based on sparse representation, the adaptive dictionary and the fusion rule strongly influence the quality of multi-modality fusion, and the maximum \(L_{1}\)-norm fusion rule may cause gray-level inconsistency in the fused result. To address this problem, we propose an improved multi-modality image fusion method that combines a joint patch clustering-based adaptive dictionary with sparse representation. First, we use a Gaussian filter to separate the high- and low-frequency information. Second, we adopt a local energy-weighted strategy to fuse the low-frequency components. Third, we use the joint patch clustering algorithm to construct an over-complete adaptive learned dictionary, design a hybrid fusion rule based on the similarity of multiple norms of the sparse representation coefficients, and fuse the high-frequency components. Finally, we obtain the fused image by transforming the result from the frequency domain back to the spatial domain. We evaluate the fusion results quantitatively with fusion metrics and compare the proposed method with state-of-the-art image fusion methods. The proposed method achieves the highest scores in average gradient, general image quality, and edge preservation, and it also gives the best subjective visual quality. An analysis of how the parameters affect the fusion result and the running time indicates that the method is robust. We also successfully extend the method to infrared and visible image fusion and to multi-focus image fusion. In summary, the method offers good robustness and wide applicability.
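The first two steps of the pipeline (Gaussian separation and local energy-weighted low-frequency fusion) can be illustrated with a minimal NumPy sketch. It is not the authors' implementation. The function names, the default `sigma`, the window radius, and the exact weight formula (each pixel weighted by its share of the local energy) are assumptions made for illustration. The high-frequency step here is a simple absolute-maximum rule used only as a stand-in for the dictionary-based sparse-representation fusion, and the final reconstruction is assumed to be the sum of the two fused components.

```python
import numpy as np


def gaussian_kernel(sigma, radius):
    """1-D Gaussian kernel normalized to sum to 1."""
    x = np.arange(-radius, radius + 1, dtype=float)
    k = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    return k / k.sum()


def gaussian_blur(img, sigma=2.0):
    """Separable Gaussian blur with reflect padding (output has the input shape)."""
    radius = int(np.ceil(3 * sigma))
    k = gaussian_kernel(sigma, radius)
    padded = np.pad(img, radius, mode="reflect")
    rows = np.apply_along_axis(lambda r: np.convolve(r, k, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda c: np.convolve(c, k, mode="valid"), 0, rows)


def decompose(img, sigma=2.0):
    """Split an image into a low-frequency layer and a high-frequency residual."""
    img = np.asarray(img, dtype=float)
    low = gaussian_blur(img, sigma)
    return low, img - low


def local_energy(img, radius=1):
    """Sum of squared intensities over a (2*radius+1)^2 window around each pixel."""
    h, w = img.shape
    padded = np.pad(img ** 2, radius, mode="reflect")
    energy = np.zeros((h, w), dtype=float)
    for dy in range(2 * radius + 1):
        for dx in range(2 * radius + 1):
            energy += padded[dy:dy + h, dx:dx + w]
    return energy


def fuse_low(low_a, low_b, radius=1, eps=1e-12):
    """Assumed weighting: each source contributes in proportion to its local energy."""
    e_a = local_energy(low_a, radius)
    e_b = local_energy(low_b, radius)
    w_a = (e_a + eps) / (e_a + e_b + 2 * eps)
    return w_a * low_a + (1.0 - w_a) * low_b


def fuse(img_a, img_b, sigma=2.0):
    """Fuse two registered grayscale images of equal shape."""
    low_a, high_a = decompose(img_a, sigma)
    low_b, high_b = decompose(img_b, sigma)
    low_f = fuse_low(low_a, low_b)
    # Stand-in only: the paper fuses high frequencies via sparse coding
    # over a learned dictionary with a hybrid multi-norm rule.
    high_f = np.where(np.abs(high_a) >= np.abs(high_b), high_a, high_b)
    return low_f + high_f
```

Because the low-frequency weights form a convex combination at every pixel, the fused low-frequency layer always lies between the two source layers, which is one way to avoid abrupt gray-level jumps in smooth regions.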

Availability of Data and Materials

The datasets supporting the conclusions of this article are available in the following repositories: http://www.med.harvard.edu/AANLIB/, https://figshare.com/articles/TN_Image_Fusion_Dataset/1008029, and https://mansournejati.ece.iut.ac.ir/content/lytro-multi-focus-dataset

Acknowledgements

This work was supported by the Scientific and Technological Project of Henan Province, China (Grant No. 202102310536), and the Open Project Program of the Third Affiliated Hospital of Xinxiang Medical University (No. KFKTYB202109).

Author information

Contributions

Chang Wang contributed to conceptualization, methodology, software, validation, and writing—original draft. Yang Wu helped in formal analysis, conceptualization, resources, and software. Junqiang Zhao was involved in methodology, supervision, and writing—review and editing. Yi Yu performed supervision, project administration, and writing—review and editing.

Ethics declarations

Competing interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

About this article

Cite this article

Wang, C., Wu, Y., Yu, Y. et al. Joint patch clustering-based adaptive dictionary and sparse representation for multi-modality image fusion. Machine Vision and Applications 33, 69 (2022). https://doi.org/10.1007/s00138-022-01322-w

DOI: https://doi.org/10.1007/s00138-022-01322-w