Abstract

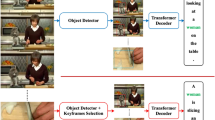

Video captioning, which aims to generate descriptions of the content of a video, is an important problem with many applications. Most existing methods for video captioning are based on deep encoder–decoder models, in particular attention-based models such as the Transformer. However, existing Transformer-based models may not fully exploit the semantic context: they use only the left-to-right context and ignore its right-to-left counterpart. In this paper, we introduce a bidirectional (forward-backward) decoder that exploits both the left-to-right and right-to-left context in a Transformer-based video captioning model. We call the resulting model the bidirectional Transformer (BiTransformer). Specifically, between the encoder and the forward decoder used in existing Transformer-based models (which captures the left-to-right context), we plug in a backward decoder that captures the right-to-left context. Equipped with this bidirectional decoder, the model exploits the semantic context of videos more fully, resulting in better video captions. We demonstrate the effectiveness of our model on two benchmark datasets, MSVD and MSR-VTT, by comparing it with state-of-the-art methods. In particular, in terms of the important evaluation metric CIDEr, the proposed model outperforms the state-of-the-art models by 1.2% on both datasets.
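To make the two directions of context concrete, the following is a minimal sketch of how training sequences for a forward and a backward decoder could be built from one caption. It is an illustration under stated assumptions, not the authors' implementation: the special tokens, the function names, and the choice to train the backward decoder on the reversed caption are all hypothetical, since the abstract does not specify these details.

```python
from typing import List, Tuple

# Hypothetical special tokens; the paper's actual vocabulary is not given here.
BOS, EOS = "<bos>", "<eos>"


def shifted_pair(tokens: List[str]) -> Tuple[List[str], List[str]]:
    """Return (decoder input, prediction target) for teacher forcing."""
    return [BOS] + tokens, tokens + [EOS]


def bidirectional_targets(caption: str):
    """Build sequences for both decoding directions (assumed scheme)."""
    tokens = caption.split()
    forward = shifted_pair(tokens)          # left-to-right context
    backward = shifted_pair(tokens[::-1])   # right-to-left context
    return forward, backward


def causal_mask(n: int) -> List[List[bool]]:
    """mask[i][j] is True when position i may attend to position j (j <= i)."""
    return [[j <= i for j in range(n)] for i in range(n)]


if __name__ == "__main__":
    fwd, bwd = bidirectional_targets("a man is playing guitar")
    print("forward :", fwd)
    print("backward:", bwd)
    print("mask    :", causal_mask(3))
```

Because the backward sequence is simply the reversed caption, both decoders in this sketch can share the same causal mask; only the order of the tokens differs.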

Acknowledgements

The research is supported by the National Natural Science Foundation (NNSF) of China (Nos. 61877031, 61876074). We thank all reviewers for their valuable comments.

About this article

Cite this article

Zhong, M., Zhang, H., Wang, Y. et al. BiTransformer: augmenting semantic context in video captioning via bidirectional decoder. Machine Vision and Applications 33, 77 (2022). https://doi.org/10.1007/s00138-022-01329-3

DOI: https://doi.org/10.1007/s00138-022-01329-3