Abstract

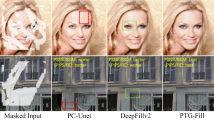

In this work, we propose a two-stage, coarse-to-fine architecture for image inpainting. The framework combines the strengths of several designs from the literature in a single inpainting network. In the first stage, region normalization is applied to generate a coarse, blurry result with the correct structure. In the second stage, contextual attention uses the texture information of the background regions to generate the final result. Although region normalization improves the performance and output quality of the network, it often causes visible color shifts. To solve this problem, we add a perceptual color distance term to the loss function. In quantitative comparisons, the proposed method outperforms existing similar methods in Inception Score, Fréchet Inception Distance, and perceptual color distance. In qualitative comparisons, the proposed method effectively resolves the problem of color shifts.
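The idea behind region normalization can be illustrated with a minimal NumPy sketch: features inside the hole and features in the known background are normalized separately, so the statistics of one region do not bias the other. This is only an illustration of the basic idea, not the modified variant used in this work, which the abstract does not specify. The function name `region_normalize`, the per-sample, per-channel statistics, the mask convention (1 = hole, 0 = known), and the absence of learnable scale and shift parameters are all assumptions made for the example.

```python
import numpy as np


def region_normalize(features, mask, eps=1e-5):
    """Normalize hole and known regions separately (illustrative sketch).

    features: float array of shape (N, C, H, W).
    mask:     array of shape (N, 1, H, W); 1 marks the hole, 0 the known area
              (this convention is an assumption of the sketch).
    """
    mask = mask.astype(features.dtype)
    out = np.zeros_like(features)

    # Process the hole region first, then the known (background) region.
    for region in (mask, 1.0 - mask):
        # Number of pixels in the region per sample; guard against empty regions.
        count = np.maximum(region.sum(axis=(2, 3), keepdims=True), 1.0)

        # Per-sample, per-channel mean and variance restricted to the region.
        mean = (features * region).sum(axis=(2, 3), keepdims=True) / count
        var = (((features - mean) ** 2) * region).sum(axis=(2, 3), keepdims=True) / count

        # Write the normalized values back only inside the region.
        out += region * (features - mean) / np.sqrt(var + eps)

    return out


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = rng.normal(loc=3.0, scale=2.0, size=(2, 4, 8, 8))
    m = np.zeros((2, 1, 8, 8))
    m[:, :, 2:6, 2:6] = 1.0  # a square hole in the middle of each image
    y = region_normalize(x, m)
    print(y.shape)
```

After this step, each region has approximately zero mean and unit variance per channel, regardless of how different the hole and background statistics were beforehand.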

Acknowledgements

This research is supported in part by the Ministry of Science and Technology, Taiwan, under Grant Number 110-2221-E-008-074-MY2.

About this article

Cite this article

Cheng, HY., Yu, CC. & Li, CY. Improved two-stage image inpainting with perceptual color loss and modified region normalization. Machine Vision and Applications 33, 94 (2022). https://doi.org/10.1007/s00138-022-01344-4

DOI: https://doi.org/10.1007/s00138-022-01344-4