Abstract

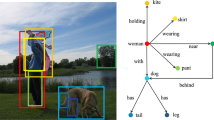

Scene graphs present the semantic highlights of an underlying image in directed graph form. Their automated generation is often restricted to detecting multiple relations for objects, and the diverse attributes of the objects are neglected in the process. We propose a Multiple Attribute Detector that captures structured attribute information of an object, i.e., the attribute type and value, in the form of a triplet. The module can generate multiple such triplets for every detected object in the scene. It can be integrated with existing scene graph generation frameworks, without altering their relation detection mechanism, to yield comprehensive scene graphs. We have also created new datasets for this purpose that include attribute type-value data per object.
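As an illustration of the data layout only, the following minimal Python sketch shows how several attribute triplets per object could sit alongside relation edges in a scene graph. It assumes each triplet has the form (object, attribute type, value); the class names, field names, and sample labels are hypothetical and are not taken from the paper or its datasets.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class AttributeTriplet:
    """Hypothetical triplet layout: (object, attribute type, value)."""
    obj: str
    attr_type: str
    value: str


@dataclass
class SceneGraph:
    """Toy container; relation edges and attribute triplets are stored separately."""
    objects: list = field(default_factory=list)
    relations: list = field(default_factory=list)   # (subject, predicate, object)
    attributes: list = field(default_factory=list)  # AttributeTriplet instances

    def add_attributes(self, obj, pairs):
        # Attach any number of (type, value) pairs to one detected object.
        if obj not in self.objects:
            raise ValueError(f"unknown object: {obj}")
        self.attributes.extend(AttributeTriplet(obj, t, v) for t, v in pairs)

    def attributes_of(self, obj):
        # Return the (type, value) pairs recorded for one object.
        return [(a.attr_type, a.value) for a in self.attributes if a.obj == obj]


# Illustrative labels only; relations are left untouched when attributes are added.
graph = SceneGraph(objects=["car", "road"], relations=[("car", "on", "road")])
graph.add_attributes("car", [("color", "red"), ("state", "parked")])
car_attributes = graph.attributes_of("car")
```

Keeping the attribute list separate from the relation list mirrors the abstract's point that attribute detection can be added without changing how relations are detected.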

About this article

Cite this article

Patil, C., Abhyankar, A. Generating comprehensive scene graphs with integrated multiple attribute detection. Machine Vision and Applications 34, 11 (2023). https://doi.org/10.1007/s00138-022-01361-3

DOI: https://doi.org/10.1007/s00138-022-01361-3