Abstract

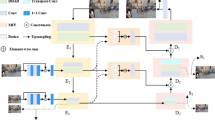

Blind image deblurring is a fundamental and challenging task in computer vision. Although image deblurring has made considerable progress, there is still room for improvement in the visual quality and detail of the restored images. We therefore present an end-to-end image deblurring model based on local and non-local features for non-uniform scene deblurring. Correspondingly, we develop a dense dilated block (DDB) and an improved attention module (IAM) to extract local and non-local features, respectively. DDB focuses on enhancing feature correlation and constructing complex high-dimensional features by exploiting local features. IAM is a gate mechanism that incorporates spatial context information and attention maps based on non-local channel dependencies. Our method surpasses previous state-of-the-art (SOTA) methods on both synthetic datasets and real-world images.
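The abstract does not specify the internals of DDB or IAM, so the following is only an illustrative sketch of the two general ideas they build on, not the model described here. It assumes stride-1 convolutions with hypothetical dilation rates to show how dilation enlarges the local receptive field, and it uses a squeeze-and-excitation style channel gate as a stand-in for a gate driven by cross-channel dependencies. The function names, the dilation rates, and the gate parameters are all assumptions made for illustration.

```python
import math


def receptive_field(kernel_size, dilations):
    """Receptive field along one axis of stacked stride-1 dilated convolutions.

    Each layer with dilation d widens the field by (kernel_size - 1) * d.
    """
    rf = 1
    for d in dilations:
        rf += (kernel_size - 1) * d
    return rf


def channel_gate(feature_maps, weights, biases):
    """Squeeze-and-excitation style gate (an assumed stand-in, not the paper's IAM).

    feature_maps: list of C channels, each a list of rows (H x W floats).
    weights: C x C matrix and biases: length-C list (hypothetical learned values).
    Returns the gated feature maps and the per-channel gate values.
    """
    # Squeeze: one global average per channel.
    means = [
        sum(sum(row) for row in ch) / (len(ch) * len(ch[0]))
        for ch in feature_maps
    ]
    # Excite: every gate is computed from all channel means, so it depends
    # on the other channels, then squashed to (0, 1) with a sigmoid.
    gates = [
        1.0 / (1.0 + math.exp(-(sum(w * m for w, m in zip(w_row, means)) + b)))
        for w_row, b in zip(weights, biases)
    ]
    # Gate: rescale each channel by its gate value.
    gated = [
        [[v * g for v in row] for row in ch]
        for ch, g in zip(feature_maps, gates)
    ]
    return gated, gates


if __name__ == "__main__":
    # Three 3x3 layers: plain versus dilated with assumed rates 1, 2, 4.
    print(receptive_field(3, [1, 1, 1]))
    print(receptive_field(3, [1, 2, 4]))
```

With the same three 3x3 layers, the dilated stack covers a wider neighbourhood than the plain stack at no extra parameter cost, which is the usual motivation for dilated blocks. The gate multiplies each channel by a value in (0, 1), so it can suppress or pass features but never amplify them.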

Acknowledgements

This work was supported in part by the Foundation of Grant 2023-ZJ-950Q and Grant 2022TQ04.

About this article

Cite this article

Qi, Q. Dynamic scene blind image deblurring based on local and non-local features. Machine Vision and Applications 34, 39 (2023). https://doi.org/10.1007/s00138-023-01384-4

DOI: https://doi.org/10.1007/s00138-023-01384-4