Abstract

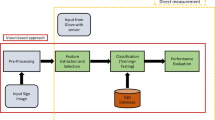

Sign language is the primary form of communication for a large group in society. Sign languages are visual in nature, which makes them very different from spoken languages. Unfortunately, very few hearing people understand sign language, which makes communication with the hearing-impaired extremely difficult. Research in sign language recognition can help reduce the barrier between deaf and hearing people. A lot of work has been done on sign language recognition for numerous languages, such as American Sign Language and Chinese Sign Language, but very little has been done for Pakistan Sign Language. Existing contributions to Pakistan Sign Language recognition are limited to static images rather than dynamic gestures. Furthermore, the dataset available for this language has very few examples per word, which makes it difficult to train deep networks that require a considerable amount of training data. Data augmentation techniques help a network generalize better by providing more variety in the training data. In this paper, a pipeline for Pakistan Sign Language recognition is proposed that incorporates an augmentation unit. To validate the effectiveness of the proposed pipeline, three deep learning models, C3D, I3D, and TSM, are used. Results show that translation and rotation are the two best augmentation techniques for the Pakistan Sign Language dataset. The models trained using our augmentation-supported pipeline outperform other methods that use only the original data. The most suitable model is C3D, which not only achieves an accuracy of 93.33% but also has a lower training time than the other models.
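
To make the two augmentations named above concrete, the following is a minimal NumPy sketch of clip-level translation and rotation. It is an illustration under stated assumptions, not the authors' augmentation unit: the function names, the zero-filled borders, the nearest-neighbour sampling, and the `max_shift` and `max_angle` ranges are all hypothetical choices that the abstract does not specify. The one point it tries to show is that a single random shift and angle are drawn per clip and applied to every frame, so the motion of the gesture stays consistent over time.

```python
import numpy as np


def translate_clip(clip, dx, dy):
    """Shift every frame by (dx, dy) pixels, filling exposed borders with zeros.

    clip has shape (T, H, W) or (T, H, W, C).
    """
    _, h, w = clip.shape[:3]
    if abs(dx) >= w or abs(dy) >= h:
        raise ValueError("shift must be smaller than the frame size")
    out = np.zeros_like(clip)
    # Source and destination windows that overlap after the shift.
    src_x, dst_x = slice(max(0, -dx), min(w, w - dx)), slice(max(0, dx), min(w, w + dx))
    src_y, dst_y = slice(max(0, -dy), min(h, h - dy)), slice(max(0, dy), min(h, h + dy))
    out[:, dst_y, dst_x] = clip[:, src_y, src_x]
    return out


def rotate_clip(clip, angle_deg):
    """Rotate every frame about its centre by angle_deg (nearest-neighbour)."""
    _, h, w = clip.shape[:3]
    theta = np.deg2rad(angle_deg)
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    ys, xs = np.mgrid[0:h, 0:w]
    x0, y0 = xs - cx, ys - cy
    # Inverse mapping: for each output pixel, look up the source pixel.
    src_x = np.rint(np.cos(theta) * x0 + np.sin(theta) * y0 + cx).astype(int)
    src_y = np.rint(-np.sin(theta) * x0 + np.cos(theta) * y0 + cy).astype(int)
    valid = (src_x >= 0) & (src_x < w) & (src_y >= 0) & (src_y < h)
    out = np.zeros_like(clip)
    out[:, valid] = clip[:, src_y[valid], src_x[valid]]
    return out


def augment_clip(clip, rng, max_shift=10, max_angle=10.0):
    """Apply one random translation and one random rotation to a whole clip.

    max_shift (pixels) and max_angle (degrees) are assumed ranges.
    """
    dx, dy = rng.integers(-max_shift, max_shift + 1, size=2)
    angle = rng.uniform(-max_angle, max_angle)
    return rotate_clip(translate_clip(clip, int(dx), int(dy)), angle)
```

In a training loop, `augment_clip(clip, np.random.default_rng(seed))` would be called on each sampled clip before it is passed to the video model, so that every epoch sees slightly different versions of the same small set of recordings.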

Funding

This research did not receive any specific grant from funding agencies in the public, commercial, or not-for-profit sectors.

Ethics declarations

Ethical standards

This statement is to certify that the author list is correct. The Authors also confirm that this research has not been published previously and that it is not under consideration for publication elsewhere. On behalf of all Co-Authors, the Corresponding Author shall bear full responsibility for the submission. There is no conflict of interest. This research did not involve any human participants and/or animals.

Conflict of interest

The authors declare that they have no relevant financial or non-financial interests to disclose. There is no personal relationship that could influence the work reported in this paper. No funding was received for conducting this study. The authors have no conflicts of interest to declare that are relevant to the content of this article.

About this article

Cite this article

Hamza, H.M., Wali, A. Pakistan sign language recognition: leveraging deep learning models with limited dataset. Machine Vision and Applications 34, 71 (2023). https://doi.org/10.1007/s00138-023-01429-8

DOI: https://doi.org/10.1007/s00138-023-01429-8