Abstract

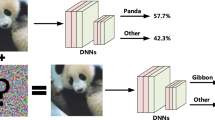

Deep learning is used in many computer-vision applications. However, deep neural networks are vulnerable to adversarial examples, which are crafted specifically to fool a system while remaining imperceptible to humans. In this paper, we propose a detection defense method based on heterogeneous denoising on foreground and background (HDFB). Since the image region that dominates the output classification is usually sensitive to adversarial perturbations, HDFB focuses its defense on the foreground region rather than the whole image. First, HDFB uses a class activation map to segment examples into foreground and background regions. Second, the foreground and background are encoded into square patches. Third, the encoded foreground is zoomed in and out and denoised at two scales. Fourth, the encoded background is denoised once using bilateral filtering. Fifth, the denoised foreground and background patches are decoded. Finally, the decoded foreground and background are stitched together into a denoised sample for classification. If the classifications of the denoised and input images differ, the input image is detected as an adversarial example. Comparison experiments are conducted on CIFAR-10 and MiniImageNet. The average detection rate (DR) against white-box attacks on the test sets of the two datasets is 86.4%. The average DR against black-box attacks on MiniImageNet is 88.4%. The experimental results suggest that HDFB achieves high detection performance on adversarial examples and is robust against white-box and black-box adversarial attacks. However, HDFB is insecure if its defense parameters are exposed to attackers.
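The detection rule described above can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes a single-channel image, takes the class activation map as a precomputed array, uses a simple mean filter as a stand-in for both denoisers (the abstract specifies bilateral filtering for the background), and skips the patch encoding and decoding steps. The names `box_blur`, `two_scale_denoise`, `hdfb_like_detect`, and `cam_threshold`, as well as the 2x zoom factor and the averaging of the two scales, are hypothetical choices made for illustration.

```python
import numpy as np

def box_blur(img, k=3):
    """Mean filter with edge padding (assumed stand-in for the paper's denoisers)."""
    pad = k // 2
    padded = np.pad(img, pad, mode="edge")
    out = np.zeros_like(img, dtype=float)
    h, w = img.shape
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + h, dx:dx + w]
    return out / (k * k)

def two_scale_denoise(img):
    """Denoise at native scale and at 2x zoom, then average the two results.

    The zoom factor and the averaging rule are assumptions, not taken from the paper.
    """
    h, w = img.shape
    native = box_blur(img)
    zoomed = box_blur(np.kron(img, np.ones((2, 2))))          # zoom in 2x, denoise
    restored = zoomed.reshape(h, 2, w, 2).mean(axis=(1, 3))   # zoom back out
    return 0.5 * (native + restored)

def hdfb_like_detect(image, cam, classify, cam_threshold=0.5):
    """Flag `image` as adversarial if denoising changes the predicted class."""
    fg_mask = cam >= cam_threshold * cam.max()   # CAM-based foreground mask
    fg = two_scale_denoise(image)                # foreground: two-scale denoising
    bg = box_blur(image)                         # background: denoised once
    denoised = np.where(fg_mask, fg, bg)         # stitch the regions together
    return classify(denoised) != classify(image), denoised
```

Here `classify` is any callable that maps an image array to a class label. Comparing its output before and after denoising is the only detection criterion, which mirrors the rule stated in the abstract.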

Data Availability

Not applicable.

Acknowledgements

This work was supported by the Anhui Provincial Natural Science Foundation (1808085MF171) and the National Natural Science Foundation of China (61972439, 61976006).

Author information

Contributions

Lifang Zhu: Investigation, Methodology, Code, Software, Writing-original draft. Chao Liu: Investigation, Conceptualization, Code. Zhiqiang Zhang and Yifan Cheng: Conceptualization, Software. Biao Jie: Supervision, Funding acquisition. Xintao Ding: Project administration, Supervision, Conceptualization, Writing-review and editing, Funding acquisition. All authors read and approved the final manuscript.

Ethics declarations

Conflict of interest

The authors have no conflicts of interest to declare that are relevant to the content of this article.

Ethics approval

This article does not contain any studies with human participants or animals performed by any of the authors.

About this article

Cite this article

Zhu, L., Liu, C., Zhang, Z. et al. An adversarial sample detection method based on heterogeneous denoising. Machine Vision and Applications 35, 96 (2024). https://doi.org/10.1007/s00138-024-01579-3

DOI: https://doi.org/10.1007/s00138-024-01579-3