Abstract

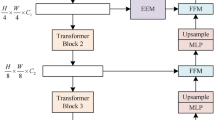

Periodic corrosion inspection is critical for steel structures. However, current manual inspection approaches are time-consuming, subjective, and potentially hazardous. To address these limitations, extensive research over the past decade has assessed the feasibility of using Convolutional Neural Networks (CNNs) to automate corrosion inspection. Meanwhile, Transformer networks have recently emerged as powerful tools in computer vision because they can model complex global relationships. In this paper, a novel hybrid architecture, dubbed CorFormer, is proposed for effective and efficient automation of corrosion inspection. CorFormer fuses Transformer and CNN layers at different stages of the encoder, capturing global context through the Transformer layers while leveraging the inherent inductive bias of CNNs. To bridge the semantic gap between the features produced by the Transformer and CNN layers, a Semantic Gap Merger (SGM) module is introduced after each feature merge operation. The encoder is complemented by a hierarchical decoder that decodes complex features at both large and small scales. CorFormer is compared against state-of-the-art CNN and Transformer architectures for corrosion segmentation and outperforms the best alternative by 2.7% in Intersection over Union (IoU) across 10 validation data splits. Furthermore, it enables real-time inspection at 28 frames per second. Rigorous statistical tests support these findings, and an extensive ablation study validates all design choices.
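The abstract reports segmentation quality as IoU averaged over 10 validation data splits. As a minimal sketch, assuming flattened binary masks (1 = corrosion, 0 = background) and IoU computed on the corrosion class only, the metric and its per-split summary could be computed as below. The function names are hypothetical, and the paper may aggregate IoU differently (for example, per image rather than over all pixels of a split).

```python
from statistics import mean, stdev
from typing import Sequence


def binary_iou(pred: Sequence[int], target: Sequence[int]) -> float:
    """IoU of the corrosion class for two flattened binary masks (hypothetical helper).

    IoU = |pred AND target| / |pred OR target|.
    Assumption: returns 1.0 when neither mask contains corrosion pixels.
    """
    if len(pred) != len(target):
        raise ValueError("masks must have the same number of pixels")
    intersection = sum(1 for p, t in zip(pred, target) if p and t)
    union = sum(1 for p, t in zip(pred, target) if p or t)
    return 1.0 if union == 0 else intersection / union


def summarize_splits(split_ious: Sequence[float]) -> tuple[float, float]:
    """Mean and sample standard deviation of IoU over validation splits (needs >= 2 splits)."""
    return mean(split_ious), stdev(split_ious)


# Usage: one predicted pixel overlaps the ground truth out of three pixels marked by either mask.
iou = binary_iou([1, 1, 0, 0], [1, 0, 1, 0])  # 1 / 3
```

Reporting the spread across splits alongside the mean is what makes a paired statistical comparison between architectures possible, since each model is evaluated on the same 10 splits.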

Data availability

No datasets were generated or analysed during the current study.

Author information

Contributions

A.S. conceptualized and implemented the architecture, performed the experiments, and wrote the manuscript. C.Q. collected the dataset and wrote parts of the introduction and literature review sections. R.S. helped C.Q. with data collection and the literature review, and helped edit the manuscript. M.R.J. provided guidance throughout the project, from conception to completion, and helped edit the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Subedi, A., Qian, C., Sadeghian, R. et al. CorFormer: a hybrid transformer-CNN architecture for corrosion segmentation on metallic surfaces. Machine Vision and Applications 36, 45 (2025). https://doi.org/10.1007/s00138-025-01663-2

DOI: https://doi.org/10.1007/s00138-025-01663-2