Abstract

In this paper, we revisit the security of factoring-based signature schemes built via the Fiat–Shamir transform and show that they can admit tighter reductions to certain decisional complexity assumptions such as the quadratic-residuosity, the high-residuosity, and the \(\phi \)-hiding assumptions. We do so by proving that the underlying identification schemes used in these schemes are a particular case of the lossy identification notion introduced by Abdalla et al. at Eurocrypt 2012. Next, we show how to extend these results to the forward-security setting based on ideas from the Itkis–Reyzin forward-secure signature scheme. Unlike the original Itkis–Reyzin scheme, our construction can be instantiated under different decisional complexity assumptions and has a much tighter security reduction. Moreover, we also show that the tighter security reductions provided by our proof methodology can result in concrete efficiency gains in practice, both in the standard and forward-security setting, as long as the use of stronger security assumptions is deemed acceptable. Finally, we investigate the design of forward-secure signature schemes whose security reductions are fully tight.


1 Introduction

A common paradigm for constructing signature schemes is to apply the Fiat–Shamir transform [28] to a secure three-move canonical identification protocol. In these protocols, the prover first sends a commitment to the verifier, which in turn chooses a random string from the challenge space and sends it back to the prover. Upon receiving the challenge, the prover sends a response to the verifier, which decides whether or not to accept based on the conversation transcript and the public key. To obtain the corresponding signature scheme, one simply makes the signing and verification algorithms non-interactive by computing the challenge as the hash of the message and the commitment. As shown by Abdalla et al. in [1, 2], the resulting signature scheme can be proven secure in the random oracle model as long as the identification scheme is secure against passive adversaries and the commitment has large enough min-entropy. Unfortunately, the reduction to the security of the identification scheme is not tight and loses a factor \(q_h\), where \(q_h\) denotes the number of queries to the random oracle.
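As an illustration of the transform described above, the following Python sketch turns a textbook Guillou–Quisquater-style identification protocol into a signature scheme by hashing the commitment and the message to obtain the challenge. It is only a sketch under stated assumptions: the toy primes, the exponent, the function names, and the reduction of the hash modulo the exponent are all choices made here for illustration, not parameters or algorithms taken from this paper, and the parameters are far too small to offer any security.

```python
import hashlib
import secrets
from math import gcd

# Toy parameters (assumptions for illustration only; insecurely small).
P, Q = 10007, 10009          # two small primes
N = P * Q                    # modulus
E = 65537                    # prime exponent, coprime to (P - 1) * (Q - 1)


def random_unit(n: int) -> int:
    """Sample a uniform element of Z_n^*."""
    while True:
        r = secrets.randbelow(n - 1) + 1
        if gcd(r, n) == 1:
            return r


def keygen():
    """Return (X, x) with secret x in Z_N^* and public X = x^E mod N."""
    x = random_unit(N)
    return pow(x, E, N), x


def challenge(cmt: int, msg: bytes) -> int:
    """Fiat-Shamir step: the challenge is a hash of the commitment and the message."""
    digest = hashlib.sha256(cmt.to_bytes(8, "big") + msg).digest()
    return int.from_bytes(digest, "big") % E   # slightly biased; fine for a sketch


def sign(x: int, msg: bytes):
    r = random_unit(N)                 # prover's randomness
    cmt = pow(r, E, N)                 # commitment (first move)
    c = challenge(cmt, msg)            # replaces the verifier's random challenge
    z = (r * pow(x, c, N)) % N         # response (third move)
    return cmt, z


def verify(X: int, msg: bytes, sig) -> bool:
    cmt, z = sig
    c = challenge(cmt, msg)
    # Accept iff z^E = cmt * X^c mod N, the check of the interactive verifier.
    return pow(z, E, N) == (cmt * pow(X, c, N)) % N
```

A signature is the pair formed by the commitment and the response; the verifier recomputes the challenge itself, which is what makes the protocol non-interactive.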

If one assumes additional properties about the identification scheme, one can avoid impossibility results such as those in [29, 61, 63] and obtain a signature scheme with a tighter proof of security. For instance, in [53], Micali and Reyzin introduced a new method for converting identification schemes into signature schemes, known as the “swap method,” in which they reverse the roles of the commitment and challenge. More precisely, in their transform, the challenge is chosen uniformly at random from the challenge space and the commitment is computed as the hash of the message and the challenge. Although they only provided a tight security proof for the modified version of Micali’s signature scheme [50], their method generalizes to any scheme in which the prover can compute the response given only the challenge and the commitment, such as the factoring-based schemes in [26, 28, 34, 55, 56] (Footnote 1). This is due to the fact that the prover in these schemes possesses a trapdoor (such as the factorization of the modulus in the public key) which allows it to compute the response. On the other hand, their method does not apply to discrete-log-based identification schemes in which the prover needs to know the discrete log of the commitment when computing the response, such as in [62].

In 2003, Katz and Wang [48] showed that tighter security reductions can be obtained even with respect to the Fiat–Shamir transform, by relying on a proof of membership rather than a proof of knowledge. In particular, using this idea, they proposed a signature scheme with a tight security reduction to the hardness of the DDH problem. They also informally mentioned that one could obtain similar results based on the quadratic-residuosity problem by relying on a proof that shows that a set of elements in \({{\mathbb Z}}^*_N\) are all quadratic residues. This result was extended to other settings by Abdalla et al. [5, 6], who presented three new signature schemes based on the hardness of the short exponent discrete log problem [59, 64], on the worst-case hardness of the shortest vector problem in ideal lattices [49, 58], and on the hardness of the Subset Sum problem [40, 51]. Additionally, they also formalized the intuition in [48] by introducing the notion of lossy identification schemes and showing that any such scheme can be transformed into a signature scheme via the Fiat–Shamir transform while preserving the tightness of the reduction.

Tight security from lossy identification. In light of these recent results, we revisit in this paper the security of factoring-based signature schemes built via the Fiat–Shamir transform. Even though the swap method from [53] could be applied in this setting (resulting in a slightly different scheme), our first contribution is to show that these signature schemes already admit tight security reductions to certain decisional complexity assumptions such as the quadratic-residuosity, the high-residuosity [57], and the \(\phi \)-hiding [21] assumptions. We do so by showing that the underlying identification schemes used in these schemes are a particular case of a lossy identification scheme [5, 6]. As shown in Sect. 4.1 in the case of the Guillou–Quisquater signature scheme [34], our tighter security reduction can result in concrete efficiency gains with respect to the swap method. However, this comes at the cost of relying on a stronger security assumption, namely the \(\phi \)-hiding [21] assumption, instead of the plain RSA assumption. Nevertheless, as explained by Kakvi and Kiltz in [44], for carefully chosen parameters, the currently best known attack against the \(\phi \)-hiding problem consists in factoring the corresponding modulus, which is also the best known attack against the plain RSA assumption.

More generally, one needs to be careful when comparing the tightness of different security reductions, especially if the underlying complexity assumptions and security models are different. In order to have meaningful comparisons, we would like to stress that we focus mainly on schemes whose security holds in the random oracle model [16] and whose underlying computational assumptions have comparable complexity estimates, as in the case of the \(\phi \)-hiding [21] and plain RSA assumptions for carefully chosen parameters.

Tighter reductions for forward-secure signatures. Unlike the swap method of Micali and Reyzin, the prover in factoring-based signature schemes built via the Fiat–Shamir transform does not need to know the factorization of the modulus in order to be able to compute the response. Using this crucial fact, the second main contribution of this paper is to extend our results to the forward-security setting. To achieve this goal, we first introduce in Sect. 3 the notion of lossy key-evolving identification schemes and show how the latter can be turned into forward-secure signature schemes using a generalized version of the Fiat–Shamir transform. As in the case of standard signature schemes, this transformation does not incur a loss of a factor \(q_h\) in the security reduction. Nevertheless, we remark that the reduction is not entirely tight as we lose a factor T corresponding to the total number of time periods.

After introducing the notion of lossy key-evolving identification schemes, we show in Sect. 4.2 that a variant of the Itkis–Reyzin forward-secure signature scheme [41] (which can be seen as an extension of the Guillou–Quisquater scheme to the forward-security setting) admits a much tighter security reduction, albeit to a stronger assumption than the plain RSA assumption, namely the \(\phi \)-hiding assumption. However, we point out that the most efficient variant of the Itkis–Reyzin scheme does not rely on the plain RSA assumption but on the strong RSA assumption. There is currently no known reduction between the strong RSA and the \(\phi \)-hiding assumption.

Concrete security. As in the case of standard signature schemes, the tighter security reductions provided by our proof methodology can result in concrete efficiency gains in practice. More specifically, as we show in Sect. 5, our variant of the Itkis–Reyzin scheme outperforms the original scheme for most concrete choices of parameters.

Generic factoring-based signatures and forward-secure signatures. As an additional contribution, we show in Sect. 6 that all the above-mentioned schemes can be seen as straightforward instantiations of a generic factoring-based forward-secure signature scheme. This enables us to not only easily prove the security properties of these schemes, but to also design a new forward-secure scheme based on a new assumption, the gap \(2^t\)-residuosity (Footnote 2). This assumption has been independently considered and proven secure by Benhamouda, Herranz, Joye, and Libert in [9, 42], under a variant of the quadratic-residuosity assumption together with a new reasonable assumption called the “squared Jacobi symbol” assumption.

Impossibility and existential results for tight forward-secure signature schemes. As pointed out above, the reductions for our forward-secure signature schemes are not entirely tight as we still lose a factor T corresponding to the total number of time periods. Hence, an interesting question to ask is whether it is possible to provide a better security reduction for these schemes. To answer this question, we first show in Sect. 7 that the loss of a factor T in the proof of forward security cannot be avoided for a large class of key-evolving signature schemes, which includes the ones considered so far. This is achieved by extending Coron’s impossibility result in [22] to the forward-secure setting.

Next, in Sects. 8 and 9, we show how to avoid these impossibility results and build forward-secure signature schemes whose security reductions are fully tight. To do that, we first propose a new notion of security for signature schemes in Sect. 8: strong unforgeability in a multi-user setting with corruptions (M-SUF-CMA). This notion is related to the security definition given by Menezes and Smart in [54] but unlike theirs, our notion takes into account user corruptions. Next, we propose generic transformations from M-SUF-CMA signature schemes to forward-secure signature schemes which preserve tightness. Finally, in Sect. 9, we provide several instantiations of M-SUF-CMA signature schemes with tight security reductions to standard non-interactive hard problems. The results in Sects. 8 and 9 are mostly of theoretical interest as the schemes that we obtain are significantly less efficient than the ones in preceding sections.

In an independent paper [10, Section 5.1], Bader et al. also studied signature schemes in a multi-user setting (with corruptions). Using a meta-reduction, they showed that M-SUF-CMA cannot be tightly reduced to standard non-interactive hard problems, if secret keys can be re-randomized. On the one hand, contrary to our meta-reduction for forward-secure signature schemes, their meta-reduction allows for rewinding. On the other hand, if we forget about rewindings, our impossibility result implies the one in [10] for M-SUF-CMA signature schemes, as forward-secure signature schemes can be constructed from M-SUF-CMA signature schemes with a tight reduction (Footnote 3).

Publication Note. An abridged version of this paper appeared in the proceedings of PKC 2013 [3]. In this version, we give more precise and formal security definitions and statements, we include complete proofs of security, and we provide new impossibility and existential results for tight forward-secure signature schemes. Most notably, we demonstrate that the loss of a factor T corresponding to the total number of time periods cannot be avoided in the proof of forward security for a large class of key-evolving signature schemes, including all the schemes considered in [3]. In addition, we also show how to avoid these impossibility results and build forward-secure signature schemes whose security reductions are fully tight.

Organization. Section 2 recalls some basic definitions and complexity assumptions used in the paper. Section 3 introduces lossy key-evolving identification schemes and shows how to transform them into forward-secure signature schemes. Section 4 applies our security proof methodology to two cases: the Guillou–Quisquater signature scheme and its extension to the forward-secure setting, which is a variant of the Itkis–Reyzin forward-secure signature scheme in [41]. Section 5 compares our variant of the Itkis–Reyzin forward-secure signature scheme to the original one and to the MMM scheme by Malkin et al. [52]. Section 6 introduces a generic factoring-based forward-secure signature scheme along with various instantiations. Section 7 provides further results regarding the reduction tightness of forward-secure signature schemes. In particular, it shows that the loss of a factor T in the proof of forward security cannot be avoided for a large class of key-evolving signature schemes, which includes the ones considered in the previous sections. Sections 8 and 9 show how to avoid the impossibility results in Sect. 7 and build forward-secure signature schemes with tight security reductions. The appendix provides additional results regarding forward-secure signature schemes. More precisely, Appendix A presents a few relations between different security notions for forward-secure signature schemes. Appendix B presents several results used in the security analysis of our signature schemes. Finally, Appendix C provides additional proofs used in the concrete security analysis in Sect. 5.

2 Preliminaries

2.1 Notation and Conventions

Let \({{\mathbb N}}\) denote the set of natural numbers. If \(N \in {{\mathbb N}}\) and \(N \ge 2\), then \({{\mathbb Z}}_N = {{\mathbb Z}}/N{{\mathbb Z}}\) is the ring of integers modulo N, and \({{\mathbb Z}}_N^*\) is its group of units. If \(e,N\in {{\mathbb N}}\) and \(e,N \ge 2\), then an element \(y \in {{\mathbb Z}}^*_N\) is an e-residue modulo N if there exists an element \(x \in {{\mathbb Z}}^*_N\) such that \(y = x^e \bmod N\). We denote the set of e-residues modulo N by \({\mathsf {HR}}_{N}[e]\).
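To make the notion of e-residue concrete, the following Python sketch enumerates \({\mathsf {HR}}_{N}[e]\) by brute force for a tiny modulus. The modulus 35 and the helper names `units` and `e_residues` are assumptions chosen here for illustration; brute-force enumeration is only feasible because N is tiny.

```python
from math import gcd


def units(n: int) -> list[int]:
    """Elements of Z_n^*, represented as integers in [1, n) coprime to n."""
    return [x for x in range(1, n) if gcd(x, n) == 1]


def e_residues(e: int, n: int) -> set[int]:
    """The set HR_n[e] = { x^e mod n : x in Z_n^* }, computed by brute force."""
    return {pow(x, e, n) for x in units(n)}


N = 35  # 5 * 7, so Z_N^* has (5 - 1) * (7 - 1) = 24 elements

print(len(units(N)))            # 24
print(len(e_residues(2, N)))    # 6:  2 divides both 5 - 1 and 7 - 1
print(len(e_residues(3, N)))    # 8:  3 divides 7 - 1 only
print(len(e_residues(11, N)))   # 24: 11 is coprime to 24, so x -> x^11 is a permutation
```

The example shows that the e-residues form a proper subset of \({{\mathbb Z}}_N^*\) exactly when e shares a factor with the order of the group, and that the subset shrinks as more of the prime factors of N contribute.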

If \(n \in {{\mathbb N}}\), then \(\{0,1\}^n\) denotes the set of n-bit strings, and \(\{0,1\}^*\) is the set of all bit strings. The empty string is denoted \(\bot \). An empty table \(\mathsf {T}\) is denoted [], and \(\mathsf {T}[x]\) is the value of the table at index x and is equal to \(\perp \) if undefined. If x is a string then |x| denotes its length, and if S is a set then |S| denotes its size. If S is finite, then \(x {\mathop {\leftarrow }\limits ^{{\scriptscriptstyle \$}}}S\) denotes the assignment to x of an element chosen uniformly at random from S. If \(\mathcal {A}\) is an algorithm, then \(y \leftarrow \mathcal {A}(x)\) denotes the assignment to y of the output of \(\mathcal {A}\) on input x, and if \(\mathcal {A}\) is randomized, then \(y {\mathop {\leftarrow }\limits ^{{\scriptscriptstyle \$}}}\mathcal {A}(x)\) denotes that the output of an execution of \(\mathcal {A}(x)\) (with fresh coins) is assigned to y. Unless otherwise indicated, an algorithm may be randomized. We denote by \( k \in {{\mathbb N}}\) the security parameter. Let \({{\mathbb P}}\) denote the set of primes and \({{\mathbb P}}_{{\ell _e}}\) denote the set of primes of length \({\ell _e}\). Most of our schemes are in the random oracle model [16].

2.2 Games

The definitions and proofs in this paper use code-based game-playing techniques [17]. In such games, there exist procedures for initialization (Initialize) and finalization (Finalize) and procedures to respond to adversary oracle queries. A game \(\text {G}\) is executed with an adversary \(\mathcal {A}\) as follows. First, Initialize executes and its outputs are the inputs to \(\mathcal {A}\). Then \(\mathcal {A}\) executes, its oracle queries being answered by the corresponding procedures of \(\text {G}\). When \(\mathcal {A}\) terminates, its output becomes the input to the Finalize procedure. The output of the latter, denoted \(\text {G}({\mathcal {A}})\), is called the output of the game, and “\(\text {G}({\mathcal {A}}) \,{\Rightarrow }\,y\)” denotes the event that the output takes a value y. The running time of an adversary is the worst-case time of the execution of the adversary with the game defining its security, so that the execution time of the called game procedures is included.
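The execution model just described can be sketched in a few lines of Python. The game below (guessing a hidden bit, with an oracle that leaks it) is a deliberately trivial, hypothetical example, and the names `GuessGame`, `run_game`, and `oracle_leak` are assumptions introduced here; the sketch only mirrors the order Initialize, adversary with oracle access, Finalize.

```python
import secrets
from typing import Callable


class GuessGame:
    """Toy game: the adversary wins if it outputs a hidden bit."""

    def initialize(self) -> dict:
        self.bit = secrets.randbelow(2)   # hidden state of the game
        self.queries = 0
        return {}                         # inputs handed to the adversary

    def oracle_leak(self) -> int:
        """Oracle procedure: this toy oracle simply reveals the hidden bit."""
        self.queries += 1
        return self.bit

    def finalize(self, adversary_output: int) -> int:
        """The value returned here is the output of the game."""
        return 1 if adversary_output == self.bit else 0


def run_game(game, adversary: Callable) -> int:
    """Execute G(A): Initialize, then A with oracle access, then Finalize."""
    inputs = game.initialize()
    output = adversary(inputs, game.oracle_leak)
    return game.finalize(output)


def leaking_adversary(inputs, leak):
    return leak()      # queries the oracle once and outputs the answer


def blind_adversary(inputs, leak):
    return 0           # never queries the oracle; wins with probability 1/2
```

Calling `run_game(GuessGame(), leaking_adversary)` always yields 1, whereas the blind adversary wins only half of the time.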

Review of code-based game-playing proofs. We recall some background on code-based game playing. The boolean flag \(\mathsf {bad}\) is assumed initialized to \(\mathsf {false}\). We say that games \(\text {G}_i,\text {G}_j\) are identical until \(\mathsf {bad}\) if their programs differ only in statements that (syntactically) follow the setting of \(\mathsf {bad}\) to \(\mathsf {true}\). For example, games \(\text {G}_0,\text {G}_1\) of Fig. 3 are identical until \(\mathsf {bad}\). Let us now recall two lemmas stated in [13, 17].

Lemma 2.1

[17] Let \(\text {G}_i,\text {G}_j\) be identical-until-\(\mathsf {bad}\) games, and \(\mathcal {A}\) an adversary. Then we have \(|{\Pr \left[ \,{\text {G}_i(\mathcal {A}) \,{\Rightarrow }\,1}\,\right] } - {\Pr \left[ \,{\text {G}_j(\mathcal {A}) \,{\Rightarrow }\,1}\,\right] }| \le {\Pr \left[ \,{\text {G}_i(\mathcal {A}) \text{ sets } \mathsf {bad}}\,\right] } \).

Lemma 2.2

[13] Let \(\text {G}_i,\text {G}_j\) be identical-until-\(\mathsf {bad}\) games, and \(\mathcal {A}\) an adversary. Let \(\mathsf {Good}_i,\mathsf {Good}_j\) be the events that \(\mathsf {bad}\) is never set in games \(\text {G}_i,\text {G}_j\), respectively. Then, \({\Pr \left[ \,{\text {G}_i(\mathcal {A}) \,{\Rightarrow }\,1 \wedge \mathsf {Good}_i}\,\right] } = {\Pr \left[ \,{\text {G}_j(\mathcal {A}) \,{\Rightarrow }\,1 \wedge \mathsf {Good}_j}\,\right] }\).

2.3 Statistical Distance

Let \({\mathsf {D}}_1\) and \({\mathsf {D}}_2\) be two probability distributions over a finite set \(\mathcal {S}\) and let X and Y be two random variables with these two respective distributions. The statistical distance between \({\mathsf {D}}_1\) and \({\mathsf {D}}_2\) is also the statistical distance between X and Y:

\[ \frac{1}{2} \sum _{x \in \mathcal {S}} \left| {\Pr \left[ \,{X = x}\,\right] } - {\Pr \left[ \,{Y = x}\,\right] } \right| . \]

If the statistical distance between \({\mathsf {D}}_1\) and \({\mathsf {D}}_2\) is less than or equal to \(\varepsilon \), we say that \({\mathsf {D}}_1\) and \({\mathsf {D}}_2\) are \(\varepsilon \)-close or are \(\varepsilon \)-statistically indistinguishable. If \({\mathsf {D}}_1\) and \({\mathsf {D}}_2\) are 0-close, we say that \({\mathsf {D}}_1\) and \({\mathsf {D}}_2\) are perfectly indistinguishable.

We use the following lemma.

Lemma 2.3

Let \(S_0\) and \(S_1\) be two finite sets such that \(S_1 \subseteq S_0\). Let \({\mathsf {D}}_0\) and \({\mathsf {D}}_1\) be the uniform distributions over \(S_0\) and \(S_1\), respectively. Let \(N_0=|S_0|\) and \(N_1 = |S_1|\) be the cardinalities of \(S_0\) and \(S_1\), respectively. Then, the statistical distance between \({\mathsf {D}}_0\) and \({\mathsf {D}}_1\) is \(1-N_1/N_0\).

Proof

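The statistical distance is half the sum, over \(x \in S_0\), of the absolute difference between the probabilities that \({\mathsf {D}}_0\) and \({\mathsf {D}}_1\) assign to x. This difference is \(1/N_1 - 1/N_0\) for each \(x \in S_1\) and \(1/N_0\) for each \(x \in S_0 \setminus S_1\). The statistical distance is therefore:

\[ \frac{1}{2} \left( N_1 \left( \frac{1}{N_1} - \frac{1}{N_0} \right) + (N_0 - N_1) \, \frac{1}{N_0} \right) = \frac{1}{2} \left( 2 - \frac{2 N_1}{N_0} \right) = 1 - \frac{N_1}{N_0} . \]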

\(\square \)
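Lemma 2.3 can be checked numerically on small sets. The Python sketch below computes the statistical distance as half the sum of the absolute differences of the probabilities (the usual definition, assumed here) using exact rational arithmetic. The sets \(S_0 = \{0,\ldots ,9\}\) and \(S_1 = \{0,\ldots ,3\}\) and the helper names are arbitrary choices made for illustration.

```python
from fractions import Fraction


def uniform(support) -> dict:
    """Uniform distribution over a finite non-empty set, as {outcome: probability}."""
    support = set(support)
    return {x: Fraction(1, len(support)) for x in support}


def statistical_distance(d0: dict, d1: dict) -> Fraction:
    """Half the sum of |d0(x) - d1(x)| over all outcomes x."""
    outcomes = set(d0) | set(d1)
    total = sum((abs(d0.get(x, 0) - d1.get(x, 0)) for x in outcomes), Fraction(0))
    return total / 2


S0 = range(10)   # N_0 = 10
S1 = range(4)    # N_1 = 4, and S1 is a subset of S0

sd = statistical_distance(uniform(S0), uniform(S1))
print(sd)        # 3/5, which equals 1 - N_1/N_0 = 1 - 4/10
```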

2.4 Complexity Assumptions

The security of the signature schemes being analyzed in this paper will be based on decisional assumptions over composite-order groups: the quadratic-residuosity assumption, the high-residuosity assumption, the \(\phi \)-hiding assumption, and a new assumption called the gap \(2^t\)-residuosity. We also need to recall the strong RSA assumption to be able to compare our scheme with the Itkis–Reyzin scheme [41].

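For all these assumptions, the underlying problem consists in distinguishing two distributions \(D_1\) and \(D_2\). More precisely, an adversary \(\mathcal {D}\) is said to \((t,\varepsilon )\)-solve or \((t,\varepsilon )\)-break the underlying problem if it runs in time t and

\[ \left| {\Pr \left[ \,{\mathcal {D}(x) = 1 \,:\, x {\mathop {\leftarrow }\limits ^{{\scriptscriptstyle \$}}}D_1}\,\right] } - {\Pr \left[ \,{\mathcal {D}(x) = 1 \,:\, x {\mathop {\leftarrow }\limits ^{{\scriptscriptstyle \$}}}D_2}\,\right] } \right| \ge \varepsilon . \]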

Then the underlying problem is said to be \((t,\varepsilon )\)-hard if no adversary can \((t,\varepsilon )\)-solve it.

\(\phi \)-hiding assumption [21, 45] The \(\phi \)-hiding assumption, introduced by Cachin et al. [21], states that it is hard for an adversary to tell whether a prime number e divides the order \(\phi (N)\) of the group \({{\mathbb Z}}^*_N\). In this paper, we use a very slight variant of the formulation in [45] (Footnote 4).

More formally, let \({\ell _N}\) be a function of \( k \), let \({\ell _e}\) be a public positive constant smaller than \({\textstyle \frac{1}{4}} {\ell _N}\). Let \({\mathsf {RSA}}_{{\ell _N}}\) denote the set of all tuples \((N,p_1,p_2)\) such that \(N=p_1 p_2\) is an \({\ell _N}\)-bit number which is the product of two distinct \({\ell _N}/2\)-bit primes, as in [45]. N is called an RSA modulus. Likewise, let R be a relation on \(p_1\) and \(p_2\). We denote by \({\mathsf {RSA}}_{{\ell _N}}[R]\) the subset of \({\mathsf {RSA}}_{{\ell _N}}\) for which the relation R holds. The \(\phi \)-hiding assumption states that the two following distributions are computationally indistinguishable:

where \(\phi (N)\) is the order of \({{\mathbb Z}}^*_N\).

We remark that these two distributions can be sampled efficiently if we assume the widely-accepted Extended Riemann Hypothesis (Conjecture 8.4.4 of [18]).

Quadratic residuosity. The quadratic-residuosity assumption states that it is hard to distinguish a 2-residue (a.k.a. a quadratic residue) from an element of Jacobi symbol 1, modulo an RSA modulus N.
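The two sets involved in this assumption can be enumerated for a toy modulus. The Python sketch below implements the standard binary algorithm for the Jacobi symbol, which does not need the factorization of N, and compares the elements of Jacobi symbol 1 with the quadratic residues. The modulus \(7 \cdot 11\) and all names are assumptions made for illustration; the squares are found by brute force, which is only possible because the modulus is tiny.

```python
from math import gcd


def jacobi(a: int, n: int) -> int:
    """Jacobi symbol (a/n) for odd n > 0, computed without factoring n."""
    assert n > 0 and n % 2 == 1
    a %= n
    result = 1
    while a != 0:
        while a % 2 == 0:              # pull out factors of 2
            a //= 2
            if n % 8 in (3, 5):
                result = -result
        a, n = n, a                    # quadratic reciprocity
        if a % 4 == 3 and n % 4 == 3:
            result = -result
        a %= n
    return result if n == 1 else 0


N = 7 * 11
units = [x for x in range(1, N) if gcd(x, N) == 1]
jacobi_one = {y for y in units if jacobi(y, N) == 1}   # elements of Jacobi symbol 1
squares = {pow(x, 2, N) for x in units}                # quadratic residues

print(len(units), len(jacobi_one), len(squares))   # 60 30 15
print(squares <= jacobi_one)                        # True
```

Every quadratic residue has Jacobi symbol 1, but only half of the elements of Jacobi symbol 1 are quadratic residues; the Jacobi symbol alone therefore does not separate the two cases.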

More formally, let N be an RSA modulus. We recall that \({\mathsf {HR}}_{N}[2]\) denotes the set of all 2-residues modulo N. Let \({\mathsf {J}}_{N}[2]\) be the set of elements in \({{\mathbb Z}}^*_N\) with Jacobi symbol 1. The quadratic-residuosity assumption states that the two following distributions are computationally indistinguishable:

High residuosity. Let e be an RSA modulus and \(N = e^2\). The high-residuosity assumption states that it is hard to distinguish an e-residue modulo N from an element of \({{\mathbb Z}}^*_{N}\).

More formally, let \({\mathsf {J}}_{N}[e] = {{\mathbb Z}}^*_N\). The high-residuosity assumption states that the two following distributions are computationally indistinguishable:

Gap \(2^t\)-residuosity. We introduce the gap \(2^t\)-residuosity assumption, which states that it is hard for an adversary to decide whether a given element y (in \({{\mathbb Z}}^*_N\)) of Jacobi symbol 1 is a \(2^t\)-residue or is not even a 2-residue, when \(2^t\) divides \(p_1-1\) and \(p_2-1\).

More formally, this assumption states that the two following distributions are computationally indistinguishable:

This assumption has been independently considered and proven secure by Benhamouda, Herranz, Joye, and Libert in [9, 42], under a variant of the quadratic residuosity assumption together with a new reasonable assumption called the “squared Jacobi symbol” assumption.

Strong RSA. The strong RSA assumption states that, given an element \(y \in {{\mathbb Z}}^*_N\), it is hard for an adversary to find an integer \(2 \le e \le 2^{\ell _e}\) and an element \(x \in {{\mathbb Z}}^*_N\) such that \(y = x^e \bmod N\), where \({\ell _e}\) is a function of the security parameter \( k \). In this article, we actually use the variant of the strong RSA assumption described in [41]. As explained in the latter article, compared to the original version of the assumption introduced independently in [14, 27], we restrict N to be a product of two safe primes (Footnote 5) and we restrict e to be at most \(2^{\ell _e}\) for some value \({\ell _e}\). We remark that, formally, we have defined a family of assumptions indexed by \({\ell _e}\), a function of \( k \).

2.5 Forward-Secure Signature Schemes

A forward-secure signature scheme is a key-evolving signature scheme in which the secret key is updated periodically while the public key remains the same throughout the lifetime of the scheme [11]. Each time period has a secret signing key associated with it, which can be used to sign messages with respect to that time period. The validity of these signatures can be checked with the help of a verification algorithm. At the end of each time period, the signer in possession of the current secret key can generate the secret key for the next time period via an update algorithm. Moreover, old secret keys are erased after a key update.

Formally, a key-evolving signature scheme is defined by a tuple of algorithms \(({\mathsf {KG}},{\mathsf {Sign}},{\mathsf {Ver}},{\mathsf {Update}})\) and a message space \(\mathcal {M}\), providing the following functionality. Via \(( pk , sk _1) {\mathop {\leftarrow }\limits ^{{\scriptscriptstyle \$}}}{\mathsf {KG}}(1^ k ,1^T)\), a user can run the probabilistic key generation algorithm \({\mathsf {KG}}\) to obtain a pair \(( pk , sk _1)\) of public and secret keys for a given security parameter \( k \) and a given total number of periods T. \( sk _1\) is the secret key associated with time period 1. Via \( sk _{i+1} \leftarrow {\mathsf {Update}}( sk _{i})\), the user in possession of the secret key \( sk _i\) associated with time period \(i \le T\) can generate a secret key \( sk _{i+1}\) associated with time period \(i+1\). By convention, \( sk _{T+1}=\bot \). Via \({(\sigma ,i)} {\mathop {\leftarrow }\limits ^{{\scriptscriptstyle \$}}}{\mathsf {Sign}}( sk _{i}, M )\), the user in possession of the secret key \( sk _i\) associated with time period \(i \le T\) can generate a signature \({(\sigma ,i)}\) for a message \( M \in \mathcal {M}\) for period i. Finally, via \(d\leftarrow {\mathsf {Ver}}( pk ,{(\sigma ,i)}, M )\), one can run the deterministic verification algorithm to check if \(\sigma \) is a valid signature for a message \( M \in \mathcal {M}\) for period i and public key \( pk \), where \(d=1\) if the signature is valid and \(d=0\) otherwise. For correctness, it is required that for all honestly generated keys \(( sk _1,\ldots , sk _T)\) and for all messages \( M \in \mathcal {M}\), \({\mathsf {Ver}}( pk ,{\mathsf {Sign}}( sk _i, M ), M )=1\) holds with all but negligible probability.
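The syntax above can be summarized as a small programming interface. The Python sketch below is only meant to illustrate the life cycle of the keys and the correctness condition; the class `ToyKeyEvolvingScheme` is a hypothetical placeholder introduced here, and it is deliberately not a signature scheme (its public key doubles as a MAC key, so it provides neither unforgeability nor forward security).

```python
import hashlib
import hmac
import secrets


class ToyKeyEvolvingScheme:
    """Placeholder with the (KG, Update, Sign, Ver) interface.

    NOT a signature scheme: the public key doubles as the MAC key, so it offers
    no unforgeability and no forward security. It only illustrates the syntax.
    """

    def __init__(self, total_periods: int):
        self.T = total_periods

    def kg(self):
        pk = secrets.token_bytes(32)
        return pk, (1, pk)                       # (pk, sk_1)

    def update(self, sk):
        period, key = sk
        if period >= self.T:
            return None                          # convention: sk_{T+1} is empty
        return (period + 1, key)

    def _tag(self, key: bytes, period: int, msg: bytes) -> bytes:
        data = period.to_bytes(4, "big") + msg
        return hmac.new(key, data, hashlib.sha256).digest()

    def sign(self, sk, msg: bytes):
        period, key = sk
        return self._tag(key, period, msg), period   # the pair (sigma, i)

    def ver(self, pk: bytes, signature, msg: bytes) -> int:
        sigma, period = signature
        if not 1 <= period <= self.T:
            return 0
        return int(hmac.compare_digest(sigma, self._tag(pk, period, msg)))


def check_correctness(scheme, messages) -> bool:
    """Sign and verify every message in every period, updating the key in between."""
    pk, sk = scheme.kg()
    period = 1
    while sk is not None:
        for msg in messages:
            sigma, i = scheme.sign(sk, msg)
            if i != period or scheme.ver(pk, (sigma, i), msg) != 1:
                return False
        sk = scheme.update(sk)                   # the old key is discarded here
        period += 1
    return period == scheme.T + 1                # exactly T periods were used
```

For instance, `check_correctness(ToyKeyEvolvingScheme(4), [b"a", b"b"])` walks through the four periods and returns `True`.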

Existential and strong forward security. Informally, a key-evolving signature scheme is existentially forward-secure under adaptive chosen-message attack (\(\mathrm {EUF}\hbox {-}\mathrm {CMA}\)), if it is infeasible for an adversary (also called a forger) to forge a signature \(\sigma ^*\) on a message \( M ^*\) for a time period \(i^*\), even with access to the secret key for a period \(i > i^*\) (and thus to all the subsequent secret keys; this period i is called the break-in period) and to a signing oracle that signs messages of its choice for any period, as long as it has not requested a signature on \( M ^*\) for period \(i^*\) from the signing oracle.

This notion generalizes existential unforgeability under adaptive chosen-message attacks (\(\mathrm {EUF}\hbox {-}\mathrm {CMA}\) for signature schemes) [30] to key-evolving signature schemes. It is a slightly stronger variant of the definition in [11]. Compared to [11], we do not restrict the adversary to only perform signing queries with respect to the current time period and we allow multiple Break-In queries (the only break-in period taken into account is the minimum of all these periods). The advantage of the first change is that the game is simpler than the one with the definition of Bellare and Miner in [11]. Concerning the second change, the classical notion, which only allows a single Break-In query, is equivalent, as it is possible to guess which query corresponds to the minimum period. However, this guess does not preserve the tightness of the reduction. In any case, it seems that most current schemes (maybe even all of them) also satisfy our stronger definition, using nearly the same reductions.

In the remainder of the paper, we also use a stronger notion: strong forward security (\(\mathrm {SUF}\hbox {-}\mathrm {CMA}\)). In this notion, the forger is allowed to produce a signature \(\sigma ^*\) on a message \( M ^*\) for a period \(i^*\), such that the triple \(( M ^*,i^*,\sigma ^*)\) is different from all the triples produced by the signing oracle. In other words, compared to existential forward security, the adversary wins even if the message \( M ^*\) is previously signed, as long as the signature is new. In the sequel, we omit the adjective “strong” when the latter is clear from the context.
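The winning conditions described informally above can be sketched as game bookkeeping in Python. This sketch is reconstructed from the prose only and is not the code of the games in Fig. 1; the class and method names are assumptions, and `ToyScheme` is a hypothetical, intentionally insecure placeholder that serves only to exercise the game.

```python
import hashlib
import hmac
import secrets


class ToyScheme:
    """Insecure placeholder (pk equals the MAC key); it only exercises the game."""

    def __init__(self, total_periods: int):
        self.T = total_periods

    def kg(self):
        pk = secrets.token_bytes(32)
        return pk, (1, pk)

    def update(self, sk):
        return None if sk[0] >= self.T else (sk[0] + 1, sk[1])

    def _tag(self, key, period, msg):
        return hmac.new(key, period.to_bytes(4, "big") + msg, hashlib.sha256).digest()

    def sign(self, sk, msg):
        return self._tag(sk[1], sk[0], msg), sk[0]

    def ver(self, pk, signature, msg):
        sigma, period = signature
        return int(hmac.compare_digest(sigma, self._tag(pk, period, msg)))


class ForwardSecurityGame:
    """Existential (strong=False) or strong (strong=True) forward security."""

    def __init__(self, scheme, strong: bool = False):
        self.scheme, self.T, self.strong = scheme, scheme.T, strong

    def initialize(self):
        self.pk, sk = self.scheme.kg()
        self.keys = {}                       # period -> secret key
        for i in range(1, self.T + 1):
            self.keys[i] = sk
            sk = self.scheme.update(sk)
        self.signed = set()                  # triples (M, i, sigma) given out
        self.break_in = self.T + 1           # minimum break-in period so far
        return self.pk

    def sign_oracle(self, msg: bytes, period: int):
        sigma, i = self.scheme.sign(self.keys[period], msg)
        self.signed.add((msg, i, sigma))
        return sigma, i

    def break_in_oracle(self, period: int):
        self.break_in = min(self.break_in, period)   # several queries are allowed
        return self.keys[period]

    def finalize(self, msg: bytes, signature) -> int:
        sigma, period = signature
        if self.scheme.ver(self.pk, signature, msg) != 1:
            return 0
        if period >= self.break_in:          # forgery must precede every break-in
            return 0
        if self.strong:
            fresh = (msg, period, sigma) not in self.signed
        else:
            fresh = all((m, i) != (msg, period) for m, i, _ in self.signed)
        return 1 if fresh else 0
```

With this placeholder scheme, an adversary can win trivially by signing with the public key for a period before the break-in, which is exactly what a real forward-secure scheme must prevent; replaying an oracle answer or forging for the break-in period itself makes `finalize` return 0.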

More formally, let us consider the games \(\mathrm {EUF}\hbox {-}\mathrm {CMA}\) and \(\mathrm {SUF}\hbox {-}\mathrm {CMA}\) depicted in Fig. 1. We then say that a key-evolving signature scheme is \((t,q_h,q_s,\varepsilon )\)-existentially forward-secure, if for any adversary \(\mathcal {A}\) running in time at most t and making at most \(q_h\) queries to the random oracle and \(q_s\) queries to the signing oracle: \({\Pr \left[ \,{\mathrm {EUF}\hbox {-}\mathrm {CMA}(\mathcal {A}) \,{\Rightarrow }\,1}\,\right] } \le \varepsilon \). And it is \((t,q_h,q_s,\varepsilon )\)-forward-secure, if for any such adversary: \({\Pr \left[ \,{\mathrm {SUF}\hbox {-}\mathrm {CMA}(\mathcal {A}) \,{\Rightarrow }\,1}\,\right] } \le \varepsilon \).

Fig. 1. Games defining the \(\mathrm {EUF}\hbox {-}\mathrm {CMA}\), \(\mathrm {SUF}\hbox {-}\mathrm {CMA}\), \(\mathrm {W}\hbox {-}\mathrm {EUF}\hbox {-}\mathrm {CMA}\) and \(\mathrm {W}\hbox {-}\mathrm {SUF}\hbox {-}\mathrm {CMA}\) security of a key-evolving signature scheme. Boxed lines marked (e) are only for the existential variants, boxed lines marked (s) are only for the strong variants, and boxed lines marked (sel) are only for the selective variants.

Selective security notions. In addition to the previous classical security notions, we also consider two weaker notions: selective existential forward security and selective strong forward security, which are useful for the comparison of different schemes. In these notions, the time period of the forgery is chosen at the beginning at random but not disclosed to the adversary, and the adversary loses when it does not produce a forgery for the chosen time period. We could have opted for a more classical selective version where the adversary chooses the time period of the forgery at the beginning, but that would have made notation more cumbersome.

More precisely, when defining the selective existential forward security and selective strong forward security notions, the challenger picks a period \({\tilde{\imath }}\) uniformly at random at the beginning and rejects the forged signature if it does not correspond to the period \({\tilde{\imath }}\), as in the selective variants of the games depicted in Fig. 1. We then say that a key-evolving signature scheme is \((t,q_h,q_s,\varepsilon ,\delta )\)-selectively existentially/strongly forward-secure if there is no adversary \(\mathcal {A}\) (running in time at most t and making at most \(q_h\) queries to the random oracle and \(q_s\) signing queries) such that, with probability at least \(\delta \), the challenger chooses a period \({\tilde{\imath }}\) and a key pair \(( pk , sk _1)\) for which \(\mathcal {A}\) forges a correct signature for period \({\tilde{\imath }}\) with probability at least \(\varepsilon \). The idea of this definition is to separate the success probability for a given period and a given key pair from the choice of the period and the key pair. This enables us to repeat the experiment with the same period and key pair to increase the success probability of the adversary (for a given period and key pair). The main reason we need to keep the same period and key pair at each repetition is that our reduction “embeds” the challenge of the underlying assumption into them, and this challenge cannot be changed between two repetitions, as the assumptions we use are not known to be random self-reducible.

Finally, we remark that our selective notions are actually extensions of the security definition of Micali and Reyzin in [53]. These notions are weaker than the previous ones in the following way: if a scheme is \((t,q_h,q_s, T \varepsilon \delta )\)-existentially/strongly forward-secure, then it is \((t,q_h,q_s,\varepsilon ,\delta )\)-selectively existentially/strongly forward-secure, as proven in Appendix A. As for strong forward security, we sometimes omit the adjective “strong” in selective strong forward security when it is clear from the context.
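The factor T in this implication can be traced with a short calculation. The following is only a sketch of the argument (the full proof is in Appendix A); \(\mathsf {Win}\) and \(\mathsf {Win}_{\mathrm {sel}}\) are hypothetical names, introduced here, for the events that \(\mathcal {A}\) wins the non-selective and the selective game, respectively, and \(i^*\) denotes the period of the forgery. Since \({\tilde{\imath }}\) is uniform over \(\{1,\ldots ,T\}\) and never disclosed to \(\mathcal {A}\), it is independent of the forgery, so that

\[
\Pr \left[ \mathsf {Win}_{\mathrm {sel}} \right] = \Pr \left[ \mathsf {Win} \wedge i^* = {\tilde{\imath }} \right] = \frac{1}{T} \Pr \left[ \mathsf {Win} \right] .
\]

If \(\mathcal {A}\) \((t,q_h,q_s,\varepsilon ,\delta )\)-breaks the selective notion, then \(\Pr \left[ \mathsf {Win}_{\mathrm {sel}} \right] \ge \varepsilon \delta \), and hence

\[
\Pr \left[ \mathsf {Win} \right] = T \cdot \Pr \left[ \mathsf {Win}_{\mathrm {sel}} \right] \ge T \varepsilon \delta ,
\]

which is consistent with the parameters \((t,q_h,q_s,T\varepsilon \delta )\) stated above.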

Remark 2.4

In order to be able to compare different schemes, as in [53], we suppose that any attacker which \((t,q_h,q_s,\varepsilon )\)-breaks the selective forward security of a scheme (where there is no \(\delta \), and \(\varepsilon \) is the classical success probability) also \((t,q_h,q_s,\varepsilon ,\delta =1/2)\)-breaks it (see Footnote 6). Under this assumption, \((t,q_h,q_s,\varepsilon ,1/2)\)-selective forward security implies \((t,q_h,q_s,T \varepsilon )\)-forward security. As shown in Sect. 5.4, this enables a reasonably fair comparison if we consider that a \((t,q_h,q_s,T\varepsilon )\)-forward-secure scheme has \(\log _2(t / (T\varepsilon ))\) bits of security, which is the intuitive notion of security.

At first, we might think that just assuming that a \((t,q_h,q_s,T\varepsilon )\)-forward-secure scheme provides \(\log _2(t / (T\varepsilon ))\) bits of security should be sufficient to do fair comparisons. This would indeed be sufficient for our new security reductions, as they basically ensure that if some assumption (e.g., the \(\phi \)-hiding assumption) is \((t',\varepsilon ')\)-hard, then the signature scheme is \((t,q_h,q_s,T\varepsilon )\)-forward-secure for \(t \approx t'\) and \(\varepsilon \approx \varepsilon '\). But unfortunately, security reductions based on the forking lemma (to which we want to compare our new security reductions) only ensure that if some assumption (e.g., the RSA assumption) is \((t',\varepsilon ')\)-hard, then the signature scheme is \((t,q_h,q_s,T\varepsilon )\)-forward-secure for \(t \approx t'\) and \(\varepsilon \approx \varepsilon '^2 / q_h\). In that case, \(\log _2(t / (T\varepsilon ))\) is not even well defined. That is why, following [53], we introduced the notions of \((t,q_h,q_s,\varepsilon ,\delta )\)-selective-(existential)-forward-security to solve this problem (see Theorem C.1 and also the discussions around Theorem 2 in [53]).
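The bit-security measure \(\log _2(t / (T\varepsilon ))\) used in this remark can be evaluated with a small helper. This is a minimal sketch; the function name and the sample parameters are illustrative assumptions, not values taken from the paper.

```python
import math


def bits_of_security(t: float, T: int, eps: float) -> float:
    """Bits of security log2(t / (T * eps)) of a (t, q_h, q_s, T*eps)-forward-secure scheme.

    t   : running time of the adversary
    T   : number of time periods
    eps : success-probability parameter (per period, before the factor T)
    """
    if t <= 0 or T <= 0 or eps <= 0:
        raise ValueError("t, T and eps must be positive")
    return math.log2(t / (T * eps))


# Hypothetical parameters: t = 2^40, T = 2^10 periods, eps = 2^-50.
example = bits_of_security(2.0 ** 40, 2 ** 10, 2.0 ** -50)
```

With these sample values, the time bound contributes 40 bits, the success probability 50 bits, and the factor T removes 10 bits.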

More details on the relations between these security notions can be found in Appendix A.

3 Lossy Key-Evolving Identification and Signature Schemes

In this section, we present a new notion, called lossy key-evolving identification scheme, which combines two notions: lossy identification schemes [5, 6], which can be transformed into tightly secure signature schemes, and key-evolving identification schemes [11], which can be transformed into forward-secure signature schemes via a generalized Fiat–Shamir transform (not necessarily tightly, and under some conditions). Although this new primitive is not very useful for practical real-world applications, it is a tool that will enable us to construct forward-secure signatures with tight reductions, via the generalized Fiat–Shamir transform described in Sect. 3.2.

3.1 Lossy Key-Evolving Identification Scheme

The operation of a key-evolving identification scheme is divided into time periods \(1,\ldots , T\), where a different secret is used in each time period, and such that the secret key for a period \(i+1\) can be computed from the secret key for the period i. The public key remains the same in every time period. In this paper, a key-evolving identification scheme is a three-move protocol in which the prover first sends a commitment \( cmt \) to the verifier, then the verifier sends a challenge \( ch \) chosen uniformly at random, and finally the prover answers with a response \( rsp \). The verifier’s final decision is a deterministic function of the conversation with the prover (the triple \(( cmt , ch , rsp )\)), of the public key, and of the index of the current time period.

Informally, a lossy key-evolving identification scheme has \(T+1\) types of public keys: normal public keys, which are used in the real protocol, and i-lossy public keys, for \(i \in \{1,\ldots ,T\}\), which are such that no prover (even a computationally unbounded one) should be able to make the verifier accept for the period i with non-negligible probability. Furthermore, for each period i, it is possible to generate an i-lossy public key, such that the latter is indistinguishable from a normal public key even if the adversary is given access to any secret key for period \(i' > i\).

More formally, a lossy key-evolving identification scheme is defined by a tuple \(({\mathsf {KG}}, {\mathsf {LKG}}, {\mathsf {Update}}, {\mathsf {Prove}}, \mathcal {C}, {\mathsf {Ver}})\) such that:

- \({\mathsf {KG}}\) is the normal key generation algorithm, which takes as input the security parameter \( k \) and the number of periods T and outputs a pair \(( pk , sk _1)\) containing the public key and the prover’s secret key for the first period.

- \({\mathsf {LKG}}\) is the lossy key generation algorithm, which takes as input the security parameter \( k \), the number of periods T, and a period i, and outputs a pair \(( pk , sk _{i+1})\) containing an i-lossy public key \( pk \) and a prover’s secret key for period \(i+1\) (with \( sk _{T+1} = \bot \)).

- \({\mathsf {Update}}\) is the deterministic secret key update algorithm, which takes as input a secret key \( sk _i\) for period i and outputs a secret key \( sk _{i+1}\) for period \(i+1\) if \( sk _i\) is a secret key for some period \(i < T\), and \(\bot \) otherwise. We write \({\mathsf {Update}}^j\) for the function \({\mathsf {Update}}\) composed j times with itself (\({\mathsf {Update}}^j( sk _i)\) is a secret key \( sk _{i+j}\) for period \(i+j\), if \(i+j \le T\)).

- \({\mathsf {Prove}}\) is the prover algorithm, which takes as input the secret key for the current period and the current conversation transcript (and the current state \( st \) associated with it, if needed), and outputs the next message to be sent to the verifier and the next state (if needed). We suppose that any secret key \( sk _{i}\) for period i always contains i, and so i is not an input of \({\mathsf {Prove}}\).

- \(\mathcal {C}\) is the set of possible challenges that can be sent by the verifier. The set \(\mathcal {C}\) might implicitly depend on the public key. In our constructions, it is of the form \({\{0,\ldots ,\mathfrak {c}-1\}}^\ell \).

- \({\mathsf {Ver}}\) is the deterministic verification algorithm, which takes as input the public key, the conversation transcript, and the period i, and outputs 1 to indicate acceptance and 0 otherwise.
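To make the shape of this tuple concrete, here is a minimal Python sketch of a toy key-evolving identification scheme based on repeated squaring modulo a composite. It is an illustration under stated assumptions only: the modulus, the challenge length `ELL`, and all function names are hypothetical, the parameters are far too small to be secure, and the lossy key generation \({\mathsf {LKG}}\) is deliberately omitted. The toy relation is \( pk = sk _i^{2^{\ell (T+1-i)}} \bmod N\).

```python
import secrets
from dataclasses import dataclass
from math import gcd

# Hypothetical toy parameters (insecure, for illustration only).
N = 1019 * 1187      # product of two small primes
ELL = 4              # challenge length in bits: C = {0, ..., 2^ELL - 1}


@dataclass(frozen=True)
class SecretKey:
    period: int      # sk_i always carries its period i
    value: int


def unit() -> int:
    """Sample a uniform element of Z_N^* by rejection."""
    while True:
        x = secrets.randbelow(N - 1) + 1
        if gcd(x, N) == 1:
            return x


def exponent(period: int, T: int) -> int:
    """Exponent 2^(ELL * (T + 1 - period)) linking sk_period to pk."""
    return 1 << (ELL * (T + 1 - period))


def kg(T: int):
    """KG: return (pk, sk_1) with pk = sk_1^(2^(ELL * T)) mod N."""
    s = unit()
    return pow(s, exponent(1, T), N), SecretKey(1, s)


def update(sk: SecretKey, T: int):
    """Update: sk_{i+1} = sk_i^(2^ELL) mod N, or None (standing for ⊥) if i >= T."""
    if sk.period >= T:
        return None
    return SecretKey(sk.period + 1, pow(sk.value, 1 << ELL, N))


def commit(sk: SecretKey, T: int):
    """Prove, first move: return the commitment cmt and the state st."""
    r = unit()
    return pow(r, exponent(sk.period, T), N), r


def respond(sk: SecretKey, st: int, ch: int) -> int:
    """Prove, last move: rsp = st * sk^ch mod N."""
    return st * pow(sk.value, ch, N) % N


def ver(pk: int, cmt: int, ch: int, rsp: int, period: int, T: int) -> bool:
    """Ver: accept iff rsp^e = cmt * pk^ch mod N, with e = exponent(period, T)."""
    return pow(rsp, exponent(period, T), N) == cmt * pow(pk, ch, N) % N
```

An honest run for period i calls `commit`, samples a challenge in `range(1 << ELL)`, calls `respond`, and checks the triple with `ver`; the key is moved to the next period with `update`.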

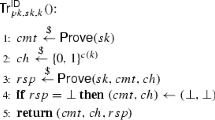

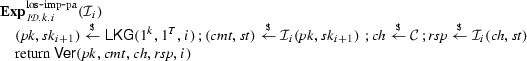

A randomized transcript generation oracle is associated to each scheme, security parameter \( k \), and key pair \(( pk , sk _i)\). It takes no inputs and returns a random transcript of an “honest” execution for period i. More precisely, the transcript generation oracle is defined as follows:
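For a three-move protocol, such an oracle usually takes the following form. This is a sketch under the assumption that the scheme follows the standard pattern for canonical identification protocols; the exact way \({\mathsf {Prove}}\) is invoked in each move is an assumption based on its description above.

\[
\begin{array}{l}
( cmt , st ) \leftarrow {\mathsf {Prove}}( sk _i) \\
ch \mathop {\leftarrow }\limits ^{\$} \mathcal {C} \\
rsp \leftarrow {\mathsf {Prove}}( sk _i, cmt , ch , st ) \\
\text {return } ( cmt , ch , rsp )
\end{array}
\]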

An identification scheme is said to be lossy if it has the following properties:

1. Completeness of normal keys. The scheme is said to be complete if, for every period i, every security parameter \( k \), and all honestly generated keys \(( pk , sk _1) {\mathop {\leftarrow }\limits ^{{\scriptscriptstyle \$}}}{\mathsf {KG}}(1^ k )\), \({\mathsf {Ver}}( pk , cmt , ch , rsp ,i)=1\) holds with probability 1 when \(( cmt , ch , rsp )\) is output by the transcript generation oracle for \(( pk , sk _i)\), with \( sk _i = {\mathsf {Update}}^{i-1}( sk _1)\).

2. Simulatability of transcripts. Let \(( pk , sk _1)\) be the output of \({\mathsf {KG}}(1^ k )\) for a security parameter \( k \), and \( sk _i\) be the output of \({\mathsf {Update}}^{i-1}( sk _1)\). Then, the scheme is said to be \(\varepsilon \)-simulatable if there exists a probabilistic polynomial time simulator with no access to any secret key, which can generate transcripts \(\{( cmt , ch , rsp )\}\) whose distribution is statistically indistinguishable from the transcripts output by the transcript generation oracle, where \(\varepsilon \) is an upper bound for the statistical distance. When \(\varepsilon =0\), the scheme is said to be perfectly simulatable. This property is also often called statistical honest-verifier zero-knowledge [12, 23, 31].

3.

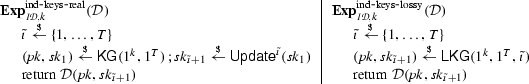

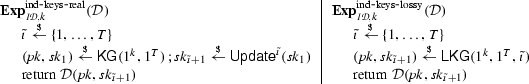

Key indistinguishability. Consider the experiments

and

and  , defined as follows:

, defined as follows:

\(\mathcal {D}\) is said to \((t,\varepsilon )\)-solve the key indistinguishability problem if it runs in time t and

Furthermore, we say that

is \((t,\varepsilon )\)-key-indistinguishable, if no algorithm \((t,\varepsilon )\)-solves the key-indistinguishability problem.

is \((t,\varepsilon )\)-key-indistinguishable, if no algorithm \((t,\varepsilon )\)-solves the key-indistinguishability problem. -

4.

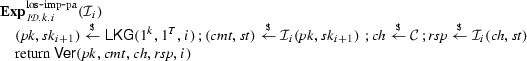

Lossiness. Let \(\mathcal {I}_i\) be an impersonator for period i (\(i \in \{1,\ldots ,T\}\)), \( st \) be its state. We consider the experiment

played between \(\mathcal {I}_i\) and a hypothetical challenger:

played between \(\mathcal {I}_i\) and a hypothetical challenger:

The impersonator \(\mathcal {I}_i\) is said to \(\varepsilon \)-solve the impersonation problem with respect to i-lossy public keys if

. Furthermore,

. Furthermore,  is said to be \(\varepsilon \)-lossy if, for any period \(i \in \{1,\ldots ,T\}\), no (computationally unrestricted) algorithm \(\varepsilon \)-solves the impersonation problem with respect to i-lossy keys.

is said to be \(\varepsilon \)-lossy if, for any period \(i \in \{1,\ldots ,T\}\), no (computationally unrestricted) algorithm \(\varepsilon \)-solves the impersonation problem with respect to i-lossy keys.
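The simulatability property can be illustrated on a toy squaring-based scheme, in which the verifier checks \( rsp ^{e} = cmt \cdot pk ^{ ch } \bmod N\) with \(e = 2^{\ell (T+1-i)}\). This is a minimal sketch under stated assumptions: the toy scheme, its tiny parameters, and the function names are hypothetical and are not the schemes analyzed later in the paper. The simulator samples the response and the challenge first and then solves the verification equation for the commitment, without using any secret key.

```python
import secrets
from math import gcd

# Hypothetical toy parameters (insecure, for illustration only).
N = 1019 * 1187   # toy modulus
ELL = 4           # challenge length in bits
T = 3             # number of periods


def unit() -> int:
    """Sample a uniform element of Z_N^* by rejection."""
    while True:
        x = secrets.randbelow(N - 1) + 1
        if gcd(x, N) == 1:
            return x


def exponent(period: int) -> int:
    """Verification exponent e = 2^(ELL * (T + 1 - period))."""
    return 1 << (ELL * (T + 1 - period))


def ver(pk: int, cmt: int, ch: int, rsp: int, period: int) -> bool:
    """Accept iff rsp^e = cmt * pk^ch mod N."""
    return pow(rsp, exponent(period), N) == cmt * pow(pk, ch, N) % N


def simulate(pk: int, period: int):
    """Produce an accepting transcript for `period` using only the public key."""
    rsp = unit()                          # response chosen first
    ch = secrets.randbelow(1 << ELL)      # challenge chosen independently
    # Solve the verification equation for cmt (needs Python >= 3.8 for pow(.., -ch, N)).
    cmt = pow(rsp, exponent(period), N) * pow(pk, -ch, N) % N
    return cmt, ch, rsp
```

In this toy setting the simulation is perfect (\(\varepsilon = 0\)): in an honest run the response is a uniform unit multiplied by a fixed unit, hence itself uniform and independent of the challenge, and the commitment is then determined by the verification equation.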

In addition, the commitment space of the scheme has min-entropy at least \(\beta \) if, for every period i, every security parameter \( k \), all honestly generated keys \(( pk , sk _1) {\mathop {\leftarrow }\limits ^{{\scriptscriptstyle \$}}}{\mathsf {KG}}(1^ k )\), and every bit string \( cmt ^*\), the probability that an honestly generated commitment for period i (computed by \({\mathsf {Prove}}\) with \( sk _i = {\mathsf {Update}}^{i-1}( sk _1)\)) equals \( cmt ^*\) is at most \(2^{-\beta }\).
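For a finite commitment distribution, the min-entropy is simply minus the base-2 logarithm of the probability of the most likely commitment. The helper below is a minimal sketch; the function name and the sample distribution are illustrative assumptions.

```python
import math


def min_entropy(distribution: dict) -> float:
    """Return -log2(max probability) for a finite distribution {outcome: probability}."""
    total = sum(distribution.values())
    if not math.isclose(total, 1.0):
        raise ValueError("probabilities must sum to 1")
    return -math.log2(max(distribution.values()))


# Hypothetical commitment distribution: the most likely value has probability 1/2,
# so the min-entropy is 1 bit even though there are three possible commitments.
beta = min_entropy({"cmt_a": 0.5, "cmt_b": 0.25, "cmt_c": 0.25})
```

A commitment space with min-entropy at least \(\beta \) therefore guarantees that no fixed value \( cmt ^*\) is hit with probability more than \(2^{-\beta }\).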

Usually, an identification scheme is also required to be sound, i.e., for a period i chosen uniformly at random, it should not be possible for an adversary to run the protocol with an honest verifier and to make the latter accept, if the public key has been generated honestly (\(( pk , sk _1) {\mathop {\leftarrow }\limits ^{{\scriptscriptstyle \$}}}{\mathsf {KG}}(1^ k , 1^T)\)) and if the adversary is only given a secret key \( sk _{i+1}\). But, in our case, this soundness property follows directly from the key indistinguishability and the lossiness properties.

We could also consider more general lossy identification schemes where the simulatability of transcripts is computational instead of being statistical. However, we opted not to do so because our security reduction is not tight with respect to the simulatability of transcripts.

We remark that, for \(T=1\), a key-evolving lossy identification scheme becomes a standard lossy identification scheme, as described in [5, 6] (see Footnote 7).

Finally, we say that the scheme is response-unique if the following holds either for every lossy public key or for every normal public key (or for both): for all periods \(i \in \{1,\ldots ,T\}\), for all bit strings \( cmt \) (which may or may not be a correctly generated commitment), and for all challenges \( ch \), there exists at most one response \( rsp \) such that \({\mathsf {Ver}}( pk , cmt , ch , rsp ,i)=1\).

3.2 Generalized Fiat–Shamir Transform

The forward-secure signature schemes considered in this paper are built from a key-evolving identification scheme via a straightforward generalization of the Fiat–Shamir transform [28], depicted in Fig. 2. More precisely, the signature for period i is just the signature obtained from a Fiat–Shamir transform with secret key \( sk _i = {\mathsf {Update}}^{i-1}( sk _1)\) (with the period i included in the random oracle input).

In what follows, we consider the signature scheme obtained from a key-evolving identification scheme via this generalized Fiat–Shamir transform.
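The transform can be sketched in a few lines of Python on a toy squaring-based identification scheme. This is an illustration under stated assumptions, not the construction of Fig. 2 itself: the toy scheme, its insecure parameters, the byte encoding of the hash input, and the use of SHA-256 as a stand-in for the random oracle are all hypothetical choices. The point it shows is that the challenge is computed as the hash of the commitment, the message, and the period i.

```python
import hashlib
import secrets
from math import gcd

# Hypothetical toy parameters (insecure, for illustration only).
N = 1019 * 1187   # toy modulus, fits in 4 bytes
ELL = 4           # challenge length in bits
T = 3             # number of periods


def unit() -> int:
    """Sample a uniform element of Z_N^* by rejection."""
    while True:
        x = secrets.randbelow(N - 1) + 1
        if gcd(x, N) == 1:
            return x


def exponent(period: int) -> int:
    """Verification exponent 2^(ELL * (T + 1 - period))."""
    return 1 << (ELL * (T + 1 - period))


def H(cmt: int, msg: bytes, period: int) -> int:
    """Random-oracle stand-in mapping (cmt, M, i) to a challenge in {0, ..., 2^ELL - 1}."""
    data = cmt.to_bytes(4, "big") + period.to_bytes(4, "big") + msg
    return hashlib.sha256(data).digest()[0] % (1 << ELL)


def sign(sk_value: int, period: int, msg: bytes):
    """Run the prover non-interactively: the challenge is H(cmt, M, i)."""
    r = unit()
    cmt = pow(r, exponent(period), N)
    ch = H(cmt, msg, period)
    rsp = r * pow(sk_value, ch, N) % N
    return cmt, rsp


def verify(pk: int, period: int, msg: bytes, sig) -> bool:
    """Recompute the challenge from (cmt, M, i) and run the verifier's check."""
    cmt, rsp = sig
    ch = H(cmt, msg, period)
    return pow(rsp, exponent(period), N) == cmt * pow(pk, ch, N) % N
```

Here a signature for period i is produced with the key for that period, obtained from the first-period key by squaring it `ELL` times per elapsed period, and the verifier only needs the public key and the period index.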

Main security theorem. The following theorem is a generalization of Theorem 1 in [5, 6] to key-evolving schemes. If we set \(T=1\) in Theorem 3.1, we get the latter theorem with slightly improved bounds, since forward security for \(T=1\) reduces to the notion of strong unforgeability for signature schemes. For the sake of simplicity and contrary to [5, 6] where the completeness property of the underlying lossy identification scheme was only assumed to hold statistically, we assume perfect completeness, as this is satisfied by all of the schemes that we consider.

Theorem 3.1

Consider a key-evolving lossy identification scheme whose commitment space has min-entropy at least \(\beta \) (for every period i), let \({\mathsf {H}}\) be a hash function modeled as a random oracle, and consider the signature scheme obtained via the generalized Fiat–Shamir transform (Fig. 2). If the identification scheme is \(\varepsilon _{s}\)-simulatable, complete, \((t',\varepsilon ')\)-key-indistinguishable, and \(\varepsilon _{\ell }\)-lossy, then the signature scheme is \((t,q_h,q_s,\varepsilon )\)-existentially forward-secure in the random oracle model for:

\[
\varepsilon = T \left( \varepsilon ' + (q_h+1)\, \varepsilon _{\ell } \right) + q_s\, \varepsilon _{s} + \frac{(q_h+q_s+1)\, q_s}{2^{\beta }}, \qquad t \approx t' - \left( q_s\, t_{{\mathsf {Sim-Sign}}} + (T-1)\, t_{\mathsf {Update}} \right) ,
\]

where \(t_{{\mathsf {Sim-Sign}}}\) denotes the time to simulate a transcript using the transcript simulator and \(t_{\mathsf {Update}}\) denotes the time to update a secret key using \({\mathsf {Update}}\). Furthermore, if the identification scheme is response-unique, the signature scheme is also \((t,q_h,q_s,\varepsilon )\)-forward-secure.

The proof of Theorem 3.1 is an adaptation of the proof in [5, 6] to the forward-security setting. As in [5, 6], the main idea of the proof is to switch the public key of the signature scheme with a lossy one, for which forgeries are information-theoretically impossible with high probability. In our case, however, we need to guess the period \(i^*\) of the signature output by the adversary, in order to choose the correct type of lossy key to be used in the reduction, and this is why we lose a factor T in the reduction. Moreover, as in [5, 6], signature queries are easy to answer thanks to the simulatability of the identification scheme. Finally, similarly to [5, 6] and contrary to [1], we remark that the factor \(q_h\) only multiplies terms which are statistically negligible and, hence, it has no effect on the tightness of the proof.

Proof

Let us suppose there exists an adversary \(\mathcal {A}\) which \((t,q_h,q_s,\varepsilon )\)-breaks the existential forward security of the signature scheme. Let us consider the games \(\text {G}_0, \ldots , \text {G}_9\) of Figs. 3 and 4. The random oracle \(\mathbf{H }\) is simulated using a table \(\mathsf {HT}\) containing all the previous queries to the oracle and its responses.

Before describing precisely all the games and formally showing that two consecutive games are indistinguishable, let us give a high-level overview of these games. The first game \(\text {G}_0\) corresponds to the original security notion. We then change the way signatures are computed: instead of getting the challenge \( ch \) from the random oracle after generating the commitment \( cmt \), we first choose it and then program the random oracle. We deal with programming and the possible collisions that it could generate in the games \(\text {G}_1\), \(\text {G}_2\), and \(\text {G}_3\). We then simulate all signatures using the transcript simulator in the game \(\text {G}_4\), as \( ch \) can now be chosen independently of \( cmt \). From this point on, the secret keys are only used to answer the \(\mathbf{Break }\hbox {-}\mathbf{In } \) queries. Hence, we can now guess the time period \(i^*\) of the forgery, abort if this is not guessed correctly, and generate a lossy public key for period \(i^*+1\) onwards (in the games \(\text {G}_6\), \(\text {G}_7\), and \(\text {G}_8\)). This makes us lose a factor T in the reduction. Finally, the lossiness property ensures that the adversary cannot generate a valid signature with non-negligible probability.
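The oracle programming step of this overview can be sketched as a lazily sampled table. This is a minimal illustration under stated assumptions: the class and method names are hypothetical, and the overwrite on collision mirrors the behaviour described for \(\text {G}_1\), where a fresh value is set when the input was already defined.

```python
import secrets


class ProgrammableRandomOracle:
    """Lazily sampled random oracle over a table HT, with a programming interface."""

    def __init__(self, num_challenges: int):
        self.num_challenges = num_challenges
        self.table = {}      # plays the role of the table HT
        self.bad = False     # set when programming hits an already defined input

    def query(self, cmt, msg, period) -> int:
        """Adversarial hash query: sample on first use, then answer consistently."""
        key = (cmt, msg, period)
        if key not in self.table:
            self.table[key] = secrets.randbelow(self.num_challenges)
        return self.table[key]

    def program(self, cmt, msg, period, ch: int) -> None:
        """Signing-side programming: force H(cmt, M, i) = ch, flagging collisions."""
        key = (cmt, msg, period)
        if key in self.table:
            self.bad = True
        self.table[key] = ch


def simulated_sign(oracle, simulate_transcript, msg, period):
    """Choose (cmt, ch, rsp) first, then program the oracle to be consistent with it."""
    cmt, ch, rsp = simulate_transcript(period)
    oracle.program(cmt, msg, period, ch)
    return cmt, rsp
```

Because the challenge is fixed before the oracle is touched, the signing procedure no longer needs to compute a response to an externally chosen challenge, which is what allows the transcript simulator to take over.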

Let us now provide the proof details. First, we will assume that the set of queries to the random oracle made by the adversary always contains the query \({( cmt ^*, M ^*)}\). This is without loss of generality because, given any adversary, we can always create an adversary (with the same success probability and approximately the same running time) that performs this query before calling \({\mathbf{Finalize }}\). It only increases the total amount of hash queries by 1.

Fig. 3. Games \(\text {G}_0,\ldots ,\text {G}_4\) for proof of Theorem 3.1. \(\text {G}_1\) includes the boxed code at line 034 but \(\text {G}_0\) does not

Fig. 4. Games \(\text {G}_5,\ldots ,\text {G}_9\) for proof of Theorem 3.1. \(\text {G}_6\) includes the boxed code at lines 525 and 556 but \(\text {G}_5\) does not; \(\text {G}_9\) includes the boxed code at line 757 but \(\text {G}_7\) and \(\text {G}_8\) do not

\(\text {G}_0\) corresponds to a slightly stronger game than the game defining the existential forward security of a key-evolving signature scheme built from a key-evolving identification scheme via the generalized Fiat–Shamir transform. We only force the forgery to be such that \({( cmt ^*, M ^*, i^*)}\) is different from all the previous queries to the signing oracle, instead of just \({(M^*, i^*)}\) being different from all the previous queries. This corresponds to a security notion stronger than existential forward-security but still weaker than strong forward-security (where we have to consider \({( cmt ^*, rsp ^*, M ^*, i^*)}\)).

In \(\text {G}_0\), we have inlined the code of the random oracle in the procedure \({\mathsf {Sign}}\), and we set \(\mathsf {bad}\) whenever \(\mathbf{H }({( cmt , M ,i)})\) is already defined. We have also modified the code of the random oracle \(\mathbf{H }\) such that the \(\mathrm {fp}\)-th query (the critical query eventually related to the forgery) is answered by \( ch ^*\), a random challenge chosen in \({\mathbf{Initialize }}\), where \(\mathrm {fp}\) is a random integer in the range \(\{1, \ldots , q_h+1\}\). These modifications do not change the output of the original game.

To compute the probability \({\Pr \left[ \,{\text {G}_0(\mathcal {A}) \text{ sets } \mathsf {bad}}\,\right] }\), we remark that, for each signing query, the probability that there is a collision (i.e., \(\mathsf {bad}\) is set for this query) is at most \((q_h + q_s + 1)/2^\beta \). By the union bound, we have \( {\Pr \left[ \,{\text {G}_0(\mathcal {A}) \text{ sets } \mathsf {bad}}\,\right] } \le (q_h + q_s + 1) q_s /2^\beta \).

In \(\text {G}_1\), when \(\mathsf {bad}\) is set, a new random value for \(\mathbf{H }({( cmt , M ,i)})\) is set in \(\mathbf{Sign }\). Since \(\text {G}_0\) and \(\text {G}_1\) are identical until \(\mathsf {bad}\), thanks to Lemma 2.1, we have \( {\Pr \left[ \,{\text {G}_0(\mathcal {A}) \,{\Rightarrow }\,1}\,\right] } - {\Pr \left[ \,{\text {G}_1(\mathcal {A}) \,{\Rightarrow }\,1}\,\right] } \le {\Pr \left[ \,{\text {G}_0(\mathcal {A}) \text{ sets } \mathsf {bad}}\,\right] } \le (q_h + q_s + 1) q_s /2^\beta \).

In \(\text {G}_2\), \(\mathsf {bad}\) is no longer set and the procedure \(\mathbf{Sign }\) is rewritten in an equivalent way. Since the latter does not change the output of the game, we have \({\Pr \left[ \,{\text {G}_1(\mathcal {A}) \,{\Rightarrow }\,1}\,\right] } = {\Pr \left[ \,{\text {G}_2(\mathcal {A}) \,{\Rightarrow }\,1}\,\right] }\).

In \(\text {G}_3\), the procedure \(\mathbf{Sign }\) is changed such that the values \(( cmt , ch , rsp )\) are computed using the transcript generation function. Since the latter does not change the output of the game, we have \({\Pr \left[ \,{\text {G}_2(\mathcal {A}) \,{\Rightarrow }\,1}\,\right] } = {\Pr \left[ \,{\text {G}_3(\mathcal {A}) \,{\Rightarrow }\,1}\,\right] }\).

In \(\text {G}_4\), the \(q_s\) calls to the transcript generation function are replaced by \(q_s\) calls to the simulated transcript generation function. Since the statistical distance between the distributions output by these two functions is at most \(\varepsilon _s\), we have \( {\Pr \left[ \,{\text {G}_3(\mathcal {A}) \,{\Rightarrow }\,1}\,\right] } - {\Pr \left[ \,{\text {G}_4(\mathcal {A}) \,{\Rightarrow }\,1}\,\right] } \le q_s \varepsilon _s\).

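In \(\text {G}_5\), a period \({\tilde{\imath }}\in \{1, \ldots , T\}\) is chosen uniformly at random, and \(\mathsf {bad}\) is set when the adversary queries \(\mathbf{Break }\hbox {-}\mathbf{In } \) with a period \(\mathrm {b}\le {\tilde{\imath }}\) or when the adversary outputs a signature for a period \(i^* \ne {\tilde{\imath }}\). Whenever \(\text {G}_5\) outputs 1, we have \(i^* < \mathrm {b}\), so “\(\mathsf {bad}\) is never set and \(\text {G}_5\) outputs 1” (event \(\text {G}_5(\mathcal {A}) \,{\Rightarrow }\,1 \wedge \mathsf {Good}_5\)) holds if and only if “\(i^* = {\tilde{\imath }}\) and \(\text {G}_5\) outputs 1.” Therefore, we have:

\[
\begin{aligned}
\Pr \left[ \text {G}_5(\mathcal {A}) \Rightarrow 1 \wedge \mathsf {Good}_5 \right] &= \sum _{i=1}^{T} \Pr \left[ \text {G}_5(\mathcal {A}) \Rightarrow 1 \wedge i^* = i \wedge {\tilde{\imath }} = i \right] \\
&= \sum _{i=1}^{T} \frac{1}{T} \Pr \left[ \text {G}_5(\mathcal {A}) \Rightarrow 1 \wedge i^* = i \right] \\
&= \frac{1}{T} \Pr \left[ \text {G}_5(\mathcal {A}) \Rightarrow 1 \right] = \frac{1}{T} \Pr \left[ \text {G}_4(\mathcal {A}) \Rightarrow 1 \right] ,
\end{aligned}
\]

where the second equality comes from the fact that \({\Pr \left[ \,{{\tilde{\imath }}= i}\,\right] } = \frac{1}{T}\) and that the event “\({\tilde{\imath }}= i\)” is independent from the event “\(\text {G}_5(\mathcal {A}) \,{\Rightarrow }\,1 \wedge i^* = i\),” and the last equality from the fact that \(\text {G}_4\) and \(\text {G}_5\) differ only in the choice of \({\tilde{\imath }}\) and the setting of \(\mathsf {bad}\), which do not change the output of the game.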

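In \(\text {G}_6\), the empty string \(\bot \) is returned if \(\mathbf{Break }\hbox {-}\mathbf{In } \) is queried with a period \(i \le {\tilde{\imath }}\), and the game outputs 0 if \(i^* \ne {\tilde{\imath }}\). Since \(\text {G}_5\) and \(\text {G}_6\) are identical until \(\mathsf {bad}\), and since \(\text {G}_6\) cannot output 1 once \(\mathsf {bad}\) is set, according to Lemma 2.2, we have

\[
\Pr \left[ \text {G}_6(\mathcal {A}) \Rightarrow 1 \right] = \Pr \left[ \text {G}_6(\mathcal {A}) \Rightarrow 1 \wedge \mathsf {Good}_6 \right] = \Pr \left[ \text {G}_5(\mathcal {A}) \Rightarrow 1 \wedge \mathsf {Good}_5 \right] = \frac{1}{T} \Pr \left[ \text {G}_5(\mathcal {A}) \Rightarrow 1 \right] .
\]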

In \(\text {G}_7\), some procedures have been rewritten in an equivalent way, and \(\mathsf {bad}\) is now set when the query \(( cmt ^*, M ^*)\) is not the \(\mathrm {fp}^{\text {th}}\) query to the random oracle. Since the latter does not change the output of the experiment, we have \({\Pr \left[ \,{\text {G}_6(\mathcal {A}) \,{\Rightarrow }\,1}\,\right] } = {\Pr \left[ \,{\text {G}_7(\mathcal {A}) \,{\Rightarrow }\,1}\,\right] }\).

In \(\text {G}_8\), the key is generated using the lossy key generation algorithm \({\mathsf {LKG}}\) for period \({\tilde{\imath }}\) instead of the normal key generation algorithm \({\mathsf {KG}}\). From any adversary \(\mathcal {A}\) able to distinguish \(\text {G}_7\) from \(\text {G}_8\), it is straightforward to construct an adversary which \((t', \varepsilon '')\)-solves the key indistinguishability problem with \(t' \approx t + (q_s t_{\mathsf {Sim-Sign}}+ (T-1) t_{\mathsf {Update}})\) and \(\varepsilon '' = \left| {\Pr \left[ \,{\text {G}_7(\mathcal {A}) \,{\Rightarrow }\,1}\,\right] } - {\Pr \left[ \,{\text {G}_8(\mathcal {A}) \,{\Rightarrow }\,1}\,\right] } \right| \). Therefore, thanks to the \((t',\varepsilon ')\)-key-indistinguishability of the identification scheme, if the adversary runs in time approximately at most \(t' - (q_s t_{\mathsf {Sim-Sign}}+ (T-1) t_{\mathsf {Update}})\):

\[
\left| \Pr \left[ \text {G}_7(\mathcal {A}) \Rightarrow 1 \right] - \Pr \left[ \text {G}_8(\mathcal {A}) \Rightarrow 1 \right] \right| \le \varepsilon ' .
\]

In \(\text {G}_9\), the game outputs 0 if the signature does not correspond to the challenge \( ch ^*\). Since we have

\[
\Pr \left[ \text {G}_8(\mathcal {A}) \Rightarrow 1 \wedge \mathsf {Good}_8 \right] = \frac{1}{q_h+1} \Pr \left[ \text {G}_8(\mathcal {A}) \Rightarrow 1 \right]
\]

and \({\Pr \left[ \,{\text {G}_9(\mathcal {A}) \,{\Rightarrow }\,1 \wedge \mathsf {Good}_9}\,\right] } = {\Pr \left[ \,{\text {G}_9(\mathcal {A}) \,{\Rightarrow }\,1}\,\right] }\), according to Lemma 2.2, we have \( {\Pr \left[ \,{\text {G}_8(\mathcal {A}) \,{\Rightarrow }\,1}\,\right] } = (q_h + 1) {\Pr \left[ \,{\text {G}_9(\mathcal {A}) \,{\Rightarrow }\,1}\,\right] }\).

From any adversary \(\mathcal {A}\) for \(\text {G}_9\), it is straightforward to construct an adversary \(\mathcal {I}\) (not necessarily computationally bounded) which \(\varepsilon ''\)-solves the impersonation problem with \(\varepsilon '' = {\Pr \left[ \,{\text {G}_9(\mathcal {A}) \,{\Rightarrow }\,1}\,\right] }\). Therefore, we have \( {\Pr \left[ \,{\text {G}_9(\mathcal {A}) \,{\Rightarrow }\,1}\,\right] } \le \varepsilon _\ell \).

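From the previous equalities and inequalities, we deduce that, for any adversary \(\mathcal {A}\) running in time approximately at most \(t' - (q_s t_{\mathsf {Sim-Sign}}+ (T-1) t_{\mathsf {Update}})\):

\[
\Pr \left[ \text {G}_0(\mathcal {A}) \Rightarrow 1 \right] \le T \left( \varepsilon ' + (q_h+1)\, \varepsilon _{\ell } \right) + q_s\, \varepsilon _{s} + \frac{(q_h+q_s+1)\, q_s}{2^{\beta }} .
\]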
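Collecting the game hops of this proof (the collision term from \(\text {G}_0\) and \(\text {G}_1\), the term \(q_s \varepsilon _s\) from \(\text {G}_4\), the factor T from \(\text {G}_5\) and \(\text {G}_6\), the term \(\varepsilon '\) from \(\text {G}_8\), and the term \((q_h+1)\varepsilon _\ell \) from \(\text {G}_9\)) gives a bound that can be evaluated numerically. The helper below is a minimal sketch of that arithmetic; the function name and the sample parameters are illustrative assumptions, and exact rationals are used to avoid floating-point underflow.

```python
from fractions import Fraction


def forward_security_bound(T, q_h, q_s, eps_key_ind, eps_lossy, eps_sim, beta):
    """Evaluate T*(eps' + (q_h+1)*eps_l) + q_s*eps_s + (q_h+q_s+1)*q_s / 2^beta."""
    collision = Fraction((q_h + q_s + 1) * q_s, 2 ** beta)   # G_0 -> G_1
    simulation = q_s * Fraction(eps_sim)                     # G_3 -> G_4
    lossy_part = Fraction(eps_key_ind) + (q_h + 1) * Fraction(eps_lossy)
    return T * lossy_part + simulation + collision           # factor T from G_5, G_6


# Hypothetical parameters: 2^10 periods, 2^60 hash queries, 2^30 signing queries,
# eps' = 2^-80, eps_l = 2^-200, perfect simulation, 1024 bits of min-entropy.
bound = forward_security_bound(
    T=2 ** 10, q_h=2 ** 60, q_s=2 ** 30,
    eps_key_ind=Fraction(1, 2 ** 80), eps_lossy=Fraction(1, 2 ** 200),
    eps_sim=0, beta=1024,
)
```

With parameters of this kind, the bound is dominated by \(T \varepsilon '\): the terms multiplied by \(q_h\) or \(q_s\) are statistically small, which is why they do not affect the tightness of the reduction.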

Let us now prove that the signature scheme is strongly forward-secure (with the same parameters) if the identification scheme is response-unique.

We first remark that, if we replace line 055 of \(\text {G}_0\) in Fig. 3 by

then we get exactly the game for forward security.

Therefore, if normal keys are response-unique, it is clear that this new game is equivalent to the original game \(\text {G}_0\), since if \(S[{( cmt ^*, M ^*,i^*)}]\) is defined, it is the only possible response \( rsp ^*\).

If lossy keys are response-unique, to prove forward security, it is sufficient to replace lines 055, 558, and 758 for games \(\text {G}_0,\ldots ,\text {G}_9\) in Figs. 3 and 4 by

Then the probability that the adversary wins the new game \(\text {G}_9\) is still bounded by \(\varepsilon _\ell \), since if \(S[{( cmt ^*, M ^*,i^*)}]\) is defined, it is the only possible response \( rsp ^*\). \(\square \)

Remark 3.2

As in the standard Fiat–Shamir transform, the signature obtained via the generalized transform consists of a commitment-response pair. However, in all schemes proposed in this paper, the commitment can be recovered from the challenge and the response. Hence, since the challenge is often shorter than the commitment, it is generally better to use the challenge-response pair as the signature in our schemes. Obviously, this change does not affect the security of our schemes.

Security theorems for comparisons with previous schemes. In the sequel, to be able to do a fair comparison, we also need the following variant of Theorem 3.1 and its associated straightforward corollary.

Theorem 3.3

Consider a key-evolving lossy identification scheme whose commitment space has min-entropy at least \(\beta \) (for every period i), let \({\mathsf {H}}\) be a hash function modeled as a random oracle, and consider the signature scheme obtained from the identification scheme via the generalized Fiat–Shamir transform. If the identification scheme is \(\varepsilon _s\)-simulatable, complete, \((t',\varepsilon ')\)-key-indistinguishable, and \(\varepsilon _{\ell }\)-lossy, then the signature scheme is \((t,q_h,q_s,\varepsilon ,\delta )\)-selectively existentially forward-secure in the random oracle model for:

as long as

where \(t_{{\mathsf {Sim-Sign}}}\) denotes the time to simulate a transcript of the identification scheme, \(t_{\mathsf {Update}}\) denotes the time to update a secret key using \({\mathsf {Update}}\), and \({\mathrm e}\) (not to be confused with e) is the base of the natural logarithm. Furthermore, if the identification scheme is response-unique, the signature scheme is also \((t,q_h,q_s,\varepsilon ,\delta )\)-selectively forward-secure.

Corollary 3.4

Under the same hypotheses as Theorem 3.3, the signature scheme is \((t,q_h,q_s,\varepsilon ,\delta )\)-selectively existentially forward-secure in the random oracle model for:

as long as

Furthermore, if the identification scheme is response-unique, the signature scheme is also \((t,q_h,q_s,\varepsilon ,\delta )\)-selectively forward-secure.

In concrete instantiations in the sequel, we often omit \(t_{\mathsf {Update}}\) and \(t_{\mathsf {Sim-Sign}}\) as these values are small compared to \(t'\), for any reasonable parameters. For any \(\varepsilon > 0\) satisfying the above inequalities, under the assumption of Remark 2.4, we can say that the signature scheme is about \(({\textstyle \frac{t' \varepsilon }{2}}, q_h, q_s, T \varepsilon )\)-forward-secure (i.e., provides about \(\log _2(t'/(2 T))\) bits of security), if the underlying identification scheme is (about) \((t',{(1 - 1/{\mathrm e})}/2)\)-hard. In other words, the security reduction loses a factor of about T.
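As a rough numerical illustration of this estimate, the following sketch evaluates \(\log _2(t'/(2 T))\) for a given \(t'\) and number of periods T. The function name and the sample values (\(t' = 2^{128}\), \(T = 2^{10}\)) are illustrative assumptions and are not parameters taken from this paper.

```python
import math

def forward_security_bits(t_prime: float, T: int) -> float:
    """Approximate bits of security log2(t' / (2T)) from the estimate above.

    t_prime: running time t' for which the underlying problem is assumed hard.
    T: number of time periods of the forward-secure scheme.
    """
    return math.log2(t_prime / (2 * T))

# Hypothetical example values (not from the paper).
bits_many_periods = forward_security_bits(2.0**128, 2**10)
bits_one_period = forward_security_bits(2.0**128, 1)

# The gap between the two is log2(T): the reduction loses a factor of about T.
loss_in_bits = bits_one_period - bits_many_periods
```

With these sample values, moving from one period to \(2^{10}\) periods costs 10 bits, which matches the stated loss of a factor of about T.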

Proof of Corollary 3.4 from Theorem 3.3

It is a direct corollary of Theorem 3.3. The condition \( \varepsilon > 2 \left( q_s \varepsilon _s + (q_h + q_s + 1) q_s / 2^\beta \right) \) ensures that \(\varepsilon - q_s \varepsilon _s - (q_h + q_s + 1) q_s / 2^\beta \ge \varepsilon / 2\). \(\square \)

Proof of Theorem 3.3

Let us suppose there exists an adversary \(\mathcal {A}\) that \((t,q_h,q_s,\varepsilon ,\delta )\)-breaks the signature scheme. In particular, \(\mathcal {A}\) \((t,q_h,q_s,\varepsilon \delta )\)-breaks the signature scheme.

The proof of Theorem 3.3 is very similar to the proof of Theorem 3.1. We use the same games, except for \({\mathbf{Initialize }}\) and \({\mathbf{Finalize }}\) of games \(\text {G}_1,\ldots ,\text {G}_5\), which are replaced by those of game \(\text {G}_6\). Indeed, in the selective security game, a period \({\tilde{\imath }}\) is chosen in \({\mathbf{Initialize }}\) and the adversary has to forge a signature for this period. The proof is then identical, except that \({\Pr \left[ \,{\text {G}_4(\mathcal {A}) \,{\Rightarrow }\,1}\,\right] } = {\Pr \left[ \,{\text {G}_5(\mathcal {A}) \,{\Rightarrow }\,1}\,\right] } = {\Pr \left[ \,{\text {G}_6(\mathcal {A}) \,{\Rightarrow }\,1}\,\right] }\) and except for the inequality of Eq. (3.1).

We remark that, if we write \(\gamma = \left( q_s \varepsilon _s + (q_h + q_s + 1) q_s / 2^\beta \right) \):

Let us construct an adversary \(\mathcal {B}\) which \((t'',\varepsilon '')\)-breaks the key indistinguishability property with \(t'' \approx \frac{t + q_s t_{\mathsf {Sim-Sign}}}{\varepsilon - \gamma } + (T-1) t_{\mathsf {Update}}\) and \(\varepsilon '' \ge \delta \, \left( 1 - \frac{1}{{\mathrm e}} \right) - \frac{1}{\varepsilon - \gamma } \, (q_h + 1) \, \varepsilon _\ell \). \(\mathcal {B}\) takes as input a period \({\tilde{\imath }}\), a public key \( pk \), and a secret key \( sk _{{\tilde{\imath }}+1}\) for period \({\tilde{\imath }}+1\). It then runs \(\mathcal {A}\) \( \frac{1}{\varepsilon - \gamma } \) times and simulates the oracles as in game \(\text {G}_7\) (or \(\text {G}_8\), which is equivalent), except for \({\mathbf{Initialize }}\), where it directly uses its inputs \({\tilde{\imath }}\), \( pk \), and \( sk _{{\tilde{\imath }}+1}\) (instead of picking them at random). If \(\mathcal {A}\) outputs a correct forgery during one of its runs, \(\mathcal {B}\) outputs 1. Otherwise, it outputs 0.

Clearly \(\mathcal {B}\) perfectly simulates the environment of \(\mathcal {A}\) in the game \(\text {G}_7\), if \( pk \) is not lossy, or in the game \(\text {G}_8\), if \( pk \) is lossy. According to Eq. (3.2), if \( pk \) is not lossy, we have

and, according to Eq. (3.3), if \( pk \) is lossy, we have

Therefore, the advantage of \(\mathcal {B}\) is \(\varepsilon '' \ge \delta \, \left( 1 - \frac{1}{{\mathrm e}} \right) - \frac{1}{\varepsilon - \gamma } \, (q_h+1) \, \varepsilon _\ell \). Its running time is \(t'' \approx \frac{t + q_s t_{\mathsf {Sim-Sign}}}{\varepsilon - \gamma } + (T-1) t_{\mathsf {Update}}\). This proves the theorem. \(\square \)
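The factor \(1 - 1/{\mathrm e}\) in the bound on \(\varepsilon ''\) is consistent with a standard repetition argument: if a single run succeeds with probability at least \(p = \varepsilon - \gamma \), then about \(1/p\) independent runs contain at least one success with probability \(1 - (1-p)^{\lceil 1/p \rceil } \ge 1 - 1/{\mathrm e}\). The sketch below checks this inequality numerically; the function name and the sample values of p are illustrative assumptions.

```python
import math

def at_least_one_success(p: float) -> float:
    """Probability that at least one of ceil(1/p) independent runs succeeds,
    when each run succeeds with probability exactly p."""
    runs = math.ceil(1 / p)
    return 1 - (1 - p) ** runs

# Lower bound claimed by the repetition argument.
bound = 1 - 1 / math.e

# Hypothetical per-run success probabilities.
results = {p: at_least_one_success(p) for p in (0.5, 0.1, 0.01, 1e-4)}
```

The bound follows from \(\ln (1-p) \le -p\), which gives \((1-p)^{1/p} \le 1/{\mathrm e}\) for every \(p \in (0,1]\).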

4 Tighter Security Reductions for Guillou–Quisquater-Like Schemes

In this section, we prove tighter security reductions for the Guillou–Quisquater scheme (GQ [34]) and for a slight variant of the Itkis–Reyzin scheme (IR [41]), which can also be seen as a forward-secure extension of the GQ scheme. We analyze the practical performance of this new scheme in the next section of this article.

4.1 Guillou–Quisquater Scheme

Let us describe the identification scheme corresponding to the GQ signature scheme,Footnote 8 before presenting our tight reduction and comparing it with the swap method.

Scheme. Let N be a product of two distinct \({\ell _N}\)-bit primes \(p_1,p_2\) and let e be an \({\ell _e}\)-bit prime, co-prime to \(\phi (N) = (p_1 - 1)(p_2 - 1)\), chosen uniformly at random.Footnote 9 Let S be an element chosen uniformly at random in \({{\mathbb Z}}_N^*\) and let \(U = S^e \bmod N\). Let \(\mathfrak {c}= e \ge 2^{{\ell _e}-1}\) and \(\mathcal {C}= \{0,\ldots ,\mathfrak {c}-1\}\). The (normal) public key is \( pk = (N,e,U)\) and the secret key is \( sk =(N,e,S)\).

The goal of the identification scheme is to implicitly prove that U is an e-residue.Footnote 10 The identification scheme is depicted in Figs. 5 and 6. We recall that the scheme only supports one time period (i.e., \(T=1\)). Therefore, the \({\mathsf {Update}}\) algorithm is not needed and \( sk _1= sk \). The scheme works as follows. First, the prover chooses a random element \(R \in {{\mathbb Z}}_N^*\), computes \(Y \leftarrow R^e \bmod N\), and sends Y to the verifier, which in turn chooses \( c \in \{0,\ldots ,\mathfrak {c}-1\}\) and returns it to the prover. Upon receiving \( c \), the prover computes \(Z \leftarrow R \cdot S^ c \bmod N\) and sends this value to the verifier. Finally, the verifier checks whether \(Z \in {{\mathbb Z}}_N^*\) and \(Z^e = Y \cdot U^ c \) and accepts only in this case.Footnote 11
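The three moves described above can be sketched in a few lines of Python. This is only a toy illustration under stated assumptions: the function names are ours, and the parameters (\(p_1 = 101\), \(p_2 = 103\), \(e = 13\)) are far too small to be secure and ignore the length requirements on \({\ell _N}\) and \({\ell _e}\).

```python
import math
import secrets

def rand_unit(N):
    """Sample a uniformly random element of Z_N^* by rejection sampling."""
    while True:
        x = secrets.randbelow(N - 1) + 1  # x in {1, ..., N-1}
        if math.gcd(x, N) == 1:
            return x

def keygen(p1, p2, e):
    """Normal key generation: e must be co-prime to phi(N)."""
    N = p1 * p2
    assert math.gcd(e, (p1 - 1) * (p2 - 1)) == 1
    S = rand_unit(N)
    U = pow(S, e, N)
    return (N, e, U), (N, e, S)  # (pk, sk)

def commit(pk):
    """Prover's first move: pick R and send Y = R^e mod N."""
    N, e, _ = pk
    R = rand_unit(N)
    return R, pow(R, e, N)

def challenge(pk):
    """Verifier's move: c uniform in {0, ..., e-1} (challenge space size is e)."""
    return secrets.randbelow(pk[1])

def respond(sk, R, c):
    """Prover's last move: Z = R * S^c mod N."""
    N, _, S = sk
    return (R * pow(S, c, N)) % N

def verify(pk, Y, c, Z):
    """Accept iff Z is in Z_N^* and Z^e = Y * U^c mod N."""
    N, e, U = pk
    return math.gcd(Z, N) == 1 and pow(Z, e, N) == (Y * pow(U, c, N)) % N

# Toy, insecure parameters chosen only for illustration.
pk, sk = keygen(101, 103, 13)
R, Y = commit(pk)
c = challenge(pk)
Z = respond(sk, R, c)
accepted = verify(pk, Y, c, Z)
```

Because \(x \mapsto x^e\) is a permutation of \({{\mathbb Z}}_N^*\) for normal keys, any response other than Z is rejected for the same Y and c.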

The algorithm \({\mathsf {LKG}}\) chooses e and \(N=p_1p_2\) such that e divides \(p_1-1\), instead of being co-prime to \(\phi (N)\), and chooses U uniformly at random among the non-e-residues modulo N. The lossy public key is then \( pk = (N,e,U)\). Propositions B.13 and B.16 show that if U is chosen uniformly at random in \({{\mathbb Z}}_N^*\), it is not an e-residue with probability \(1-1/e\), and that it is possible to efficiently check whether U is an e-residue if the factorization of N is known: U is an e-residue if and only if, for every \(j \in \{1,2\}\), e does not divide \(p_j-1\) or \(U^{(p_j - 1) / e} = 1\bmod p_j\).
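The residuosity test above can be checked by brute force on a toy modulus. The sketch below assumes the illustrative lossy parameters \(p_1 = 29\), \(p_2 = 31\), \(e = 7\) (so e divides \(p_1 - 1 = 28\) but not \(p_2 - 1 = 30\)); the function name is ours.

```python
import math

def is_e_residue(U, e, p1, p2):
    """Test whether U in Z_N^* (N = p1*p2) is an e-th power, given the factors.

    For each prime factor p_j with e dividing p_j - 1, U must satisfy
    U^((p_j - 1)/e) = 1 mod p_j; a factor with e not dividing p_j - 1
    imposes no condition.
    """
    for p in (p1, p2):
        if (p - 1) % e == 0 and pow(U, (p - 1) // e, p) != 1:
            return False
    return True

# Toy lossy parameters, assumed for illustration only.
p1, p2, e = 29, 31, 7
N = p1 * p2

units = [x for x in range(1, N) if math.gcd(x, N) == 1]
true_residues = {pow(x, e, N) for x in units}  # brute-force ground truth
by_criterion = {u for u in units if is_e_residue(u, e, p1, p2)}

# Fraction of e-residues among all units; a lossy key takes U outside this set.
residue_fraction = len(by_criterion) / len(units)
```

On this example the criterion agrees with the brute-force set, and exactly a \(1/e\) fraction of \({{\mathbb Z}}_N^*\) consists of e-residues, so a uniformly random U is a non-residue with probability \(1 - 1/e\).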

In the original scheme, any prime number e of large enough length \({\ell _e}\) could be used; to get negligible soundness or lossiness probability, \({\ell _e}\) needs to be at least equal to \( k \). However, for our proof to work, we need the \(\phi \)-hiding assumption to hold. This implies in addition that \({\ell _e}< {\ell _N}/ 4\).

Security. Existing proofs for the GQ scheme lose a factor \(q_h\) in the reduction. In this section, we prove that the previously described identification scheme is a lossy identification scheme, under the \(\phi \)-hiding assumption. This yields a security proof of the strong unforgeability of the GQ scheme, with a tight reduction to this assumption.

More formally, we prove the following theorem:

Theorem 4.1

The identification scheme depicted in Figs. 5 and 6 is complete, perfectly simulatable, key-indistinguishable, \((1/\mathfrak {c})\)-lossy, and response-unique. More precisely, if the \(\phi \)-hiding problem is \((t',\varepsilon ')\)-hard, then the identification scheme is \((t,\varepsilon )\)-key-indistinguishable for:

Furthermore, the min-entropy \(\beta \) of the commitment space is \(\log _2(\phi (N)) \ge {\ell _N}-1\).

Thanks to Corollary 3.4 (with \(T=1\)), we obtain the following theorem:

Theorem 4.2

If the \(\phi \)-hiding problem is \((t',\varepsilon ')\)-hard, then the GQ scheme is \((t,q_h,q_s,\varepsilon ,\delta )\)-selectively forward-secure for \(T=1\) period in the random oracle model for:

as long as

where \({\mathrm e}\) (not to be confused with e) is the base of the natural logarithm.

Under the assumption of Remark 2.4, we can say that the scheme is about \(({\textstyle \frac{t \varepsilon }{2}}, q_h, q_s, \varepsilon )\)-strongly-unforgeable if the \(\phi \)-hiding problem is \((t,(1 - 1/{\mathrm e})/2)\)-hard. Roughly, this means that for \( k \)-bit security, the modulus has to correspond to a security level of \( k ' \approx k \) bits, which is tight.

Proof of Theorem 4.1

The proof that the identification scheme is complete follows immediately from the fact that, if \(U = S^e \bmod N\), an honest execution of the protocol will always result in acceptance as \(Z^e = {(R \cdot S^{ c })}^e = R^e \cdot {(S^e)}^{ c } = Y \cdot U^{ c }\).

Simulatability follows from the fact that, given \( pk = (N,e, U)\), we can easily generate transcripts whose distribution is perfectly indistinguishable from the transcripts output by an honest execution of the protocol. This is done by choosing Z uniformly at random in \({{\mathbb Z}}^*_N\) and \( c \) uniformly at random in \(\{0,\ldots ,\mathfrak {c}-1\}\), and setting \(Y = Z^e / U^{ c }\).
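The simulator just described needs only the public key. A minimal sketch, assuming toy and insecure parameters (\(N = 101 \cdot 103\), \(e = 13\), and \(U = 2^e \bmod N\)) and function names of our own choosing, could look as follows. It relies on Python 3.8 or later, where `pow` accepts a negative exponent together with a modulus.

```python
import math
import secrets

def rand_unit(N):
    """Sample a uniformly random element of Z_N^* by rejection sampling."""
    while True:
        x = secrets.randbelow(N - 1) + 1
        if math.gcd(x, N) == 1:
            return x

def simulate(pk):
    """Produce an accepting transcript (Y, c, Z) without the secret key."""
    N, e, U = pk
    Z = rand_unit(N)                        # response chosen first
    c = secrets.randbelow(e)                # challenge uniform in {0, ..., e-1}
    Y = (pow(Z, e, N) * pow(U, -c, N)) % N  # Y = Z^e / U^c mod N
    return Y, c, Z

def verify(pk, Y, c, Z):
    """Accept iff Z is in Z_N^* and Z^e = Y * U^c mod N."""
    N, e, U = pk
    return math.gcd(Z, N) == 1 and pow(Z, e, N) == (Y * pow(U, c, N)) % N

# Toy public key, assumed for illustration: U = 2^e mod N, i.e., S = 2.
N, e = 101 * 103, 13
pk = (N, e, pow(2, e, N))
Y, c, Z = simulate(pk)
simulated_ok = verify(pk, Y, c, Z)
```

The same relation \(Y = Z^e / U^{ c }\) is what allows the commitment to be recomputed from a challenge-response pair, as noted in Remark 3.2.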

Let us prove the key indistinguishability property. When e is co-prime to \(\phi (N)\), the function f defined by \(f(x)=x^e \bmod N\) is a permutation over \({{\mathbb Z}}_N^*\) (see, e.g., Corollary B.14). Therefore, when \( pk =(N,e,U) {\mathop {\leftarrow }\limits ^{{\scriptscriptstyle \$}}}{\mathsf {KG}}(1^ k ,1)\), the element \(U = S^e \in {{\mathbb Z}}_N^*\) (with \(S {\mathop {\leftarrow }\limits ^{{\scriptscriptstyle \$}}}{{\mathbb Z}}_N^*\)) is uniformly random. According to the \(\phi \)-hiding assumption, the distribution of normal public keys is therefore indistinguishable from the one where e divides \(\phi (N)\) and U is chosen uniformly at random. In this latter distribution, U is not an e-residue with probability \(\ge 1-1/e \ge 1-1/2^{{\ell _e}-1}\) according to Corollary B.15, so this distribution is \((1/2^{{\ell _e}-1})\)-close to the distribution of lossy keys according to Lemma 2.3. Hence, we get the bound of the theorem.