Abstract

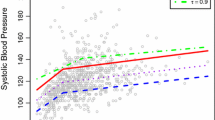

Composite quantile regression (CQR) can be more efficient, and sometimes arbitrarily more efficient, than least squares for non-normal random errors, and almost as efficient for normal random errors. Based on CQR, we propose a method for testing hypotheses about the parameters of linear regression models. The critical values of the test statistic can be obtained by the random weighting method without estimating the nuisance parameters. A distinguishing feature of the proposed method is that the approximation remains valid even when the null hypothesis is not true, so power evaluation is possible under local alternatives. Extensive simulations are reported, showing that the proposed method works well in practical settings. The proposed method is also applied to a data set from a walking behavior survey.

References

Barbe P, Bertail P (1995) The weighted bootstrap. Lecture Notes in Statistics 98. Springer, New York

Chen K, Ying Z, Zhang H, Zhao L (2008) Analysis of least absolute deviation. Biometrika 95:107–122

Guo J, Tian M, Zhu K (2012) New efficient and robust estimation in varying-coefficient models with heteroscedasticity. Stat Sin 22:1075–1101

Jiang R, Zhou ZG, Qian WM, Chen Y (2013) Two step composite quantile regression for single-index models. Comput Stat Data Anal 64:180–191

Jiang R, Zhou ZG, Qian WM, Shao WQ (2012a) Single-index composite quantile regression. J Korean Stat Soc 3:323–332

Jiang R, Qian WM, Zhou ZG (2012b) Variable selection and coefficient estimation via composite quantile regression with randomly censored data. Stat Probab Lett 2:308–317

Jiang R, Yang XH, Qian WM (2012c) Random weighting m-estimation for linear errors-in-variables models. J Korean Stat Soc 41:505–514

Kai B, Li R, Zou H (2011) New efficient estimation and variable selection methods for semiparametric varying-coefficient partially linear models. Ann Stat 39:305–332

Kai B, Li R, Zou H (2010) Local composite quantile regression smoothing: an efficient and safe alternative to local polynomial regression. J R Stat Soc Ser B 72:49–69

Knight K (1998) Limiting distributions for \(l_{1}\) regression estimators under general conditions. Ann Stat 26:755–770

Koenker R (2005) Quantile regression. Cambridge University Press, Cambridge

Pollard D (1991) Asymptotics for least absolute deviation regression estimators. Econom Theory 7:186–199

Praestgaard J, Wellner JA (1993) Exchangeably weighted bootstraps of the general empirical process. Ann Probab 21:2053–2086

Rao CR, Zhao LC (1992) Approximation to the distribution of M-estimates in linear models by randomly weighted bootstrap. Sankhyā A 54:323–331

Rubin DB (1981) The Bayesian bootstrap. Ann Stat 9:130–134

Tang L, Zhou Z, Wu C (2012a) Weighted composite quantile estimation and variable selection method for censored regression model. Stat Probab Lett 3:653–663

Tang L, Zhou Z, Wu C (2012b) Efficient estimation and variable selection for infinite variance autoregressive models. J Appl Math Comput 40:399–413

Wang Z, Wu Y, Zhao LC (2009) Approximation by randomly weighting method in censored regression model. Sci China Ser A 52:561–576

Wu XY, Yang YN, Zhao LC (2007) Approximation by random weighting method for m-test in linear models. Sci China Ser A 50:87–99

Zheng Z (1987) Random weighting method. Acta Math Appl Sin 10:247–253 (In Chinese)

Zou H, Yuan M (2008) Composite quantile regression and the oracle model selection theory. Ann Stat 36:1108–1126

Acknowledgments

The authors would like to thank Dr. Yong Chen for sharing the walking behavior survey data and thank the Editor, Associate Editor and Referees for their helpful suggestions that improved the paper.

Appendix

To prove the main results of this paper, we impose the following technical conditions.

- A1. \(d^2_n=\max _{1\le i \le n}\{X_i^TS_nX_i\}\rightarrow 0\) as \(n\rightarrow \infty \).

- A2. \(S=\lim _{n\rightarrow \infty }S_n/n\) is a \(p\times p\) positive definite matrix. For each \(p\)-vector \(u\),

$$\begin{aligned} \lim _{n\rightarrow \infty }\frac{1}{n}\sum _{i=1}^{n}\int \limits _0^{u_0+X_i^Tu}\sqrt{n}[F(a+t/\sqrt{n})-F(a)]dt =\frac{1}{2}f(a)(u_0,u^T)\left[ \begin{array}{cc} 1&{} 0\\ 0 &{} S\\ \end{array}\right] (u_0,u^T)^T. \end{aligned}$$

- A3. The random weights \(\omega _{1},\ldots ,\omega _{n}\) are i.i.d. with \(P(\omega _{1}\ge 0)=1\) and \(E(\omega _{1})=Var(\omega _{1})=1\), and the sequences \(\{\omega _{i}\}\) and \(\{Y_{i},X_{i}\}\) are independent.

Remark 3

Conditions A1 and A3 are standard in the random weighting method; see Rao and Zhao (1992). Condition A2 is commonly assumed in quantile regression; see Koenker (2005).
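As an illustration of condition A3, one admissible choice of weights is the standard exponential distribution, which is nonnegative with mean and variance both equal to 1. The sketch below is only an example under that assumption; the function name `draw_random_weights` and the use of NumPy are illustrative and not taken from the paper.

```python
import numpy as np

def draw_random_weights(n, seed=0):
    """Draw n i.i.d. Exp(1) weights: nonnegative, mean 1, variance 1.

    Exp(1) is an assumed example; any distribution satisfying A3 would do.
    The generator is separate from the data, so the weights are independent
    of (Y_i, X_i) as A3 requires.
    """
    rng = np.random.default_rng(seed)
    return rng.exponential(scale=1.0, size=n)

weights = draw_random_weights(200_000)
# Empirical check of the moment conditions in A3.
print(weights.min() >= 0.0, weights.mean(), weights.var())
```

With a large sample the empirical mean and variance should both be close to 1, matching \(E(\omega _{1})=Var(\omega _{1})=1\).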

Write

The model (2.1) can be written as

Denote

where \(\beta _0(n)=S_n^{1/2}(\beta _0-b)\) and \(\beta _0\) is the true value.

Lemma 1

Under the conditions of Theorem 2, we have

where \(A^*=-\frac{1}{2n}\sum _{k=1}^{K}f^{-1}(b_{\tau _k})\left[ \sum _{i=1}^{n}\omega _i\{I(\varepsilon _i<b_{\tau _k})-\tau _k\}\right] ^2\).

In particular, when \(\omega _i\equiv 1\), we have

where \(A=-\frac{1}{2n}\sum _{k=1}^{K}f^{-1}(b_{\tau _k})\left[ \sum _{i=1}^{n}\{I(\varepsilon _i<b_{\tau _k})-\tau _k\}\right] ^2\).

Proof

Let \(\hat{\beta }^*(n)-\beta _0(n)=\mathbf{u}_{n}\) and \(\sqrt{n}(\hat{b}_{k}-b_{\tau _{k}})=v_{n,k}\). Then \((v_{n,1},\ldots ,v_{n,K},\mathbf{u}_{n})\) is the minimizer of the following criterion:

To apply the identity (Knight 1998)
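For orientation, the identity of Knight (1998) is commonly stated as \(\rho _\tau (u-v)-\rho _\tau (u)=v[I(u<0)-\tau ]+\int _0^v[I(u\le t)-I(u\le 0)]dt\), where \(\rho _\tau (u)=u[\tau -I(u<0)]\) is the check loss. This is the standard textbook form and is assumed here to be the one intended; it matches the linear and integral terms that appear in the proof. The following sketch checks it numerically on a small grid of values. The helper names are hypothetical and the integral is approximated by a midpoint rule.

```python
import numpy as np

def check_loss(u, tau):
    """Quantile check loss rho_tau(u) = u * (tau - I(u < 0))."""
    return u * (tau - (u < 0))

def knight_rhs(u, v, tau, m=200_000):
    """Right-hand side of Knight's identity, integral by a midpoint rule."""
    t = v * (np.arange(m) + 0.5) / m            # midpoints between 0 and v
    integrand = (u <= t).astype(float) - float(u <= 0)
    integral = v * integrand.mean()             # signed, also valid for v < 0
    return v * (float(u < 0) - tau) + integral

def knight_gap(u, v, tau):
    """Absolute difference between the two sides of the identity."""
    lhs = check_loss(u - v, tau) - check_loss(u, tau)
    return abs(lhs - knight_rhs(u, v, tau))

max_gap = max(
    knight_gap(u, v, tau)
    for u in (-1.3, -0.2, 0.4, 2.0)
    for v in (-1.5, -0.1, 0.7, 2.5)
    for tau in (0.1, 0.5, 0.9)
)
print(max_gap)  # small; only the quadrature error remains
```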

Thus we rewrite \(L^*_{n}\) as follows:

Denote \(L^*_{2n}=\sum _{k=1}^{K}L_{2n}^{*(k)}\), where \(L_{2n}^{*(k)}=\sum _{i=1}^{n}\omega _i\int _{0}^{[v_{k}/ \sqrt{n}+X_{ni}^{T}\mathbf{u}]} [I(\varepsilon _{i}\le b_{\tau _{k}}+t)-I(\varepsilon _{i}\le b_{\tau _{k}})]dt\). By A3, noting that \(\max _{1\le i\le n}\parallel X_{ni}\parallel =d_n\rightarrow 0\) by A1, we have

Hence, \(L_{2n}^{*(k)} \xrightarrow {p}\frac{1}{2}f(b_{\tau _k})(v_{k},\mathbf{u}^T)\left[ \begin{array}{cc} 1&{} 0\\ 0 &{} I_p \end{array} \right] (v_{k},\mathbf{u}^T)^{T}.\) Thus it follows that

Since \(L^*_{n}-\sum _{k=1}^{K}\sum _{i=1}^{n}\omega _i[v_{k}/ \sqrt{n}+X_{ni}^{T}\mathbf{u}][I(\varepsilon _{i}<b_{\tau _{k}})-\tau _{k}]\) converges in probability to the convex function \(\frac{1}{2}\sum _{k=1}^{K}f(b_{\tau _k})(v_{k},\mathbf{u}^T)\left[ \begin{array}{cc} 1&{} 0\\ 0 &{} I_p \end{array} \right] (v_{k},\mathbf{u}^T)^{T}\), it follows from the convexity lemma (Pollard 1991) that the quadratic approximation to \(L^*_{n}\) holds uniformly for \((v_{1},\ldots ,v_{K},\mathbf{u})\) in any compact set, which leads to

Thus, we can obtain

The Lemma is proved.
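To make the object studied in Lemma 1 concrete, the following sketch evaluates a randomly weighted CQR objective. It assumes the usual composite check-loss form of Zou and Yuan (2008), \(\sum _{k=1}^{K}\sum _{i=1}^{n}\omega _i\rho _{\tau _k}(Y_i-b_k-X_i^T\beta )\), with the weights \(\omega _i\) multiplying each observation's loss. The function names and argument layout are illustrative assumptions, not the authors' implementation.

```python
import numpy as np

def check_loss(u, tau):
    """Quantile check loss rho_tau(u) = u * (tau - I(u < 0)), elementwise."""
    u = np.asarray(u, dtype=float)
    return u * (tau - (u < 0))

def weighted_cqr_objective(beta, b, X, y, taus, weights=None):
    """Sum over quantile levels of the weighted check losses.

    beta: common slope vector; b: one intercept per quantile level in taus.
    weights=None corresponds to the unweighted case (all weights equal to 1).
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    if weights is None:
        weights = np.ones(len(y))
    resid = y - X @ np.asarray(beta, dtype=float)
    return sum(
        np.sum(weights * check_loss(resid - b_k, tau_k))
        for b_k, tau_k in zip(b, taus)
    )

# Tiny example: two observations, one covariate, a single level tau = 0.5.
X = [[1.0], [2.0]]
y = [1.0, 3.0]
unweighted = weighted_cqr_objective([1.0], [0.0], X, y, taus=[0.5])
doubled = weighted_cqr_objective([1.0], [0.0], X, y, taus=[0.5],
                                 weights=np.array([2.0, 2.0]))
print(unweighted, doubled)
```

In the tiny example the residuals are 0 and 1, so the unweighted objective is 0.5, and doubling every weight doubles it.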

Now we proceed to prove the theorems.

Proof of Theorem 1

Suppose \(0<q<p\) and let \(K\) be a \(p\times (p-q)\) matrix of rank \((p-q)\) such that \(H^TK=0\) and \(K^T\omega _{n}=0\). Without loss of generality, \(H_{0}\) and \(H_{2,n}\) can be written as

Write

thus,

When \(H_0\) is true, model (A.1) can be written as

where \(\gamma _0(n)=(K^{T}S_nK)^{1/2}\gamma \). Then, replacing \(X_{ni}\) in (A.1) by \(K_n^TX_{ni}\), and using a similar argument as that of Lemma 1, we have

where \(\hat{\gamma }(n)\) is the CQR estimate of \(\gamma _0(n)\) and \(\tilde{\beta }(n)=S_n^{1/2}(\tilde{\beta }-b)=K_n\hat{\gamma }(n)\).

Hence, under the null hypothesis, we have

When \(H_{2,n}\) is true, the model (A.1) can be written as

where \(\gamma _2(n)=(K^{T}S_nK)^{1/2}\gamma +K_n^TS_n^{1/2}\omega _n\) and \(\delta (n)=H_n^TS_n^{1/2}\omega _n\). Then by Lemma 1, we have

Hence, under the local alternative hypotheses, we have

The theorem is thus proved.

Proof of Theorem 2

By Lemma 1, we can obtain

Similarly, under the null and local alternative hypotheses, we can obtain

where \(\hat{\beta }^*(n)=S_n^{1/2}(\hat{\beta }^*-b)\) and \(\tilde{\beta }^*(n)=S_n^{1/2}(\tilde{\beta }^*-b)\). Therefore, we can obtain

By checking the Lindeberg condition, we know that the conditional distribution of \(\sum _{i=1}^{n}(\omega _i-1)H_n^TX_{ni}\sum _{k=1}^{K}\left[ I(\varepsilon _i<b_{\tau _k})-\tau _k\right] \) given \(Y_1,\ldots ,Y_n\) converges to \(N\left( \mathbf{0}, A\left[ \sum _{k=1}^{K}f(b_{\tau _k})\right] ^{-2}\cdot I_q\right) \). Therefore, the conditional distribution of \(M_n^*\) given \(Y_1,\ldots ,Y_n\) converges to \(\chi ^2_q/\left[ 2A^{-1}\sum _{k=1}^{K}f(b_{\tau _k})\right] \). This completes the proof of Theorem 2.

Cite this article

Jiang, R., Qian, WM. & Li, JR. Testing in linear composite quantile regression models. Comput Stat 29, 1381–1402 (2014). https://doi.org/10.1007/s00180-014-0497-y

DOI: https://doi.org/10.1007/s00180-014-0497-y