Abstract

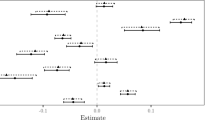

In this article, we aim to reduce the computational complexity of the recently proposed composite quantile regression (CQR). We propose a new regression method, called infinitely composite quantile regression (ICQR), which avoids having to choose the number of equally spaced quantile positions. Unlike CQR, which combines a finite number of quantile positions, the proposed ICQR method combines a continuum of infinitely many quantile positions. We show that the ICQR criterion can be readily transformed into a linear programming problem. Furthermore, computing the ICQR estimate takes far less time than computing the CQR estimate, though slightly more than quantile regression. We also establish the oracle properties of the penalized ICQR. Simulations are conducted to compare different estimators, and a real data analysis illustrates the performance of the proposed method.

Acknowledgements

The work was partially supported by the Major Research Projects of Philosophy and Social Science of the Chinese Ministry of Education (No. 15JZD015), the National Natural Science Foundation of China (No. 11271368), the Major Program of Beijing Philosophy and Social Science Foundation of China (No. 15ZDA17), the Specialized Research Fund for the Doctoral Program of Higher Education of the Ministry of Education of China (Grant No. 20130004110007), the Key Program of the National Philosophy and Social Science Foundation (Grant No. 13AZD064), the Major Project of Humanities Social Science Foundation of the Ministry of Education (No. 15JJD910001), the Fundamental Research Funds for the Central Universities, and the Research Funds of Renmin University of China (No. 15XNL008).

Appendices

Appendix A: Comparison of computational complexity between ICQR and CQR

For the ICQR estimation, the optimization problem (7) can be cast as a linear programming problem. First, we introduce the following slack variables:

Denote \(\varvec{\beta }^+=(\beta _1^+,\ldots ,\beta _p^+)^T\) and \(\varvec{\beta }^-=(\beta _1^-,\ldots ,\beta _p^-)^T\). With the aid of slack variables, solving the optimization problem (7) is equivalent to solving the following linear programming problem:

where \(\varvec{z}=\left( \left( \varvec{\beta }^+\right) ^T,\left( \varvec{\beta }^-\right) ^T,\left( \widetilde{\varvec{b}}^+\right) ^T,\left( \widetilde{\varvec{b}}^-\right) ^T,\left( \varvec{u}^+\right) ^T,\left( \varvec{u}^-\right) ^T\right) ^T\), \(\varvec{u}^+=\left( u_1^+,\ldots ,u_n^+\right) ^T\), \(\varvec{u}^-=\left( u_1^-,\ldots ,u_n^-\right) ^T\), \(\widetilde{\varvec{b}}^+=\left( \widetilde{b}_1^+,\ldots ,\widetilde{b}_n^+\right) ^T\), and \(\widetilde{\varvec{b}}^-=\left( \widetilde{b}_1^-,\ldots ,\widetilde{b}_n^-\right) ^T\).
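The slack variables rest on the usual positive/negative-part split: any real number \(a\) can be written as \(a=a^+-a^-\) with \(a^+=\max (a,0)\ge 0\) and \(a^-=\max (-a,0)\ge 0\), and with this canonical choice \(|a|=a^++a^-\). Free parameters such as \(\varvec{\beta }\) and \(\widetilde{\varvec{b}}\) are split this way because every LP variable must be nonnegative, and residuals are split so that absolute-value or check-loss terms become linear in \(\varvec{u}^+\) and \(\varvec{u}^-\). The Python sketch below checks this device for the quantile check loss \(\rho _\tau (u)=u\{\tau -I(u<0)\}\), which becomes \(\tau u^++(1-\tau )u^-\). It illustrates the device only and does not reproduce the objective of (10); the helper names are hypothetical.

```python
def split_pos_neg(a):
    """Split each entry into nonnegative parts so that a = a_plus - a_minus."""
    a_plus = [max(x, 0.0) for x in a]
    a_minus = [max(-x, 0.0) for x in a]
    return a_plus, a_minus


def check_loss(u, tau):
    """Quantile check loss rho_tau(u) = u * (tau - 1{u < 0})."""
    return u * (tau - (1.0 if u < 0 else 0.0))


def check_loss_linear(u_plus, u_minus, tau):
    """The same loss written linearly in the nonnegative slack parts."""
    return tau * u_plus + (1.0 - tau) * u_minus


residuals = [1.5, -0.25, 0.0, -3.0]
u_plus, u_minus = split_pos_neg(residuals)
for r, up, um in zip(residuals, u_plus, u_minus):
    # the split reproduces the residual and its absolute value
    assert up - um == r and up + um == abs(r)
    # the check loss is linear in (u+, u-) for every quantile level
    for tau in (0.1, 0.5, 0.9):
        assert abs(check_loss(r, tau) - check_loss_linear(up, um, tau)) < 1e-12
```

Because both slack parts carry nonnegative coefficients in the objective, an optimal LP solution never makes both \(u_i^+\) and \(u_i^-\) positive, so the canonical split is the one the solver returns.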

Similarly, for the CQR estimation, minimizing the criterion (3) can also be cast as a linear programming problem. Let

Then minimizing (3) is equivalent to solving the following linear programming problem:

where \(\varvec{z}^*=\left( \left( \varvec{\beta }^+\right) ^T,\left( \varvec{\beta }^-\right) ^T,\left( \varvec{b}^{*+}\right) ^T,\left( \varvec{b}^{*-}\right) ^T,\left( \varvec{u}^{*+}\right) ^T,\left( \varvec{u}^{*-}\right) ^T\right) ^T,\, \varvec{u}^{*+}=\left( u_{11}^{*+},\ldots ,u_{n1}^{*+},\ldots , u_{1K}^{*+},\ldots ,u_{nK}^{*+}\right) ^T\), \(\varvec{u}^{*-}=\left( u_{11}^{*-},\ldots ,u_{n1}^{*-},\ldots ,u_{1K}^{*-},\ldots ,u_{nK}^{*-}\right) ^T\), \(\varvec{b}^{*+}=\left( b_1^{*+},\ldots ,b_K^{*+}\right) ^T\), and \(\varvec{b}^{*-}=\left( b_1^{*-},\ldots ,b_K^{*-}\right) ^T\).
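To make the ordering in \(\varvec{z}^*\) concrete, the Python sketch below computes where each block sits inside \(\varvec{z}^*\) and the position of \(u_{ik}^{*+}\) or \(u_{ik}^{*-}\). Within each residual block the observation index \(i\) runs fastest, as in the definition of \(\varvec{u}^{*+}\) above. The function names are hypothetical; indices are 0-based in the code and 1-based in the text.

```python
def zstar_blocks(n: int, p: int, K: int) -> dict[str, slice]:
    """Slices of the blocks of z* = (beta+, beta-, b*+, b*-, u*+, u*-)."""
    sizes = [("beta_plus", p), ("beta_minus", p),
             ("b_plus", K), ("b_minus", K),
             ("u_plus", n * K), ("u_minus", n * K)]
    blocks, start = {}, 0
    for name, size in sizes:
        blocks[name] = slice(start, start + size)
        start += size
    return blocks


def u_index(i: int, k: int, n: int, p: int, K: int, plus: bool = True) -> int:
    """0-based position of u*_{ik}^+ (or u*_{ik}^- if plus=False) in z*.

    i (observation) and k (quantile position) are 1-based as in the text;
    within a block the order is (u_11, ..., u_n1, ..., u_1K, ..., u_nK).
    """
    block = zstar_blocks(n, p, K)["u_plus" if plus else "u_minus"]
    return block.start + (k - 1) * n + (i - 1)


print(u_index(2, 1, n=3, p=2, K=2))  # → 9
```

Keeping the layout in one helper makes it easy to assemble the objective vector and constraint matrix of (11) consistently, whatever LP solver is used.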

Clearly, if \(K>1\), the linear program (10) is smaller than (11): \(\varvec{z}\) has \(2p+4n\) components, whereas \(\varvec{z}^*\) has \(2p+2K+2nK\). The gap widens as \(K\) grows, so for moderately large \(K\), ICQR has a clear computational advantage over CQR whether the simplex or an interior-point algorithm is used.
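The size comparison can be tabulated directly. The Python sketch below takes the numbers of variables from the definitions of \(\varvec{z}\) and \(\varvec{z}^*\); the numbers of constraints (one equality constraint per residual pair, i.e. \(n\) for ICQR and \(nK\) for CQR) are an assumption, since the constraint matrices of (10) and (11) are not reproduced here. The function names are hypothetical.

```python
def icqr_lp_size(n: int, p: int) -> tuple[int, int]:
    """(variables, constraints) of the ICQR linear program (10)."""
    # z = (beta+, beta-, b~+, b~-, u+, u-): 2p + 2n + 2n variables
    n_vars = 2 * p + 2 * n + 2 * n
    n_cons = n  # assumption: one equality constraint per observation
    return n_vars, n_cons


def cqr_lp_size(n: int, p: int, K: int) -> tuple[int, int]:
    """(variables, constraints) of the CQR linear program (11)."""
    # z* = (beta+, beta-, b*+, b*-, u*+, u*-): 2p + 2K + 2nK variables
    n_vars = 2 * p + 2 * K + 2 * n * K
    n_cons = n * K  # assumption: one constraint per (observation, quantile) pair
    return n_vars, n_cons


if __name__ == "__main__":
    n, p = 200, 8
    print("ICQR:", icqr_lp_size(n, p))
    for K in (1, 2, 9, 19):
        print(f"CQR, K={K}:", cqr_lp_size(n, p, K))
```

With \(n=200\) and \(p=8\), the ICQR program has 816 variables, while CQR with \(K=2\) already has 820, and the CQR counts grow linearly in \(K\). For \(K=1\) the CQR program (418 variables) is the smaller one, which is why the comparison requires \(K>1\).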

Appendix B: Proof of Theorem 1

For the ICQR estimator, we consider the following criterion:

Write \(\widehat{\varvec{\beta }}_n=\widehat{\varvec{\beta }}^{\text {ICQR}}\) and \(\widehat{\varvec{b}}_n=\widehat{\widetilde{\varvec{b}}}\). Let \(\varvec{\delta }_n=\sqrt{n}(\widehat{\varvec{\beta }}_n-\varvec{\beta })\) and \(\varvec{v}_n=\sqrt{n}(\widehat{\varvec{b}}_n-\widetilde{\varvec{b}})=(v_{n,1},\ldots ,v_{n,n})^T\), and denote \(\varvec{\theta }_n=(\varvec{\delta }_n^T,\varvec{v}_n^T)^T\). Let \(\varvec{\theta }=(\varvec{\delta }^T,\varvec{v}^T)^T\), with \(\varvec{v}=(v_1,\ldots ,v_n)^T\), be a generic vector of the same dimension as \(\varvec{\theta }_n\). Minimizing expression (12) is equivalent to minimizing

Obviously, the function \(Z_n(\varvec{\theta })\) is convex and \(\varvec{\theta }_n\) is its minimizer. Noting that

where \(\text {sgn}(\cdot )\) denotes the sign function, we have

Under condition A2, the \(\widetilde{\varepsilon }_i\)’s are also independent and identically distributed. Moreover, \(\widetilde{\varepsilon }_i\) has a uniformly bounded and strictly positive density, denoted by \(\widetilde{f}(\cdot )\). Therefore, the convex function \(M(t)=E_{\varepsilon }[w_i(|\widetilde{\varepsilon }_i-t|-|\widetilde{\varepsilon }_i|)]\) has a unique minimizer at zero. Since \(M\) is differentiable with \(M'(0)=-E_{\varepsilon }[w_i\text {sgn}(\widetilde{\varepsilon }_i)]\), it follows that \(E_{\varepsilon }[w_i\text {sgn}(\widetilde{\varepsilon }_i)]=0\). On the other hand,

Under condition A1(ii), we have \(\lim _{n\rightarrow \infty }\varvec{x}_i/n=\varvec{0}\), \(i=1,\ldots ,n\). Moreover, by condition A1(i), \(E_{\varepsilon }Z_n(\varvec{\theta })=w_0\widetilde{f}(0)(\varvec{\delta }^T,v_i)D_1(\varvec{\delta }^T,v_i)^T+o(1)\) with \(D_1=\left( \begin{array}{cc}D&{}\varvec{0}\\ \varvec{0}&{}1\end{array}\right) \). Let

and

Noting that \(E_{\varepsilon }[w_i\text {sgn}(\widetilde{\varepsilon }_i)]=0\), we have

Now we show that \(\sum _{i=1}^n\left[ R_{in}(\varvec{\theta })-E_{\varepsilon }R_{in}(\varvec{\theta })\right] =o_p(1)\). By Chebyshev's inequality, it suffices to show that \(\text {var}_{\varepsilon }\left\{ \sum _{i=1}^n\left[ R_{in}(\varvec{\theta })-E_{\varepsilon }R_{in}(\varvec{\theta })\right] \right\} =o_p(1)\). To this end, we use the inequality

we get

The last inequality holds since \(w_i^2\le 1\). Under Assumption A1(ii), we have

Therefore, we have

The limiting objective function above is strictly convex (provided \(D_1\) is positive definite), which guarantees the uniqueness of its minimizer. We have

Under Assumption A1(i), and using the equality \(\text {var}_{\varepsilon }[w_i\text {sgn}(\widetilde{\varepsilon }_i)]=E_{\varepsilon }(w_1^2)=1/12\), which holds because \(\text {sgn}(\widetilde{\varepsilon }_i)^2=1\) almost surely and \(E_{\varepsilon }[w_i\text {sgn}(\widetilde{\varepsilon }_i)]=0\), we have \(E_{\varepsilon }\varvec{W}_n=\varvec{0}\) and \(\text {var}_{\varepsilon }\varvec{W}_n\rightarrow D_1/12\) as \(n\rightarrow \infty \). By the Cramér–Wald device and the central limit theorem, \(\varvec{W}_n\mathop {\rightarrow }\limits ^{\mathcal {L}}\mathcal {N}\left( \varvec{0},D_1/12\right) \). Thus,

Combining all the results for \(i=1,\ldots ,n\) completes the proof.
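Arguments of this type usually handle differences of absolute values with Knight's identity: for \(x\ne 0\), \(|x-y|-|x|=-y\,\text {sgn}(x)+2\int _0^y\left[ I(x\le s)-I(x\le 0)\right] ds\). We assume, without being able to confirm it from the omitted displays, that this is the identity invoked after "Noting that" in the proof, applied with \(x=\widetilde{\varepsilon }_i\); its first term is presumably the source of the terms \(w_i\text {sgn}(\widetilde{\varepsilon }_i)\) used above. The Python sketch below checks the identity numerically, evaluating the integral in closed form; the function names are hypothetical.

```python
import random


def sgn(x: float) -> float:
    """Sign function; the identity is stated for x != 0."""
    return 1.0 if x > 0 else -1.0


def knight_rhs(x: float, y: float) -> float:
    """-y*sgn(x) + 2 * int_0^y [1{x <= s} - 1{x <= 0}] ds, in closed form."""
    if y >= 0:
        # length of {s in [0, y] : s >= x}, minus y * 1{x <= 0}
        integral = max(0.0, y - max(x, 0.0)) - (y if x <= 0 else 0.0)
    else:
        # int_0^y = -int_y^0; length of {s in [y, 0] : s >= x}, minus |y| * 1{x <= 0}
        integral = -(max(0.0, -max(x, y)) - (-y if x <= 0 else 0.0))
    return -y * sgn(x) + 2.0 * integral


def max_identity_error(trials: int = 10_000, seed: int = 0) -> float:
    """Largest |LHS - RHS| over random (x, y) pairs in [-5, 5]^2."""
    rng = random.Random(seed)
    worst = 0.0
    for _ in range(trials):
        x = rng.uniform(-5, 5) or 1.0  # replace the excluded case x == 0
        y = rng.uniform(-5, 5)
        lhs = abs(x - y) - abs(x)
        worst = max(worst, abs(lhs - knight_rhs(x, y)))
    return worst


print(max_identity_error())  # should be at floating-point rounding level
```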

Cite this article

Wu, Y., Tian, M. An effective method to reduce the computational complexity of composite quantile regression. Comput Stat 32, 1375–1393 (2017). https://doi.org/10.1007/s00180-017-0749-8
