Summary

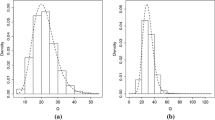

In a structural errors-in-variables model the true regressors are treated as stochastic variables that can be observed only with an additional measurement error. The distribution of the latent predictor variables and the distribution of the measurement errors therefore play an important role in the analysis of such models. In this article the conventional normality assumptions for these distributions are extended in two directions: the distribution of the true regressor variable is assumed to be a mixture of normal distributions, and the measurement errors, while again taken to be normally distributed, are allowed to be heteroscedastic. It is shown how an EM algorithm based solely on the error-prone observations of the latent variable can be used to find approximate ML estimates of the parameters of the mixture distribution. The procedure is illustrated with a Swiss data set of regional radon measurements, in which the mean concentrations of the regions serve as proxies for the true regional radon averages. The differing variability of the measurements within the regions motivated this approach.
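As a minimal sketch of the likelihood behind this setup, assume the additive error model W_i = X_i + U_i, where X_i follows the normal mixture with proportions α_k, means θ_k and variances ς_k², and U_i ~ N(0, σ_i²) is independent of X_i with a known, observation-specific variance σ_i² (this notation is chosen here for illustration). Because a normal component convolved with a normal error is again normal, component k of W_i has variance ς_k² + σ_i². The function names below are hypothetical.

```python
import math


def normal_pdf(x, mean, var):
    """Density of N(mean, var) at x."""
    return math.exp(-0.5 * (x - mean) ** 2 / var) / math.sqrt(2.0 * math.pi * var)


def observed_loglik(w, err_var, alpha, theta, varsigma2):
    """Log-likelihood of the error-prone observations w (assumed model).

    X_i ~ sum_k alpha[k] * N(theta[k], varsigma2[k]) and W_i = X_i + U_i with
    U_i ~ N(0, err_var[i]), err_var[i] known and U_i independent of X_i.
    Each component of W_i is then N(theta[k], varsigma2[k] + err_var[i]).
    """
    total = 0.0
    for wi, s2 in zip(w, err_var):
        # Mixture density of W_i: the error variance is added to every component.
        dens = sum(
            a * normal_pdf(wi, t, v + s2)
            for a, t, v in zip(alpha, theta, varsigma2)
        )
        total += math.log(dens)
    return total
```

Heteroscedasticity enters only through `err_var`: observations measured less precisely contribute flatter component densities.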

Acknowledgement

This research was partly supported by the Deutsche Forschungsgemeinschaft (German Research Council). I would like to thank Ch. E. Minder for discussions and for introducing the problem. I would also like to thank an anonymous referee for directing my attention to some very general problems in the estimation of mixture models. Helpful discussions with H. Schneeweiss and R. Wolf are gratefully acknowledged.

Appendix

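A1 Maximization of (7) with respect to α1,…,αm: To maximize (7) with respect to the proportion parameters α1,…,αm under the restriction \(\sum\nolimits_{k = 1}^m \alpha_k = 1\), we maximize the Lagrange function obtained by adding \(\lambda_L\big(\sum\nolimits_{k = 1}^m \alpha_k - 1\big)\) to (7), where \(\lambda_L\) is a Lagrange multiplier.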

Partial differentiation yields the conditions

i) \(-\lambda_L^{-1}\sum_{i=1}^n p_k^{(c)}(W_i) = \alpha_k^{(n)}\) for \(k = 1, \ldots, m\),

which, inserted into the restriction, lead to the equation

ii) \(\sum_{i=1}^n \sum_{k=1}^m p_k^{(c)}(W_i) = -\lambda_L\).

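Replacing the weights \(p_k^{(c)}(W_i)\) in ii) by their expressions (6), one sees that \(-\lambda_L = n\), since by (6) \(\sum_{k=1}^m p_k^{(c)}(W_i) = 1\) for every \(i = 1, \ldots, n\). Plugging this result into i) yields the solutions (8), \(\alpha_k^{(n)} = n^{-1}\sum_{i=1}^n p_k^{(c)}(W_i)\) for \(k = 1, \ldots, m\).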
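The A1 argument can be checked numerically. This sketch assumes that the weights (6) are the usual posterior component probabilities p_k(W_i) = α_k f_k(W_i) / Σ_j α_j f_j(W_i), with f_k taken to be the N(θ_k, ς_k² + σ_i²) density from an additive normal error model; the function names are hypothetical.

```python
import math


def normal_pdf(x, mean, var):
    """Density of N(mean, var) at x."""
    return math.exp(-0.5 * (x - mean) ** 2 / var) / math.sqrt(2.0 * math.pi * var)


def e_step_weights(w, err_var, alpha, theta, varsigma2):
    """Assumed form of the weights (6): posterior component probabilities.

    Returns one row per observation; row i holds p_1(W_i), ..., p_m(W_i).
    """
    rows = []
    for wi, s2 in zip(w, err_var):
        num = [a * normal_pdf(wi, t, v + s2) for a, t, v in zip(alpha, theta, varsigma2)]
        tot = sum(num)
        rows.append([x / tot for x in num])
    return rows


def update_alpha(rows):
    """Solutions (8): alpha_k^(n) = n^{-1} * sum_i p_k(W_i)."""
    n, m = len(rows), len(rows[0])
    # Every row sums to one, so sum_i sum_k p_k(W_i) = n, i.e. -lambda_L = n.
    return [sum(row[k] for row in rows) / n for k in range(m)]
```

Because each row is normalized, the updated proportions automatically satisfy the restriction Σ α_k = 1 without any explicit projection.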

A2 Maximization of (7) with respect to θk and ςk under a homoscedastic measurement error model: In the case of a homoscedastic measurement error model, that is \({U_i} \sim N(0,\sigma _U^2)\) for i = 1,…, n, the solutions \(\theta _k^{(n)}\) and \(\varsigma _k^{(n)}\) of the maximization problem (9) follow directly from the equations (10). For k = 1,…,m the next approximations \(\theta _k^{(n)}\) and \(\varsigma _k^{(n)}\) (or, equivalently, \(\varsigma _k^{2(n)}\)) are given by
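As an illustration of why closed-form updates exist in the homoscedastic case, assume that component k of W_i is N(θ_k, ς_k² + σ_U²) and that the weighted objective for component k is the p_k(W_i)-weighted normal log-likelihood. Its maximizers are then the weighted mean θ_k = Σ_i p_k(W_i) W_i / Σ_i p_k(W_i) and the weighted variance of W minus the known error variance. This is a sketch under those assumptions with hypothetical names; the truncation of ς_k² at zero is our own safeguard and is not taken from the source.

```python
def m_step_homoscedastic(w, weights, sigma_u2):
    """Closed-form updates of theta_k and varsigma_k^2 when every U_i ~ N(0, sigma_u2).

    weights[i][k] is the E-step weight p_k(W_i) of observation i for component k.
    """
    m = len(weights[0])
    theta_new, varsigma2_new = [], []
    for k in range(m):
        pk = [row[k] for row in weights]
        nk = sum(pk)
        # Weighted mean of W within component k.
        theta_k = sum(p * wi for p, wi in zip(pk, w)) / nk
        # Weighted variance of W within component k estimates varsigma_k^2 + sigma_u2.
        s2_k = sum(p * (wi - theta_k) ** 2 for p, wi in zip(pk, w)) / nk
        theta_new.append(theta_k)
        # Subtract the known error variance; truncate at the boundary varsigma_k^2 = 0.
        varsigma2_new.append(max(s2_k - sigma_u2, 0.0))
    return theta_new, varsigma2_new
```

The truncation gives the constrained maximizer because, as a function of the total variance ς_k² + σ_U², the weighted log-likelihood increases up to the weighted variance and decreases beyond it.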

A3 Computation of the matrices Hk(θk, ςk): The matrices Hk(θk, ςk) of second derivatives of qk(θk, ςk), used in the Newton approximation within the M-step of the algorithm, are given by

with the elements
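To show how such a Hessian enters a Newton step, the following sketch assumes that qk(θk, ςk) is, up to constants, the weighted normal log-likelihood Σ_i p_k(W_i) [−½ log v_i − r_i² / (2 v_i)] with v_i = ς_k² + σ_i² and r_i = W_i − θ_k, where σ_i² are known heteroscedastic error variances. That form is an assumption made here, not a reproduction of the paper's (9); the gradient and Hessian elements in the code are derived from it, and `newton_step` is a hypothetical helper.

```python
def newton_step(w, err_var, pk, theta, varsigma):
    """One Newton-Raphson step for an assumed q_k(theta, varsigma).

    pk[i] is the E-step weight p_k(W_i); err_var[i] is the known error variance.
    """
    g_t = g_s = h_tt = h_ts = h_ss = 0.0
    s2 = varsigma ** 2
    for wi, e2, p in zip(w, err_var, pk):
        v = s2 + e2          # total variance of W_i in component k
        r = wi - theta       # residual
        g_t += p * r / v                                   # dq/dtheta
        g_s += p * varsigma * (r * r - v) / v ** 2         # dq/dvarsigma
        h_tt += -p / v                                     # d2q/dtheta2
        h_ts += -2.0 * p * varsigma * r / v ** 2           # d2q/dtheta dvarsigma
        h_ss += p * ((r * r - v) / v ** 2 + 2.0 * s2 * (v - 2.0 * r * r) / v ** 3)
    # Newton update (theta, varsigma) - H^{-1} g with the 2x2 inverse written out.
    # No step-size control or definiteness check; a practical version would add both.
    det = h_tt * h_ss - h_ts ** 2
    d_t = (h_ss * g_t - h_ts * g_s) / det
    d_s = (-h_ts * g_t + h_tt * g_s) / det
    return theta - d_t, varsigma - d_s
```

With equal error variances and unit weights, iterating this step should reproduce the closed-form homoscedastic solution (weighted mean and weighted variance minus the error variance), which is a convenient sanity check.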

About this article

Cite this article

Thamerus, M. Fitting a Mixture Distribution to a Variable Subject to Heteroscedastic Measurement Errors. Computational Statistics 18, 1–17 (2003). https://doi.org/10.1007/s001800300129
