Abstract

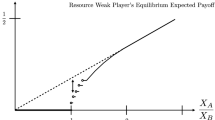

The Colonel Blotto game is one of the most classical zero-sum games, with diverse applications in auctions, political elections, etc. We consider the discrete two-battlefield Colonel Blotto Game, a basic case that has not yet been completely characterized. We study three scenarios where at least one player’s resources are indivisible (discrete), and compare them with a benchmark scenario where the resources of both players are arbitrarily divisible (continuous). We present the equilibrium values for all three scenarios, and provide a complete equilibrium characterization for the scenario where both players’ resources are indivisible. Our main finding is that, somewhat surprisingly, the distinction between continuous and discrete strategy spaces generally has no effect on players’ equilibrium values. In some special cases, however, the larger continuous strategy space when resources are divisible does bring the corresponding player a higher equilibrium value than when resources are indivisible, and this effect is more significant for the stronger player who possesses more resources than for the weaker player.


Notes

We remind the reader that, generally, a strategy may be more complicated than an action. Since the model addressed in this paper is static, a strategy is equivalent to an action, and hence we do not distinguish between a strategy and an action.

We note that Hart’s Proposition 10 is problematic when \(B=m\). For instance, if \(A=2B+1\), then \((0.5I_B+0.5I_{B+1},\ 0.5I_0+0.5I_{B})\) is an NE, so the equilibrium value is 3/4. However, the upper bound in Proposition 10 is 7/12, which is smaller than 3/4.

When \(\Delta = 2\), r takes two possible values, 0 and 1, with \(r=1\) falling into the large-remainder case that will be discussed in Theorem 6.

References

Ahmadinejad A, Dehghani S, Hajiaghayi M, Lucier B, Mahini H, Seddighin S (2019) From duels to battlefields: computing equilibria of Blotto and other games. Math Oper Res 44(4):1304–1325

Alpern S, Howard J (2017) Winner-take-all games: the strategic optimisation of rank. Oper Res 65(5):1165–1176

Alpern S, Howard J (2019) A short solution to the many-player silent duel with arbitrary consolation prize. Eur J Oper Res 273(2):646–649

Amir N (2018) Uniqueness of optimal strategies in Captain Lotto games. Internat J Game Theory 47(1):55–101

Apostol TM (2013) Introduction to analytic number theory. Springer Science & Business Media

Behnezhad S, Dehghani S, Derakhshan M, Hajiaghayi M, Seddighin S (2022) Fast and simple solutions of Blotto games. Oper Res 71(2):506–516

Boix-Adserà E, Edelman BL, Jayanti S (2021) The multiplayer Colonel Blotto game. Games Econ Behav 129:15–31

Borel E (1921) La théorie du jeu et les équations intégrales à noyau symétrique. Comptes Rendus l’Acad Sci 173:1304–1308

Borel E (1953) The theory of play and integral equations with skew symmetric kernels. Econometrica 21(1):97–100

Borel E, Ville J (1938) Applications de la théorie des probabilités aux jeux de hasard. Paris: Gauthier-Villars

Cheng Y, Du D, Han Q (2018) A hashing power allocation game in cryptocurrencies. In: Deng X (ed) Algorithmic game theory, Cham, Springer International Publishing, pp 226–238. https://doi.org/10.1007/978-3-319-99660-8_20. (ISBN: 978-3-319-99660-8)

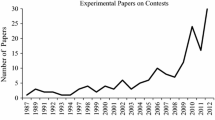

Dechenaux E, Kovenock D, Sheremeta RM (2015) A survey of experimental research on contests, all-pay auctions and tournaments. Exp Econ 18(4):609–669

Gross O, Wagner R (1950) A continuous Colonel Blotto game. RAND Res Memorandum RM-408(1)

Hart S (2008) Discrete Colonel Blotto and General Lotto games. Internat J Game Theory 36(3):441–460

Hart S (2016) Allocation games with caps: from Captain Lotto to all-pay auctions. Internat J Game Theory 45(1–2):37–61

Kovenock D, Roberson B (2012) Coalitional Colonel Blotto games with application to the economics of alliances. J Public Econ Theory 14(4):653–676

Kovenock D, Roberson B (2021) Generalizations of the General Lotto and Colonel Blotto games. Econ Theor 71(3):997–1032

Li X, Zheng J (2021) Even-split strategy in sequential Colonel Blotto games. Available at SSRN: https://ssrn.com/abstract=3947995. Accessed 19 April 2023

Macdonell ST, Mastronardi N (2015) Waging simple wars: a complete characterization of two-battlefield Blotto equilibria. Econ Theor 58(1):183–216

Dziubiński M (2013) Non-symmetric discrete General Lotto games. Internat J Game Theory 42(4):801–833

Powell R (2009) Sequential, nonzero-sum Blotto: allocating defensive resources prior to attack. Games Econ Behav 67(2):611–615

Roberson B (2006) The Colonel Blotto game. Econ Theor 29(2):1–24

Roberson B (2010) Allocation games. Wiley Encycl Oper Res Manag Sci. https://doi.org/10.1002/9780470400531.eorms1022. Accessed 19 April 2023

Thomas C (2018) N-dimensional Blotto game with heterogeneous battlefield values. Econ Theor 6:1–36

Washburn A (2013) OR forum-Blotto politics. Oper Res 61(5):532–543


Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Z. Cao acknowledges financial support from the National Natural Science Foundation of China (Nos. 71922003, 71871009, 72271016) and the Beijing Natural Science Foundation (No. Z22000). X. Yang acknowledges financial support from the National Natural Science Foundation of China (No. 72192800) and the National Key Research Program (No. 2018AAA0101000).

Appendices

Appendix A: Proofs in Sect. 4

A.1 Proof of Theorem 2

Proof

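(i) When \(\Delta =1\) and \(B\ge 2\), we show that the following strategy profile is an NE:

$$\begin{aligned} {\mathcal {X}}^*\sim \frac{1}{A+1}\sum _{i=0}^{A}I_i,\qquad {\mathcal {Y}}^*\sim \frac{2}{A+1}I_0+\frac{1}{A+1}\sum _{i=1}^{B-2}I_{i+0.5}+\frac{2}{A+1}I_B. \end{aligned}$$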

When \(B=2\) (so that \(A=3\)), the middle term \(\frac{1}{A+1}\sum _{i=1}^{B-2}I_{i+0.5}\) is an empty sum and is taken to be 0, so that \({\mathcal {Y}}^*\sim \frac{1}{2}I_0+\frac{1}{2}I_2\).

On the one hand, for each pure strategy \({\mathcal {Y}}\in [0, B]\) of Player 2, we distinguish two cases.

(1) When \({\mathcal {Y}}\in [B]\), i.e., Player 2 takes an integral strategy, Player 1 cannot win both battlefields. The probability that Player 1 gets one win and one draw (when \({\mathcal {X}}^*={\mathcal {Y}}\) or \({\mathcal {X}}^*={\mathcal {Y}}+1\)) is \(2/(A+1)\), and the probability of one win and one loss is \((A-1)/(A+1)\). Therefore, \(H({\mathcal {X}}^*,{\mathcal {Y}})=1/(A+1)\).

(2) When \({\mathcal {Y}}\notin [B]\), i.e., Player 2 takes a fractional strategy, a draw is impossible. Player 1 gets two wins with probability \(1/(A+1)\) (when \({\mathcal {X}}^*=\lceil {\mathcal {Y}}\rceil\)), and one win and one loss with probability \(A/(A+1)\). Therefore, \(H({\mathcal {X}}^*,{\mathcal {Y}})=1/(A+1)\).

On the other hand, for each pure strategy \({\mathcal {X}}\in [A]\) of Player 1, we distinguish two cases.

(1) When \({\mathcal {X}}\in \{0,1,B,B+1\}\), Player 1 gets one win and one draw with probability \(2/(A+1)\), and one win and one loss with probability \((A-1)/(A+1)\); hence \(H({\mathcal {X}},{\mathcal {Y}}^*)=1/(A+1)\).

(2) When \(1<{\mathcal {X}}<B\), Player 1 gets two wins with probability \(1/(A+1)\) (which happens when \({\mathcal {Y}}={\mathcal {X}}-0.5\)), and one win and one loss with probability \(A/(A+1)\); hence \(H({\mathcal {X}},{\mathcal {Y}}^*)=1/(A+1)\).

In summary, \(H({\mathcal {X}}^*,{\mathcal {Y}})=H({\mathcal {X}},{\mathcal {Y}}^*)=1/(A+1)\) for all \({\mathcal {Y}}\in [0, B]\) and all \({\mathcal {X}}\in [A]\), implying that \(({\mathcal {X}}^*,{\mathcal {Y}}^*)\) is indeed an NE.

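Moreover, when \(\Delta = 1\) and \(B=1\), namely \(A=2\) and \(B=1\), we show that the following profile constitutes an NE:

$$\begin{aligned} {\mathcal {X}}^*\sim I_1,\qquad {\mathcal {Y}}^*\sim 0.5I_0+0.5I_1. \end{aligned}$$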

For each pure strategy \({\mathcal {Y}}\in [0,1]\) of Player 2, we distinguish two cases.

(1) When \({\mathcal {Y}}\in \{0,1\}\), Player 1 gets one win and one draw; hence \(H({\mathcal {X}}^*,{\mathcal {Y}})=1/2\).

(2) When \(0<{\mathcal {Y}}<1\), Player 1 wins both battlefields, i.e., \(H({\mathcal {X}}^*,{\mathcal {Y}})=1\).

For each pure strategy \({\mathcal {X}}\in [2]\) of Player 1, we distinguish two cases.

(1) When \({\mathcal {X}}\in \{0,2\}\), Player 1 gets one win and one draw with probability 1/2, and one win and one loss with probability 1/2; hence \(H({\mathcal {X}},{\mathcal {Y}}^*)=1/4\).

(2) When \({\mathcal {X}} = 1\), Player 1 gets one win and one draw; hence \(H({\mathcal {X}},{\mathcal {Y}}^*)=1/2\).

In summary, \(H({\mathcal {X}}^*,{\mathcal {Y}})\ge 1/2\) for all \({\mathcal {Y}}\in [0, 1]\) and \(H({\mathcal {X}},{\mathcal {Y}}^*)\le 1/2\) for all \({\mathcal {X}}\in [2]\), implying that \(({\mathcal {X}}^*,{\mathcal {Y}}^*)\) is indeed an NE, bringing a value of 1/2.
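Both equilibria in part (i) can be spot-checked with exact arithmetic. The sketch below is our own illustration, not the authors' code. It assumes the payoff implied by the case analysis: with \(d={\mathcal X}-{\mathcal Y}\), Player 1 receives 1 (two wins) if \(0<d<\Delta\), 1/2 (one win and one draw) if \(d\in\{0,\Delta\}\), and 0 (one win and one loss) otherwise. The profiles are the ones analysed above: for \(B\ge 2\), \({\mathcal X}^*\) uniform on \([A]\) and \({\mathcal Y}^*\) placing \(2/(A+1)\) on 0 and on B and \(1/(A+1)\) on each \(i+0.5\), \(1\le i\le B-2\); for \(B=1\), \({\mathcal X}^*\sim I_1\) and \({\mathcal Y}^*\sim 0.5I_0+0.5I_1\). Because \({\mathcal X}^*\) is integer-valued and \(\Delta\) is an integer, \(H({\mathcal X}^*,{\mathcal Y})\) is constant on each open interval between consecutive integers, so checking integers and half-integers covers every pure strategy of Player 2. All function names are hypothetical.

```python
from fractions import Fraction as F

def h(d, delta):
    """Player 1's payoff when X - Y = d and A - B = delta (two battlefields)."""
    if 0 < d < delta:
        return F(1)        # two wins
    if d == 0 or d == delta:
        return F(1, 2)     # one win and one draw
    return F(0)            # one win and one loss

def H(xdist, ydist, delta):
    """Expected payoff of finitely supported mixed strategies {point: probability}."""
    return sum(px * py * h(x - y, delta)
               for x, px in xdist.items() for y, py in ydist.items())

def delta_one_profile(B):
    """Candidate profile for Delta = 1 (A = B + 1), as read off from the case analysis."""
    A = B + 1
    if B == 1:                                      # A = 2, B = 1
        return A, {1: F(1)}, {0: F(1, 2), 1: F(1, 2)}
    xs = {i: F(1, A + 1) for i in range(A + 1)}     # uniform on [A]
    ys = {0: F(2, A + 1), B: F(2, A + 1)}
    for i in range(1, B - 1):                       # mass 1/(A+1) on i + 0.5, 1 <= i <= B-2
        ys[F(2 * i + 1, 2)] = F(1, A + 1)
    return A, xs, ys

def bounds(B):
    """Return (min over Y of H(X*, Y), max over X of H(X, Y*)); equality certifies an NE."""
    A, xs, ys = delta_one_profile(B)
    grid = [F(j, 2) for j in range(2 * B + 1)]      # integers and half-integers in [0, B]
    lower = min(H(xs, {y: F(1)}, 1) for y in grid)
    upper = max(H({x: F(1)}, ys, 1) for x in range(A + 1))
    return lower, upper
```

For instance, `bounds(4)` returns `(Fraction(1, 6), Fraction(1, 6))`, i.e., the value \(1/(A+1)\) with \(A=5\), while `bounds(1)` returns the value 1/2 of the \(A=2\), \(B=1\) case.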

(ii) When \(\Delta \ge 2\) and \(0\le r \le \Delta -2\), the strategy \({\mathcal {X}}^*\) in Corollary 1 and the strategy \({\mathcal {Y}}^*\) in Lemma 4 constitute an NE.

(iii) When \(\Delta \ge 2\), \(r=\Delta -1\) and \(k\ge 2\), we show that the following \({\mathcal {X}}^*\) and \({\mathcal {Y}}^*\) constitute an NE:

Recall the formula in Lemma 1:

On the one hand, for each pure strategy \({\mathcal {Y}}\in [0,B]\) of Player 2, we distinguish seven cases.

(1) When \({\mathcal {Y}}=0\), the event \({\mathcal {X}}^*={\mathcal {Y}}\) has probability zero, and hence

$$\begin{aligned}H({\mathcal {X}}^*,{\mathcal {Y}})= & {} \, P({\mathcal {X}}^*=\Delta -1)+0.5P({\mathcal {X}}^*=\Delta )\\= & {} \, \frac{3}{2(2k+1)}+\frac{1}{2(2k+1)}=\frac{1}{k+1/2}. \end{aligned}$$

Similarly, when \({\mathcal {Y}}=k\Delta -1\), the event \({\mathcal {X}}^*={\mathcal {Y}}+\Delta\) has probability zero, and hence

$$\begin{aligned} H({\mathcal {X}}^*,{\mathcal {Y}})= & {} \, P({\mathcal {X}}^*=k\Delta )+0.5P({\mathcal {X}}^*=k\Delta -1)\\= & {} \, \frac{3}{2(2k+1)}+\frac{1}{2(2k+1)}=\frac{1}{k+1/2}.\end{aligned}$$

(2) When \(0< {\mathcal {Y}}< \Delta -1\),

$$\begin{aligned}H({\mathcal {X}}^*,{\mathcal {Y}})= & {} \, P({\mathcal {X}}^*=\Delta -1)+P({\mathcal {X}}^*=\Delta )\\= & {} \, \frac{3}{2(2k+1)}+\frac{1}{2k+1}\\> & {} \frac{1}{k+1/2}. \end{aligned}$$

Similarly, when \((k-1)\Delta<{\mathcal {Y}}<k\Delta -1\),

$$\begin{aligned} H({\mathcal {X}}^*,{\mathcal {Y}})= & {} \, P({\mathcal {X}}^*=k\Delta )+P({\mathcal {X}}^*=k\Delta -1)\\= & {} \, \frac{5}{2(2k+1)}>\frac{1}{k+1/2}.\end{aligned}$$

(3) When \({\mathcal {Y}}=\Delta -1\),

$$\begin{aligned}H({\mathcal {X}}^*,{\mathcal {Y}})= & {} \, P({\mathcal {X}}^*=\Delta )+0.5[P({\mathcal {X}}^*=\Delta -1)+P({\mathcal {X}}^*=2\Delta -1)]\\= & {} \, \frac{1}{2k+1}+\frac{1}{2}\left[ \frac{3}{2(2k+1)}+\frac{1}{2k+1}\right] \\= & {} \, \frac{9}{4(2k+1)}>\frac{1}{k+1/2}.\end{aligned}$$

Similarly, when \({\mathcal {Y}}=(k-1)\Delta\),

$$\begin{aligned} H({\mathcal {X}}^*,{\mathcal {Y}})= & {} \, P({\mathcal {X}}^*=k\Delta -1)+0.5[P({\mathcal {X}}^*=(k-1)\Delta )+P({\mathcal {X}}^*=k\Delta )]\\= & {} \, \frac{9}{4(2k+1)}>\frac{1}{k+1/2}.\end{aligned}$$

(4) When \(i\Delta -1<{\mathcal {Y}}<i\Delta\) for some \(1\le i\le k-1\),

$$\begin{aligned}H({\mathcal {X}}^*,{\mathcal {Y}})= & {} \, P({\mathcal {X}}^*=i\Delta )+P({\mathcal {X}}^*=(i+1)\Delta -1)\\= & {} \, \frac{1}{2k+1}+\frac{1}{2k+1}\\= & {} \, \frac{1}{k+1/2}.\end{aligned}$$

(5) When \({\mathcal {Y}}=i\Delta\) for some \(1\le i<k-1\),

$$\begin{aligned}H({\mathcal {X}}^*,{\mathcal {Y}})= & {} \, P({\mathcal {X}}^*=(i+1)\Delta -1)+0.5[P({\mathcal {X}}^*=i\Delta )+P({\mathcal {X}}^*=(i+1)\Delta )]\\= & {} \, \frac{1}{2k+1}+\frac{1}{2}\left[ \frac{1}{2k+1}+\frac{1}{2k+1}\right] \\= & {} \, \frac{1}{k+1/2}.\end{aligned}$$

(6) When \(i\Delta<{\mathcal {Y}}<(i+1)\Delta -1\) for some \(1\le i<k-1\),

$$\begin{aligned}H({\mathcal {X}}^*,{\mathcal {Y}})= & {} \, P({\mathcal {X}}^*=(i+1)\Delta -1)+P({\mathcal {X}}^*=(i+1)\Delta )\\= & {} \, \frac{1}{2k+1}+\frac{1}{2k+1}=\frac{1}{k+1/2}. \end{aligned}$$

(7) When \({\mathcal {Y}}=i\Delta -1\) for some \(1<i\le k-1\),

$$\begin{aligned}H({\mathcal {X}}^*,{\mathcal {Y}})= & {} \, P({\mathcal {X}}^*=i\Delta )+0.5[P({\mathcal {X}}^*=i\Delta -1)+P({\mathcal {X}}^*=(i+1)\Delta -1)]\\= & {} \, \frac{1}{2k+1}+\frac{1}{2}\left[ \frac{1}{2k+1}+\frac{1}{2k+1}\right] =\frac{1}{k+1/2}.\end{aligned}$$

On the other hand, for each pure strategy \({\mathcal {X}}\in [A]\) of Player 1, we distinguish eight cases.

(1) When \({\mathcal {X}}=0\),

$$\begin{aligned}H({\mathcal {X}},{\mathcal {Y}}^*)= & {} \, 0.5P({\mathcal {Y}}^*=0)=\frac{1}{2k+1}<\frac{1}{k+1/2}.\end{aligned}$$

Similarly, when \({\mathcal {X}}=(k+1)\Delta -1\),

$$\begin{aligned} H({\mathcal {X}},{\mathcal {Y}}^*)= & {} \, 0.5P({\mathcal {Y}}^*=k\Delta -1)=\frac{1}{2k+1}<\frac{1}{k+1/2}.\end{aligned}$$

(2) When \(0<{\mathcal {X}}<\Delta\),

$$\begin{aligned}H({\mathcal {X}},{\mathcal {Y}}^*)= & {} \, P({\mathcal {Y}}^*=0)=1/(k+1/2).\end{aligned}$$

Similarly, when \(k\Delta \le {\mathcal {X}}<(k+1)\Delta -1\),

$$\begin{aligned} H({\mathcal {X}},{\mathcal {Y}}^*)= & {} \, P({\mathcal {Y}}^*=k\Delta -1)=\frac{1}{k+1/2}.\end{aligned}$$

(3) When \({\mathcal {X}}=\Delta\),

$$\begin{aligned}H({\mathcal {X}},{\mathcal {Y}}^*)= & {} \, P({\mathcal {Y}}^*=\Delta -0.5)+0.5P({\mathcal {Y}}^*=0)\\= & {} \, \frac{1}{2k+1}+\frac{1}{2k+1}\\= & {} \, \frac{1}{k+1/2}.\end{aligned}$$

Similarly, when \({\mathcal {X}}=k\Delta -1\),

$$\begin{aligned}H({\mathcal {X}},{\mathcal {Y}}^*)= & {} \, P({\mathcal {Y}}^*=(k-1)\Delta -0.5)+0.5P({\mathcal {Y}}^*=k\Delta -1)\\= & {} \, \frac{1}{k+1/2}.\end{aligned}$$

(4) When \({\mathcal {X}}=\Delta +1\),

$$\begin{aligned}H({\mathcal {X}},{\mathcal {Y}}^*)= & {} \, P({\mathcal {Y}}^*=\Delta -0.5)+0.5P({\mathcal {Y}}^*=\Delta +1)\\= & {} \, \frac{1}{2k+1}+\frac{1}{2(2k+1)}\\< & {} \frac{1}{k+1/2}.\end{aligned}$$

(5) When \(i\Delta +1<{\mathcal {X}}\le (i+1)\Delta -1\) for some \(1\le i <k-1\),

$$\begin{aligned}H({\mathcal {X}},{\mathcal {Y}}^*)= & {} \, P({\mathcal {Y}}^*=i\Delta -0.5)+P({\mathcal {Y}}^*=i\Delta +1)\\= & {} \, \frac{1}{2k+1}+\frac{1}{2k+1}=\frac{1}{k+1/2}. \end{aligned}$$

(6) When \({\mathcal {X}}=i\Delta\) for some \(1<i\le k-1\),

$$\begin{aligned}H({\mathcal {X}},{\mathcal {Y}}^*)= & {} \, P({\mathcal {Y}}^*=(i-1)\Delta +1)+P({\mathcal {Y}}^*=i\Delta -0.5)\\= & {} \, \frac{1}{2k+1}+\frac{1}{2k+1}=\frac{1}{k+1/2}.\end{aligned}$$

(7) When \({\mathcal {X}}=i\Delta +1\) for some \(1<i\le k-1\),

$$\begin{aligned}H({\mathcal {X}},{\mathcal {Y}}^*)= & {} \, P({\mathcal {Y}}^*=i\Delta -0.5)+0.5[P({\mathcal {Y}}^*=(i-1)\Delta +1)+P({\mathcal {Y}}^*=i\Delta +1)]\\= & {} \, \frac{1}{2k+1}+\frac{1}{2}\left[ \frac{1}{2k+1}+\frac{1}{2k+1}\right] =\frac{1}{k+1/2}.\end{aligned}$$

(8) When \((k-1)\Delta +1<{\mathcal {X}}<k\Delta -1\),

$$\begin{aligned}H({\mathcal {X}},{\mathcal {Y}}^*)=P({\mathcal {Y}}^*=(k-1)\Delta -0.5)=\frac{1}{2k+1}<\frac{1}{k+1/2}.\end{aligned}$$

In summary, \(H({\mathcal {X}}^*,{\mathcal {Y}})\ge \frac{1}{k+1/2}\), for all \({\mathcal {Y}}\in [0, B]\) and \(H({\mathcal {X}},{\mathcal {Y}}^*)\le \frac{1}{k+1/2}\) for all \({\mathcal {X}}\in [A]\), implying that \(({\mathcal {X}}^*,{\mathcal {Y}}^*)\) is indeed an NE, bringing a value of \(\frac{1}{k+1/2}\).
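The fractions in the case analysis of part (iii) can be re-checked mechanically for many values of k at once. The sketch below is an illustration under stated assumptions, not the authors' code: it encodes only the probabilities that appear in the displayed computations, namely \(P({\mathcal X}^*=\Delta-1)=P({\mathcal X}^*=k\Delta)=3/(2(2k+1))\), \(P({\mathcal Y}^*=0)=P({\mathcal Y}^*=k\Delta-1)=1/(k+1/2)\) (as in Player 1's case (2)), and \(1/(2k+1)\) on every other support point. The support sizes (2k points for \({\mathcal X}^*\), \(2k-1\) for \({\mathcal Y}^*\)) are our reading of the case analysis, not a quotation of the omitted display, and the function name is hypothetical.

```python
from fractions import Fraction as F

def case_iii_checks(k):
    """Re-check the arithmetic of Theorem 2(iii) for a given k >= 2 with exact fractions."""
    v = 1 / (k + F(1, 2))            # claimed value 1/(k+1/2) = 2/(2k+1)
    p_end = F(3, 2 * (2 * k + 1))    # P(X* = Delta-1) = P(X* = k*Delta)
    p_mid = F(1, 2 * k + 1)          # each of the other 2k-2 support points of X* (assumed)
    q_end = F(2, 2 * k + 1)          # P(Y* = 0) = P(Y* = k*Delta-1)
    q_mid = F(1, 2 * k + 1)          # each of the other 2k-3 support points of Y* (assumed)
    nine = F(9, 4 * (2 * k + 1))
    return {
        "X* sums to 1": 2 * p_end + (2 * k - 2) * p_mid == 1,
        "Y* sums to 1": 2 * q_end + (2 * k - 3) * q_mid == 1,
        "P2 case (1)": p_end + p_mid / 2 == v,
        "P2 case (2)": p_end + p_mid > v,
        "P2 case (3)": p_mid + (p_end + p_mid) / 2 == nine and nine > v,
        "P2 cases (4)-(7)": 2 * p_mid == v,
        "P1 case (1)": q_end / 2 == F(1, 2 * k + 1) and q_end / 2 < v,
        "P1 case (2)": q_end == v,
        "P1 cases (3), (5)-(7)": q_mid + q_end / 2 == v and 2 * q_mid == v,
        "P1 case (4)": q_mid + q_mid / 2 < v,
        "P1 case (8)": q_mid < v,
    }
```

Returning a dictionary rather than a single boolean makes it easy to see which case fails if any of the inputs is changed.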

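When \(\Delta \ge 2\), \(r=\Delta -1\) and \(k=1\), namely \(A = 2\Delta -1\) (or, equivalently, \(A = 2B+1\)), we show that the unique NE is

$$\begin{aligned} {\mathcal {X}}^*\sim 0.5I_B+0.5I_{B+1},\qquad {\mathcal {Y}}^*\sim 0.5I_0+0.5I_B. \end{aligned}$$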

For each pure strategy \({\mathcal {Y}}\in [0,B]\) of Player 2, we distinguish two cases.

(1) When \({\mathcal {Y}}\in \{0,B\}\), Player 1 gets one win and one draw with probability 1/2, and two wins with probability 1/2; hence \(H({\mathcal {X}}^*,{\mathcal {Y}})=3/4\).

(2) When \(0<{\mathcal {Y}}<B\), Player 1 wins both battlefields, i.e., \(H({\mathcal {X}}^*,{\mathcal {Y}})=1\).

For each pure strategy \({\mathcal {X}}\in [A]\) of Player 1, we distinguish two cases.

(1) When \({\mathcal {X}}\in \{B,B+1\}\), Player 1 gets one win and one draw with probability 1/2, and two wins with probability 1/2; hence \(H({\mathcal {X}},{\mathcal {Y}}^*)=3/4\).

(2) When \({\mathcal {X}}\in [A]\setminus \{B,B+1\}\), Player 1 gets one win and one loss against \({\mathcal {Y}}=B\) if \({\mathcal {X}}<B\), and against \({\mathcal {Y}}=0\) if \({\mathcal {X}}>B+1\); since the other pure strategy of Player 2 yields at most 1, we have \(H({\mathcal {X}},{\mathcal {Y}}^*)\le 1/2\).

In summary, \(H({\mathcal {X}}^*,{\mathcal {Y}})\ge 3/4\) for all \({\mathcal {Y}}\in [0, B]\) and \(H({\mathcal {X}},{\mathcal {Y}}^*)\le 3/4\) for all \({\mathcal {X}}\in [A]\), implying that \(({\mathcal {X}}^*,{\mathcal {Y}}^*)\) is indeed an NE, bringing a value of 3/4. \(\square\)
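The profile \((0.5I_B+0.5I_{B+1},\ 0.5I_0+0.5I_B)\) for \(A=2B+1\), which is also the example in the note on Hart's Proposition 10, can be verified in the same way. The sketch is our own illustration; it assumes the per-pair payoff read off from the proofs (with \(d={\mathcal X}-{\mathcal Y}\): 1 if \(0<d<\Delta\), 1/2 if \(d\in\{0,\Delta\}\), 0 otherwise), and again checks only integers and half-integers for Player 2, which suffices because Player 1's strategy is integer-valued. Function names are hypothetical.

```python
from fractions import Fraction as F

def h(d, delta):
    """Player 1's payoff when X - Y = d and A - B = delta."""
    if 0 < d < delta:
        return F(1)        # two wins
    if d == 0 or d == delta:
        return F(1, 2)     # one win and one draw
    return F(0)            # one win and one loss

def H(xdist, ydist, delta):
    """Expected payoff of finitely supported mixed strategies {point: probability}."""
    return sum(px * py * h(x - y, delta)
               for x, px in xdist.items() for y, py in ydist.items())

def bounds_2b_plus_1(B):
    """(min over Y of H(X*, Y), max over X of H(X, Y*)) for A = 2B + 1, i.e. Delta = B + 1."""
    A, delta = 2 * B + 1, B + 1
    xs = {B: F(1, 2), B + 1: F(1, 2)}
    ys = {0: F(1, 2), B: F(1, 2)}
    grid = [F(j, 2) for j in range(2 * B + 1)]                  # integers and half-integers
    lower = min(H(xs, {y: F(1)}, delta) for y in grid)          # what Player 1 guarantees
    upper = max(H({x: F(1)}, ys, delta) for x in range(A + 1))  # best reply against Y*
    return lower, upper
```

Both bounds equal 3/4 for every B tried, which exceeds the bound 7/12 mentioned in the note.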

A.2 Proof of Theorem 3

Proof

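(i) The payoff matrix \(M_{(A+1)\times (B+1)}\) is such that

$$M_{ij}=\begin{cases} 1/2, & i-j\in \{0,1\},\\ 0, & \text{otherwise},\end{cases}\qquad i\in [A],\ j\in [B],$$

where row i corresponds to Player 1's pure strategy \({\mathcal {X}}=i\) and column j to Player 2's pure strategy \({\mathcal {Y}}=j\).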

Suppose now \(A=2n\) and \(B=2n-1\) for some positive integer n. Consider the following strategy profile:

It can be checked that

Therefore, \(X^*MY'=1/A\) for every strategy Y of Player 2, and \(XMY^{*'}\le 1/A\) for every strategy X of Player 1. This shows that \((X^*,Y^*)\) is indeed an NE for the case \(A=2n\) and \(B=2n-1\), bringing a value of \(1/A=1/k\).

The case \(A=2n+1\) and \(B=2n\) for some nonnegative integer n can be handled in a similar way. In fact, it can be checked that the following strategy profile is an NE:

bringing a value of \(1/(A+1)=1/(k+1)\).
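The values in part (i) can also be confirmed numerically. Since the equilibrium displays for this part are not reproduced here, the strategy pairs below are our own illustrative choices: for \(A=2n\), Player 1 mixes uniformly over her odd pure strategies and Player 2 over all of \([B]\); for \(A=2n+1\), Player 1 mixes uniformly over \([A]\) and Player 2 over her even pure strategies (in line with Lemma 6). The matrix assumes the \(\Delta=1\) rule \(M_{ij}=1/2\) for \(i-j\in\{0,1\}\) and 0 otherwise. A pair is an NE with value v exactly when the smallest entry of \(XM\) and the largest entry of \(MY'\) both equal v. All names are hypothetical.

```python
from fractions import Fraction as F

def payoff_matrix(A, B):
    """Delta = 1, both players discrete: M[x][y] = 1/2 if x - y is 0 or 1, else 0."""
    return [[F(1, 2) if x - y in (0, 1) else F(0) for y in range(B + 1)]
            for x in range(A + 1)]

def extreme_values(M, X, Y):
    """(smallest entry of XM, largest entry of MY'); both equal v iff (X, Y) is an NE with value v."""
    cols = [sum(X[i] * M[i][j] for i in range(len(M))) for j in range(len(M[0]))]
    rows = [sum(M[i][j] * Y[j] for j in range(len(M[0]))) for i in range(len(M))]
    return min(cols), max(rows)

def candidate_pair(A):
    """Illustrative equilibrium candidates (our choice, not the omitted displays)."""
    B = A - 1
    if A % 2 == 0:   # A = 2n: X uniform on odd pure strategies, Y uniform on [B]
        X = [F(1, A // 2) if x % 2 == 1 else F(0) for x in range(A + 1)]
        Y = [F(1, B + 1)] * (B + 1)
    else:            # A = 2n+1: X uniform on [A], Y uniform on even pure strategies
        h = (A + 1) // 2
        X = [F(1, A + 1)] * (A + 1)
        Y = [F(1, h) if y % 2 == 0 else F(0) for y in range(B + 1)]
    return X, Y

def claimed_value(A):
    """Value stated in part (i): 1/A for even A, 1/(A+1) for odd A."""
    return F(1, A) if A % 2 == 0 else F(1, A + 1)
```

Using exact `Fraction` arithmetic avoids any floating-point tolerance in the equality test.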

(ii) When \(\Delta \ge 2\) and \(0\le r\le \Delta -2\), this theorem follows from Corollary 1 and Corollary 2.

(iii) When \(\Delta \ge 2\) and \(r=\Delta -1\), we can show that the equilibrium strategy profile, in terms of distributions, is

In terms of vectors, the equilibrium is

If Player 2 is also restricted to discrete strategies, then the payoff matrix \(M_{(A+1)\times (B+1)}\) is

It can be checked that

where e is a row vector of all ones of suitable dimension. This proves that the above strategy profile \((X^*,Y^*)\) is indeed an NE. \(\square\)

Appendix B: Proofs in Sect. 5

We prove Theorems 4 and 5 in two steps. First, by removing both players’ redundant strategies, we obtain a simplified game. Then we completely characterize the NEs of the simplified game and give the conditions ensuring that neither player can profit from deviating to a redundant strategy.

B.1 Proof of Theorem 4

Before proving Theorem 4, we provide a useful lemma.

Lemma 6

Suppose that \(\Delta =1\) and denote \(h=\lceil A/2\rceil\). By removing the redundant strategies, the payoff matrix can be simplified to the following form

where \(M_i=[0.5,0.5]'\) when A is odd, and \(M_i=[0.5,0.5]\) when A is even.

Proof

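The payoff matrix \(M_{(A+1)\times (B+1)}\) is such that

$$M_{ij}=\begin{cases} 1/2, & i-j\in \{0,1\},\\ 0, & \text{otherwise},\end{cases}\qquad i\in [A],\ j\in [B].$$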

We only consider the case where A is odd; the case where A is even can be proved similarly. According to Theorem 3, the equilibrium value is \(\frac{1}{A+1}=\frac{1}{2h}\). Let \({\mathcal {Y}}\) be an equilibrium strategy of Player 2, and let \(\widehat{w}_i=H(i,{\mathcal {Y}})\) denote the payoff of Player 1 when she uses the pure strategy \({\mathcal {X}}=i\) (and Player 2 uses \({\mathcal {Y}}\)). Then

For each \(t\in [h-2]\), adding up the inequalities (5) over all \(i=1,3,\ldots , 2t+1\) and all \(i=2t+2,2t+4,\ldots ,B\) and multiplying by 2 on both sides, we have

This implies that \(y_{2t+1}=0\) for all \(t\in [h-2]\), because \(\sum _{i=0}^{B}y_i=1\). Therefore, the payoff matrix can be simplified as \(M^*\) by removing all the odd columns of M, as desired. \(\square\)

Proof of Theorem 4

Suppose A is odd. By Lemma 6, each even pure strategy of Player 2 (i.e., \({\mathcal {Y}}=2j\), with \(j\in [h-1]\)) constitutes an equivalence class by itself. Hence, Player 2’s equilibrium strategy is unique.

As for Player 1, all the equivalence classes are: \(\{2i,2i+1\}\), \(i\in [h-1]\). Therefore \(x_{2i}+x_{2i+1}=1/h\) for all \(i\in [h-1]\).

Furthermore,

Then

implying that \(x_{2i}\le x_{2i+2}, i\in [h-2]\), i.e., the two conditions in the theorem are necessary.

Regarding sufficiency, suppose that Player 1’s strategy \({\mathcal {X}}\) satisfies the two conditions. Then

Combined with the monotonicity condition \(x_{2i}\le x_{2i+2}, i\in [h-2]\), this shows that the inequality system \(XM\ge \frac{1}{2h} e\) always holds. Hence \({\mathcal {X}}\) is an equilibrium strategy for Player 1. This completes the equilibrium characterization for both players. The case where A is even can be analyzed analogously. \(\square\)
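For A odd, the two conditions (\(x_{2i}+x_{2i+1}=1/h\) for \(i\in[h-1]\), and \(x_{2i}\le x_{2i+2}\) for \(i\in[h-2]\)) can be tested directly against the defining inequality \(XM\ge \frac{1}{2h}e\). The sketch below draws random strategies satisfying the first condition and checks that optimality holds exactly when the monotonicity condition does. It assumes the \(\Delta=1\) payoff rule \(M_{ij}=1/2\) for \(i-j\in\{0,1\}\); the helper names and the sampling scheme are our own.

```python
import random
from fractions import Fraction as F

def payoff_matrix(A, B):
    """Delta = 1, both players discrete: M[x][y] = 1/2 if x - y is 0 or 1, else 0."""
    return [[F(1, 2) if x - y in (0, 1) else F(0) for y in range(B + 1)]
            for x in range(A + 1)]

def random_block_strategy(h, rng):
    """Random x on [2h-1] with x_{2i} + x_{2i+1} = 1/h for every i (first condition)."""
    x = []
    for _ in range(h):
        even = F(rng.randint(0, 12), 12 * h)   # x_{2i} somewhere in [0, 1/h]
        x += [even, F(1, h) - even]
    return x

def is_optimal(x, h):
    """XM >= e/(2h) componentwise, with A = 2h - 1 and B = A - 1."""
    A = 2 * h - 1
    M = payoff_matrix(A, A - 1)
    return all(sum(x[i] * M[i][j] for i in range(A + 1)) >= F(1, 2 * h)
               for j in range(A))

def monotone(x, h):
    """Second condition: x_0 <= x_2 <= ... <= x_{2h-2}."""
    return all(x[2 * i] <= x[2 * i + 2] for i in range(h - 1))

rng = random.Random(0)
samples = [(h, random_block_strategy(h, rng)) for h in range(2, 7) for _ in range(200)]
```

Columns \(j=2m\) always give exactly \(1/(2h)\), while columns \(j=2m+1\) give \(\frac{1}{2h}+\frac12(x_{2m+2}-x_{2m})\), which is why the monotonicity condition is both necessary and sufficient.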

B.2 Proof of Theorem 5

Lemma 7

Suppose that \(\Delta \ge 3\) and \(1\le r\le \Delta -3\). Then the payoff matrix M can be simplified as

where each submatrix \(M_i\), \(1\le i\le k\), is of size \((\Delta -r-1)\times (r+1)\) and has all-one entries.

Proof

Recall that the equilibrium value is 1/k. Let \({\mathcal {X}}\) be an equilibrium strategy of Player 1. Then we must have

For each \(t\in [r]\), adding up all the k inequalities over \(i=t,\Delta +t,\ldots , (k-1)\Delta +t\) in (6) gives

Since \(\sum _{i=0}^{A}x_i=1\), we must have

Furthermore, for each \(s\in [k-2]\) and each \(t\in [r]{\setminus }\{0\}\), adding up all the k inequalities in (6) gives

Since \(\sum _{i=0}^{A}x_i=1\), we must have

Combining (7) and (8), we know that \(\{{\mathcal {X}}=s\Delta +t, \forall s\in [k],t\in [r]\}\) are all the redundant strategies of Player 1. Similarly, for Player 2, \(\{{\mathcal {Y}}={s\Delta +t}, \forall r+1\le t\le \Delta -1,s\in [k-2]\}\) are all the redundant strategies.

Combining the above two facts proves the lemma. \(\square\)

Proof of Theorem 5(i)

For all \(s\in [k-1]\), denote \(T_s^1=\{i: s\Delta +r+1\le i\le (s+1)\Delta -1\}\). Due to Lemma 7, these sets are all the equivalence classes of Player 1’s strategies, which are also equitable. Therefore condition (1) must be satisfied.

Furthermore, to prevent her opponent from adopting the redundant strategies, the following restrictions must be imposed on Player 1’s strategy:

Then

Consequently

implying condition (2). The discussion for Player 2 is similar.

On the other hand, suppose that Player 1’s strategy X satisfies conditions (1) and (2). Denote by \((XM)_i\) the i-th component of XM. Then, due to condition (1), \((XM)_i=1/k\) for all \(i=s\Delta +t\) with \(s\in [k-1], t\in [r]\).

As for the other components, combining the inequalities (9) with condition (2), we know that \((XM)_i\ge 1/k\) for all \(i=s\Delta +t\) with \(s\in [k-2]\) and \(r+1\le t\le \Delta -1.\) In summary, the inequality system \(XM\ge \frac{1}{k}e\) holds. Therefore X is an equilibrium strategy of Player 1. The analysis for Player 2 is similar. \(\square\)
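Theorem 5(i) can be probed in the same spirit. The sketch below is our own test harness, not the authors' code. It assumes \(A=k\Delta+r\) and \(B=A-\Delta\) (consistent with the indices in Lemma 7 and with the example \(A=11\), \(B=6\) in Appendix C), takes conditions (1) and (2) in the form listed for \({\mathcal F}^2_x\) in Appendix C, draws random strategies on the classes \(T_s^1\) that satisfy condition (1), and checks that \(XM\ge\frac1k e\) holds exactly when condition (2) does. The payoff rule is the one read off from the proofs (with \(d={\mathcal X}-{\mathcal Y}\): 1 if \(0<d<\Delta\), 1/2 if \(d\in\{0,\Delta\}\), 0 otherwise); all names are hypothetical.

```python
import random
from fractions import Fraction as F

def h(d, delta):
    """Player 1's payoff when X - Y = d and A - B = delta."""
    if 0 < d < delta:
        return F(1)
    if d == 0 or d == delta:
        return F(1, 2)
    return F(0)

def column_payoffs(x, B, delta):
    """(XM)_j for every pure strategy j in [B] of Player 2."""
    return [sum(p * h(i - j, delta) for i, p in x.items()) for j in range(B + 1)]

def sample_condition1(delta, r, k, rng):
    """Random x on T_s = {s*delta+r+1, ..., (s+1)*delta-1}, s in [k-1], each class summing to 1/k."""
    m = delta - r - 1
    x = {}
    for s in range(k):
        cuts = sorted(rng.randint(0, 6) for _ in range(m - 1))
        parts = [b - a for a, b in zip([0] + cuts, cuts + [6])]
        for t, p in enumerate(parts, start=1):
            x[s * delta + r + t] = F(p, 6 * k)
    return x

def condition2(x, delta, r, k):
    """c_s(t) = sum_{z=r+1}^{t-1} x_{s*delta+z} + x_{s*delta+t}/2 is non-decreasing in s."""
    def c(s, t):
        return (sum(x[s * delta + z] for z in range(r + 1, t))
                + x[s * delta + t] / 2)
    return all(c(s, t) <= c(s + 1, t)
               for s in range(k - 1) for t in range(r + 1, delta))

def agrees(delta, r, k, seed):
    """True when 'XM >= e/k' and condition (2) give the same verdict for one random sample."""
    rng = random.Random(seed)
    x = sample_condition1(delta, r, k, rng)
    B = (k - 1) * delta + r                     # assumes A = k*delta + r and B = A - delta
    optimal = min(column_payoffs(x, B, delta)) >= F(1, k)
    return optimal == condition2(x, delta, r, k)
```

The case t = r+1 of `condition2` reproduces the requirement that \((x_{s\Delta+r+1})_s\) be non-decreasing.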

Lemma 8

If \(\Delta =2\) or \(r=0\), then the payoff matrix M can be simplified as

where each \(M_i\) is a \((\Delta -1)\)-dimensional column vector with all-one elements for all \(1\le i\le k\).

Proof

Using the same method as in the proof of Lemma 7, we obtain

which proves the lemma. \(\square\)

Proof of Theorem 5(ii)

Suppose \(r=0\). For all \(s\in [k-1]\), denote \(T_s^2=\{s\Delta \}\). Due to Lemma 8, these sets are all the equivalence classes for Player 2, which are also equitable. Therefore Player 2’s equilibrium strategy is unique.

It follows that the strategies in \(T_s^2\) are not redundant for Player 2. As a consequence, condition (1) must be satisfied. Furthermore, to prevent Player 2 from adopting her redundant strategies, Player 1’s strategy must also satisfy condition (2).

As for sufficiency, suppose now \({\mathcal {X}}\) and \({\mathcal {Y}}\) satisfy conditions (1) and (2). Using the same method as in the proofs of Theorem 4 and Theorem 5 (i), we have \(XM\ge \frac{1}{k}e\) and \(MY'\le \frac{1}{k}e'\). As a consequence, \({\mathcal {X}}\) is optimal for Player 1 and \({\mathcal {Y}}\) is optimal for Player 2. Therefore, the theorem is valid. \(\square\)

Lemma 9

If \(r=\Delta -2\), then the payoff matrix M can be simplified as

where \(M_i\) is a \((\Delta -1)\)-dimensional row vector with all-one elements.

Proof

Using the same method as in the proof of Lemma 7, we obtain

Note that Player 2 does not have any redundant strategies in this case. This completes the proof. \(\square\)

Proof of Theorem 5(iii)

The proof is similar to that of Theorem 5(ii) and therefore is omitted. \(\square\)

B.3 Proof of Theorem 6

As in the proofs of Theorems 4 and 5, we first obtain a simplified game, as stated in Lemma 10 below. Next, we prove that in every equilibrium of this simplified game, each player places positive probability on every one of her pure strategies. Finally, uniqueness of the equilibrium follows from elementary linear algebra.

Lemma 10

If \(\Delta \ge 2\) and \(r=\Delta -1\), then the payoff matrix can be simplified as

which is a tridiagonal matrix that is positive definite and hence invertible.

Proof

Recall from Theorem 3 that the following profile is a Nash equilibrium:

Denote

It suffices to show that the supports of all equilibrium strategies of Player 1 and Player 2 are subsets of \(R_1\) and \(R_2\), respectively. Suppose, to the contrary, that there is an equilibrium strategy \({\mathcal {Y}}\) of Player 2 whose support contains some \(i\notin R_2\). Then \(({\mathcal {X}}^*,{\mathcal {Y}})\) is also a Nash equilibrium. However, as shown in the proof of Theorem 2, \(H({\mathcal {X}}^*,i)\) for \(i\notin R_2\) is strictly greater than the equilibrium value, a contradiction. It can be proved in a similar way that no equilibrium strategy \({\mathcal {X}}\) of Player 1 has a support containing some \(i\notin R_1\).

Since \(M^*\) is a tridiagonal matrix, Laplace expansion yields the following recursive formula:

where the subscript n (\(n\ge 2\)) indicates the dimension of the matrix. Using \(\det (M_1)=1\) and \(\det (M_2)=3/4\), we obtain \(\det (M_n)=\frac{n+1}{2^n}>0\) for all n. In particular, since \(R_1\) and \(R_2\) both have (even) cardinality 2k, \(\det (M^*)=\det (M_{2k})>0\), so \(M^*\) is invertible. This finishes the proof. \(\square\)
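The recursion from the Laplace expansion is not reproduced in this version. For a tridiagonal matrix with diagonal entries \(a_i\) and off-diagonal entries \(b_i\) (above) and \(c_i\) (below), the leading principal minors satisfy the standard three-term recurrence \(D_n=a_nD_{n-1}-b_{n-1}c_{n-1}D_{n-2}\) with \(D_0=1\). The sketch below evaluates it exactly; as a hypothetical stand-in for \(M^*\) it uses unit diagonal and off-diagonal entries 1/2, which reproduces \(\det(M_1)=1\), \(\det(M_2)=3/4\) and the closed form \((n+1)/2^n\). It does not claim that these are the actual entries of \(M^*\).

```python
from fractions import Fraction as F

def leading_minors(diag, upper, lower):
    """D_1..D_n of a tridiagonal matrix via D_n = a_n D_{n-1} - b_{n-1} c_{n-1} D_{n-2}."""
    dets = []
    prev, cur = F(0), F(1)          # D_{-1} (multiplied by zero at the first step), D_0 = 1
    for i, a in enumerate(diag):
        bc = upper[i - 1] * lower[i - 1] if i > 0 else F(0)
        prev, cur = cur, a * cur - bc * prev
        dets.append(cur)
    return dets

# Hypothetical stand-in for M*: ones on the diagonal, 1/2 on both off-diagonals.
n = 12
minors = leading_minors([F(1)] * n, [F(1, 2)] * (n - 1), [F(1, 2)] * (n - 1))
```

With these entries the recurrence reduces to \(D_n=D_{n-1}-D_{n-2}/4\), whose solution with \(D_0=D_1=1\) is \((n+1)/2^n\).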

Proof of Theorem 6

We keep the notation of the proof of Lemma 10. By Lemma 10, we may assume that any equilibrium strategy of Player 1 (resp. Player 2) is a distribution on \(R_1\) (resp. \(R_2\)), and hence is a 2k-dimensional vector. We further show that \(R_2\) is precisely the support of every equilibrium strategy of Player 2, i.e., all pure strategies in \(R_2\) are used in every equilibrium strategy of Player 2.

Suppose, to the contrary, that there exists an equilibrium strategy Y of Player 2 that does not use some pure strategy \(j\in R_2\). Let \(Y_0\) be the vector obtained by removing the j-th component of Y, and \(M^*_0\) the matrix obtained by removing the j-th column of \(M^*\). Thus \(Y_0\) is a \((2k-1)\)-dimensional row vector and \(M_0^*\) is of size \(2k\times (2k-1)\).

Since Y is an equilibrium strategy of Player 2, we must have

where \(\alpha\) is the equilibrium value and e is the all-one row vector. Since \(X^*\) is an equilibrium strategy of Player 1, the strategy profile \((X^*, Y_0)\) must be an NE. Since it can be checked that \(X^*\beta =\alpha\), no entry of \(M_0^*Y_0'\) could be strictly smaller than \(\alpha\), because otherwise we would have \(X^*M_0^*Y_0'<\alpha\), contradicting the fact that \((X^*, Y_0)\) is an NE. This implies that \(M_0^*Y_0'=\beta\) and hence the linear equation system \(M_0^*Y'=\beta\) has solutions. Denote by \(M_1^*\) the matrix obtained by replacing the j-th column of \(M^*\) with \(\beta\).

Since \(M^*Y^{*\prime}=\beta\), the vector \(\beta\) can be written as a linear combination of the column vectors \(\{m_1,m_2,\ldots ,m_j,\ldots ,m_{2k}\}\) of \(M^*\) in which the coefficient of \(m_j\) (the j-th component of \(Y^*\)) is nonzero. By the substitution (Steinitz exchange) theorem, \(rank(M_1^*)=rank(M^*)=2k\). However, if \(M_0^*Y_0'=\beta\), then every column of \(M_1^*\) lies in the column space of \(M_0^*\), so \(rank(M_1^*)\le rank(M_0^*)\le 2k-1\), a contradiction.

The above contradiction implies that \(R_2\) is precisely the support of all equilibrium strategies of Player 2. In a similar way, we can show that \(R_1\) is precisely the support of all equilibrium strategies of Player 1. Let X and Y be arbitrary equilibrium strategies of Player 1 and Player 2, respectively. Then

Since \(M^*\) is invertible, \((X^*,Y^*)\) is the unique solution. This finishes the proof. \(\square\)

Appendix C: Extreme point representation

In Sect. 5, the equilibrium strategy sets in cases (i) and (ii) are characterized by linear inequalities, which define convex polyhedra (Case (iii) is special: both players’ equilibrium strategies are unique). As suggested by an anonymous reviewer, a more informative characterization would specify their extreme points. In this appendix, we provide partial results in this direction.

In this appendix, we denote by \({{\,\mathrm{\textrm{Conv}}\,}}(\textbf{E})\) the convex hull of a finite set \(\textbf{E} = \{\textbf{x}_1,\ldots , \textbf{x}_n\}\). That is, \({{\,\mathrm{\textrm{Conv}}\,}}(\textbf{E}) = \left\{ \sum _{i=1}^n\lambda _i\textbf{x}_i \Big | \sum _{i=1}^{n}\lambda _i=1;\lambda _i\ge 0, 1\le i\le n\right\} .\)

C.1 Case (i): \(\Delta =1\)

Recall that \(h = \lceil A/2\rceil\). Denote

Proposition 1

The extreme points of \({\mathcal {F}}^1_x\) and \({\mathcal {F}}^1_y\) are, respectively,

where \(\textbf{e}_1=(1,0)\) and \(\textbf{e}_2=(0,1)\).

Following Proposition 1 and Theorem 4:

- If A is odd, then Player 1’s equilibrium strategies can be rewritten as

$$\begin{aligned} {\mathcal {X}}^*\sim \sum _{i=0}^{A}x_iI_i: \textbf{x}= \big (x_{2i}, x_{2i+1}\big )'_{i\in [h-1]}\in {{\,\mathrm{\textrm{Conv}}\,}}\left( \textbf{E}^1_x\right) . \end{aligned}$$

- If A is even, then Player 2’s equilibrium strategies can be rewritten as

$$\begin{aligned} {\mathcal {Y}}^*\sim \sum _{j=0}^{B}y_jI_j: \textbf{y}= \left( y_{2j}, y_{2j+1}\right) '_{j\in [h-1]}\in {{\,\mathrm{\textrm{Conv}}\,}}\left( \textbf{E}^1_y\right) . \end{aligned}$$

Note that if A is odd (resp. even), Player 2’s (resp. Player 1’s) equilibrium strategy is not included in the above description, because it is unique, as shown in Theorem 4.

C.2 Part 1 of Case (ii): \(\Delta \ge 3\) and \(1\le r\le \Delta -3\)

Denote

where all other entries that are not described in \(\textbf{x}\) and \(\textbf{y}\) are zero.

Suppose that the points in \({\mathcal {F}}^2_x\) satisfy

(1) \(\sum _{t=1}^{\Delta -r-1}x_{s\Delta +r+t}=\frac{1}{k}\) for all \(s\in [k-1]\),

(2) \(\left( x_{s\Delta +r+1}\right) _{s=0}^{k-1}\) and \(\left( \sum _{z=r+1}^{t-1}x_{s\Delta +z}+0.5x_{s\Delta +t}\right) _{s=0}^{k-1}\) are non-decreasing for all integers \(t=r+2,r+3,\ldots , \Delta -1\),

and points in \({\mathcal {F}}^2_y\) satisfy

(1) \(\sum _{t=0}^{r}y_{s\Delta +t}=\frac{1}{k}\) for all \(s\in [k-1]\),

(2) \(\left( y_{s\Delta }\right) _{s=0}^{k-1}\) and \(\left( \sum _{z=0}^{t-1}y_{s\Delta +z}+0.5y_{s\Delta +t}\right) _{s=0}^{k-1}\) are non-increasing for all integers \(t\in [r]\setminus \{0\}\).

Proposition 2

The extreme points of \({\mathcal {F}}^2_x\) and \({\mathcal {F}}^2_y\) are, respectively,

where, for all \(j = 1,\ldots , k\), the \((i_j)\)-th component of the row vector \(e_{i_j}\in {\mathbb {R}}^{\Delta -r-1}\) is 1 and all other components are zero.

Following Proposition 2 and Theorem 5, when \(\Delta \ge 3\) and \(1\le r\le \Delta -3\), Player 1’s equilibrium strategies can be rewritten as

and Player 2’s equilibrium strategies can be rewritten as

We do not completely describe the extreme points of the convex polyhedra in Parts (2) and (3) of Case (ii). Their structure appears to be very complicated, and a complete description is unlikely to yield further insight into the Blotto game.

C.3 Proof of propositions in Appendix C

Proof of Proposition 1

We only discuss \(\textbf{E}^1_x\); the other case is similar. Clearly, for any \(w\in [h]\), \(\textbf{q}_x^w\) belongs to \({\mathcal {F}}^1_x\) and cannot be written as a convex combination of two other points of \({\mathcal {F}}^1_x\), because the non-zero components of \(\textbf{q}_x^w\) all attain the maximum value \(\frac{1}{h}\).

For any \(\textbf{x} = \left( x_{2i}, x_{2i+1}\right) '_{i\in [h-1]} \in {\mathcal {F}}^1_x\),

where the last equality uses \(x_{2\,h-2}+x_{2\,h-1}-x_{2i} = \frac{1}{h} - x_{2i} = x_{2i+1}\). Moreover, the coefficients, which are non-negative by the monotonicity condition, satisfy \(hx_0 + \sum _{l = 1}^{h-1}h(x_{2\,l}-x_{2\,l-2}) + hx_{2\,h-1} = 1.\) That is, every point in \({\mathcal {F}}^1_x\) can be represented as a convex combination of the points \(\textbf{q}_x^{w}\), \(w \in [h]\). Therefore, \(\textbf{E}^1_x\) contains all the extreme points of \({\mathcal {F}}^1_x\). \(\square\)
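The decomposition in this proof can be replayed on a concrete point. The display defining \(\textbf q^w_x\) is not reproduced here, so the sketch uses an indexing consistent with the coefficients above (our assumption): \(\textbf q^w_x\) puts \(1/h\) on \(x_{2i+1}\) for \(i<w\) and on \(x_{2i}\) for \(i\ge w\). It computes the weights \(hx_0\), \(h(x_{2l}-x_{2l-2})\) and \(hx_{2h-1}\), and confirms that they are non-negative, sum to one, and recombine to the original point. Function names are hypothetical.

```python
from fractions import Fraction as F

def q(w, h):
    """Assumed extreme point q_x^w, flattened as (x_0, x_1, ..., x_{2h-1})."""
    x = []
    for i in range(h):
        x += [F(1, h), F(0)] if i >= w else [F(0), F(1, h)]
    return x

def weights(x, h):
    """Convex weights on q_x^0, ..., q_x^h taken from the proof of Proposition 1."""
    return ([h * x[0]]
            + [h * (x[2 * l] - x[2 * l - 2]) for l in range(1, h)]
            + [h * x[2 * h - 1]])

def recombine(lam, h):
    """Sum of lam[w] * q_x^w over w in [h]."""
    points = [q(w, h) for w in range(h + 1)]
    return [sum(lam[w] * points[w][j] for w in range(h + 1)) for j in range(2 * h)]

# A point of F^1_x with h = 3: block sums equal 1/3 and x_0 <= x_2 <= x_4.
x = [F(0), F(1, 3), F(1, 6), F(1, 6), F(1, 4), F(1, 12)]
lam = weights(x, 3)   # equals [0, 1/2, 1/4, 1/4]
```

If the monotonicity condition were violated, one of the middle weights \(h(x_{2l}-x_{2l-2})\) would become negative, which is exactly where the argument uses that condition.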

Proof of Proposition 2

We only discuss \(\textbf{E}_x^2\). Clearly, every point in \(\textbf{E}_x^2\) belongs to \({\mathcal {F}}^2_x\) and cannot be written as a convex combination of two other points of \({\mathcal {F}}^2_x\), because the non-zero components of every point in \(\textbf{E}_x^2\) all attain the maximum value \(\frac{1}{k}\).

For any \(\textbf{x} = \Big (\{x_{s\Delta +r+t}\}_{t=1}^{\Delta -r -1}, s\in [k-1] \Big )' \in {\mathcal {F}}^2_x\), we show constructively that \(\textbf{x}\) can be represented as a convex combination of certain points in \(\textbf{E}_x^2\).

Step 1.

where \(\phi _{11} = \sum _{w = 1}^{\Delta - r -2}x_{r+w}\).

Step 2.

where \(\phi _{21} = \phi _{22}-\phi _{11},\) and \(\phi _{22} = \sum _{w = 1}^{\Delta - r -2}x_{\Delta +r+w}\).

Step 3.

where \(\phi _{31} = \phi _{33}-\phi _{11},\) \(\phi _{32} =\phi _{33}-\phi _{22},\) and \(\phi _{33} = \sum _{w = 1}^{\Delta - r -2}x_{2\Delta +r+w}\).

Step \(k-1\).

where \(\phi _{k-1, j} = \phi _{k-1,k-1}-\phi _{j,j}, \forall j= 1,\ldots , k-2\), and \(\phi _{k-1, k-1} = \sum _{w = 1}^{\Delta - r -2}x_{(k-2)\Delta +r+w}\).

Step k.

where \(\phi _{k, j} = \sum _{w = 1}^{\Delta - r -1}x_{(k-1)\Delta +r+w}-\phi _{j,j}, \forall j= 1,\ldots , k-1\).

Moreover, all the coefficients satisfy

Since \(\textbf{x}\in {\mathcal {F}}^2_x\) and \(\sum _{t=1}^{\Delta -r-1}x_{s\Delta +r+t}=\frac{1}{k}, \forall s\in [k-1]\), we have \(\phi _{j,j}=\frac{1}{k}- x_{j\Delta -1}\) and \(\phi _{k,j}= x_{j\Delta -1}\) for all \(j = 1,\ldots , k-1\). Thus, the above k steps yield a convex combination representation of \(\textbf{x}=\textbf{z}_k\) by certain points in \(\textbf{E}^2_x\).

In summary, every point in \({\mathcal {F}}^2_x\) can be represented by a convex combination of certain points in \(\textbf{E}_x^2\), implying that \(\textbf{E}^2_x\) contains all the extreme points of \({\mathcal {F}}^2_x\). \(\square\)

Note that the above representation relies on the condition \(\phi _{s,s} \le x_{s\Delta +r+1}, \forall s =1,\ldots , k-1\). When this condition fails, some coefficients might be negative. However, the condition is not restrictive: by rearranging the order of the k steps, a similar construction applies. The following example illustrates how this works.

Suppose that \(A = 11\) and \(B = 6\). The points in \({\mathcal {F}}_x^2\) are characterized by

According to Proposition 2, the extreme points of \({\mathcal {F}}_x^2\) are as follows:

For any \(\textbf{x} = (x_2,x_3,x_4,x_7,x_8,x_9)' \in {\mathcal {F}}^2_x\), since \(x_2+x_3+x_4 = x_7+x_8+x_9\), exactly one of the following two assertions is true:

(1) \(x_2+x_3 \le x_7\) (i.e., \(\phi _{11} = \frac{1}{2} - x_4 \le x_7\), which implies \(x_4 \ge x_8+x_9\));

(2) \(x_2+x_3 > x_7\) (i.e., \(\phi _{11} = \frac{1}{2} - x_4 > x_7\), which implies \(x_4 < x_8+x_9\)).

When Assertion (1) is true, we have

When Assertion (2) is true, we have

That is, every point in \({\mathcal {F}}^2_x\) can be represented by a convex combination of these extreme points. Consequently, \(\{\textbf{q}_i: i=0,\ldots ,5\}\) is the set of all extreme points.
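Proposition 2 states that the non-zero components of every extreme point of \({\mathcal F}^2_x\) equal \(1/k\), one unit vector \(e_{i_j}\) per block. Since the displayed list of extreme points is not reproduced here, the sketch below (our own helper names, with 0-based positions inside each block) enumerates all candidates \(\frac1k(e_{i_1},\ldots,e_{i_k})\) and keeps those that satisfy conditions (1) and (2). For \(A=11\) and \(B=6\) (so \(\Delta=5\), and \(k=2\), \(r=1\) if one writes \(A=k\Delta+r\)) it finds six points, in agreement with \(\{\textbf q_i: i=0,\ldots,5\}\), and shows that the block positions must be non-increasing in s.

```python
from fractions import Fraction as F
from itertools import product

def in_F2x(blocks, k):
    """Conditions (1)-(2), with blocks[s] = (x_{s*Delta+r+1}, ..., x_{(s+1)*Delta-1})."""
    if any(sum(b) != F(1, k) for b in blocks):
        return False
    def c(b, t):   # entries before position t (1-based), plus half of entry t
        return sum(b[:t - 1]) + b[t - 1] / 2
    m = len(blocks[0])
    return all(c(blocks[s], t) <= c(blocks[s + 1], t)
               for s in range(k - 1) for t in range(1, m + 1))

def unit_block_points(delta, r, k):
    """0-based index tuples (i_1, ..., i_k) for which (e_{i_1}, ..., e_{i_k})/k lies in F^2_x."""
    m = delta - r - 1
    kept = []
    for idx in product(range(m), repeat=k):
        blocks = [[F(1, k) if t == i else F(0) for t in range(m)] for i in idx]
        if in_F2x(blocks, k):
            kept.append(idx)
    return kept

points = unit_block_points(5, 1, 2)   # A = 11, B = 6
```

The enumeration only tests membership in \({\mathcal F}^2_x\); extremality itself follows from the argument in the proof of Proposition 2.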

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Liang, D., Wang, Y., Cao, Z. et al. Discrete Colonel Blotto games with two battlefields. Int J Game Theory 52, 1111–1151 (2023). https://doi.org/10.1007/s00182-023-00853-4


DOI: https://doi.org/10.1007/s00182-023-00853-4