Abstract

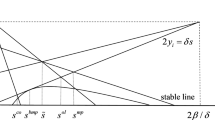

We consider a discrete-time Markov Decision Process (MDP) under the discounted payoff criterion in the presence of additional discounted cost constraints. We study the sensitivity of optimal Stationary Randomized (SR) policies in this setting with respect to the upper bound on the discounted cost constraint functionals. We show that such sensitivity analysis leads to an improved version of the Feinberg–Shwartz algorithm (Math Oper Res 21(4):922–945, 1996) for finding optimal policies that are ultimately stationary and deterministic.
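The setting above can be made concrete with a small numerical sketch. It is not the authors' sensitivity analysis and not the Feinberg–Shwartz algorithm. It relies only on the standard fact (see, e.g., Altman 1999) that in a finite discounted MDP with a fixed initial distribution, the set of achievable (discounted cost, discounted reward) pairs is the convex hull of the pairs attained by deterministic stationary policies, and every point of that set is attained by some stationary randomized policy. With a single cost constraint, the optimal value V(β) as a function of the bound β is then concave and piecewise linear, and its slope is a simple measure of sensitivity to β. The last helper evaluates one simple form of ultimately stationary deterministic policy (fixed deterministic decision rules for the first N steps, then one stationary deterministic rule), which is how one could check the reward and cost of such a candidate. The MDP data, all helper names (`policy_values`, `constrained_value`, `sensitivity`, `ultimately_stationary_value`) and the single-constraint restriction are hypothetical choices for illustration.

```python
from itertools import product


def policy_values(P, r, gamma, policy, tol=1e-12):
    """Per-state discounted totals of r under a deterministic stationary policy.

    P[s][a][s2] is a transition probability, r[s][a] a one-step reward or cost,
    and policy[s] the action used in state s. Iterates v <- r_pi + gamma * P_pi v,
    which converges because this map is a gamma-contraction for gamma < 1.
    """
    n = len(P)
    v = [0.0] * n
    while True:
        new = [r[s][policy[s]] + gamma * sum(P[s][policy[s]][t] * v[t] for t in range(n))
               for s in range(n)]
        if max(abs(a - b) for a, b in zip(new, v)) < tol:
            return new
        v = new


def evaluate(P, r, gamma, mu, policy):
    """Discounted total of r from the initial distribution mu."""
    v = policy_values(P, r, gamma, policy)
    return sum(m * x for m, x in zip(mu, v))


def performance_points(P, R, C, gamma, mu):
    """(discounted cost, discounted reward, policy) for every deterministic stationary policy."""
    n_states, n_actions = len(P), len(P[0])
    return [(evaluate(P, C, gamma, mu, pol), evaluate(P, R, gamma, mu, pol), pol)
            for pol in product(range(n_actions), repeat=n_states)]


def constrained_value(points, beta):
    """V(beta): max discounted reward s.t. discounted cost <= beta (None if infeasible).

    Achievable (cost, reward) pairs form the convex hull of the deterministic
    stationary points, so with one constraint the optimum is a single point or a
    mixture of two points whose costs straddle beta.
    """
    best = None
    for c, rew, _ in points:
        if c <= beta and (best is None or rew > best):
            best = rew
    for (c1, r1, _), (c2, r2, _) in product(points, repeat=2):
        if c1 < beta < c2:
            t = (c2 - beta) / (c2 - c1)  # weight on the cheaper point; mixed cost equals beta
            val = t * r1 + (1 - t) * r2
            if best is None or val > best:
                best = val
    return best


def sensitivity(points, beta, h=1e-6):
    """Right finite-difference estimate of dV/dbeta (assumes beta is feasible)."""
    return (constrained_value(points, beta + h) - constrained_value(points, beta)) / h


def ultimately_stationary_value(P, r, gamma, mu, rules, tail):
    """Discounted total of r when deterministic rule rules[t] is used at step t < N = len(rules)
    and the deterministic stationary rule `tail` is used from step N onward."""
    n = len(P)
    dist, total = list(mu), 0.0
    for t, f in enumerate(rules):
        total += gamma ** t * sum(dist[s] * r[s][f[s]] for s in range(n))
        # state distribution at step t + 1 under decision rule f
        dist = [sum(dist[s] * P[s][f[s]][s2] for s in range(n)) for s2 in range(n)]
    v_tail = policy_values(P, r, gamma, tail)
    return total + gamma ** len(rules) * sum(d * x for d, x in zip(dist, v_tail))


# Hypothetical 2-state, 2-action example: action 1 earns more but also costs more.
gamma, mu = 0.9, [1.0, 0.0]
P = [[[0.9, 0.1], [0.2, 0.8]],
     [[0.5, 0.5], [0.1, 0.9]]]
R = [[1.0, 3.0], [0.0, 2.0]]
C = [[0.0, 2.0], [0.0, 1.5]]

points = performance_points(P, R, C, gamma, mu)
for beta in [0.0, 2.0, 5.0, 10.0]:
    print(beta, constrained_value(points, beta), sensitivity(points, beta))
```

Once β is at least the cost of some unconstrained optimal policy, the constraint no longer binds and the estimated slope is zero; below that, a positive slope indicates how much discounted reward is gained per unit of extra cost allowance. Exact enumeration of deterministic policies is only practical for tiny models; the usual route for larger ones is the occupation-measure linear program, whose constraint multiplier plays the role of this slope.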


References

Altman E (1999) Constrained Markov decision processes. Chapman and Hall, Boca Raton

Bertsekas DP (1995) Dynamic programming and optimal control, Vols. 1 and 2. Athena Scientific, Belmont

Balaji J, Hemachandra N (2005) Sensitivity analysis of (s, S) policies. Under revision

Chvátal V (1983) Linear programming. W.H. Freeman and Company, New York

Derman C, Klein M (1965) Some remarks on finite horizon Markovian decision models. Oper Res 13: 272–278

Feinberg EA, Shwartz A (1994) Markov decision models with weighted discounted criteria. Math Oper Res 19: 152–168

Feinberg EA, Shwartz A (1995) Constrained Markov decision models with weighted discounted rewards. Math Oper Res 20: 302–320

Feinberg EA, Shwartz A (1996) Constrained discounted dynamic programming. Math Oper Res 21(4): 922–945

Feinberg EA, Shwartz A (2002) Handbook of Markov decision processes: methods and applications. Kluwer, Boston

Iyer KR, Hemachandra N (2007) Ultimately stationary deterministic strategies for stochastic games. In: Proceedings of the international conference on advances in control and optimization of dynamical systems (ACODS 2007), IISc., Bangalore, Feb 1–2, pp 414–421

Iyer K, Hemachandra N (2006) An algorithm for some constrained optimal stopping time problems. Working Paper, IEOR, IIT Bombay

Jaquette SC (1976) A utility criterion for Markov decision processes. Manag Sci 23(1): 43–49

Puranam KS, Hemachandra N (2005) Sensitivity analysis of control limit with respect to the cost parameters in an M/M/1 queue. In: Vision 2020: the strategic role of operational research (2006), based on the 37th annual convention of the Operational Research Society of India, IIM Ahmedabad, Jan 8–11, pp 433–439

Puterman ML (1994) Markov decision processes. Wiley, New York

Zadorojniy A, Shwartz A (2006) Robustness of policies in constrained Markov decision processes. IEEE Trans Autom Control 51(4): 635–638


About this article

Cite this article

Iyer, K., Hemachandra, N. Sensitivity analysis and optimal ultimately stationary deterministic policies in some constrained discounted cost models. Math Meth Oper Res 71, 401–425 (2010). https://doi.org/10.1007/s00186-010-0303-8


DOI: https://doi.org/10.1007/s00186-010-0303-8