Abstract

A model-based clustering method based on Gaussian Cox process is proposed to address the problem of clustering of count process data. The model allows for nonparametric estimation of intensity functions of Poisson processes, while simultaneous clustering count process observations. A logistic Gaussian process transformation is imposed on the intensity functions to enforce smoothness. Maximum likelihood parameter estimation is carried out via the EM algorithm, while model selection is addressed using a cross-validated likelihood approach. The proposed model and methodology are applied to two datasets.

Similar content being viewed by others

Notes

Historical data available at https://www.capitalbikeshare.com/

Data obtained from http://sociograph.blogspot.ie/2011/04/communication-networks-part-2-mit.html

References

Abraham, C., Cornillon, P. A., Matzner-Løber, E., & Molinari, N. (2003). Unsupervised curve clustering using B-splines. Scand. J. Statist., 30, 581–595.

Adams, R. P., Murray, I., & MacKay, D. J. C. (2009). Tractable nonparametric Bayesian inference in Poisson processes with Gaussian process intensities. In Proceedings of the 26th annual international conference on machine learning (pp. 9–16).

Basu, S., & Dassios, A. (2002). A Cox process with log-normal intensity. Insurance sMath. Econom., 31, 297–302.

Baudry, J. -P., Raftery, A. E., Celeux, G., Lo, K., & Gottardo, R. (2010). Combining mixture components for clustering. J. Comput. Graph. Statist., 19, 332–353.

Bengio, Y., & Grandvalet, Y. (2004). No unbiased estimator of the variance of K-fold cross-validation. Journal of Machine Learning Research, 5, 1089–1105.

Bouveyron, C., & Brunet-Saumard, C. (2014). Model-based clustering of high-dimensional data: a review. Comput. Statist. Data Anal., 71, 52–78.

Bouveyron, C., Celeux, G., Murphy, T.B., & Raftery, A. E. (2019). Model-based clustering and classification for data science: with applications in R. Cambridge: Cambridge University Press.

Chen, J. (2017). Consistency of the MLE under mixture models. Statist Sci., 32, 47–63.

Chen, J., Li, S., & Tan, X. (2016). Consistency of the penalized MLE for two-parameter gamma mixture models. Sci China Math., 59, 2301–2318.

Chen, J., Tan, X., & Zhang, R. (2008). Inference for normal mixtures in mean and variance. Statist Sinica, 18, 443–465.

Cheng, R. C. H., & Liu, W. B. (2001). The consistency of estimators in finite mixture models. Scand J. Statist., 28, 603–616.

Ciuperca, G., Ridolfi, A., & Idier, J. (2003). Penalized maximum likelihood estimator for normal mixtures. Scand J. Statist., 30, 45–59.

Côme, E., & Latifa, O. (2014). Model-based count series clustering for Bike-sharing system usage mining, a case study with the Velib’ system of Paris,́ ACM Transactions on Intelligent Systems and Technology (TIST) - Special Section on Urban Computing, 5.

Cunningham, J. P., Shenoy, K. V., & Sahani, M. (2008). Fast Gaussian process methods for point process intensity estimation. In Proceedings of the 25th international conference on machine learning (pp. 192–199).

Dempster, A. P., Laird, N. M., & Rubin, D. B. (1977). Maximum likelihood from incomplete data via the EM algorithm. J. Roy. Statist. Soc. Ser. B, 39, 1–38. with discussion.

Eagle, N., & Pentland, A. (2006). Reality mining: sensing complex social systems. Pers Ubiquitous Comput., 10, 255–268.

Fraley, C., & Raftery, A. E. (2002). Model-based clustering, discriminant analysis, and density estimation. J. Amer. Statist. Assoc., 97, 611–631.

Fraley, C., & Raftery, A. E. (2007). Bayesian regularization for normal mixture estimation and model-based clustering. Journal of Classification, 24, 155–181.

Giacofci, M., Lambert-Lacroix, S., Marot, G., & Picard, F. (2013). Wavelet-based clustering for mixed-effects functional models in high dimension. Biometrics, 69, 31–40.

Heikkinen, J., & Arjas, E. (1999). Modeling a Poisson forest in variable elevations: a nonparametric Bayesian approach. Biometrics, 55, 738–745.

Jacques, J., & Preda, C. (2014). Model-based clustering for multivariate functional data. Comput Statist. Data Anal., 71, 92–106.

Kiefer, J., & Wolfowitz, J. (1956). Consistency of the maximum likelihood estimator in the presence of infinitely many incidental parameters. Ann. Math. Statist., 27, 887–906.

Kim, H., & Ghahramani, Z. (2006). Bayesian Gaussian process classification with the EM-EP algorithm. IEEE Transactions on Pattern Analysis and Machine Intelligence, 28, 1948–1959.

Kohavi, R. (1995). A study of cross-validation and bootstrap for accuracy estimation and model selection. In: Proceedings of the 14th international joint conference on artificial intelligence - vol. 2, San Francisco, CA, USA: Morgan Kaufmann Publishers Inc., IJCAI’95, pp. 1137–1143.

Lenk, P. J. (1988). The logistic normal distribution for Bayesian, nonparametric, predictive densities. J. Amer. Statist. Assoc., 83, 509–516.

Lenk, P. J. (1991). Towards a practicable Bayesian nonparametric density estimator. Biometrika, 78, 531–543.

Leonard, T. (1978). Density estimation, stochastic processes and prior information. J. Roy. Statist. Soc. Ser. B, 40, 113–146. with discussion.

Lloyd, C., Gunter, T., Osborne, M. A., & Roberts, S. J. (2015). Variational inference for Gaussian process modulated Poisson processes. In Proceedings of the 32nd international conference on international conference on machine learning -, (Vol. 37 pp. 1814–1822).

McNicholas, P.D. (2016). Mixture model-based classification. Boca Raton: CRC Press.

Murray, I., MacKay, D., & Adams, R. P. (2009). The Gaussian process density sampler. In Koller, D., Schuurmans, D., Bengio, Y., & Bottou, L. (Eds.) Advances in neural information processing systems 21 (pp. 9–16): Curran Associates, Inc.

O’Hagan, A., Murphy, T. B., Scrucca, L., & Gormley, I. C. (2019). Investigation of parameter uncertainty in clustering using a Gaussian mixture model via jackknife, bootstrap and weighted likelihood bootstrap. Comput Stat., 34, 1779–1813.

Rasmussen, C. E., & Williams, C. K. I. (2006). Gaussian processes for machine learning, adaptive computation and machine learning. MIT Press: Cambridge.

Sapatinas, T. (1995). Identifiability of mixtures of power-series distributions and related characterizations. Ann. Inst. Statist. Math., 47, 447–459.

Smyth, P. (2000). Model selection for probabilistic clustering using cross-validated likelihood. Stat Comp., 10, 63–72.

Tokdar, S. T., & Ghosh, J. K. (2007). Posterior consistency of logistic Gaussian process priors in density estimation. J. Statist. Plann. Inference, 137, 34–42.

Williams, C. K. I., & Rasmussen, C. E. (1996). Gaussian processes for regression. In Advances in neural information processing systems 8 (pp. 514–520). Cambridge: MIT Press.

Zhang, Y., & Yang, Y. (2015). Cross-validation for selecting a model selection procedure. J. Econom., 187, 95–112.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A: Consistency of Penalized MLE

Sufficient conditions for \(\hat {P}\) to be strongly consistent are given in Kiefer and Wolfowitz (1956) and are stated below.

-

(C1) Identifiability: Let H(yi; αi, P) be the cumulative distribution function of h(yi; αi, P). If for any αi, H(yi; αi, P) = H(yi; αi, P∗) for all yi, then D(P, P∗) = 0.

-

(C2) Continuity: The component parameter space \(\mathcal {F}\) is a closed set. For all yi, αi and any given P0,

$$ \begin{array}{@{}rcl@{}} \lim_{P \rightarrow P_{0}} h(y_{i};{\alpha}_{i},P) = h(y_{i};{\alpha}_{i},P_{0}) {.} \end{array} $$ -

(C3) Finite Kullback–Leibler Information: For any P≠P∗, there exists an 𝜖 > 0 such that

$$ \begin{array}{@{}rcl@{}} E^{*}[ \log(h(Y_{i}; {\alpha}_{i}, B_{\epsilon}(P) ) / h(Y_{i}; {\alpha}_{i}, P^{*}) ) ]^{+} < \infty { ,} \end{array} $$where B𝜖(P) is the open ball of radius 𝜖 > 0 centered at P with respect to the metric D, and

$$ \begin{array}{@{}rcl@{}} h(Y_{i}; {\alpha}_{i}, B_{\epsilon}(P)) = \sup_{\tilde{P} \in B_{\epsilon}(P) } h(Y_{i}; {\alpha}_{i}, \tilde{P}) { .} \end{array} $$ -

(C4) Compactness: The definition of the mixture density h(yi; αi, P) in P can be continuously extended to a compact space \( \overline { \mathbb {P} }\).

Identifiability of the mixture model is established in Proposition 3.1. In the case of mixture of Poisson processes, the component parameter space \( \mathcal {F} \) is clearly closed. To compactify the space of mixing distributions \(\mathbb { P}\), we notice that the distance between any two mixing distributions is bounded above by:

We can extend \(\mathbb {P}\) to \(\overline {\mathbb { P}}\) by including all sub-distributions ρP for any ρ ∈ [0, 1) as in Chen (2017).

We now show the continuity of h(yi; αi, P) on \( \overline { \mathbb {P} } \). Recall that \(D(P_{m},P) \rightarrow 0 \) if and only if \( P_{m} \rightarrow P\) in distribution if only if \( {\int \limits } u(\mathbf {f}) d P_{m}(\mathbf {f}) \rightarrow {\int \limits } u(\mathbf {f}) dP_{0}(\mathbf {f}) \) for all bounded and continuous function u(⋅).

For any given \(\mathbf {f}_{0} \in \mathcal {F}\) and αi > 0, we clearly have \( \lim _{\mathbf {f} \rightarrow \mathbf {f}_{0} } h(y_{i};{\alpha }_{i},\mathbf {f}) = h(y_{i};{\alpha }_{i},\mathbf {f}_{0}) \), and \( \lim _{| \mathbf {f} | \rightarrow \infty } h(y_{i}; {\alpha }_{i}, \mathbf {f} ) = 0\). Hence, h(yi; αi, f) is continuous and bounded on \( \mathcal {F} \). Suppose that \(P_{m} \rightarrow P_{0} \in \overline {\mathbb {P}}\) in distribution, we have that:

which shows that h(yi; αi, P) is continuous in \(\mathbb {P}\).

To prove finite Kullback–Leibler information, we need the extra sufficient condition that all scaling factors {αi}i are bounded above, that is, \( {\alpha }_{i} < \alpha ^{(M)} < \infty \) almost surely ∀i. Let f0 be a support point of P∗. There must be a positive constant δ such that:

uniformly for all αi < α(M). Therefore, we have that:

Since αi < α(M) almost surely, both E∗(Yi, k) and \(E^{*}(\log (Y_{i,k}!))\) are finite for all k. Hence, \( E^{*}(\log h(y_{i};{\alpha }_{i},P^{*}) ) > - \infty \). Since h(yi; αi, P∗) is bounded from above, \( E^{*}(\log h(y_{i};{\alpha }_{i},P^{*}) ) < \infty \). Therefore, \( E^{*}|\log h(y_{i};{\alpha }_{i},P^{*}) | < \infty \).

Since \(h(Y_{i};{\alpha }_{i},B_{\epsilon (P)}) < c < \infty \) for any P, 𝜖 and αi,

Therefore, we have shown that the four conditions above are satisfied and the penalized MLE under mixture of Poisson process is strongly consistent.

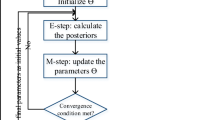

Appendix B: Derivation of EM Algorithm

Recall the complete data log-likelihood defined in Eq. 10. The E-step requires the computation of the expected value of the complete data log-likelihood function with respect to the conditional distribution of Z given x and current estimates of parameters \(\hat {\theta }^{(t)}\). This conditional expression can be expressed as:

The M-step finds the parameters θ that maximize the expression above. It is straightforward to show (by differentiation) that the values of α and τ that maximize the expression above are given by Eqs. 12 and 13 respectively.

To optimize Eq. 16 with respect to fg, we write fg = (fg,1,⋯ , fg, m)T and retain terms that involve fg for g = 1,⋯ , G to obtain:

where b = (b1,⋯ , bm)T with yk defined below.

where [sk− 1, sk) is the k th interval of the m equally spaced intervals of [0, T).

For g = 1,⋯ , G, we now optimize Q with respect to fg, and as analytical expression does not exist, we use Newton’s method. For each g, the Jacobian Jg(Q) of Q can be written as:

where ug = (ug,1,⋯ , ug, m)T with

for l = 1,⋯ , m. The Hessian Hg(Q) can be written as:

Rights and permissions

About this article

Cite this article

Ng, T.L.J., Murphy, T.B. Model-based Clustering of Count Processes. J Classif 38, 188–211 (2021). https://doi.org/10.1007/s00357-020-09363-4

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00357-020-09363-4