Abstract

We introduce the Transfer Physics Informed Neural Network (TPINN), a neural network-based approach for solving forward and inverse problems in nonlinear partial differential equations (PDEs). In TPINN, each non-overlapping subdomain is assigned its own physics informed neural network (PINN). One or more layers of each PINN carry a unique set of parameters, while the remaining layers are identical across the individual PINNs through parameter sharing. The subdomains can be obtained by partitioning the global computational domain, or they can be part of the problem definition, in which case they together make up the total computational domain. Solutions from different subdomains are connected during training through problem-specific interface conditions incorporated into the loss function. The proposed method efficiently handles forward and inverse problems in which the PDE formulation changes, or the PDE parameters are discontinuous, across subdomains. Parameter sharing reduces the dimension of the parameter space, the memory requirements, and the computational burden, and it increases accuracy. The efficacy of the proposed approach is demonstrated by solving various forward and inverse problems, including classical benchmark problems and problems involving parameter heterogeneity from the heat transfer domain. In inverse parameter estimation problems, statistical analysis of the estimated parameters is performed by solving each problem independently six times. For all inverse problems, a noise analysis is performed by varying the noise level in the input data.

References

Krizhevsky A, Sutskever I, Hinton GE (2012) ImageNet classification with deep convolutional neural networks. Adv Neural Inf Process Syst 25:1097–1105

Collobert R, Weston J (2008) A unified architecture for natural language processing: deep neural networks with multitask learning. ICML 2008:160–167. https://doi.org/10.1145/1390156.1390177

Amato F, López A, Peña-Méndez EM, Vaňhara P, Hampl A, Havel J (2013) Artificial neural networks in medical diagnosis. J Appl Biomed 11(2):47–58. https://doi.org/10.2478/v10136-012-0031-x

Moosazadeh S, Namazi E, Aghababaei H, Marto A, Mohamad H, Hajihassani M (2019) Prediction of building damage induced by tunnelling through an optimized artificial neural network. Eng Comput 35:579–591. https://doi.org/10.1007/s00366-018-0615-5

Ghaleini EN, Koopialipoor M, Momenzadeh M et al (2019) A combination of artificial bee colony and neural network for approximating the safety factor of retaining walls. Eng Comput 35:647–658. https://doi.org/10.1007/s00366-018-0625-3

Moayedi H, Moatamediyan A, Nguyen H et al (2020) Prediction of ultimate bearing capacity through various novel evolutionary and neural network models. Eng Comput 36:671–687. https://doi.org/10.1007/s00366-019-00723-2

Gordan B, Koopialipoor M, Clementking A et al (2019) Estimating and optimizing safety factors of retaining wall through neural network and bee colony techniques. Eng Comput 35:945–954. https://doi.org/10.1007/s00366-018-0642-2

Lagaris IE, Likas A, Fotiadis DI (1998) Artificial neural networks for solving ordinary and partial differential equations. IEEE Trans Neural Netw 9:987–1000. https://doi.org/10.1109/72.712178

Lagaris IE, Likas AC, Papageorgiou DG (2000) Neural-network methods for boundary value problems with irregular boundaries. IEEE Trans Neural Netw 11(05):1041–1049. https://doi.org/10.1109/72.870037

Meade AJ Jr, Fernandez AA (1994) The numerical solution of linear ordinary differential equations by feedforward neural networks. Math Comput Model 19(12):1–25. https://doi.org/10.1016/0895-7177(94)90095-7

Meade AJ Jr, Fernandez AA (1994) Solution of nonlinear ordinary differential equations by feedforward neural networks. Math Comput Model 20(9):19–44. https://doi.org/10.1016/0895-7177(94)00160-X

Rudd K, Ferrari S (2015) A constrained integration (CINT) approach to solving partial differential equations using artificial neural networks. Neurocomputing 155:277–285. https://doi.org/10.1016/j.neucom.2014.11.058

Berg J, Nystrom K (2018) A unified deep artificial neural network approach to partial differential equations in complex geometries. Neurocomputing 317:28–41. https://doi.org/10.1016/j.neucom.2018.06.056

Sirignano J, Spiliopoulos K (2018) DGM: a deep learning algorithm for solving partial differential equations. J Comput Phys 375:1339–1364. https://doi.org/10.1016/j.jcp.2018.08.029

Raissi M, Perdikaris P, Karniadakis GE (2018) Physics-informed neural networks: A deep learning framework for solving forward and inverse problems involving nonlinear partial differential equations. J Comput Phys 378:686–707. https://doi.org/10.1016/j.jcp.2018.10.045

Anitescu C, Atroshchenko E, Alajlan N, Rabczuk T (2019) Artificial neural network methods for the solution of second order boundary value problems. Comput Mater Contin 59(1):345–359. https://doi.org/10.32604/cmc.2019.06641

Gao H, Sun L, Wang J-X (2021) PhyGeoNet: physics-informed geometry-adaptive convolutional neural networks for solving parameterized steady-state PDEs on irregular domain. J Comput Phys. https://doi.org/10.1016/j.jcp.2020.110079

Nabian MA, Meidani H (2019) A deep learning solution approach for high-dimensional random differential equations. Prob Eng Mech 57:14–25. https://doi.org/10.1016/j.probengmech.2019.05.001

Malek A, Beidokhti RS (2006) Numerical solution for high order differential equations using a hybrid neural network-optimization method. Appl Math Comput 183(1):260–271. https://doi.org/10.1016/j.amc.2006.05.068

Zhang R, Bilige S (2019) Bilinear neural network method to obtain the exact analytical solutions of nonlinear partial differential equations and its application to p-gBKP equation. Nonlinear Dyn 95:3041–3048. https://doi.org/10.1007/s11071-018-04739-z

Paripour M, Ferrara M, Salimi M (2017) Approximate solutions by artificial neural network of hybrid fuzzy differential equations. Adv Mech Eng 9(9):1–9. https://doi.org/10.1177/1687814017717429

Jafarian A, Mokhtarpour M, Baleanu D (2017) Artificial neural network approach for a class of fractional ordinary differential equation. Neural Comput Appl 28:765–773. https://doi.org/10.1007/s00521-015-2104-8

Panghal S, Kumar M (2020) Optimization free neural network approach for solving ordinary and partial differential equations. Eng Comput. https://doi.org/10.1007/s00366-020-00985-1

Panghal S, Kumar M (2021) Neural network method: delay and system of delay differential equations. Eng Comput. https://doi.org/10.1007/s00366-021-01373-z

Samaniego E, Anitescu C, Goswami S, Nguyen-Thanh VM, Guo H, Hamdia K, Zhuang X, Rabczuk T (2020) An energy approach to the solution of partial differential equations in computational mechanics via machine learning: concepts, implementation and applications. Comput Methods Appl Mech Eng. https://doi.org/10.1016/j.cma.2019.112790

Ramabathiran AA, Ramachandran P (2021) SPINN: sparse, physics-based, and partially interpretable neural networks for PDEs. J Comput Phys. https://doi.org/10.1016/j.jcp.2021.110600

Goswami S, Anitescu C, Chakraborty S, Rabczuk T (2020) Transfer learning enhanced physics informed neural network for phase-field modeling of fracture. Theor Appl Fract Mech. https://doi.org/10.1016/j.tafmec.2019.102447

Niaki SA, Haghighat E, Campbell T, Poursartip A, Vaziri R (2021) Physics-informed neural network for modelling the thermochemical curing process of composite-tool systems during manufacture. Comput Methods Appl Mech Eng. https://doi.org/10.1016/j.cma.2021.113959

Nguyen-Thanh VM, Anitescu C, Alajlan N, Rabczuk T, Zhuang X (2021) Parametric deep energy approach for elasticity accounting for strain gradient effects. Comput Methods Appl Mech Eng 386:114096. https://doi.org/10.1016/j.cma.2021.114096

Cai S, Wang Z, Wang S, Perdikaris P, Karniadakis GE (2021) Physics informed neural networks for heat transfer problems. ASME J Heat Transf 143(6):060801. https://doi.org/10.1115/1.4050542

He Z, Ni F, Wang W, Zhang J (2021) A physics-informed deep learning method for solving direct and inverse heat conduction problems of materials. Mater Today Commun 28:102719. https://doi.org/10.1016/j.mtcomm.2021.102719

Zobeiry N, Humfeld KD (2021) A physics-informed machine learning approach for solving heat transfer equation in advanced manufacturing and engineering applications. Eng Appl Artif Intell 101:104232. https://doi.org/10.1016/j.engappai.2021.104232

Oommen V, Srinivasan B (2022) Solving inverse heat transfer problems without surrogate models: a fast, data-sparse, physics informed neural network approach. J Comput Inf Sci Eng 22(4):041012. https://doi.org/10.1115/1.4053800

Haghighat E, Juanes R (2021) SciANN: A Keras/TensorFlow wrapper for scientific computations and physics-informed deep learning using artificial neural networks. Comput Methods Appl Mech Eng 373:113552. https://doi.org/10.1016/j.cma.2020.113552

Mishra S, Molinaro R (2021) Physics informed neural networks for simulating radiative transfer. J Quant Spectrosc Radiat Transf 270:107705. https://doi.org/10.1016/j.jqsrt.2021.107705

Zhu Q, Liu Z, Yan J (2021) Machine learning for metal additive manufacturing: predicting temperature and melt pool fluid dynamics using physics-informed neural networks. Comput Mech 67:619–635. https://doi.org/10.1007/s00466-020-01952-9

Lu L, Meng X, Mao Z, Karniadakis GE (2021) DeepXDE: a deep learning library for solving differential equations. SIAM Rev 63(1):208–228. https://doi.org/10.1137/19M1274067

Chen F, Sondak D, Protopapas P, Mattheakis M, Liu S, Agarwal D, Giovanni MD (2020) NeuroDiffEq: a Python package for solving differential equations with neural networks. J Open Source Softw 5(46):1931. https://doi.org/10.21105/joss.01931

Dwivedi V, Parashar N, Srinivasan B (2019) Distributed learning machines for solving forward and inverse problems in partial differential equations. Neurocomputing 420:299–316. https://doi.org/10.1016/j.neucom.2020.09.006

Dwivedi V, Srinivasan B (2019) Physics informed extreme learning machine (PIELM)-A rapid method for the numerical solution of partial differential equations. Neurocomputing 391:96–118. https://doi.org/10.1016/j.neucom.2019.12.099

Basdevant C, Deville M, Haldenwang P et al (1986) Spectral and finite difference solutions of the Burgers equation. Comput Fluids 14(1):23–41. https://doi.org/10.1016/0045-7930(86)90036-8

Marchi CH, Suero R, Araki LK (2009) The lid-driven square cavity flow: numerical solution with a 1024 x 1024 grid. J Braz Soc Mech Sci Eng 31(3):186–198

Ghia U, Ghia KN, Shin CT (1982) High-Re solutions for incompressible flow using the Navier-Stokes equations and a multigrid method. J Comput Phys 48(3):387–411. https://doi.org/10.1016/0021-9991(82)90058-4

Zhang X, Zhang P (2014) Heterogeneous heat conduction problems by an improved element-free Galerkin method. Numer Heat Transf Part B Fundam 65(4):359–375. https://doi.org/10.1080/10407790.2013.857221

Chang KC, Payne UJ (1991) Analytical solution for heat conduction in a two-material-layer slab with linearly temperature dependent conductivity. J Heat Transf 113(1):237–239. https://doi.org/10.1115/1.2910531

Chen X, Han P (2000) A note on the solution of conjugate heat transfer problems using SIMPLE-like algorithms. Int J Heat Fluid Flow 21(4):463–467. https://doi.org/10.1016/S0142-727X(00)00028-X

Kingma DP, Ba JL (2017) ADAM: a method for stochastic optimization. arXiv:1412.6980v9

Kraft D (1988) A software package for sequential quadratic programming. Technical Report DFVLR-FB 88-28

Abadi M, Agarwal A, Barham P, Brevdo E, Chen Z, Citro C, Corrado GS, Davis A, Dean J, Devin M, et al. (2016) Tensorflow: large-scale machine learning on heterogeneous distributed systems. arXiv:1603.04467

Google collaboratory: frequently asked questions. https://research.google.com/colaboratory/faq.html

Jagtap AD, Kharazmi E, Karniadakis GE (2020) Conservative physics-informed neural networks on discrete domains for conservation laws: applications to forward and inverse problems. Comput Methods Appl Mech Eng 365:113028. https://doi.org/10.1016/j.cma.2020.113028

Jagtap AD, Karniadakis GE (2020) Extended physics-informed neural networks (XPINNs): a generalized space-time domain decomposition based deep learning framework for nonlinear partial differential equations. Commun Comput Phys 28(5):2002–2041. https://doi.org/10.4208/cicp.OA-2020-0164

Li K, Tang K, Wu T, Liao Q (2020) D3M: a deep domain decomposition method for partial differential equations. IEEE Access 8:5283–5294. https://doi.org/10.1109/ACCESS.2019.2957200

Kharazmi E, Zhang Z, Karniadakis GE (2021) hp-VPINNs: variational physics-informed neural networks with domain decomposition. Comput Methods Appl Mech Eng 374:113547. https://doi.org/10.1016/j.cma.2020.113547

Dong S, Li Z (2020) Local extreme learning machines and domain decomposition for solving linear and nonlinear partial differential equations. arXiv:2012.02895

Henkes A, Wessels H, Mahnken R (2022) Physics informed neural networks for continuum micromechanics. Comput Methods Appl Mech Eng 393:114790. https://doi.org/10.1016/j.cma.2022.114790

Yan CA, Vescovini R, Dozio L (2022) A framework based on physics-informed neural networks and extreme learning for the analysis of composite structures. Comput Struct 265:106761. https://doi.org/10.1016/j.compstruc.2022.106761

Jin X, Cai S, Li H, Karniadakis GE (2021) NSFnets (Navier–Stokes flow nets): physics-informed neural networks for the incompressible Navier–Stokes equations. J Comput Phys 426:109951. https://doi.org/10.1016/j.jcp.2020.109951

Wang S, Teng Y, Perdikaris P (2020) Understanding and mitigating gradient pathologies in physics-informed neural networks. arXiv:2001.04536

Jagtap AD, Kawaguchi K, Karniadakis GE (2020) Locally adaptive activation functions with slope recovery for deep and physics-informed neural networks. Proc R Soc A. 476:20200334. https://doi.org/10.1098/rspa.2020.0334

Glorot X, Bengio Y (2010) Understanding the difficulty of training deep feedforward neural networks. Aistats 9:249–256

Goldberg DE (1989) Genetic algorithms in search, optimization, and machine learning. Addison-Wesley, Reading

Acknowledgements

This work was supported by Robert Bosch Centre for Data Science and Artificial Intelligence, Indian Institute of Technology Madras, Chennai (Project no. SB21221641MERBCX008832).

Ethics declarations

Conflict of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Appendices

Appendix 1: Conjugate heat transfer in a 2D counter-flow heat exchanger

Conjugate heat transfer problems arise in many practical applications in which heat conduction in a solid region is tightly coupled with convective heat transfer in an adjacent fluid region. Common examples include heat transfer enhancement with a finned surface, cooling of microelectronic chips, heat transfer in a cavity with thermally conducting walls or an internal baffle, and heat transfer in the workpiece during arc welding.

We solve a conjugate heat transfer problem in a two-dimensional counter-flow heat exchanger using our approach. This problem is discussed and solved using a SIMPLE-like algorithm in Chen and Han [46]. The problem’s geometry is shown in Fig. 26a. The following assumptions are made for the present problem: steady-state conditions, and no surface heat source, phase change, or fluid dissipation (or suction) at the solid-fluid interface. Solid and fluid properties are assumed to be constant, although the solid material properties may differ from those of the liquid. Both fluid flows are assumed to be 2D laminar flows through a straight channel in the x direction. The governing equations are Eqs. (14–16, 18). They remain valid in the solid region with u = v = 0, since convection is absent there. There is no heat generation anywhere, so Q = 0. In Fig. 26a, the bottom fluid is cold and the top fluid is hot. The upper and lower parallel flow passages, with widths \(\Delta _1\) and \(\Delta _3\), are separated by a metallic plate of thickness \(\Delta _2\).

The outer walls of the heat exchanger are assumed to be adiabatic, and the hot and cold fluids are assumed to have the same properties. Uniform velocity and temperature conditions at the inlet and a zero pressure condition at the outlet are applied for both fluids. Because the two streams flow in opposite directions, the signs of the velocities must be set accordingly when applying the boundary conditions. The values of the variables for the current problem are as follows: \(\Delta _1\) = \(\Delta _2\) = \(\Delta _3\) = 0.1 m and l = 1 m; fluid parameters are \(U_1\) = 0.2 m/s, \(T_1\) = 800 K, \(U_3\) = 0.1 m/s, \(T_3\) = 300 K, \(\rho _{\text {f}}\) = 1000 kg/m\(^3\), \(k_{\text {f}}\) = 10 W/(m K), \(C_\mathrm{pf}\) = 25 J/(kg K) and \(\mu _{\text {f}}\) = 0.15 kg/(m s); solid properties are \(\rho _s\) = 8000 kg/m\(^3\), \(k_{\text {s}}\) = 50 W/(m K) and \(C_\mathrm{ps}\) = 500 J/(kg K).

All the physical dimensions, velocities, and temperatures are scaled to the range 0 to 1 using Min–Max scaling, and the correspondingly scaled continuity, x momentum, y momentum, and energy equations are used. The cold fluid, solid region, and hot fluid are taken as subdomains 1, 2, and 3, respectively. The TPINN neural network, with the first hidden layer changed per subdomain, contains 3 hidden layers with 15 neurons in each. The input layer takes the x, y coordinates, and the output layer gives the x component of velocity u, the pressure p, and the temperature T. The y component of velocity v is zero everywhere for this problem. We used 1444 random interior data points and 40 random data points along each boundary and interface per subdomain for training the neural networks. The penalty factors are \(\lambda _{\text {pde}}\) = 1, \(\lambda _{\text {bc}}\) = 2, \(\lambda _{\text {if}}\) = 1. Optimization is carried out initially using the Adam optimizer with a learning rate of 0.001 for 30000 iterations, followed by the SLSQP optimizer.

Figure 26b compares the temperature profile at x = l/2. The predicted temperature profile is in good agreement with that reported in [46]. Figure 26c shows the contour plot of the predicted temperature across the whole domain, which matches the one reported in [46]. We can observe that the predicted temperature goes out of bounds at the inlets of both the hot and the cold fluid. This is because the uniform inlet temperature boundary condition enforces a zero temperature slope, whereas conservation of heat flux at the interface enforces a non-zero temperature slope. The boundary condition and the interface flux condition therefore conflict, so the neural network cannot strictly satisfy the uniform temperature inlet condition, and the temperature goes out of bounds at the inlets.
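The network layout described above for this problem (2 inputs, 3 hidden layers of 15 neurons, 3 outputs, first hidden layer unique to each of the 3 subdomains) can be illustrated with a minimal sketch. This is not the authors' implementation: the tanh activation, the Xavier uniform initialization with zero biases, the dictionary layout, and the function names are all assumptions made for illustration.

```python
import math
import random

def init_layer(n_in, n_out, rng):
    """One dense layer: Xavier uniform weights and zero biases (assumed init)."""
    limit = math.sqrt(6.0 / (n_in + n_out))
    W = [[rng.uniform(-limit, limit) for _ in range(n_in)] for _ in range(n_out)]
    b = [0.0] * n_out
    return W, b

def dense(layer, x, activate=True):
    """Affine map followed by tanh (the activation is an assumption)."""
    W, b = layer
    z = [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]
    return [math.tanh(v) for v in z] if activate else z

def build_tpinn(n_in=2, width=15, n_hidden=3, n_out=3, n_sub=3, seed=0):
    rng = random.Random(seed)
    # First hidden layer: one independent parameter set per subdomain.
    first = [init_layer(n_in, width, rng) for _ in range(n_sub)]
    # Remaining hidden layers and the output layer: shared by all subdomains.
    shared = [init_layer(width, width, rng) for _ in range(n_hidden - 1)]
    shared.append(init_layer(width, n_out, rng))
    return {"first": first, "shared": shared}

def tpinn_forward(net, sub, xy):
    """Evaluate the PINN of subdomain `sub` (0-based) at the point xy = [x, y]."""
    h = dense(net["first"][sub], xy)
    for layer in net["shared"][:-1]:
        h = dense(layer, h)
    return dense(net["shared"][-1], h, activate=False)  # [u, p, T]

net = build_tpinn()
u, p, T = tpinn_forward(net, sub=0, xy=[0.5, 0.05])
```

Only `net["first"]` differs between subdomains, so the three PINNs share every parameter stored in `net["shared"]`.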

Appendix 2: Estimating the parameters of temperature-dependent thermal conductivity in 1D steady-state heterogeneous heat conduction

We consider the inverse problem of estimating the parameters of a temperature-dependent thermal conductivity in one-dimensional steady-state heat conduction through a two-layered composite slab with no heat generation. Figure 23 can be taken as the geometry for the present problem with a = 0.5. The non-dimensional temperatures are \(T_l\) = 0 and \(T_r\) = 1. The interface is at x = 0.5, and perfect thermal contact is assumed there. For subdomains 1 and 2, the thermal conductivity functions are assumed to be of the form \(k_1(T) = e^{a_1T}\) and \(k_2(T) = e^{a_2T}\), with true values \(a_1\) = 0.28 and \(a_2\) = 1.2. The task is to estimate \(a_1\) and \(a_2\) from noisy temperature and heat flux measurements taken in the interior of the subdomains. The input data are synthetic observations obtained by adding error to the true heat flux values and uncorrelated Gaussian noise to the exact temperature values.

Each slab is again taken as a subdomain. TPINN with first layer change is adopted, with an architecture of depth = 3 and width = 16. For subdomains 1 and 2, heat flux and temperature values are available at x = \(\{\)0.02, 0.25, 0.48\(\}\) and x = \(\{\)0.52, 0.75, 0.98\(\}\), respectively. Therefore \(N_{\text {domain}}^j\) = 3 in Eq. (11) and \(N_{\text {flux}}^j\) = 3 in Eq. (12) \(\forall\) j. In addition to the locations where temperature and heat flux observations are available, \(J_{\text {pde}}\) in Eq. (6) is evaluated at 20 more random x data points from each subdomain, so \(N_{jpde}^j\) = 23 in Eq. (6) \(\forall\) j. The parameters \(a_1\) and \(a_2\) to be estimated are set as trainable parameters of the corresponding neural networks and are initialized with a value of 3.0. The penalty factors used for \(J_{\text {pde}}\), \(J_{\text {domain}}\), and \(J_{\text {flux}}\) are 1, 1.5, and 1, respectively.

Optimization is carried out using the Adam optimizer with a learning rate of 0.001 for up to 7000 iterations, followed by the SLSQP optimizer. The weights are initialized using the Xavier initialization approach. The problem is solved independently six times, and the statistics of the estimated parameters at a confidence level of 95% are shown in Table 7. The relative error of the mean is calculated with respect to the corresponding true parameter value. The average MSEs reported are calculated using \(T_{\text {prediction}}\) and \(k_{\text {prediction}}\) on a test data set consisting of 20 uniformly spaced x values from each subdomain.

TPINN predicts the parameters with good accuracy when the noise level is 0%. When the input data are corrupted with error, the accuracy falls. The confidence interval widens as the noise level increases, which is expected. The relative error of the mean value is higher for the first subdomain than for the second at all noise levels. However, the MSE of \(k_{\text {prediction}}\) is comparatively high for subdomain 2, which may be because thermal conductivity is more sensitive to the corresponding parameter in the second subdomain than in the first.
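For reference, the forward problem stated above has a closed-form solution, which is one way to produce exact temperature and heat flux values for synthetic data. The sketch below assumes the slab spans x in [0, 1] with T(0) = 0 and T(1) = 1, defines the heat flux as q = -k(T) dT/dx, and uses the Kirchhoff transform \(G_i(T) = (e^{a_i T} - 1)/a_i\); the function names are hypothetical, and the paper does not state how its exact values were computed. Since q is constant, \(G_1(T) = -qx\) in the first layer and \(G_2(T) = G_2(1) + q(1-x)\) in the second, and matching both at the interface gives a scalar equation for the interface temperature.

```python
import math

def G(a, T):
    """Kirchhoff transform: integral of exp(a*s) ds from s = 0 to s = T."""
    return (math.exp(a * T) - 1.0) / a

def solve_slab(a1, a2, x_if=0.5):
    """Return (interface temperature, heat flux q) for T(0) = 0 and T(1) = 1."""
    def residual(Ti):
        # Flux continuity: G(a1,Ti)/x_if = (G(a2,1) - G(a2,Ti))/(1 - x_if)
        return G(a1, Ti) * (1.0 - x_if) - (G(a2, 1.0) - G(a2, Ti)) * x_if
    lo, hi = 0.0, 1.0            # residual is increasing in Ti, with a sign change on [0, 1]
    for _ in range(60):          # plain bisection
        mid = 0.5 * (lo + hi)
        if residual(mid) > 0.0:
            hi = mid
        else:
            lo = mid
    Ti = 0.5 * (lo + hi)
    q = -G(a1, Ti) / x_if        # negative: heat flows from the hot right face to the left
    return Ti, q

def temperature(x, a1, a2, x_if=0.5):
    """Exact temperature at position x in the two-layer slab."""
    _, q = solve_slab(a1, a2, x_if)
    if x <= x_if:
        return math.log(1.0 - a1 * q * x) / a1
    return math.log(1.0 + a2 * (G(a2, 1.0) + q * (1.0 - x))) / a2

# Exact values at the measurement locations quoted in the text
a1_true, a2_true = 0.28, 1.2
locations = [0.02, 0.25, 0.48, 0.52, 0.75, 0.98]
T_exact = [temperature(x, a1_true, a2_true) for x in locations]
```

Noisy observations would then be obtained by perturbing `T_exact` and `q`; the noise model itself is not reproduced here.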

Solution using conventional approach

The conventional approach for solving inverse parameter estimation problems combines a forward model, which solves the corresponding forward problem, with an inverse model, which updates the unknown parameters through an optimization algorithm. A stopping criterion terminates the iterative procedure. The conventional approach can be described as:

- Step 1: Obtain the heat flux and temperature values at the measurement locations by running the forward model using the available values or initial values of parameters.

- Step 2: Calculate the objective function value representing the error in the heat flux and temperature values estimated from the forward model w.r.t. the available measurements.

- Step 3: Update the values of parameters using the inverse model to minimize the objective function. Sensitivity coefficients calculation may be required depending on the inverse model.

- Step 4: Using the updated values of parameters, solve the forward problem and calculate the objective function value.

- Step 5: Check whether the stopping criteria are satisfied. If no, go to step 3. If yes, take the latest values of parameters as estimated values, and terminate the iterative procedure.
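The five steps can be sketched as a generic loop. Everything below is a stand-in chosen for brevity: the forward model is a toy straight-line model rather than TPINN, the inverse model is a simple coordinate pattern search rather than the genetic algorithm used in this appendix, and the names `objective`, `conventional_estimate`, and `toy_model` are hypothetical.

```python
from typing import Callable, List, Sequence, Tuple

def objective(predicted: Sequence[float], measured: Sequence[float]) -> float:
    """Step 2: sum of squared errors between model output and measurements."""
    return sum((p - m) ** 2 for p, m in zip(predicted, measured))

def conventional_estimate(
    forward_model: Callable[[Sequence[float]], List[float]],
    measured: Sequence[float],
    initial: Sequence[float],
    step: float = 0.5,
    tol: float = 1e-12,
    max_iter: int = 5000,
) -> Tuple[List[float], float]:
    params = list(initial)
    best = objective(forward_model(params), measured)   # Steps 1 and 2
    for _ in range(max_iter):
        if best < tol or step < 1e-8:                    # Step 5: stopping criterion
            break
        improved = False
        for i in range(len(params)):                     # Step 3: propose updates
            for delta in (step, -step):
                trial = list(params)
                trial[i] += delta
                value = objective(forward_model(trial), measured)  # Step 4
                if value < best:
                    params, best, improved = trial, value, True
        if not improved:
            step *= 0.5                                  # refine the search
    return params, best

# Toy forward model (assumption): y = p0 * x + p1 at three locations
xs = [0.0, 0.5, 1.0]

def toy_model(p: Sequence[float]) -> List[float]:
    return [p[0] * x + p[1] for x in xs]

measured = toy_model([0.28, 1.2])        # noise-free synthetic "measurements"
estimate, error = conventional_estimate(toy_model, measured, [1.0, 1.0])
```

Every candidate update costs one full forward solve, which is why the procedure becomes expensive when the forward model is itself a trained network.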

Conventional techniques involve solving the forward and inverse models iteratively until convergence, which is time-consuming. The present problem is solved using the conventional approach for comparison purposes. TPINN itself is taken as the forward model, and the genetic algorithm is taken as the optimization algorithm for the inverse model. The results are compared with those of TPINN in terms of computational time and accuracy.

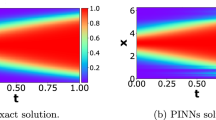

TPINN forward model: TPINN with first layer change is used. The architecture is depth = 3 and width = 10. All penalty factors have a value of 1. \(N_{pde}^j\) = 10 \(\forall j\) in Eq. (5). The weights of the neural networks are initialized using the Xavier initialization approach. Loss minimization is accomplished using the Adam optimizer (learning rate = 0.001) for the first 200 iterations, followed by the SLSQP optimizer. The validation of the TPINN forward model is shown in Fig. 27 for different combinations of \(a_1\) and \(a_2\) values. The temperature and thermal conductivity values predicted by TPINN closely match the exact solution. Hence TPINN can be used as a reliable forward model for the conventional approach.

Genetic Algorithm: In the genetic algorithm (GA), each parameter to be estimated is represented as a binary string of fixed length. The decimal equivalent of the binary string is obtained using the given upper and lower bounds of the parameter. The fitness function (objective function) value is calculated using the decimal form. The optimization begins with a finite population of random strings generated for each parameter to be estimated. The population size is decided before the procedure starts and stays fixed throughout the optimization. The population is passed through three phases, reproduction, crossover, and mutation, to obtain a new population. The fitness values of the new population are then calculated, and the convergence criteria are checked. A generation of GA consists of one cycle of reproduction, crossover, and mutation, followed by evaluation of the fitness function. The procedure is terminated when the convergence criteria are satisfied; otherwise, the new population is passed to the next generation. The three phases of GA for a maximization problem can be described as:

Reproduction: The reproduction phase chooses the best candidates (strings) from the population to create a mating pool according to a probabilistic procedure. The candidates are chosen with a probability proportional to their fitness function value. Zero to multiple copies of each candidate in the population are copied to the mating pool. For the present problem, the Roulette wheel selection approach [62] is employed. With this approach, more copies of candidates with high fitness values are, probabilistically, placed into the mating pool. No new candidates are generated in the reproduction stage. This phase ensures that highly fit candidates survive and reproduce, while less fit ones are rejected from the process. At the end of this phase, a mating pool whose size equals the population size is obtained.

Crossover: The mating pool created at the end of the reproduction phase is passed to the crossover phase. A crossover operation starts by randomly selecting two candidates (parents). A site for a single crossover is then chosen along the string length, and a number between 0 and 1 is generated randomly. If this number is less than the crossover probability (\(P_{\text {c}}\)), a predefined parameter, the portions of the two parent strings to the right of the crossover site are swapped with each other, forming two new child candidates. Otherwise, the parents are left unchanged. The child candidates may or may not be fitter than the parents, depending on the crossover site. New candidates are generated in this phase.

Fig. 27 Validation of the TPINN forward model for Appendix 2. The \((a_1,a_2)\) values for the different cases are \(C_1\): (0.02, 2), \(C_2\): (0.8, 1.5), \(C_3\): (1.5, 0.8), \(C_4\): (2, 0.02)

Mutation: After the crossover stage, the population is passed to the final phase, called mutation. A bit-wise mutation is used here. In bit-wise mutation, a candidate is selected, and each bit (0 or 1) of its string is considered in sequence, one at a time. For each bit, a number between 0 and 1 is generated randomly. If the random number is less than the mutation probability (\(P_{\text {m}}\)), a predefined value, the bit is flipped from 0 to 1 or from 1 to 0. Otherwise, the bit is left unchanged. The mutation operation alters the candidate locally and helps maintain the diversity of the population.
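The three phases can be sketched with the Python standard library as follows. This is an illustrative sketch rather than the implementation used for the reported results: pairing parents by shuffling the mating pool, carrying over an unpaired candidate unchanged, and the function names are assumptions, and the fitness values must be positive for the roulette wheel to be well defined.

```python
import random

def roulette_selection(population, fitness, rng):
    """Reproduction: draw a mating pool of the same size, with selection
    probability proportional to fitness (copies of a candidate may repeat)."""
    return rng.choices(population, weights=fitness, k=len(population))

def single_point_crossover(parent1, parent2, pc, rng):
    """Crossover: pick a site, then swap the right-hand parts with probability pc."""
    site = rng.randint(1, len(parent1) - 1)
    if rng.random() < pc:
        return parent1[:site] + parent2[site:], parent2[:site] + parent1[site:]
    return parent1, parent2

def bitwise_mutation(candidate, pm, rng):
    """Mutation: flip each bit independently with probability pm."""
    flip = {"0": "1", "1": "0"}
    return "".join(flip[b] if rng.random() < pm else b for b in candidate)

def next_generation(population, fitness, pc, pm, rng):
    """One cycle of reproduction, crossover, and mutation."""
    pool = roulette_selection(population, fitness, rng)
    rng.shuffle(pool)                      # random pairing of parents (assumption)
    children = []
    for i in range(0, len(pool) - 1, 2):
        children.extend(single_point_crossover(pool[i], pool[i + 1], pc, rng))
    if len(pool) % 2:                      # odd pool size: last candidate carried over
        children.append(pool[-1])
    return [bitwise_mutation(c, pm, rng) for c in children]

rng = random.Random(0)
population = ["".join(rng.choice("01") for _ in range(7)) for _ in range(6)]
fitness = [1.0 + c.count("1") for c in population]   # placeholder fitness values
population = next_generation(population, fitness, pc=0.6, pm=0.01, rng=rng)
```

The population size is preserved from one generation to the next, as the description requires.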

For the present problem, the objective function to maximize by GA is given by

where \(T_{lm,{\text {est}}}\) and \(T_{lm,{\text {meas}}}\) are, respectively, the temperature estimated from the forward model and the temperature measured at the mth measurement location in the lth subdomain. Similarly, \(q_{lm,{\text {est}}}\) and \(q_{lm,{\text {meas}}}\) are the heat flux estimated from the forward model and the heat flux measured at the mth measurement location in the lth subdomain. For the present problem, \({N_{\text {meas}}^1} = {N_{\text {meas}}^2} = {N_{\text {meas}}^3} = {N_{\text {meas}}^4} =\) 3. The input variables for GA are as follows: string length = 7, \(P_{\text {m}}\) = 0.01, \(P_{\text {c}}\) = 0.6, population size = 60. For both parameters, 0.02 and 2 are given as the lower and upper bounds of the search. We ran GA for three generations. After each generation, the parameter values corresponding to the highest objective function value in the population are stored along with that objective function value. After three generations, the stored parameter values with the highest objective function value are taken as the estimates of \(a_1\) and \(a_2\).
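To make the string length and bounds concrete, the sketch below decodes a 7-bit string into a parameter value between 0.02 and 2. The linear mapping used here is the common textbook choice and is an assumption; the exact decoding used for the reported results is not given in the text, and the function names are hypothetical.

```python
def decode(bits: str, lower: float, upper: float) -> float:
    """Map a binary string linearly onto the interval [lower, upper]."""
    return lower + (upper - lower) * int(bits, 2) / (2 ** len(bits) - 1)

def resolution(n_bits: int, lower: float, upper: float) -> float:
    """Spacing between neighbouring representable parameter values."""
    return (upper - lower) / (2 ** n_bits - 1)

a_min = decode("0000000", 0.02, 2.0)   # all zeros maps to the lower bound
a_max = decode("1111111", 0.02, 2.0)   # all ones maps to the upper bound
spacing = resolution(7, 0.02, 2.0)     # 1.98 / 127
```

Under this mapping, a 7-bit string can represent only 128 values per parameter, spaced roughly 0.016 apart, so the search grid itself limits how closely the GA can approach the true values.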

The results obtained on input data with 0% noise from four independent runs of the conventional approach are shown in Table 8. In each run, GA runs for three generations. Comparing the results in Table 8 with those obtained for TPINN in Table 7, we can observe that TPINN performs much better in terms of both accuracy and computational time. On clean data, TPINN estimates the parameters with higher accuracy and lower variance than the conventional method, whose estimates vary considerably across runs. The maximum relative errors in the estimated values of \(a_1\) and \(a_2\) are 63.21% and 12.6%, respectively. The computational time taken by the conventional approach is nearly 15 times that of TPINN. These initial results suggest that TPINN could be a promising method for parameter estimation problems, offering significant savings in computational time and cost.

Appendix 3: Number of learnable parameters of TPINN and DPINN for a given depth and width of the network

Let D = Depth of the neural network (No. of hidden layers plus output layer)

W = Width of the neural network (No. of neurons in each hidden layer. Same for all hidden layers)

\({N_1}\) = Number of neurons in the input layer

\({N_n}\) = Number of neurons in the output layer

\({N_{\text {SD}}}\) = Number of subdomains

\({P_{\text {D}}}\) = Total number of learnable parameters of DPINN

\({P_{\text {T}}}\) = Total number of learnable parameters of TPINN.

As an example, TPINN with first hidden layer change is considered. Then,

\({P_{\text {D}}}\) = \({(N_1W+W+(W^2+W)(D-2)+WN_n+N_n)N_{\text {SD}}}\)

\({P_{\text {T}}}\) = \({(N_1W+W)N_{\text {SD}}+(W^2+W)(D-2)+WN_n+N_n}\)

\({P_{\text {D}}} - {P_{\text {T}}}\) = \({((W^2+W)(D-2)+WN_n+N_n)(N_{\text {SD}}-1)}\)

When \({N_{\text {SD}}} > 1\), \({P_{\text {D}} > P_{\text {T}}}\). Hence, for the same depth and width, TPINN has fewer learnable parameters than DPINN.
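As a worked instance of these formulas, the sketch below evaluates them for the network size quoted in Appendix 1: 2 inputs, 3 hidden layers of 15 neurons (so D = 4 under the definition above), 3 outputs, and 3 subdomains. The function names are hypothetical.

```python
def dpinn_params(D: int, W: int, N1: int, Nn: int, Nsd: int) -> int:
    """P_D: every subdomain owns a full, independent network."""
    return (N1 * W + W + (W * W + W) * (D - 2) + W * Nn + Nn) * Nsd

def tpinn_params(D: int, W: int, N1: int, Nn: int, Nsd: int) -> int:
    """P_T: only the first hidden layer is replicated per subdomain."""
    return (N1 * W + W) * Nsd + (W * W + W) * (D - 2) + W * Nn + Nn

D, W, N1, Nn, Nsd = 4, 15, 2, 3, 3
P_D = dpinn_params(D, W, N1, Nn, Nsd)   # 1719
P_T = tpinn_params(D, W, N1, Nn, Nsd)   # 663
saving = P_D - P_T                      # 1056
```

For this configuration, parameter sharing removes 1056 of the 1719 parameters that three independent networks would require.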

About this article

Cite this article

Manikkan, S., Srinivasan, B. Transfer physics informed neural network: a new framework for distributed physics informed neural networks via parameter sharing. Engineering with Computers 39, 2961–2988 (2023). https://doi.org/10.1007/s00366-022-01703-9

DOI: https://doi.org/10.1007/s00366-022-01703-9