Abstract

Facial expressions are highly correlated with facial motion. Depending on whether the temporal information of facial motion is used, facial expression features can be classified as static or dynamic. Static features, which mainly include geometric and appearance features, can be extracted by convolution or other learned filters; dynamic features, which aim to model the dynamic properties of facial motion, can be computed through optical flow or other methods. 3D convolutional neural networks (CNNs) make it straightforward to extract both types of features. In this paper, a 3D CNN architecture is presented that learns static and dynamic features from facial image sequences and extracts high-level dynamic features from optical flow sequences. Two types of dense optical flow, which carry tracking information about facial muscle movement, are computed using different methods of constructing image pairs. One is the common optical flow; the other is an enhanced variant called accumulative optical flow. Four components of each type of optical flow are used in the experiments. Experiments are conducted on three databases: two acted databases and one nearly realistic database. The experiments on the two acted databases achieve state-of-the-art accuracy and indicate that the vertical component of optical flow has an advantage over the other components in recognizing facial expressions. The results on all three databases show that more discriminative features can be learned from image sequences than from optical flow or accumulative optical flow sequences, and that accumulative optical flow contains more motion information than optical flow, provided the frame distance of the image pairs used to compute them is not too large.
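The two flow types differ only in how the image pairs are chosen, so a small sketch may help make that distinction concrete. It rests on assumptions that the abstract does not confirm: common optical flow is taken to pair each frame with the frame `distance` steps later, accumulative optical flow is taken to pair the first frame with every later frame (so its frame distance grows along the sequence), and the four components are taken to be horizontal, vertical, magnitude and orientation. Because the abstract does not name the dense flow algorithm, the sketch substitutes a basic Horn-Schunck solver to stay dependency-free (OpenCV's `cv2.calcOpticalFlowFarneback` is a common alternative). All helper names (`make_pairs`, `horn_schunck`, `flow_sequence`, `flow_components`) are hypothetical.

```python
import numpy as np


def make_pairs(num_frames, mode="common", distance=1):
    """Return (i, j) frame-index pairs for optical-flow computation.

    ASSUMPTION (hypothetical, not taken from the paper):
    - "common": frame i is paired with frame i + distance;
    - "accumulative": the first frame is paired with every later frame,
      so the frame distance grows along the sequence (distance is ignored).
    """
    if mode == "common":
        return [(i, i + distance) for i in range(num_frames - distance)]
    if mode == "accumulative":
        return [(0, j) for j in range(1, num_frames)]
    raise ValueError(f"unknown mode: {mode!r}")


def _neighbor_mean(f):
    """Mean of the 4-connected neighbours, replicating edge pixels."""
    p = np.pad(f, 1, mode="edge")
    return (p[:-2, 1:-1] + p[2:, 1:-1] + p[1:-1, :-2] + p[1:-1, 2:]) / 4.0


def horn_schunck(prev, nxt, alpha=0.1, n_iter=100):
    """Dense flow field of shape (H, W, 2) from prev to nxt.

    Stand-in solver (Horn-Schunck); the paper's own flow method may differ.
    """
    prev = np.asarray(prev, dtype=np.float64)
    nxt = np.asarray(nxt, dtype=np.float64)
    avg = 0.5 * (prev + nxt)
    iy, ix = np.gradient(avg)      # spatial derivatives along rows (y) and columns (x)
    it = nxt - prev                # temporal derivative
    denom = alpha ** 2 + ix ** 2 + iy ** 2
    u = np.zeros_like(avg)
    v = np.zeros_like(avg)
    for _ in range(n_iter):
        u_bar, v_bar = _neighbor_mean(u), _neighbor_mean(v)
        # Brightness-constancy residual divided by alpha^2 + |grad I|^2
        step = (ix * u_bar + iy * v_bar + it) / denom
        u = u_bar - ix * step
        v = v_bar - iy * step
    return np.stack([u, v], axis=-1)


def flow_sequence(frames, mode="common", distance=1, alpha=0.1, n_iter=100):
    """Stack one flow field per image pair: shape (num_pairs, H, W, 2)."""
    pairs = make_pairs(len(frames), mode, distance)
    return np.stack([horn_schunck(frames[i], frames[j], alpha, n_iter) for i, j in pairs])


def flow_components(flow):
    """ASSUMED four components: horizontal, vertical, magnitude, orientation."""
    u, v = flow[..., 0], flow[..., 1]
    return np.stack([u, v, np.hypot(u, v), np.arctan2(v, u)], axis=-1)
```

Under these assumptions, the (pairs, height, width, 4) array returned by `flow_components` has the channels-last layout that Keras `Conv3D` layers expect once a batch axis is added, and a single component (for example the vertical flow, via `[..., 1:2]`) can be sliced out and fed to a 3D CNN on its own.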


Acknowledgements

Part of this work was done while the first author was a visiting scholar at the Advanced Analytics Institute (AAI), University of Technology, Sydney. Jianfeng Zhao and Xia Mao's work in this paper was supported in part by the Specialized Research Fund for the Doctoral Program of Higher Education under Grant No. 20121102130001.

About this article

Cite this article

Zhao, J., Mao, X. & Zhang, J. Learning deep facial expression features from image and optical flow sequences using 3D CNN. Vis Comput 34, 1461–1475 (2018). https://doi.org/10.1007/s00371-018-1477-y

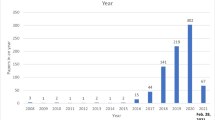

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00371-018-1477-y