Abstract

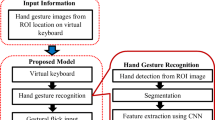

Non-touch character input is a modern means of communication between humans and computers that lets a user interact with a computer, a machine, or a robot in circumstances where touching a device is not possible, as well as in industrial settings. Touch and non-touch character input systems (i.e., hand gesture languages) have been studied extensively, including aerial handwriting, sign languages, and the finger alphabet. However, many previously developed systems demand substantial learning effort from the user and incur considerable processing overhead for character recognition. To address these issues, this paper proposes a gesture flick input system that offers a quick, easy, hygienic, and safe method of non-touch character input. In the proposed model, the position and state of the hands (i.e., open or closed) are recognized to enable flick input and to let the user relocate and resize the on-screen virtual keyboard. The system also recognizes hand gestures for motion functions, namely delete, add a space, insert a new line, and select language, which avoids the need to recognize a large number of separate gestures for individual characters. To reduce the image-processing overhead and eliminate the effects of surrounding noise and lighting, body index skeleton information from the Kinect sensor is used. The proposed system is evaluated on the following factors: (a) character selection, recognition, and input speed (for Japanese hiragana, English, and numerals); and (b) accuracy of the gestures for the motion functions. The system is then compared with state-of-the-art algorithms. A questionnaire survey was also conducted to measure user acceptance and usability of the system. The experimental results show average recognition rates of 98.61% for characters and 97.5% for motion functions, indicating that the proposed model outperforms the state-of-the-art algorithms it was compared with.
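The flick mechanism described in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes that a key is grabbed when the hand closes and a character is committed when the hand opens, and that the flick direction is taken from the hand displacement between those two events. The key layout (`KEY_A`, borrowed from the common smartphone flick keyboard), the function names, the `dead_zone` threshold, and the convention that y increases upward are all hypothetical choices made for illustration.

```python
import math

# Hypothetical five-character hiragana key: centre plus four flick directions.
# The layout follows the common smartphone flick keyboard (an assumption,
# not taken from the paper).
KEY_A = {"tap": "あ", "left": "い", "up": "う", "right": "え", "down": "お"}


def flick_direction(start, end, dead_zone=0.05):
    """Classify the hand displacement between grab and release.

    start, end: (x, y) hand positions when the hand closes and opens.
    Assumes y increases upward and that units are metres.
    dead_zone: displacement below which the gesture counts as a plain tap
    (illustrative value, not from the paper).
    """
    dx = end[0] - start[0]
    dy = end[1] - start[1]
    if math.hypot(dx, dy) < dead_zone:
        return "tap"
    # The dominant axis decides between horizontal and vertical flicks.
    if abs(dx) >= abs(dy):
        return "right" if dx > 0 else "left"
    return "up" if dy > 0 else "down"


def select_character(key, start, end, dead_zone=0.05):
    """Return the character on `key` chosen by the flick from start to end."""
    return key[flick_direction(start, end, dead_zone)]
```

For example, `select_character(KEY_A, (0.0, 0.0), (0.0, 0.2))` selects the upward-flick character of the key. Using a dead zone keeps small hand tremors from being read as flicks, at the cost of requiring a deliberate movement for each direction.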

Ethics declarations

Conflict of interest

All authors declare that they have no conflict of interest.

About this article

Cite this article

Rahim, M.A., Shin, J. & Islam, M.R. Gestural flick input-based non-touch interface for character input. Vis Comput 36, 1559–1572 (2020). https://doi.org/10.1007/s00371-019-01758-8

DOI: https://doi.org/10.1007/s00371-019-01758-8