Abstract

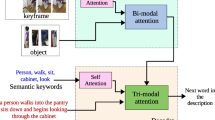

Video captioning is the task of generating a natural-language description that matches the content of a video, which places stringent requirements on both the extraction of fine-grained video features and the language processing of the label text. This paper proposes a new method that applies global control over the text and local strengthening during training. With this method, the model can refer to the context while it generates text during training. In addition, more attention is given to important words in the text, such as nouns and predicate verbs, which greatly improves the recognition of objects and yields more accurate prediction of actions in the video. For video feature extraction, we adopt 2D and 3D multimodal features, and we obtain better results through the fine-grained feature capture of global attention and the fusion of bidirectional time flow. The method achieves good results on both the MSR-VTT and MSVD datasets.
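One simple way to give more attention to important words during training is to weight the per-word loss by part of speech. The sketch below is only an illustration under assumptions and is not the formulation used in this paper: the function name `weighted_caption_loss`, the `boost` value, and the tag names `NOUN` and `VERB` are all hypothetical, and the part-of-speech tags are assumed to come from an external tagger.

```python
import math


def weighted_caption_loss(token_probs, pos_tags, boost=2.0,
                          important=("NOUN", "VERB")):
    """Weighted negative log-likelihood of one ground-truth caption.

    token_probs: probability the model assigned to each ground-truth word.
    pos_tags:    part-of-speech tag of each ground-truth word.
    boost:       hypothetical weight for important words; others get 1.0.
    """
    if not token_probs:
        raise ValueError("caption must contain at least one word")
    if len(token_probs) != len(pos_tags):
        raise ValueError("token_probs and pos_tags must have equal length")

    # Nouns and verbs contribute more to the loss than other words.
    weights = [boost if tag in important else 1.0 for tag in pos_tags]

    # Weighted sum of per-word negative log-likelihoods.
    total = sum(-w * math.log(p) for w, p in zip(weights, token_probs))

    # Normalize by the total weight so the scale stays comparable
    # across captions with different numbers of important words.
    return total / sum(weights)


if __name__ == "__main__":
    # Caption "a man rides a horse" with made-up probabilities.
    probs = [0.9, 0.4, 0.3, 0.9, 0.5]
    tags = ["DET", "NOUN", "VERB", "DET", "NOUN"]
    print(weighted_caption_loss(probs, tags))
```

With this weighting, a poorly predicted noun or verb raises the loss more than an equally poorly predicted function word, so the gradient favors object and action words. Setting `boost=1.0` recovers the ordinary mean negative log-likelihood.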

Funding

This paper is supported by the Natural Science Foundation of Hebei Province (F2021202038).

About this article

Cite this article

Peng, Y., Wang, C., Pei, Y. et al. Video captioning with global and local text attention. Vis Comput 38, 4267–4278 (2022). https://doi.org/10.1007/s00371-021-02294-0

DOI: https://doi.org/10.1007/s00371-021-02294-0