Abstract

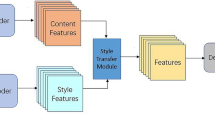

Neural style transfer, a new auxiliary tool for digital art design, can lower the technical barrier to design and improve creative efficiency. Existing methods achieve good results in terms of speed and the number of styles supported, but most of them alter or erase the semantic information of the original content image to varying degrees during stylization, so that most of the original content features and emotion are lost. Although some methods can preserve a specific type of the original semantic information, they need to introduce a corresponding semantic description network, which makes the stylization framework relatively complex. In this paper, we propose a multi-semantic preserving fast style transfer approach based on Y channel information. We construct a multi-semantic loss consisting of a feature loss and a structure loss, both derived from a pre-trained VGG network that takes the Y channel image and the content image as input, and use it to constrain the training of the stylization model so that multiple kinds of semantic information are preserved. Experiments indicate that our stylization model is relatively light and simple, and that the generated artworks effectively maintain the original multi-semantic information, including salience, depth and edge semantics, emphasize the original content features and emotional expression, and show better visual effects.
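As a rough illustration of the two ingredients named in the abstract, the following minimal sketch extracts a Y (luma) plane from an RGB image and combines two loss terms into one weighted objective. It is not the authors' implementation. The ITU-R BT.601 luma weights are an assumption, since the abstract does not say which RGB-to-Y conversion is used. The names `y_channel`, `mean_squared_error` and `multi_semantic_loss`, and the weights `alpha` and `beta`, are hypothetical. The VGG feature extraction is omitted entirely, so the feature and structure terms are passed in as plain numbers.

```python
from typing import List, Sequence, Tuple

# ITU-R BT.601 luma weights. Assumed here: the abstract does not state
# which RGB-to-Y conversion the authors use.
BT601_WEIGHTS = (0.299, 0.587, 0.114)

Pixel = Tuple[float, float, float]
Plane = Sequence[Sequence[float]]


def y_channel(image: Sequence[Sequence[Pixel]]) -> List[List[float]]:
    """Return the luma (Y) plane of an RGB image given as rows of (R, G, B)."""
    wr, wg, wb = BT601_WEIGHTS
    return [[wr * r + wg * g + wb * b for (r, g, b) in row] for row in image]


def mean_squared_error(a: Plane, b: Plane) -> float:
    """Mean squared difference between two equally sized 2-D planes."""
    diffs = [
        (x - y) ** 2
        for row_a, row_b in zip(a, b)
        for x, y in zip(row_a, row_b)
    ]
    return sum(diffs) / len(diffs)


def multi_semantic_loss(
    feature_loss: float,
    structure_loss: float,
    alpha: float = 1.0,  # hypothetical weight for the feature term
    beta: float = 1.0,   # hypothetical weight for the structure term
) -> float:
    """Weighted sum of a feature term and a structure term."""
    return alpha * feature_loss + beta * structure_loss
```

In a real training loop the two terms would be computed from network activations rather than supplied by hand; here `mean_squared_error` only stands in for a generic distance between two planes.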

Acknowledgements

This work was supported in part by the Key-Area Research and Development Program of Guangdong Province under Grant Nos. 2018B030338001, 2018B010115002 and 2018B010107003, and in part by the Innovative Talents Program of Guangdong Education Department and the Young Hundred Talents Project of Guangdong University of Technology under Grant No. 220413548.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

About this article

Cite this article

Ye, W., Zhu, X. & Liu, Y. Multi-semantic preserving neural style transfer based on Y channel information of image. Vis Comput 39, 609–623 (2023). https://doi.org/10.1007/s00371-021-02361-6

DOI: https://doi.org/10.1007/s00371-021-02361-6