Abstract

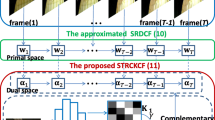

Discriminative correlation filters (DCF) have demonstrated competitive tracking performance in recent years. However, DCF methods learn the appearance model only from historical tracking results and therefore risk drifting from the target when it takes on unforeseen appearances in the future. In this paper, we present a novel tracking framework that rectifies the DCF model in the current frame using potential future target appearances. To achieve this, the tracking model is updated with a time-delay strategy, and model learning in each frame consists of two modules: an exploration module and an exploitation module. The exploration module discovers potential target appearances in the near future, while the exploitation module combines these future appearances with the historical tracking results to learn a more robust DCF model. To validate the proposed method, we integrate it into two state-of-the-art DCF trackers, namely spatially regularized discriminative correlation filters with adaptive decontamination (SRDCFdecon) and efficient convolution operators (ECO), and conduct extensive experiments on three tracking benchmarks: OTB-2015, Temple-Color and LaSOT. The results show that, when incorporated into the proposed framework, the modified DCF methods can leverage future target appearances to learn more robust models and outperform their baselines. In addition, they achieve competitive performance against state-of-the-art methods on several datasets.
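To make the exploration/exploitation loop described above more concrete, here is a minimal Python sketch under strong simplifying assumptions; it is not the authors' implementation. Each "frame" is a 1-D circular signal, the target is a circular shift of a fixed pattern, and the filter is a plain single-channel MOSSE-style correlation filter (Bolme et al.) learned in closed form, not SRDCFdecon or ECO. All function names and the parameters `delay` (look-ahead length) and `future_weight` (weight given to explored future samples) are hypothetical. For frame t, a model learned from committed history tracks frames t..t+delay (exploration); the model is then relearned from the history plus the down-weighted future samples (exploitation) and used to commit the position of frame t, so each output lags the input by `delay` frames.

```python
import cmath
import math
from typing import List, Sequence

# Illustrative sketch only: 1-D signals, raw single-channel features and a
# MOSSE-style closed-form filter stand in for the SRDCFdecon/ECO models.
# All function and parameter names below are hypothetical.


def dft(x: Sequence[complex]) -> List[complex]:
    """Naive O(n^2) DFT so the sketch has no third-party dependencies."""
    n = len(x)
    return [sum(x[k] * cmath.exp(-2j * math.pi * f * k / n) for k in range(n))
            for f in range(n)]


def idft(X: Sequence[complex]) -> List[complex]:
    """Inverse of dft()."""
    n = len(X)
    return [sum(X[f] * cmath.exp(2j * math.pi * f * k / n) for f in range(n)) / n
            for k in range(n)]


def gaussian_label(n: int, sigma: float) -> List[float]:
    """Desired response g: a circular Gaussian peaked at zero displacement."""
    return [math.exp(-min(k, n - k) ** 2 / (2 * sigma ** 2)) for k in range(n)]


def crop(frame: Sequence[float], pos: int) -> List[float]:
    """Patch aligned to `pos`, with circular boundary handling."""
    n = len(frame)
    return [frame[(k + pos) % n] for k in range(n)]


def learn_filter(samples, weights, G, lam):
    """conj(H) = sum_i w_i G conj(X_i) / (sum_i w_i |X_i|^2 + lam), per frequency."""
    num = [0j] * len(G)
    den = [lam] * len(G)
    for X, w in zip(samples, weights):
        for f in range(len(G)):
            num[f] += w * G[f] * X[f].conjugate()
            den[f] += w * abs(X[f]) ** 2
    return [a / b for a, b in zip(num, den)]


def detect(h_conj, patch):
    """Signed circular shift at which the correlation response peaks."""
    resp = [r.real for r in idft([h * z for h, z in zip(h_conj, dft(patch))])]
    n = len(resp)
    s = max(range(n), key=resp.__getitem__)
    return s - n if s > n // 2 else s


def delayed_track(frames, p0, delay=2, future_weight=0.5, lam=1e-2, sigma=1.0):
    """Commit the position of frame t only after exploring frames t..t+delay."""
    G = dft(gaussian_label(len(frames[0]), sigma))
    history = [dft(crop(frames[0], p0))]  # samples from committed results
    positions = [p0]
    for t in range(1, len(frames)):
        h_hist = learn_filter(history, [1.0] * len(history), G, lam)
        # Exploration: track ahead with the history-only model.
        p, future = positions[-1], []
        for frame in frames[t:t + delay + 1]:
            p += detect(h_hist, crop(frame, p))
            future.append(dft(crop(frame, p)))
        # Exploitation: relearn from history plus down-weighted future samples.
        weights = [1.0] * len(history) + [future_weight] * len(future)
        h_rect = learn_filter(history + future, weights, G, lam)
        # Commit frame t with the rectified model and extend the history.
        p_t = positions[-1] + detect(h_rect, crop(frames[t], positions[-1]))
        positions.append(p_t)
        history.append(dft(crop(frames[t], p_t)))
    return positions


if __name__ == "__main__":
    base = [0, 1, 3, 7, 2, 0, 5, 1, 0, 0, 4, 6, 1, 0, 2, 8]
    truth = [0, 1, 3, 2, 4, 5]
    frames = [[base[(k - p) % 16] for k in range(16)] for p in truth]
    print([p % 16 for p in delayed_track(frames, p0=0)])  # [0, 1, 3, 2, 4, 5]
```

On this noise-free toy sequence the committed positions simply recover the ground-truth shifts. Down-weighting the explored samples is one hedge against the look-ahead itself drifting; the values of `delay` and `future_weight` here are arbitrary illustration choices, not settings from the paper.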

References

Bertinetto, L., Valmadre, J., Golodetz, S., Miksik, O., Torr, P.H.S.: Staple: Complementary learners for real-time tracking. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2016)

Bertinetto, L., Valmadre, J., Henriques, J.F., Vedaldi, A., Torr, P.H.: Fully-convolutional siamese networks for object tracking pp. 850–865 (2016)

Bhat, G., Johnander, J., Danelljan, M., Khan, F.S., Felsberg, M.: Unveiling the power of deep tracking. In: European Conference on Computer Vision, pp. 493–509 (2018)

Bibi, A., Mueller, M., Ghanem, B.: Target Response Adaptation for Correlation Filter Tracking. Springer International Publishing, New York (2016)

Bolme, D.S., Beveridge, J.R., Draper, B.A., Lui, Y.M.: Visual object tracking using adaptive correlation filters. In: 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, pp. 2544–2550. IEEE (2010)

Ma, C., Huang, J.B., Yang, X., Yang, M.H.: Hierarchical convolutional features for visual tracking. In: 2015 IEEE International Conference on Computer Vision (ICCV) (2015)

Chen, Z., Hong, Z., Tao, D.: An experimental survey on correlation filter-based tracking. arXiv preprint arXiv:1509.05520 (2015)

Choi, J., Jin Chang, H., Fischer, T., Yun, S., Lee, K., Jeong, J., Demiris, Y., Young Choi, J.: Context-aware deep feature compression for high-speed visual tracking pp. 479–488 (2018)

Danelljan, M., Bhat, G., Shahbaz Khan, F., Felsberg, M.: ECO: Efficient convolution operators for tracking. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 6638–6646 (2017)

Danelljan, M., Hager, G., Khan, F.S., Felsberg, M.: Accurate scale estimation for robust visual tracking. In: British Machine Vision Conference, Nottingham, September 1–5, 2014. BMVA Press (2014)

Danelljan, M., Hager, G., Khan, F.S., Felsberg, M.: Learning spatially regularized correlation filters for visual tracking. In: Proceedings of the IEEE International Conference on Computer Vision, pp. 4310–4318 (2015)

Danelljan, M., Hager, G., Khan, F.S., Felsberg, M.: Adaptive decontamination of the training set: A unified formulation for discriminative visual tracking. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 1430–1438 (2016)

Danelljan, M., Hager, G., Khan, F.S., Felsberg, M.: Convolutional features for correlation filter based visual tracking. In: 2015 IEEE International Conference on Computer Vision Workshop (ICCVW) (2015)

Danelljan, M., Hager, G., Khan, F.S., Felsberg, M.: Discriminative scale space tracking. IEEE Trans. Pattern Anal. Mach. Intell. 39(8), 1561–1575 (2016)

Danelljan, M., Khan, F.S., Felsberg, M., Weijer, J.V.D.: Adaptive color attributes for real-time visual tracking. In: 2014 IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2014)

Danelljan, M., Robinson, A., Khan, F.S., Felsberg, M.: Beyond correlation filters: Learning continuous convolution operators for visual tracking. In: European Conference on Computer Vision, pp. 472–488. Springer (2016)

Fan, H., Lin, L., Yang, F., Chu, P., Ling, H.: LaSOT: A high-quality benchmark for large-scale single object tracking (2018)

Fan, H., Ling, H.: Parallel tracking and verifying: A framework for real-time and high accuracy visual tracking. In: Proceedings of the IEEE International Conference on Computer Vision, pp. 5486–5494 (2017)

Fazl-Ersi, E., Nooghabi, M.K.: Revisiting correlation-based filters for low-resolution and long-term visual tracking. Vis. Comput. 35(10), 1447–1459 (2019)

Galoogahi, H.K., Sim, T., Lucey, S.: Multi-channel correlation filters. In: 2013 IEEE International Conference on Computer Vision (ICCV) (2013)

Gladh, S., Danelljan, M., Khan, F.S., Felsberg, M.: Deep motion features for visual tracking. In: International Conference on Pattern Recognition, pp. 1243–1248 (2016)

Henriques, J.F., Caseiro, R., Martins, P., Batista, J.: Exploiting the circulant structure of tracking-by-detection with kernels. In: European Conference on Computer Vision, pp. 702–715. Springer (2012)

Henriques, J.F., Caseiro, R., Martins, P., Batista, J.: High-speed tracking with kernelized correlation filters. IEEE Trans. Pattern Anal. Mach. Intell. 37(3), 583–596 (2014)

Hong, S., You, T., Kwak, S., Han, B.: Online tracking by learning discriminative saliency map with convolutional neural network pp. 597–606 (2015)

Kiani Galoogahi, H., Fagg, A., Lucey, S.: Learning background-aware correlation filters for visual tracking. In: IEEE International Conference on Computer Vision, pp. 1144–1152 (2017)

Li, B., Yan, J., Wu, W., Zhu, Z., Hu, X.: High performance visual tracking with siamese region proposal network. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 8971–8980 (2018)

Li, F., Yao, Y., Li, P., Zhang, D., Zuo, W., Yang, M.H.: Integrating boundary and center correlation filters for visual tracking with aspect ratio variation. In: Proceedings of the IEEE International Conference on Computer Vision, pp. 2001–2009 (2017)

Li, Y., Zhu, J.: A scale adaptive kernel correlation filter tracker with feature integration. In: European Conference on Computer Vision, pp. 254–265. Springer (2014)

Liang, P., Blasch, E., Ling, H.: Encoding color information for visual tracking: algorithms and benchmark. IEEE Trans. Image Process. 24(12), 5630–5644 (2015)

Liu, T., Wang, G., Yang, Q.: Real-time part-based visual tracking via adaptive correlation filters. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 4902–4912 (2015)

Lukežič, A., Zajc, L.Č., Kristan, M.: Deformable parts correlation filters for robust visual tracking. IEEE Trans. Cybern., pp. 1849–1861 (2017)

Lukežič, A., Zajc, L.Č., Vojíř, T., Matas, J., Kristan, M.: FuCoLoT – a fully-correlational long-term tracker. In: Asian Conference on Computer Vision, pp. 595–611. Springer (2018)

Ma, C., Yang, X., Zhang, C., Yang, M.H.: Long-term correlation tracking. In: IEEE Conference on Computer Vision and Pattern Recognition, pp. 5388–5396 (2015)

Mbelwa, J.T., Zhao, Q., Wang, F.: Visual tracking tracker via object proposals and co-trained kernelized correlation filters. Vis. Comput., pp. 1–15 (2019)

Nam, H., Han, B.: Learning multi-domain convolutional neural networks for visual tracking pp. 4293–4302 (2016)

Qi, Y., Zhang, S., Qin, L., Yao, H., Huang, Q., Lim, J., Yang, M.H.: Hedged deep tracking. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 4303–4311 (2016)

Song, Y., Ma, C., Wu, X., Gong, L., Bao, L., Zuo, W., Shen, C., Lau, R.W., Yang, M.H.: VITAL: Visual tracking via adversarial learning pp. 8990–8999 (2018)

Valmadre, J., Bertinetto, L., Henriques, J.F., Vedaldi, A., Torr, P.H.: End-to-end representation learning for correlation filter based tracking pp. 2805–2813 (2017)

Wang, L., Ouyang, W., Wang, X., Lu, H.: Visual tracking with fully convolutional networks. In: 2015 IEEE International Conference on Computer Vision (ICCV) (2015)

Wang, M., Liu, Y., Huang, Z.: Large margin object tracking with circulant feature maps. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 4021–4029 (2017)

Wu, Y., Lim, J., Yang, M.H.: Object tracking benchmark. IEEE Trans. Pattern Anal. Mach. Intell. 37(9), 1834–1848 (2015)

Yang, M., Lin, Y., Huang, D., Kong, L.: Accurate visual tracking via reliable patch. Vis. Comput., pp. 1–14 (2021)

Zhang, J., Ma, S., Sclaroff, S.: MEEM: Robust tracking via multiple experts using entropy minimization. In: European Conference on Computer Vision, pp. 188–203. Springer (2014)

Zhang, T., Xu, C., Yang, M.H.: Multi-task correlation particle filter for robust object tracking. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 4335–4343 (2017)

Zhang, Y., Yang, Y., Zhou, W., Shi, L., Li, D.: Motion-aware correlation filters for online visual tracking. Sensors 18(11), 3937 (2018)

Zhao, D., Xiao, L., Fu, H., Wu, T., Xu, X., Dai, B.: Augmenting cascaded correlation filters with spatial-temporal saliency for visual tracking. Inf. Sci. 470, 78–93 (2019)

Zuo, W., Wu, X., Lin, L., Zhang, L., Yang, M.H.: Learning support correlation filters for visual tracking. IEEE Trans. Pattern Anal. Mach. Intell. 41(5), 1158–1172 (2018)

Acknowledgements

This work was supported by the National Key R&D Program of China under Grant No. 2018YFB1701701, and the National Natural Science Foundation of China under Grant No. U19A2073.


Cite this article

Miao, Q., Xu, C., Li, F. et al. Delayed rectification of discriminative correlation filters for visual tracking. Vis Comput 39, 1237–1250 (2023). https://doi.org/10.1007/s00371-022-02401-9
