Abstract

Infrared and visible image fusion combines the complementary detail captured by multiple sensors to help people better understand a scene. In this paper, a novel infrared and visible image fusion method based on multi-level saliency integration is proposed. First, after quadtree decomposition of the infrared image, the background of each sub-image is reconstructed by means of Bessel interpolation, and the difference saliency is extracted as the difference between the source infrared image and the estimated background. Second, the sparse saliency is calculated from the infrared image by exploiting the sparsity of salient objects and the low rank of the background. Third, the multi-scale saliency is obtained by Laplacian transformation between the visible and infrared images to preserve detailed information. Finally, a fusion strategy based on adaptive weighting coefficients is presented to produce more natural fusion results. Experimental results on 20 pairs of source images demonstrate that the proposed method outperforms other state-of-the-art methods in both subjective visual quality and objective evaluation.
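The first step, difference saliency from a quadtree-based background estimate, can be illustrated with a minimal sketch. It is not the authors' implementation: the function names, the `min_size` and `tol` parameters, and the split criterion (intensity range within a block) are assumptions made for illustration, and the Bessel interpolation is replaced by a much cruder stand-in that fills each leaf block with its minimum intensity. The image is assumed to be square with a power-of-two side, and plain Python lists are used to keep the example dependency-free.

```python
def quadtree_leaves(img, x, y, size, min_size, tol):
    """Return (x, y, size) leaf blocks; x is the column and y the row of the top-left pixel.

    Hypothetical split rule: a block is split into four quadrants while its
    intensity range exceeds `tol` and it is larger than `min_size`.
    """
    values = [img[r][c] for r in range(y, y + size) for c in range(x, x + size)]
    if size <= min_size or max(values) - min(values) <= tol:
        return [(x, y, size)]
    half = size // 2
    leaves = []
    for dy in (0, half):
        for dx in (0, half):
            leaves += quadtree_leaves(img, x + dx, y + dy, half, min_size, tol)
    return leaves


def difference_saliency(img, min_size=2, tol=10):
    """Estimate a background per leaf block and subtract it from the image.

    Stand-in for the interpolation step: each leaf is filled with its minimum
    intensity (an assumption of this sketch, not the method in the abstract).
    """
    n = len(img)
    background = [[0] * n for _ in range(n)]
    for x, y, size in quadtree_leaves(img, 0, 0, n, min_size, tol):
        level = min(img[r][c] for r in range(y, y + size) for c in range(x, x + size))
        for r in range(y, y + size):
            for c in range(x, x + size):
                background[r][c] = level
    # Keep only pixels brighter than the estimated background.
    saliency = [[max(img[r][c] - background[r][c], 0) for c in range(n)]
                for r in range(n)]
    return background, saliency


# Toy infrared frame: flat background of 20 with a hot 2x2 target of 200.
img = [[20] * 8 for _ in range(8)]
for r in (1, 2):
    for c in (1, 2):
        img[r][c] = 200

background, saliency = difference_saliency(img)
```

In this toy frame only the quadrant containing the target is subdivided, and the saliency map is non-zero only at the four target pixels. The block-minimum stand-in would absorb a target that exactly fills a leaf, so the sketch is illustrative only.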

a the source infrared image; b the quadtree structure obtained at the first level; c the quadtree structure obtained at the second level; d the final quadtree structure

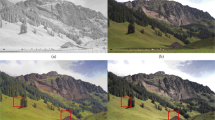

Figure captions (images not reproduced): source image pairs TEST1, TEST2, TEST3, TEST4, TEST5–TEST12 and TEST13–TEST20; source images



Funding

This work was supported in part by the National Natural Science Foundation of China under Grant 61806209, in part by the Natural Science Foundation of Shaanxi Province under Grant 2020JQ-490, and in part by the Aeronautical Science Fund under Grant 201851U8012.

Author information

Contributions

Conceptualization, FG and RL; Methodology, RL and FG; Software, RL and JF; Investigation, DL and YH; Resources, YH; Writing-original draft preparation, RL and FG; Writing-review and editing, XY, JF and DL; Visualization, JF; Supervision, RL and YH; Project administration, XY; Funding acquisition, RL. All authors have read and agreed to the published version of the manuscript.

Ethics declarations

Conflicts of interest

The authors declare no conflict of interest.

About this article

Cite this article

Lu, R., Gao, F., Yang, X. et al. A novel infrared and visible image fusion method based on multi-level saliency integration. Vis Comput 39, 2321–2335 (2023). https://doi.org/10.1007/s00371-022-02438-w

DOI: https://doi.org/10.1007/s00371-022-02438-w