Abstract

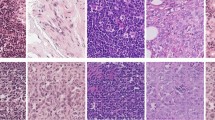

High-quality histopathology images are essential for accurate diagnosis and symptomatic treatment. However, local cross-contamination and missing data are common, caused by factors such as superimposed foreign bodies and improper operations when acquiring and processing digital pathology images. Interpreting such images is time-consuming, laborious, and error-prone, so reconstructing the corrupted regions is necessary to improve diagnostic accuracy. Restoring corrupted images is a challenging task, especially for pathological images. We therefore propose a multi-scale self-attention generative adversarial network (MSSA GAN) to restore colon tissue pathological images. The MSSA GAN uses a self-attention mechanism in the generator to efficiently learn the correlations between corrupted and uncorrupted areas at multiple scales. By jointly optimizing the loss function and learning the semantic features of pathology images, the network guides the generator at these scales to produce restored pathological images with precise details. The results demonstrate that the proposed method achieves pixel-level photorealism for histopathology images: the RMSE, PSNR, and SSIM of the restored images reached 2.094, 41.96 dB, and 0.9979, respectively. Qualitative and quantitative comparisons with other restoration approaches illustrate the superior performance of the proposed algorithm for pathological image restoration.
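To make the reported error metrics concrete, the following is a minimal NumPy sketch of how RMSE and PSNR are commonly computed between a reference image and a restored image. It is an illustration only, not the authors' evaluation code: the function names `rmse` and `psnr`, the 8-bit peak value of 255, and the synthetic test images are all assumptions introduced here. SSIM is omitted because it requires a windowed computation that is better taken from an established library.

```python
import numpy as np


def rmse(reference, restored):
    """Root-mean-square error over all pixels and channels."""
    ref = np.asarray(reference, dtype=np.float64)
    out = np.asarray(restored, dtype=np.float64)
    return float(np.sqrt(np.mean((ref - out) ** 2)))


def psnr(reference, restored, max_value=255.0):
    """Peak signal-to-noise ratio in dB.

    max_value is the assumed peak intensity (255 for 8-bit images).
    """
    err = rmse(reference, restored)
    if err == 0.0:
        return float("inf")  # identical images
    return float(20.0 * np.log10(max_value / err))


if __name__ == "__main__":
    # Synthetic stand-ins for a ground-truth patch and a restored patch.
    rng = np.random.default_rng(0)
    reference = rng.integers(0, 256, size=(64, 64, 3)).astype(np.float64)
    noise = rng.normal(0.0, 2.0, size=reference.shape)
    restored = np.clip(reference + noise, 0.0, 255.0)

    print("RMSE:", rmse(reference, restored))
    print("PSNR (dB):", psnr(reference, restored))
```

Under the 8-bit assumption, an RMSE of 2.094 on a single image corresponds to roughly 41.7 dB, which is in the same range as the reported 41.96 dB. The two figures need not match exactly if each metric is averaged separately over a test set.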

Acknowledgements

This work was supported by the National Natural Science Foundation of China under Grant 11804209, the Natural Science Foundation of Shanxi Province under Grant 201901D211173, the Scientific and Technological Innovation Programs of Higher Education Institutions in Shanxi under Grant 2019L0064, and the Natural Science Foundation of Shanxi Province under Grant 201901D111031.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Liang, M., Zhang, Q., Wang, G. et al. Multi-scale self-attention generative adversarial network for pathology image restoration. Vis Comput 39, 4305–4321 (2023). https://doi.org/10.1007/s00371-022-02592-1

DOI: https://doi.org/10.1007/s00371-022-02592-1