Abstract

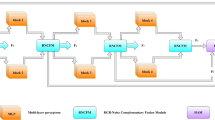

As an advanced image editing technology, image inpainting, particularly inpainting based on deep learning, leaves very weak traces in the tampered image, which raises serious security concerns. In this paper, we propose the global–local feature fusion network (GLFFNet) to locate image regions tampered with by deep-learning-based inpainting. GLFFNet consists of a two-stream encoder and a decoder. The two-stream encoder comprises a spatial self-attention stream (SSAS) and a noise feature extraction stream (NFES). Using a transformer network, the SSAS extracts global features of deep inpainting manipulations. The NFES is built from residual blocks, which learn manipulation features from noise maps produced by filtering the input image. A feature fusion layer fuses the features output by the two encoder streams and feeds them into the decoder, where up-sampling and convolutional operations derive the confidence map for inpainting manipulation. The proposed network is trained with a purpose-designed two-stage loss function. Experimental results show that GLFFNet achieves high localization accuracy for deep inpainting manipulations and effectively resists JPEG compression and additive noise attacks.
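
To make the idea of a noise map and of feature fusion more concrete, the following is a minimal NumPy sketch, not the GLFFNet implementation. It assumes a hypothetical 3×3 high-pass kernel (the abstract does not name the filter used by the NFES) and assumes fusion by channel concatenation (the abstract does not specify the fusion rule). The names `HIGH_PASS`, `noise_map`, and `fuse` are illustrative only.

```python
import numpy as np

# Hypothetical 3x3 high-pass kernel; an assumption, since the abstract
# does not state which filter produces the noise maps.
HIGH_PASS = np.array([[-1, -1, -1],
                      [-1,  8, -1],
                      [-1, -1, -1]], dtype=np.float64) / 8.0


def noise_map(gray: np.ndarray, kernel: np.ndarray = HIGH_PASS) -> np.ndarray:
    """Return a same-size high-pass residual of a 2-D grayscale image."""
    kh, kw = kernel.shape
    height, width = gray.shape
    # Reflect-pad so the output keeps the input's spatial size.
    padded = np.pad(gray.astype(np.float64),
                    ((kh // 2, kh // 2), (kw // 2, kw // 2)),
                    mode="reflect")
    out = np.zeros((height, width), dtype=np.float64)
    # Accumulate weighted, shifted copies of the image (cross-correlation).
    for i in range(kh):
        for j in range(kw):
            out += kernel[i, j] * padded[i:i + height, j:j + width]
    return out


def fuse(global_feat: np.ndarray, noise_feat: np.ndarray) -> np.ndarray:
    """Fuse two (C, H, W) feature maps by channel concatenation.

    Concatenation is an assumed fusion rule used here for illustration.
    """
    return np.concatenate([global_feat, noise_feat], axis=0)
```

Because the kernel's weights sum to zero, smooth image content is suppressed and only local deviations survive, which is the kind of low-level residual a noise stream could learn from. In a full model, the two arguments of `fuse` would be the outputs of the transformer stream and the residual-block stream at the same spatial resolution.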

Funding

This study was supported by the National Natural Science Foundation of China under Grants 61972282 and 61971303, and by the Opening Project of State Key Laboratory of Digital Publishing Technology under Grant Cndplab-2019-Z001. The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

Ethics declarations

Conflicts of interest

The authors declare that they have no competing interests.

About this article

Cite this article

Zhu, X., Lu, J., Ren, H. et al. A transformer–CNN for deep image inpainting forensics. Vis Comput 39, 4721–4735 (2023). https://doi.org/10.1007/s00371-022-02620-0

DOI: https://doi.org/10.1007/s00371-022-02620-0