Abstract

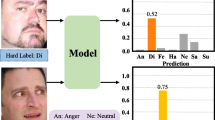

Supervised learning requires large amounts of manually annotated data, which are not readily available for facial attributes. Annotations of emotion images in particular are subjective and inconsistent, so labeled facial expression data remain too scarce to meet the demands of large-scale facial expression recognition (FER) in the deep learning era. In this paper, we propose a new self-supervised pretext task, Weighted Contrastive Learning (WeiCL), which makes full use of extensive non-curated facial data to learn discriminative representations. WeiCL works in two steps: (1) a pre-classification module obtains pseudo labels for the unlabeled pre-training facial data and uses them to build a dedicated batch for weighted contrastive learning, and (2) a novel weighted contrastive objective function reduces intra-class variation and enlarges the distance between different instances by weighting the intermediate samples. Experiments on benchmark FER datasets validate the effectiveness of our method: under a rigorous evaluation protocol, it outperforms current leading methods, reaching 86.96% on RAF-DB and 71.42% on FER2013.
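The two steps described in the abstract can be illustrated with a minimal NumPy sketch. It is not the authors' objective function, which the abstract does not specify. It assumes a supervised-contrastive-style loss in which samples sharing a pseudo label act as positives and each positive pair carries a weight. The function names `assign_pseudo_labels` and `weighted_contrastive_loss`, the `temperature` parameter, and the choice of confidence products as pair weights are all hypothetical.

```python
import numpy as np


def assign_pseudo_labels(logits):
    """Hypothetical pre-classification step: softmax over class logits,
    returning the arg-max class and its probability for each sample."""
    logits = np.asarray(logits, dtype=np.float64)
    shifted = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    probs = np.exp(shifted)
    probs /= probs.sum(axis=1, keepdims=True)
    return probs.argmax(axis=1), probs.max(axis=1)


def weighted_contrastive_loss(embeddings, pseudo_labels,
                              pair_weights=None, temperature=0.1):
    """Assumed form of a weighted contrastive loss (not the paper's).

    Samples with the same pseudo label are positives for each other;
    pair_weights[i, j] scales the contribution of the pair (i, j).
    """
    z = np.asarray(embeddings, dtype=np.float64)
    z = z / np.linalg.norm(z, axis=1, keepdims=True)  # cosine similarity
    labels = np.asarray(pseudo_labels)
    n = z.shape[0]

    sim = z @ z.T / temperature
    self_mask = np.eye(n, dtype=bool)

    # Log-softmax over all *other* samples in the batch.
    sim_no_self = np.where(self_mask, -np.inf, sim)
    row_max = sim_no_self.max(axis=1, keepdims=True)
    log_denom = row_max + np.log(
        np.exp(sim_no_self - row_max).sum(axis=1, keepdims=True))
    log_prob = sim - log_denom

    # Positives: same pseudo label, excluding the anchor itself.
    pos_mask = (labels[:, None] == labels[None, :]) & ~self_mask
    if pair_weights is None:
        weights = pos_mask.astype(np.float64)
    else:
        weights = np.asarray(pair_weights, dtype=np.float64) * pos_mask

    weight_sum = weights.sum(axis=1)
    has_pos = weight_sum > 0  # anchors with at least one weighted positive
    if not has_pos.any():
        return 0.0
    per_anchor = -(weights * log_prob).sum(axis=1)[has_pos] / weight_sum[has_pos]
    return float(per_anchor.mean())


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    logits = rng.normal(size=(8, 7))     # stand-in classifier outputs, 7 classes
    features = rng.normal(size=(8, 16))  # stand-in encoder embeddings
    labels, conf = assign_pseudo_labels(logits)
    # Assumption: weight each pair by the product of pseudo-label confidences.
    loss = weighted_contrastive_loss(features, labels, np.outer(conf, conf))
    print(f"loss = {loss:.4f}")
```

In this sketch, pulling same-label samples together corresponds to reducing intra-class variation, while the softmax denominator pushes all other instances apart. How the paper actually weights the intermediate samples would need to be taken from the full text.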

Acknowledgements

This work is supported in part by the Key Projects of the National Natural Science Foundation of China under Grant U1836220, in part by the National Natural Science Foundation of China under Grants 61672267 and 62176106, in part by the Jiangsu Province Key Research and Development Plan under Grant BE2020036, and in part by the Postgraduate Research & Practice Innovation Program of Jiangsu Province under Grant KYCX19_1616. The code is available from the corresponding author by email on request, subject to evaluation.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Xi, Y., Mao, Q. & Zhou, L. Weighted contrastive learning using pseudo labels for facial expression recognition. Vis Comput 39, 5001–5012 (2023). https://doi.org/10.1007/s00371-022-02642-8

DOI: https://doi.org/10.1007/s00371-022-02642-8