Abstract

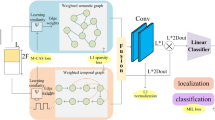

Action recognition in real-world scenarios is challenging because it requires both localizing and classifying actions in untrimmed video. Because untrimmed video in real scenarios lacks fine-grained annotation, existing supervised learning methods have limited effectiveness and robustness. Moreover, state-of-the-art methods treat each action proposal individually and ignore the semantic relationships between proposals that arise from the continuity of video. To address these issues, we propose a weakly supervised approach that explores proposal relations using Graph Convolutional Networks (GCNs). Specifically, the method constructs a graph with action similarity edges and temporal similarity edges to represent the contextual semantic relationships between proposals, and it uses the similarity of action features to weakly supervise the spatial semantic relationship between labeled and unlabeled samples, enabling effective recognition of actions in the video. We validate the proposed method on public benchmarks for untrimmed video (THUMOS14 and ActivityNet). The experimental results show that the proposed method achieves state-of-the-art results with better robustness and generalization.
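The abstract does not give the exact edge definitions, so the following is only a minimal sketch of the general idea of a proposal graph processed by a graph convolution. It assumes that action similarity edges come from thresholded cosine similarity between proposal features and that temporal similarity edges come from thresholded temporal IoU between proposal segments. The function names, thresholds, and toy data are hypothetical, and the layer uses the standard symmetric-normalized GCN propagation rule rather than the paper's specific architecture.

```python
import numpy as np


def temporal_iou(a, b):
    """Temporal IoU between two (start, end) segments."""
    inter = max(0.0, min(a[1], b[1]) - max(a[0], b[0]))
    union = (a[1] - a[0]) + (b[1] - b[0]) - inter
    return inter / union if union > 0 else 0.0


def build_proposal_graph(features, segments, sim_thresh=0.8, tiou_thresh=0.3):
    """Adjacency over proposals (thresholds are illustrative assumptions).

    An edge links two proposals if their features are similar
    (action similarity edge) or their segments overlap in time
    (temporal similarity edge).
    """
    n = len(segments)
    norms = np.linalg.norm(features, axis=1, keepdims=True)
    unit = features / np.maximum(norms, 1e-12)
    cos = unit @ unit.T  # pairwise cosine similarity
    adj = np.zeros((n, n))
    for i in range(n):
        for j in range(n):
            if i == j:
                continue
            similar = cos[i, j] >= sim_thresh
            overlapping = temporal_iou(segments[i], segments[j]) >= tiou_thresh
            if similar or overlapping:
                adj[i, j] = 1.0
    return adj


def gcn_layer(adj, features, weight):
    """One graph convolution: ReLU(D^-1/2 (A + I) D^-1/2 X W)."""
    a_hat = adj + np.eye(adj.shape[0])  # add self-loops
    d_inv_sqrt = 1.0 / np.sqrt(a_hat.sum(axis=1))
    norm_adj = a_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]
    return np.maximum(norm_adj @ features @ weight, 0.0)


# Toy example: three proposals with 2-D features (made-up values).
features = np.array([[1.0, 0.0], [1.0, 0.1], [0.0, 1.0]])
segments = [(0.0, 10.0), (50.0, 60.0), (4.0, 12.0)]
adj = build_proposal_graph(features, segments)
weight = np.random.default_rng(0).normal(size=(2, 4))
out = gcn_layer(adj, features, weight)
```

In this toy setup, proposals 0 and 1 are linked because their features are nearly parallel, while proposals 0 and 2 are linked because their segments overlap in time. After one layer, each proposal's representation mixes in information from its neighbours, which is the property that lets related proposals inform one another.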

Acknowledgements

This work was supported by the Fundamental Research Funds for the Central Universities under grants B200202205 and 2018B47114, the Key Research and Development Program of Jiangsu under grants BK20192004 and BE2018004-04, the Guangdong Forestry Science and Technology Innovation Project under grant 2020KJCX005, the International Cooperation and Exchanges of Changzhou under grant CZ20200035, and the State Key Laboratory of Integrated Management of Pest Insects and Rodents under grant IPM1914.

Ethics declarations

Conflict of interest

The authors declared that they had no conflicts of interest with respect to their authorship or the publication of this article.

About this article

Cite this article

Yao, X., Zhang, J., Chen, R. et al. Weakly supervised graph learning for action recognition in untrimmed video. Vis Comput 39, 5469–5483 (2023). https://doi.org/10.1007/s00371-022-02673-1

DOI: https://doi.org/10.1007/s00371-022-02673-1