Abstract

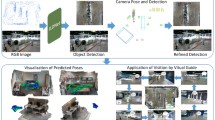

Semantic segmentation is important for the accuracy of target detection. Semantic labels are difficult to obtain for real driving scenes, but they are easy to obtain from virtual datasets. This paper therefore presents an adaptive joint training strategy based on real and virtual datasets: (1) multi-modal fusion networks are built from image, depth and semantic information; (2) a joint training strategy over virtual and real datasets, with data sharing for the semantic information, is used together with an adaptive optimizer. The monocular detection network trained with this strategy is substantially more effective than the conventional network.
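The two ingredients in (1) and (2) can be illustrated with a minimal Python sketch. It assumes early fusion by per-pixel channel concatenation of image, depth and one-hot semantic maps, batches that mix real and virtual samples at a fixed, hypothetical `virtual_fraction`, and a semantic loss averaged only over samples that carry a semantic label (here, the virtual ones). The function names `fuse_channels`, `mixed_batch` and `joint_loss` and the sample identifiers are hypothetical; the paper's actual fusion architecture, data-sharing scheme and adaptive optimizer are not reproduced.

```python
import random
from typing import List, Optional, Sequence, Tuple

Pixel = List[float]


def fuse_channels(rgb: Sequence[Sequence[Sequence[float]]],
                  depth: Sequence[Sequence[float]],
                  semantic_onehot: Sequence[Sequence[Sequence[float]]]) -> List[List[Pixel]]:
    """Early fusion (an assumption): concatenate RGB (3), depth (1) and
    one-hot semantic (C) channels at every pixel -> H x W x (3 + 1 + C)."""
    height, width = len(depth), len(depth[0])
    return [[list(rgb[y][x]) + [depth[y][x]] + list(semantic_onehot[y][x])
             for x in range(width)]
            for y in range(height)]


def mixed_batch(real_ids: Sequence[str], virtual_ids: Sequence[str],
                batch_size: int, virtual_fraction: float,
                rng: random.Random) -> List[Tuple[str, str]]:
    """Draw one batch containing both real and virtual samples, each tagged
    with its source so the loss can treat them differently."""
    n_virtual = round(batch_size * virtual_fraction)
    n_real = batch_size - n_virtual
    batch = [("virtual", s) for s in rng.sample(list(virtual_ids), n_virtual)]
    batch += [("real", s) for s in rng.sample(list(real_ids), n_real)]
    rng.shuffle(batch)  # interleave sources within the batch
    return batch


def joint_loss(det_losses: Sequence[float],
               sem_losses: Sequence[Optional[float]],
               sem_weight: float = 1.0) -> float:
    """Average the detection loss over all samples, but the semantic loss
    only over samples that have a label (None = no label, e.g. real data)."""
    det = sum(det_losses) / len(det_losses)
    labelled = [loss for loss in sem_losses if loss is not None]
    sem = sum(labelled) / len(labelled) if labelled else 0.0
    return det + sem_weight * sem
```

For example, `joint_loss([1.0, 2.0, 3.0], [0.5, None, 1.5])` returns 3.0: the detection term averages all three samples (2.0), while the semantic term averages only the two labelled ones (1.0). Masking unlabelled samples, rather than counting them as zero loss, keeps real images without semantic labels from diluting the semantic term.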


Data availability

The data that support the findings of this study are available from the corresponding author upon reasonable request.


Funding

This work was supported by the Fundamental Research Funds for the Central Universities (PA2021KCPY0041), the Innovation Project of New Energy Vehicle and Intelligent Connected Vehicle of Anhui Province, and the University Synergy Innovation Program of Anhui Province (GXXT-2020-076). The authors thank the anonymous reviewers for their instructive comments.


Ethics declarations

Conflict of interest

The authors certify that there is no conflict of interest with any individual/organization for the present work.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Cheng, T., Sun, L., Zhang, J. et al. Based on real and virtual datasets adaptive joint training in multi-modal networks with applications in monocular 3D target detection. Vis Comput 39, 6367–6377 (2023). https://doi.org/10.1007/s00371-022-02734-5


DOI: https://doi.org/10.1007/s00371-022-02734-5