Abstract

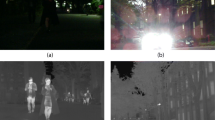

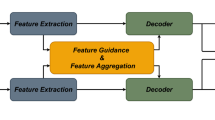

Recently, combined red-green-blue and thermal (RGB-T) data have attracted considerable interest for semantic segmentation because they provide robust imaging under the complex lighting conditions of urban roads. Most existing RGB-T semantic segmentation methods adopt an encoder-decoder structure, in which repeated upsampling during decoding causes a loss of semantic information. Moreover, simple cross-modality fusion neither fully mines the complementary information in the two modalities nor removes noise from the extracted features. To address these problems, we developed a dual-decoding hierarchical fusion network (DHFNet) that extracts RGB and thermal information for RGB-T semantic segmentation. DHFNet uses a novel two-layer decoder that implements boundary refinement and boundary-guided foreground/background enhancement modules. These modules process features from different levels to provide global guidance and local refinement of the segmentation prediction. In addition, an adaptive attention-filtering fusion module filters and extracts complementary information from the RGB and thermal modalities. Further, we introduce a graph convolutional network and an atrous spatial pyramid pooling module to obtain multiscale features and deepen the extracted semantic information. Experimental results on two benchmark datasets showed that DHFNet performed well relative to state-of-the-art semantic segmentation methods across different evaluation metrics.
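The abstract does not describe how the adaptive attention-filtering fusion module is built. Purely as an illustration of the general idea of attention-weighted fusion of two modalities, the sketch below pools each channel of the RGB and thermal feature maps and turns the two pooled values into per-channel weights with a two-way softmax (similar in spirit to squeeze-and-excitation gating). The function names `global_avg_pool` and `attention_fusion`, the nested-list feature layout, and the parameter-free softmax gating are all assumptions; this is not DHFNet's actual module, which would contain learned layers.

```python
import math
from typing import List

# Hypothetical layout: a feature map is channels x height x width nested lists.
FeatureMap = List[List[List[float]]]


def global_avg_pool(feat: FeatureMap) -> List[float]:
    """Average each channel over all spatial positions (the 'squeeze' step)."""
    return [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in feat]


def attention_fusion(rgb: FeatureMap, thermal: FeatureMap) -> FeatureMap:
    """Hypothetical per-channel soft selection between RGB and thermal features.

    For each channel, the pooled responses of the two modalities become weights
    via a two-way softmax, so the modality with the stronger global response
    dominates that channel. A trained module would add learnable layers here.
    """
    pr, pt = global_avg_pool(rgb), global_avg_pool(thermal)
    fused: FeatureMap = []
    for c, (ch_r, ch_t) in enumerate(zip(rgb, thermal)):
        # Subtract the max before exponentiating for numerical stability.
        m = max(pr[c], pt[c])
        er, et = math.exp(pr[c] - m), math.exp(pt[c] - m)
        wr, wt = er / (er + et), et / (er + et)  # weights sum to 1 per channel
        fused.append([[wr * a + wt * b for a, b in zip(row_r, row_t)]
                      for row_r, row_t in zip(ch_r, ch_t)])
    return fused
```

When both modalities carry identical features, the weights are 0.5 each and the input is returned unchanged; when one modality responds more strongly in a channel, that modality receives the larger weight for the whole channel.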
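The abstract names a graph convolutional network but not its configuration. For orientation, the sketch below implements one standard graph-convolution layer, ReLU(A_norm · H · W) with the symmetric normalization A_norm = D^-1/2 (A + I) D^-1/2, where A is the adjacency matrix, I adds self-loops, and D is the degree matrix of A + I. How DHFNet derives graph nodes and edges from its feature maps is not stated, so the adjacency, node features `h`, and weights `w` here are arbitrary toy inputs, and the helper names are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain triple-loop matrix product (requires len(a[0]) == len(b))."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def normalized_adjacency(adj: Matrix) -> Matrix:
    """Return D^-1/2 (A + I) D^-1/2, the symmetric normalization used by GCNs."""
    n = len(adj)
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a_hat]  # self-loops guarantee deg >= 1
    return [[a_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)]
            for i in range(n)]


def gcn_layer(adj: Matrix, h: Matrix, w: Matrix) -> Matrix:
    """One graph convolution: each node mixes its own and its neighbors'
    features, applies the linear map W, then a ReLU."""
    out = matmul(matmul(normalized_adjacency(adj), h), w)
    return [[max(0.0, v) for v in row] for row in out]
```

For two connected nodes with features 1 and 3, each node's output becomes their normalized average (2.0), which shows how graph convolution propagates context between related positions; an isolated node keeps only its own (rectified) features.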
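Atrous spatial pyramid pooling (ASPP) runs several dilated (atrous) convolutions with different rates in parallel, so a small kernel covers progressively larger receptive fields, and adds an image-level pooling branch for global context; the branch outputs are then concatenated. The single-channel, pure-Python sketch below illustrates only that mechanism. The rates (1, 2, 4), the single kernel shared by all branches, and the function names are simplifying assumptions; DeepLab-style ASPP learns separate weights per branch, includes a 1x1 branch, and fuses the concatenated result with a 1x1 convolution, and DHFNet's exact settings are not given in the abstract.

```python
from typing import List, Sequence

Grid = List[List[float]]


def atrous_conv3x3(x: Grid, kernel: Grid, rate: int) -> Grid:
    """3x3 dilated convolution (cross-correlation) with implicit zero padding,
    so the output has the same height and width as the input."""
    h, w = len(x), len(x[0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for ki in range(3):
                for kj in range(3):
                    # Kernel taps are spaced `rate` pixels apart around (i, j).
                    yi, xj = i + (ki - 1) * rate, j + (kj - 1) * rate
                    if 0 <= yi < h and 0 <= xj < w:  # out-of-bounds taps read zero
                        acc += kernel[ki][kj] * x[yi][xj]
            out[i][j] = acc
    return out


def aspp(x: Grid, kernel: Grid, rates: Sequence[int] = (1, 2, 4)) -> List[Grid]:
    """Parallel atrous branches plus an image-level pooling branch, stacked as
    channels (the concatenation step of ASPP)."""
    branches = [atrous_conv3x3(x, kernel, r) for r in rates]
    mean = sum(map(sum, x)) / (len(x) * len(x[0]))
    branches.append([[mean] * len(x[0]) for _ in x])  # global context, broadcast back
    return branches
```

With a single active pixel at the center of a 5x5 input and an all-ones kernel, the rate-1 branch responds in the 3x3 neighborhood of that pixel, the rate-2 branch responds on a sparser grid two pixels apart, and the pooling branch spreads the global mean everywhere, which is how ASPP gathers multiscale context from one feature map.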

Data Availability

The data that support the findings of this study are openly available at https://www.mi.t.u-tokyo.ac.jp/static/projects/mil_multispectral/ and https://github.com/ShreyasSkandanS/pst900_thermal_rgb.

Funding

This work was supported by the National Natural Science Foundation of China (61502429, 61672337, 61972357), the Zhejiang Provincial Natural Science Foundation of China (LY18F020012, LY17F020011), and the Zhejiang Key R&D Program (2019C03135).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Cai, Y., Zhou, W., Zhang, L. et al. DHFNet: dual-decoding hierarchical fusion network for RGB-thermal semantic segmentation. Vis Comput 40, 169–179 (2024). https://doi.org/10.1007/s00371-023-02773-6

DOI: https://doi.org/10.1007/s00371-023-02773-6