Abstract

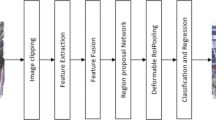

Frequent highway traffic jams have made real-time vehicle congestion detection an active research topic in transportation. Congestion detection generally relies on vehicle counting algorithms based on object detection, which perform poorly in scenes with large variations in vehicle scale, dense traffic, background clutter, and severe occlusion. This paper proposes a vehicle object counting network based on a feature pyramid split attention mechanism for accurate vehicle counting and high-quality vehicle density map generation in highly congested scenes. The network extracts rich contextual features using blocks at different scales, then obtains multi-scale feature maps along the channel dimension using convolution kernels of different sizes. A channel attention module is applied to each scale separately, so that the network attends to features at different scales and produces a channel-wise attention vector that reduces the mis-estimation of background information. Experiments on the vehicle datasets TRANCOS, CARPK, and HS-Vehicle show that the proposed method outperforms most existing counting methods based on detection or density estimation. The relative improvement in mean absolute error (MAE) is 90.5% on the CARPK dataset compared with Fast R-CNN and 73.0% on the HS-Vehicle dataset compared with CSRNet. The method is also extended to counting other objects, such as pedestrians in the ShanghaiTech dataset, where it effectively reduces the misrecognition rate and achieves higher counting performance than state-of-the-art methods.
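As a rough illustration of how the reported metrics relate to density maps, the sketch below sums a density map to obtain a count, computes MAE over a set of images, and derives a relative improvement over a baseline. The abstract does not define "relative improvement"; the formula used here, (baseline MAE − proposed MAE) / baseline MAE, is an assumption, and all function names and numbers are hypothetical rather than taken from the paper.

```python
def count_from_density(density_map):
    """Estimated object count: the sum of all cells of a 2-D density map."""
    return sum(sum(row) for row in density_map)


def mean_absolute_error(predicted, actual):
    """MAE between predicted and ground-truth counts over a set of images."""
    if not predicted or len(predicted) != len(actual):
        raise ValueError("need two non-empty sequences of equal length")
    return sum(abs(p - a) for p, a in zip(predicted, actual)) / len(predicted)


def relative_improvement(baseline_mae, proposed_mae):
    """Percentage reduction in MAE relative to a baseline (assumed definition)."""
    return 100.0 * (baseline_mae - proposed_mae) / baseline_mae


# Hypothetical toy data: two 2x2 density maps and their true vehicle counts.
density_maps = [
    [[0.5, 0.5], [1.0, 0.0]],
    [[0.25, 0.75], [1.5, 0.5]],
]
true_counts = [2, 4]

predicted = [count_from_density(d) for d in density_maps]
proposed_mae = mean_absolute_error(predicted, true_counts)
baseline_mae = 2.0  # hypothetical MAE of a baseline method on the same images

print(predicted)                                         # [2.0, 3.0]
print(proposed_mae)                                      # 0.5
print(relative_improvement(baseline_mae, proposed_mae))  # 75.0
```

Under this definition, a 90.5% relative improvement would mean the proposed method's MAE is roughly one tenth of the baseline's on the same dataset.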

Data availability

The labeled dataset used to support the findings of this study is available from the corresponding author upon request.

References

Knaian, A.N.: A wireless sensor network for smart roadbeds and intelligent transportation systems. Diss. MIT Media Lab., 2000

Zhao, J.D., Xu, F.F., Guo, Y.J., Gao, Y.: Traffic congestion detection based on pattern matching and correlation analysis. Adv. Transp. Stud. 40, 27–40 (2016)

Horne, D., Findley, D.J., Coble, D.G., Rickabaugh, T.J., Martin, J.B.: Evaluation of radar vehicle detection at four quadrant gate rail crossings. J. Rail Transp. Plan. Manag. 6(2), 149–162 (2016)

Manana, M., Tu, C.L., Owolawi, P.A.: A survey on vehicle detection based on convolution neural networks. In: Proceedings of the IEEE international conference on computer and communications (ICCC), pp. 1751–1755 (2017)

Deng, P., Wang, K., Han, X.: Real-time object detection based on YOLO-v2 for tiny vehicle object. SN Comput. Sci. 3(4), 329 (2022)

Benjdira, B., Khursheed, T., Koubaa, A., Ammar, A., Ouni, K.: Car detection using unmanned aerial vehicles: comparison between Faster R-CNN and YOLOv3. In: Proceedings of the 2019 1st international conference on unmanned vehicle systems-Oman (UVS), pp. 1–6 (2019)

Ren, S.Q., He, K.M., Girshick, R., Sun, J.: Faster R-CNN: towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 39(6), 1137–1149 (2017)

Redmon, J., Farhadi, A.: YOLO9000: better, faster, stronger. In: Proceedings of the IEEE conference on computer vision and pattern recognition (CVPR), pp. 6517–6525 (2017)

Redmon, J., Farhadi, A.: YOLOv3: an incremental improvement. http://arxiv.org/abs/1804.02767 (2018)

Chollet, F.: Xception: deep learning with depthwise separable convolutions. In: Proceedings of the 2017 IEEE conference on computer vision and pattern recognition (CVPR), pp. 1800–1807 (2017)

Krizhevsky, A., Sutskever, I., Hinton, G.: ImageNet classification with deep convolutional neural networks. Commun. ACM. 60(6), 84–90 (2017)

Li, B., Zhang, Y., Xu, Y., B.: CCST: crowd counting with swin transformer. Vis. Comput. (2022). https://doi.org/10.1007/s00371-022-02485-3

Khan, S.D., Basalamah, S.: Scale and density invariant head detection deep model for crowd counting in pedestrian crowds. Vis. Comput. 37, 2127–2137 (2021)

Li, Y., Zhang, X., Chen, D.: CSRNet: dilated convolutional neural networks for understanding the highly congested scenes. In: Proceedings of the IEEE conference on computer vision and pattern recognition (CVPR), pp. 1091–1100 (2018)

Bai, S., He, Z., Qiao, Y., Hu, H., Yan, J.: Adaptive dilated network with self-correction supervision for counting. In: Proceedings of the IEEE conference on computer vision and pattern recognition (CVPR), pp. 4593–4602 (2020)

Felzenszwalb, P.F., Girshick, R.B., McAllester, D., Ramanan, D.: Object detection with discriminatively trained part-based models. IEEE Trans. Pattern Anal. Mach. Intell. 32(9), 1627–1645 (2010)

Redmon, J., Divvala, S., Girshick, R., Farhadi, A.: You only look once: unified, real-time object detection. In: Proceedings of the IEEE conference on computer vision and pattern recognition (CVPR), pp. 779–788 (2016)

Chen, W., Huang, H., Peng, S., Zhou, C., Zhang, C.: YOLO-face: a real-time face detector. Vis. Comput. 37(4), 805–813 (2021). https://doi.org/10.1007/s00371-020-01831-7

Liu, W., Anguelov, D., Erhan, D., Szegedy, C., Reed, S., Fu, C.Y., Berg, A.C.: SSD: Single Shot MultiBox detector. In: Proceedings of the European conference on computer vision (ECCV), pp. 21–37. Springer, Cham (2016)

Girshick, R., Donahue, J., Darrell, T., Malik, J.: Rich feature hierarchies for accurate object detection and semantic segmentation. In: Proceedings of the 2014 IEEE conference on computer vision and pattern recognition (CVPR), pp. 580–587 (2014)

Lin, T.Y., Dollár, P., Girshick, R., He, K., Hariharan, B., Belongie, S.: Feature pyramid networks for object detection. In: Proceedings of the IEEE conference on computer vision and pattern recognition (CVPR), pp. 2117–2125 (2017)

Harikrishnan, P.M., Thomas, A., Gopi, V.P., Palanisamy, P., Wahid, K.A.: Inception single shot multi-box detector with affinity propagation clustering and their application in multi-class vehicle counting. Appl. Intell. 51, 4714–4729 (2021)

Guo, C., Fan, B., Zhang, Q., Xiang, S., Pan, C.: AugFPN: improving multi-scale feature learning for object detection. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (CVPR), pp. 12595–12604 (2020)

Deng, C., Wang, M., Liu, L., Liu, Y., Jiang, Y.: Extended feature pyramid network for small object detection. IEEE Trans. Multimed. 24, 1968–1979 (2021)

Hu, J., Liu, R., Chen, Z., Wang, D., Zhang, Y., Xie, B.: Octave convolution-based vehicle detection using frame-difference as network input. Vis. Comput. (2022). https://doi.org/10.1007/s00371-022-02425-1

Chandrasekar, K.S., Geetha, P.: Multiple objects tracking by a highly decisive three-frame differencing-combined-background subtraction method with GMPFM-GMPHD filters and VGG16-LSTM classifier. J. Vis. Commun. Image Represent. 72, 102905 (2020)

Song, H., Liang, H., Li, H., Dai, Z., Yun, X.: Vision-based vehicle detection and counting system using deep learning in highway scenes. Eur. Transp. Res. Rev. 11, 51 (2019)

Rublee, E., Rabaud, V., Konolige, K., Bradski, G.R.: ORB: an efficient alternative to SIFT or SURF. In: Proceedings of the IEEE international conference on computer vision (ICCV), pp. 2564–2571 (2011)

Avşar, E., Avşar, Y.Ö.: Moving vehicle detection and tracking at roundabouts using deep learning with trajectory union. Multim. Tools Appl. 81, 6653–6680 (2022)

Bochkovskiy, A., Wang, C.Y., Liao, H.: YOLOv4: Optimal Speed and Accuracy of Object Detection. http://arxiv.org/abs/2004.10934 (2020)

Wojke, N., Bewley, A., Paulus, D.: Simple online and realtime tracking with a deep association metric. In: Proceedings of the IEEE international conference on image processing (ICIP), pp. 3645–3649 (2017)

Lempitsky, V., Zisserman, A.: Learning to count objects in images. In: Advances in neural information processing systems 23: 24th annual conference on neural information processing systems 2010 (NIPS), pp. 1324–1332 (2010)

Idrees, H., Saleemi, I., Seibert, C., Shah, M.: Multi-source multiscale counting in extremely dense crowd images. In: Proceedings of the IEEE conference on computer vision and pattern recognition (CVPR), pp. 2547–2554 (2013)

Zhang, C., Li, H., Wang, X., Yang, X.: Cross-scene crowd counting via deep convolutional neural networks. In: Proceedings of the IEEE conference on computer vision and pattern recognition (CVPR), pp. 833–841 (2015)

Idrees, H., Tayyab, M., Athrey, K., Zhang, D., Al-Maadeed, S., Rajpoot, N., Shah, M.: Composition loss for counting, density map estimation and localization in dense crowds. In: Proceedings of the European conference on computer vision (ECCV), pp. 532–546 (2018)

Zhang, Y., Zhou, D., Chen, S., Gao, S., Ma, Y.: Single-image crowd counting via multi-column convolutional neural network. In: Proceedings of the IEEE conference on computer vision and pattern recognition (CVPR), pp. 589–597 (2016)

Sindagi, V.A., Patel, V.M.: Generating high-quality crowd density maps using contextual pyramid CNNs. In: Proceedings of the IEEE international conference on computer vision (ICCV), pp. 1861–1870 (2017)

Zhang, S., Wu, G., Costeira, J.P., Moura, J.M.F.: FCN-rLSTM: deep spatio-temporal neural networks for vehicle counting in city cameras. In: Proceedings of the IEEE international conference on computer vision (ICCV), pp. 3687–3696 (2017)

Liu, W., Salzmann, M., Fua, P.: Context-aware crowd counting. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (CVPR), pp. 5099–5108 (2019)

Chen, X., Bin, Y., Sang, N., Gao, C.: Scale pyramid network for crowd counting. In: Proceedings of the IEEE winter conference on applications of computer vision (WACV), pp. 1941–1950 (2019)

Li, H., Zhang, S., Kong, W.: Bilateral counting network for single-image object counting. Vis. Comput. 36, 1693–1704 (2020). https://doi.org/10.1007/s00371-019-01769-5

Lin, H., Ma, Z., Ji, R., Wang, Y., Hong, X.: Boosting crowd counting via multifaceted attention. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (CVPR), pp. 19628–19637 (2022)

Liu, W., Salzmann, M., Fua, P.: Counting people by estimating people flows. IEEE Trans. Pattern Anal. Mach. Intell. 44(11), 8151–8166 (2021)

Simonyan, K., Zisserman, A.: Very deep convolutional networks for large-scale image recognition. http://arxiv.org/abs/1409.1556 (2014)

Sam, D.B., Surya, S., Babu, R.V.: Switching convolutional neural network for crowd counting. In: Proceedings of the IEEE conference on computer vision and pattern recognition (CVPR), pp. 4031–4039 (2017)

Li, Z., Lu, S., Dong, Y., Guo, J.: MSFFA: a multi-scale feature fusion and attention mechanism network for crowd counting. Vis. Comput. (2022). https://doi.org/10.1007/s00371-021-02383-0

Guerrero-Gómez-Olmedo, R., Torre-Jiménez, B., López-Sastre, R., Maldonado-Bascón, S., Oñoro-Rubio, D.: Extremely overlapping vehicle counting. In: Proceedings of the 7th Iberian conference on pattern recognition and image analysis (IbPRIA), pp. 423–431 (2015)

Hsieh, M.R., Lin, Y.L., Hsu, W.H.: Drone-based object counting by spatially regularized regional proposal network. In: Proceedings of the IEEE international conference on computer vision (ICCV), pp. 4165–4173 (2017)

Onoro-Rubio, D., López-Sastre, R.J.: Towards perspective-free object counting with deep learning. In: Proceedings of the European conference on computer vision (ECCV), pp. 615–629 (2016)

Fiaschi, L., Koethe, U., Nair, R., Hamprecht, F.A.: Learning to count with regression forest and structured labels. In: Proceedings of the 21st international conference on pattern recognition (ICPR), pp. 2685–2688 (2012)

Mundhenk, T.N., Konjevod, G., Sakla, W.A., Boakye, K.: A large contextual dataset for classification, detection and counting of cars with deep learning. In: Proceedings of the European conference on computer vision (ECCV), pp. 785–800 (2016)

Cao, X., Wang, Z., Zhao, Y., Su, F.: Scale aggregation network for accurate and efficient crowd counting. In: Proceedings of the European conference on computer vision (ECCV), pp. 757–773 (2018)

Wang, Y., Hu, S., Wang, G., Chen, C., Pan, Z.: Multi-scale dilated convolution of convolutional neural network for crowd counting. Multim. Tools Appl. 79(1–2), 1057–1073 (2020)

Hu, C., Cheng, K., Xie, Y., Li, T.: Arbitrary perspective crowd counting via local to global algorithm. Multim. Tools Appl. 79(21–22), 15059–15071 (2020)

Ding, X., He, F., Lin, Z., Wang, Y., Guo, H., Huang, Y.: Crowd density estimation using fusion of multi-layer features. IEEE Trans. Intell. Transp. Syst. 22(8), 4776–4787 (2021)

Li, P., Zhang, M., Wan, J., Jiang, M.: Multi-scale guided attention network for crowd counting. Sci. Program. 2021, 5596488:1-5596488:13 (2021)

Yao, H.Y., Kang, H., Wan, W., Li, H.: Deep spatial regression model for image crowd counting. http://arxiv.org/abs/1710.09757 (2017)

Liu, L., Wang, H., Li, G., Ouyang, W., Lin, L.: Crowd counting using deep recurrent spatial-aware network. http://arxiv.org/abs/1807.00601 (2018)

Luo, H., Sang, J., Wu, W., Xiang, H., Xiang, Z., Zhang, Q., Wu, Z.: A high-density crowd counting method based on convolutional feature fusion. Appl. Sci. 8(12), 2367 (2018)

Ranjan, V., Le, H., Hoai, M.: Iterative crowd counting. In: Proceedings of the European conference on computer vision (ECCV), pp. 278–293 (2018)

Acknowledgements

This work was supported in part by the Science and Technology Project of the Transportation Department of Jiangxi Province, China (Nos. 2022X0040, 2021X0011).

Ethics declarations

Conflict of interest

The author declares no conflict of interest in relation to the content of this article.

About this article

Cite this article

Liu, M., Wang, Y., Yi, H. et al. Vehicle object counting network based on feature pyramid split attention mechanism. Vis Comput 40, 663–680 (2024). https://doi.org/10.1007/s00371-023-02808-y

DOI: https://doi.org/10.1007/s00371-023-02808-y