Abstract

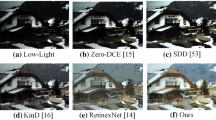

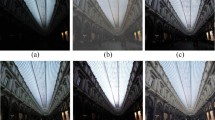

Most existing low-light image enhancement methods focus only on raising overall image brightness and neglect image details during enhancement, which leads to problems such as detail loss and over-smoothing. In addition, the noise present in the low-light image is often retained or even amplified after enhancement. This paper proposes a single-stage generative adversarial network, dubbed FBGAN, to address these issues. The model introduces two components: a multi-scale feature aggregation module based on an error feedback mechanism, and a denoising module that integrates a boosting strategy guided by an attention mechanism. The former is designed to preserve image details during enhancement, while the latter enhances low-light images and denoises them simultaneously. Together, these components allow the model to restore images with precise details, suppressed noise, distinct contrast, and natural color. Extensive qualitative and quantitative experiments are conducted to demonstrate the superiority of the model.
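The abstract does not describe the internals of the feature aggregation module. As a generic illustration of what an error feedback step looks like in the classical back-projection sense, the following minimal sketch projects a high-resolution estimate down, measures the residual against the observed low-resolution map, and feeds that residual back up. The function names, the average-pool and nearest-neighbour operators, and the toy data are all assumptions made for illustration; this is not the FBGAN implementation.

```python
import numpy as np

def downsample(x, s=2):
    """Average-pool a 2D array by factor s (height and width divisible by s)."""
    h, w = x.shape
    return x.reshape(h // s, s, w // s, s).mean(axis=(1, 3))

def upsample(x, s=2):
    """Nearest-neighbour upsampling of a 2D array by factor s."""
    return np.repeat(np.repeat(x, s, axis=0), s, axis=1)

def error_feedback_step(low, high_estimate, s=2):
    """One back-projection update (hypothetical helper, not from the paper):
    compare the down-projected estimate with the observed low-resolution map
    and add the up-projected residual back to the estimate."""
    residual = low - downsample(high_estimate, s)
    return high_estimate + upsample(residual, s)

rng = np.random.default_rng(0)
low = rng.random((4, 4))
# A deliberately imperfect initial estimate: upsampled map plus a perturbation.
initial = upsample(low) + 0.1 * rng.standard_normal((8, 8))
refined = error_feedback_step(low, initial)

# Consistency with the low-resolution observation before and after feedback.
err_before = np.abs(downsample(initial) - low).max()
err_after = np.abs(downsample(refined) - low).max()
```

With these particular operators, down-projecting an up-projected map returns the map unchanged, so a single feedback step makes the refined estimate consistent with the low-resolution observation up to floating-point error. Learned projection layers do not have this exact property, which is one reason such steps are typically stacked.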

Funding

This work was supported in part by the National Natural Science Foundation of China under Grant 62072169 and Natural Science Foundation of Hunan Province under Grant 2021JJ30138.

Ethics declarations

Conflict of interest

The authors have no competing interests to declare that are relevant to the content of this article.

About this article

Cite this article

Jiang, B., Wang, R., Dai, J. et al. FBGAN: multi-scale feature aggregation combined with boosting strategy for low-light image enhancement. Vis Comput 40, 1745–1756 (2024). https://doi.org/10.1007/s00371-023-02883-1

DOI: https://doi.org/10.1007/s00371-023-02883-1