Abstract

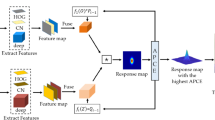

Owing to their fast tracking speed, simple deployment, and straightforward principle, correlation filter-based tracking methods remain a significant research topic. To make full use of different features while balancing tracking speed and performance, the adaptive cascaded and parallel feature fusion-based tracker (ACPF) is proposed, which estimates the position, rotation, and scale of the target. Compared with other correlation filter-based trackers, ACPF fuses deep and handcrafted features in both a Log-Polar branch and a Cartesian branch, and adaptively updates its templates according to the weights of the response maps. In the Log-Polar branch, adaptive linear weights (ALW), obtained by solving constrained optimization problems, fuse the feature response maps to improve the estimation of object scale and rotation. In the Cartesian branch, multiple ALW modules are cascaded to adaptively merge the response maps of shallow and deep features, making better use of both and increasing tracking accuracy. The Cartesian and Log-Polar branches run in parallel to compute the final results simultaneously. Additionally, the learning rates are adjusted automatically according to the ALW weights, which yields an adaptive template update. Extensive experiments on benchmarks show that the proposed tracker achieves comparable results, especially under deformation, rotation, and scale variation.
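The abstract does not spell out the ALW objective, so the following Python sketch rests on stated assumptions. Each ALW module is modelled here as a least-squares fit of a weighted sum of response maps to an ideal Gaussian label, with the weights constrained to be non-negative and to sum to one, and solved by projected gradient descent. The cascade fuses maps from shallow to deep, two at a time, and the learning-rate rule (scaling a base rate by k·w_k) is likewise a hypothetical stand-in for the paper's adaptive update. All function names are illustrative, the response maps are synthetic Gaussians rather than real correlation filter outputs, and feature extraction and the Log-Polar transform are omitted.

```python
import math
from typing import List, Tuple

Map = List[float]  # a response map, flattened row-major


def gaussian_map(h: int, w: int, cy: float, cx: float, sigma: float) -> Map:
    """2D Gaussian response peaked at (cy, cx); used here as a synthetic map."""
    return [math.exp(-((y - cy) ** 2 + (x - cx) ** 2) / (2.0 * sigma ** 2))
            for y in range(h) for x in range(w)]


def project_to_simplex(v: List[float]) -> List[float]:
    """Euclidean projection onto {w : w_i >= 0, sum_i w_i = 1} (sort-based)."""
    u = sorted(v, reverse=True)
    css, theta = 0.0, 0.0
    for i, ui in enumerate(u, start=1):
        css += ui
        t = (css - 1.0) / i
        if ui - t > 0:
            theta = t  # the largest index satisfying this test sets the threshold
    return [max(vi - theta, 0.0) for vi in v]


def fuse(maps: List[Map], w: List[float]) -> Map:
    """Linear fusion: element-wise sum_k w_k * R_k."""
    return [sum(wk * m[i] for wk, m in zip(w, maps)) for i in range(len(maps[0]))]


def alw_weights(maps: List[Map], target: Map, iters: int = 200) -> List[float]:
    """Hypothetical ALW module: min_w ||sum_k w_k R_k - Y||^2 / n with w on the
    simplex, solved by projected gradient descent."""
    k, n = len(maps), len(target)
    # Safe step 1/L: the gradient's Lipschitz constant is at most (2/n) * sum_k ||R_k||^2.
    step = n / (2.0 * max(sum(v * v for m in maps for v in m), 1e-12))
    w = [1.0 / k] * k
    for _ in range(iters):
        resid = [f - y for f, y in zip(fuse(maps, w), target)]
        grad = [2.0 * sum(r * v for r, v in zip(resid, m)) / n for m in maps]
        w = project_to_simplex([wk - step * g for wk, g in zip(w, grad)])
    return w


def cascaded_alw(maps: List[Map], target: Map) -> Tuple[Map, List[List[float]]]:
    """Cascade two-input ALW modules from the shallowest map to the deepest."""
    fused, stage_weights = maps[0], []
    for deeper in maps[1:]:
        w = alw_weights([fused, deeper], target)
        fused = fuse([fused, deeper], w)
        stage_weights.append(w)
    return fused, stage_weights


def adaptive_learning_rates(w: List[float], base_eta: float) -> List[float]:
    """Hypothetical rule: eta_k = base_eta * k * w_k, capped at 1 (uniform w -> base_eta)."""
    k = len(w)
    return [min(1.0, base_eta * k * wk) for wk in w]


def update_template(old: List[float], new: List[float], eta: float) -> List[float]:
    """Linear-interpolation template update common in correlation filter trackers."""
    return [(1.0 - eta) * o + eta * x for o, x in zip(old, new)]


if __name__ == "__main__":
    target = gaussian_map(9, 9, 4, 4, 1.5)    # ideal label Y, peaked at the centre
    drifted = gaussian_map(9, 9, 1, 1, 1.5)   # synthetic response peaked off-target
    w = alw_weights([target, drifted], target)
    print([round(x, 3) for x in w])           # weights close to [1.0, 0.0]
    print(adaptive_learning_rates(w, base_eta=0.02))
```

Constraining the weights to the probability simplex keeps the fused map on the same scale as its inputs, so an unreliable response map can be driven to a weight of zero but never subtracted; in this sketch the same weights then raise the learning rate of the more reliable feature's template and lower that of the weaker one.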

Data availability

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Acknowledgements

This research is partially supported by the Natural Science Foundation of Hebei Province (F2022201013) and the Startup Foundation for Advanced Talents of Hebei University (No. 521100221003).

Ethics declarations

Conflict of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This document presents the results of the research project funded by the Natural Science Foundation of Hebei Province (F2022201013) and the Startup Foundation for Advanced Talents of Hebei University (No. 521100221003).

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Wang, J., Li, S., Li, K. et al. Adaptive cascaded and parallel feature fusion for visual object tracking. Vis Comput 40, 2119–2138 (2024). https://doi.org/10.1007/s00371-023-02908-9

DOI: https://doi.org/10.1007/s00371-023-02908-9