Abstract

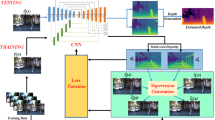

Display technologies have evolved considerably over the years, and practical HDR capture, processing, and display solutions are critical to advancing 3D technologies further. Depth estimation from multi-exposure stereo image sequences is an essential task in developing cost-effective 3D HDR video content. In this paper, we develop a deep architecture for multi-exposure stereo depth estimation. The proposed architecture has two novel components. First, we revamp the stereo matching technique used in traditional stereo depth estimation: the stereo depth estimation component of our architecture uses a mono-to-stereo transfer learning approach. This formulation avoids constructing a cost volume; instead, feature fusion is performed by a dual-encoder, single-decoder CNN whose two encoders have different weights, and EfficientNet-based blocks are used to learn the disparity. Second, we combine the disparity maps obtained from stereo images at different exposure levels using a robust disparity feature fusion approach, in which the maps are merged using weight maps calculated for different quality measures. The resulting disparity map is more robust and retains the best features, preserving depth discontinuities. The proposed CNN can be trained either on standard dynamic range stereo data or on multi-exposure low dynamic range stereo sequences. The proposed model outperforms state-of-the-art monocular and stereo depth estimation methods, both quantitatively and qualitatively, on the challenging Scene Flow dataset and on differently exposed Middlebury stereo datasets. The architecture also performs well on complex natural scenes, demonstrating its usefulness for diverse 3D HDR applications.
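
To make the second component concrete, the following is a minimal sketch of merging per-exposure disparity maps with normalized per-pixel weight maps. It assumes (this is not stated in the abstract) that the quality measures are the contrast and well-exposedness terms of the Mertens et al. exposure-fusion method, computed on a grayscale version of each exposure with intensities in [0, 1] and σ = 0.2; saturation is omitted and a single-scale blend replaces multi-resolution pyramid blending. The function names are hypothetical and do not describe the authors' implementation.

```python
import math


def well_exposedness(gray, sigma=0.2):
    """Gaussian weight centred on mid-grey (assumed intensities in [0, 1])."""
    return [[math.exp(-((v - 0.5) ** 2) / (2 * sigma ** 2)) for v in row] for row in gray]


def contrast(gray):
    """Absolute 4-neighbour Laplacian response, replicating edge pixels at borders."""
    h, w = len(gray), len(gray[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            up = gray[max(y - 1, 0)][x]
            down = gray[min(y + 1, h - 1)][x]
            left = gray[y][max(x - 1, 0)]
            right = gray[y][min(x + 1, w - 1)]
            out[y][x] = abs(up + down + left + right - 4 * gray[y][x])
    return out


def fuse_disparities(disparities, grays, eps=1e-12):
    """Hypothetical weight-map fusion of K disparity maps from K exposures.

    Weight per exposure = (contrast + eps) * well-exposedness (an assumed
    Mertens-style choice); weights are normalized per pixel, so each fused
    value is a convex combination of the input disparities.
    """
    h, w = len(disparities[0]), len(disparities[0][0])
    weights = []
    for g in grays:
        c = contrast(g)
        e = well_exposedness(g)
        weights.append([[(c[y][x] + eps) * e[y][x] for x in range(w)] for y in range(h)])
    fused = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total = sum(wk[y][x] for wk in weights)  # > 0 because every weight is > 0
            fused[y][x] = sum(wk[y][x] * d[y][x] for wk, d in zip(weights, disparities)) / total
    return fused


# Usage: a well-exposed view (grey 0.5) dominates a saturated one (grey 1.0).
d_mid = [[10.0, 10.0], [10.0, 10.0]]
d_sat = [[20.0, 20.0], [20.0, 20.0]]
g_mid = [[0.5, 0.5], [0.5, 0.5]]
g_sat = [[1.0, 1.0], [1.0, 1.0]]
print(fuse_disparities([d_mid, d_sat], [g_mid, g_sat]))
```

Because the weights are normalized per pixel, disparities from poorly exposed regions are down-weighted rather than discarded; a Laplacian-pyramid blend, as in exposure fusion, would additionally suppress seams where the dominant exposure changes.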

Data Availability

All data generated or analyzed during this study are included in this article. A supplementary information file is also provided [25]. We encourage readers to consult the supplementary results, which compare the proposed approach with state-of-the-art monocular and stereo depth estimation methods and illustrate its effectiveness.

References

Akhavan, T., Kaufmann, H.: Backward compatible HDR stereo matching: a hybrid tone-mapping-based framework. EURASIP JIVP 2015, 1–12 (2015)

Akhavan, T., Yoo, H., Gelautz, M.: A framework for HDR stereo matching using multi-exposed images. In: HDRi2013, pp. 1–4 (2013)

Alhashim, I., Wonka, P.: High quality monocular depth estimation via transfer learning. arXiv:1812.11941 (2018)

Anil, R., Sharma, M., Choudhary, R.: SDE-DualENet: a novel dual efficient convolutional neural network for robust stereo depth estimation. In: 2021 International Conference on Visual Communications and Image Processing (VCIP), pp. 1–5 (2021)

Burt, P., Adelson, E.: The Laplacian pyramid as a compact image code. IEEE Trans. Commun. 31(4), 532–540 (1983)

Cantrell, K.J., Miller, C.D., Morato, C.W.: Practical depth estimation with image segmentation and serial U-Nets. In: VEHITS, vol. I, pp. 406–414. INSTICC, SciTePress (2020)

Chang, J.-R., Chen, Y.-S.: Pyramid stereo matching network. In: IEEE CVPR, pp. 5410–5418 (2018)

Chari, P., Vadathya, A.K., Mitra, K.: Optimal HDR and depth from dual cameras. arXiv:2003.05907 (2020)

Choudhary, R., Sharma, M., Uma, T.V., Anil, R.: MEStereo-Du2CNN: a novel dual channel CNN for learning robust depth estimates from multi-exposure stereo images for HDR 3D applications. arXiv:2206.10375 (2022)

Deng, J., Dong, W., Socher, R., Li, L.-J., Li, K., Fei-Fei, L.: ImageNet: a large-scale hierarchical image database. In: 2009 IEEE Conference on Computer Vision and Pattern Recognition, pp. 248–255. IEEE (2009)

Diebel, J., Thrun, S.: An application of Markov random fields to range sensing. Adv. Neural Inf. Process. Syst. 18 (2005)

Duggal, S., Wang, S., Ma, W.-C., Hu, R., Urtasun, R.: DeepPruner: learning efficient stereo matching via differentiable PatchMatch. In: IEEE ICCV (2019)

Eigen, D., Puhrsch, C., Fergus, R.: Depth map prediction from a single image using a multi-scale deep network. In: Advances in Neural Information Processing Systems, vol. 27. Curran Associates, Inc. (2014)

Eilertsen, G., Kronander, J., Denes, G., Mantiuk, R., Unger, J.: HDR image reconstruction from a single exposure using deep CNNs. ACM Trans. Graph. 36(6), 1–5 (2017)

Farooq Bhat, S., Alhashim, I., Wonka, P.: AdaBins: depth estimation using adaptive bins. In: 2021 IEEE/CVF CVPR, pp. 4008–4017 (2021)

Ferstl, D., Ruther, M., Bischof, H.: Variational depth superresolution using example-based edge representations. In: Proceedings of the IEEE International Conference on Computer Vision, pp. 513–521 (2015)

Garcia, F., Aouada, D., Mirbach, B., Ottersten, B.: Real-time distance-dependent mapping for a hybrid ToF multi-camera rig. IEEE J. Sel. Top. Signal Process. 6(5), 425–436 (2012)

Hao, Z., Li, Y., You, S., Lu, F.: Detail preserving depth estimation from a single image using attention guided networks. In: 2018 International Conference on 3D Vision (3DV), pp. 304–313 (2018)

Hasinoff, S.W., Durand, F., Freeman, W.T.: Noise-optimal capture for high dynamic range photography. In: IEEE CVPR, pp. 553–560 (2010)

Hirschmuller, H.: Stereo processing by semiglobal matching and mutual information. IEEE Trans. Pattern Anal. Mach. Intell. 30(2), 328–341 (2008)

Hirschmuller, H., Scharstein, D.: Evaluation of cost functions for stereo matching. In: IEEE CVPR, pp. 1–8 (2007). https://vision.middlebury.edu/stereo/data/scenes2006/

Hu, J., Ozay, M., Zhang, Y., Okatani, T.: Revisiting single image depth estimation: toward higher resolution maps with accurate object boundaries. In: IEEE WACV (2019)

Hui, T.-W., Loy, C.C., Tang, X.: Depth map super-resolution by deep multi-scale guidance. In: European Conference on Computer Vision, pp. 353–369. Springer (2016)

Im, S., Jeon, H.-G., Kweon, I.S.: Robust depth estimation using auto-exposure bracketing. IEEE Trans. Image Process. 28(5), 2451–2464 (2019)

Supplementary information file. Available: tinyurl.com/2p95b2dz

Jeong, Y., Park, J., Cho, D., Hwang, Y., Choi, S.B., Kweon, I.S.: Lightweight depth completion network with local similarity-preserving knowledge distillation. Sensors 22(19), 7388 (2022)

Kalantari, N.K., Ramamoorthi, R.: Deep high dynamic range imaging of dynamic scenes. ACM Trans. Graph. 36(4), 144 (2017)

Kendall, A., Martirosyan, H., Dasgupta, S., Henry, P., Kennedy, R., Bachrach, A., Bry, A.: End-to-end learning of geometry and context for deep stereo regression. In: 2017 IEEE International Conference on Computer Vision (ICCV), pp. 66–75 (2017)

Kingma, D.P., Ba, J.: Adam: a method for stochastic optimization. arXiv:1412.6980 (2014)

Kopf, J., Cohen, M., Lischinski, D., Uyttendaele, M.: Joint bilateral upsampling. ACM Trans. Graph. 26(3), 96–es (2007)

Kwon, H., Tai, Y.-W., Lin, S.: Data-driven depth map refinement via multi-scale sparse representation. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 159–167 (2015)

Laina, I., Rupprecht, C., Belagiannis, V., Tombari, F., Navab, N.: Deeper depth prediction with fully convolutional residual networks. In: 3DV, pp. 239–248 (2016)

Li, Y., Luo, F., Li, W., Zheng, S., Wu, H.-H., Xiao, C.: Self-supervised monocular depth estimation based on image texture detail enhancement. Vis. Comput. 37(9–11), 2567–2580 (2021)

Li, Z., Liu, X., Drenkow, N., Ding, A., Creighton, F.X., Taylor, R.H., Unberath, M.: Revisiting stereo depth estimation from a sequence-to-sequence perspective with transformers. arXiv:2011.02910 (2020)

Liang, Z., Feng, Y., Guo, Y., Liu, H., Chen, W., Qiao, L., Zhou, L., Zhang, J.: Learning for disparity estimation through feature constancy. In: 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 2811–2820 (2018)

Lin, G., Milan, A., Shen, C., Reid, I.: RefineNet: multi-path refinement networks for high-resolution semantic segmentation. In: IEEE CVPR, pp. 5168–5177 (2017)

Lin, H.-Y., Kao, C.-C.: Stereo matching techniques for high dynamic range image pairs. In: Image and Video Technology, pp. 605–616 (2016)

Lin, T.-Y., Dollár, P., Girshick, R., He, K., Hariharan, B., Belongie, S.: Feature pyramid networks for object detection. In: IEEE CVPR, pp. 936–944 (2017)

Liu, Y.-L., Lai, W.-S., Chen, Y.-S., Kao, Y.-L., Yang, M.-H., Chuang, Y.-Y., Huang, J.-B.: Single-image HDR reconstruction by learning to reverse the camera pipeline. In: 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 1648–1657 (2020)

Malik, J., Perona, P.: Preattentive texture discrimination with early vision mechanisms. J. Opt. Soc. Am. A 7(5), 923–932 (1990)

Mayer, N., Ilg, E., Häusser, P., Fischer, P., Cremers, D., Dosovitskiy, A., Brox, T.: A large dataset to train convolutional networks for disparity, optical flow, and scene flow estimation. In: 2016 IEEE CVPR, pp. 4040–4048 (2016). https://lmb.informatik.uni-freiburg.de/resources/datasets/SceneFlowDatasets.en.html

Mertens, T., Kautz, J., Van Reeth, F.: Exposure fusion. In: 15th Pacific Conference on Computer Graphics and Applications (PG’07), pp. 382–390 (2007)

Min, D., Lu, J., Do, M.N.: Depth video enhancement based on weighted mode filtering. IEEE Trans. Image Process. 21(3), 1176–1190 (2011)

Mozerov, M.G., van de Weijer, J.: Accurate stereo matching by two-step energy minimization. IEEE Trans. Image Process. 24(3), 1153–1163 (2015)

Nayana, A., Johnson, A.K.: High dynamic range imaging: a review. Int. J. Image Process. 9(4), 198 (2015)

Ning, S., Xu, H., Song, L., Xie, R., Zhang, W.: Learning an inverse tone mapping network with a generative adversarial regularizer. In: 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 1383–1387 (2018)

Ohta, Y., Kanade, T.: Stereo by intra- and inter-scanline search using dynamic programming. IEEE Trans. Pattern Anal. Mach. Intell. PAMI 7(2), 139–154 (1985)

Ranftl, R., Lasinger, K., Hafner, D., Schindler, K., Koltun, V.: Towards robust monocular depth estimation: mixing datasets for zero-shot cross-dataset transfer. IEEE TPAMI 1 (2020)

Riegler, G., Ferstl, D., Rüther, M., Bischof, H.: A deep primal-dual network for guided depth super-resolution. arXiv:1607.08569 (2016)

Izadi, S., Kim, D., Hilliges, O., Molyneaux, D., Newcombe, R., Kohli, P., Shotton, J., Hodges, S., Freeman, D., Davison, A., et al.: KinectFusion: real-time 3D reconstruction and interaction using a moving depth camera. In: Proceedings of the 24th Annual ACM Symposium on User Interface Software and Technology, pp. 559–568 (2011)

Scharstein, D., Szeliski, R., Zabih, R.: A taxonomy and evaluation of dense two-frame stereo correspondence algorithms. In: Proceedings IEEE Workshop on Stereo and Multi-baseline Vision (SMBV 2001), pp. 131–140 (2001)

Scharstein, D., Hirschmüller, H., Kitajima, Y., Krathwohl, G., Nešić, N., Wang, X., Westling, P.: High-resolution stereo datasets with subpixel-accurate ground truth. In: Jiang, X., Hornegger, J., Koch, R. (eds.) Pattern Recognition, pp. 31–42 (2014). https://vision.middlebury.edu/stereo/data/scenes2014

Scharstein, D., Pal, C.: Learning conditional random fields for stereo. In: IEEE CVPR, pp. 1–8 (2007). https://vision.middlebury.edu/stereo/data/scenes2005/

Schuon, S., Theobalt, C., Davis, J., Thrun, S.: LidarBoost: depth superresolution for ToF 3D shape scanning. In: 2009 IEEE Conference on Computer Vision and Pattern Recognition, pp. 343–350. IEEE (2009)

Sharma, M., Sharma, A., Tushar, K.R., Panneer, A.: A novel 3D-UNet deep learning framework based on high-dimensional bilateral grid for edge consistent single image depth estimation. In: IC3D, pp. 1–8 (2020)

Tan, M., Le, Q.V.: EfficientNet: rethinking model scaling for convolutional neural networks. arXiv:1905.11946 (2019)

Wadaskar, A., Sharma, M., Lal, R.: A rich stereoscopic 3D high dynamic range image & video database of natural scenes. In: IC3D, pp. 1–8 (2019). https://ieeexplore.ieee.org/document/8975903

Wang, L., Yoon, K.-J.: Deep learning for HDR imaging: state-of-the-art and future trends. IEEE Trans. Pattern Anal. Mach. Intell. 1 (2021)

Watson, J., Firman, M., Brostow, G.J., Turmukhambetov, D.: Self-supervised monocular depth hints. In: IEEE ICCV (2019)

Xian, K., Shen, C., Cao, Z., Hao, L., Xiao, Y., Li, R., Luo, Z.: Monocular relative depth perception with web stereo data supervision. In: IEEE CVPR, pp. 311–320 (2018)

Xu, D., Ricci, E., Ouyang, W., Wang, X., Sebe, N.: Multi-scale continuous CRFs as sequential deep networks for monocular depth estimation. In: 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 161–169 (2017)

Xu, D., Wang, W., Tang, H., Liu, H., Sebe, N., Ricci, E.: Structured attention guided convolutional neural fields for monocular depth estimation. In: 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 3917–3925 (2018)

Yan, J., Zhao, H., Bu, P., Jin, Y.: Channel-wise attention-based network for self-supervised monocular depth estimation. In: 2021 International Conference on 3D Vision (3DV), pp. 464–473 (2021)

Yan, Q., Zhang, L., Liu, Yu., Zhu, Yu., Sun, J., Shi, Q., Zhang, Y.: Deep HDR imaging via a non-local network. IEEE Trans. Image Process. 29, 4308–4322 (2020)

Yang, G., Manela, J., Happold, M., Ramanan, D.: Hierarchical deep stereo matching on high-resolution images. In: IEEE CVPR, pp. 5510–5519 (2019)

Yang, J., Wright, J., Huang, T.S., Ma, Y.: Image super-resolution via sparse representation. IEEE Trans. Image Process. 19(11), 2861–2873 (2010)

Yang, J., Ye, X., Li, K., Hou, C., Wang, Y.: Color-guided depth recovery from RGB-D data using an adaptive autoregressive model. IEEE Trans. Image Process. 23(8), 3443–3458 (2014)

Zhao, T., Pan, S., Gao, W., Sheng, C., Sun, Y., Wei, J.: Attention UNet++ for lightweight depth estimation from sparse depth samples and a single RGB image. Vis. Comput. 38(5), 1619–1630 (2022)

Ethics declarations

Conflict of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Choudhary, R., Sharma, M., Uma, T.V. et al. MEStereo-Du2CNN: a dual-channel CNN for learning robust depth estimates from multi-exposure stereo images for HDR 3D applications. Vis Comput 40, 2219–2233 (2024). https://doi.org/10.1007/s00371-023-02912-z

DOI: https://doi.org/10.1007/s00371-023-02912-z